In 1977, audiences were wowed by the special effects of the first Star Wars film, which included a hologram of Princess Leia making a distress call to Obi-Wan Kenobi after her ship had come under attack by the Empire. Now, the idea of real-time, dynamic holograms depicting scenes occurring in different locations is almost a reality, thanks to a breakthrough at the University of Arizona and Nitto Denko Technical Corporation.

Current interest in 3D display technology is higher than ever, spurred by demonstrations of 3D TV and the release of films produced in this format, such as *Avatar*. The action appears to leap out of the screen because two slightly different perspectives, one for each eye, combine to generate a 3D image. But to see these images, viewers have to wear specialized glasses with two different lenses that transmit light polarized in different directions.

Holography works differently, producing many perspectives that let the viewer see the “object” from multiple angles. In this approach, diffraction reproduces both the amplitude and the phase of the light, so the viewer perceives the light as if it had been scattered by the real object. In practice, this is done with a screen made from a material such as a silver halide film or a photopolymer, which presents the viewer with a slightly different perspective depending on the observation angle.
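The principle can be written out in the textbook idealization of holography, assuming a linear recording medium. Here $O$ denotes the complex object wave and $R$ the reference wave; these symbols are introduced for illustration and are not taken from the Nature paper. Recording stores the intensity of the interference pattern, and re-illuminating that pattern with $R$ regenerates a term proportional to $O$ itself, amplitude and phase included:

$$
I = |O + R|^2 = |O|^2 + |R|^2 + O R^* + O^* R
$$

$$
R\,I = \left(|O|^2 + |R|^2\right) R + |R|^2\, O + R^2 O^*
$$

The $|R|^2 O$ term is the reconstructed object wave, while the other terms are the undiffracted beam and a conjugate (twin) image. In a photorefractive polymer the pattern is stored as a modulation of the refractive index rather than of absorption, but the same interference term carries the information.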

A new hope

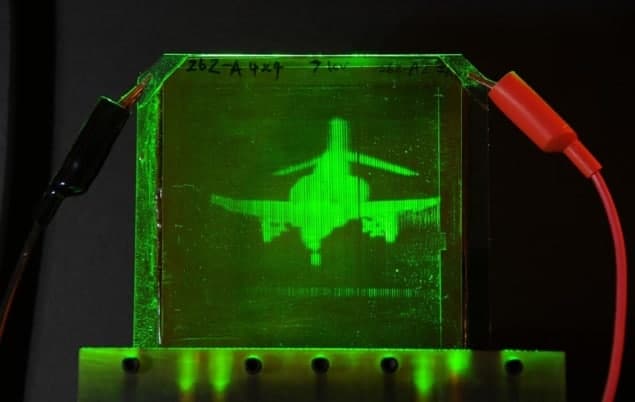

Progress towards more dynamic holograms, with the ultimate goal of real-time reproduction, took a major step forward two years ago when a team led by Nasser Peyghambarian created a monochromatic display that could produce a new image every four minutes. With this latest work, the researchers have taken a dramatic leap by unveiling a 17-inch display that can reproduce an object in colour every two seconds.

The system works by photographing an object with 16 cameras positioned at a range of angles. A computer processes all this information into “hogel data”, which is transferred to a second computer via an Ethernet link. At this second site, three holograms are written into the polymer at different angles. Illuminating the polymer with incoherent light from red, green and blue LEDs then produces colour images.

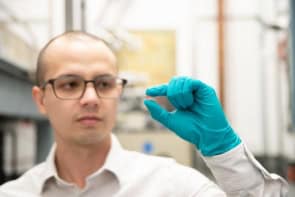

The key to the breakthrough is the material from which the screen is fabricated: a photorefractive polymer. Switching to this polymer has slashed the time taken for a laser to “write” a holographic pixel, known as a hogel, from a second to just six nanoseconds. “[The latest polymer] can also be erased with the same beams used to write the image, so a separate erasing set-up is not required,” explains lead author Pierre-Alexandre Blanche from the University of Arizona.

Towards telepresence

Peyghambarian believes that his team’s technology could aid medical operations. “The cameras would be sitting around where the surgery is done, so that different doctors from around the world could participate, and see things just as if they were there,” he says.

To commercialize the system, the refresh rate must rise to 30 frames per second, and the display must be larger, offer a better colour palette and have a higher resolution. “If you want a true, real-time telepresence you need to go to at least 6–8 feet by 6–8 feet, so that the human person can be demonstrated as they are,” says Peyghambarian.
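To give a sense of how far there is still to go, the short sketch below turns the quoted figures into refresh rates. It assumes that “a new image every N seconds” can be treated as a simple frame period; the article does not say how that time is split between computation, data transfer and writing, so this is a rough comparison only.

```python
# Rough refresh-rate arithmetic using the figures quoted in the article.
# Assumption: "a new image every N seconds" is treated as a frame period.


def frames_per_second(period_s: float) -> float:
    """Convert a frame period in seconds into a refresh rate in frames per second."""
    return 1.0 / period_s


def speedup(old_period_s: float, new_period_s: float) -> float:
    """How many times shorter the new frame period is than the old one."""
    return old_period_s / new_period_s


earlier_period = 4 * 60.0  # earlier monochromatic display: one image every four minutes
current_period = 2.0       # 17-inch colour display: one image every two seconds
target_fps = 30.0          # refresh rate cited as necessary for commercialization

current_fps = frames_per_second(current_period)
gain_so_far = speedup(earlier_period, current_period)
gain_needed = target_fps / current_fps

print(f"current rate: {current_fps:.1f} frames per second")
print(f"improvement over earlier display: {gain_so_far:.0f}x")
print(f"further improvement needed for {target_fps:.0f} fps: {gain_needed:.0f}x")
```

On these assumptions, the new display refreshes at 0.5 frames per second, 120 times faster than its predecessor, and would need to become roughly 60 times faster again to reach 30 frames per second.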

The ultimate goal is “telepresence”, in which people could converse with one another through 3D replicas. Getting there will require improvements in both resolution and speed.

The team details its work in Nature.