Computing has quickly evolved to become the third “pillar” of science. But to reap its true rewards, researchers need code that is flexible and can easily be adapted to meet new needs, as Benjamin Skuse finds out

Theory and experiment. They are the two pillars of science that for centuries have underpinned our understanding of the world around us. We make measurements and observations, which we then link to theories that describe, explain and predict natural phenomena. The constant interplay of theory and experiment, which allows theories to be confirmed, refined and sometimes even overturned, lies at the heart of the traditional scientific method.

Take gravity. At the start of the 20th century, Newton’s law of universal gravitation had stood the test of time for over 200 years. But it could not explain the precession of Mercury’s perihelion, which differed slightly from what Newtonian gravity predicted. Various ideas were proposed to explain this blot on Newton’s otherwise spotless copybook, but all were rejected because they conflicted with other observations. It took Einstein’s general theory of relativity – which revolutionized how we perceive time, space, matter and gravity – to finally explain the tiny disagreement between Newton’s predictions and the observations.

Even now, a century later, Einstein’s theory continues to be poked and prodded for signs of weakness. The difference today is that these tests are no longer based solely on experiments or observations. They often rely on computer simulations too.

New views of the world

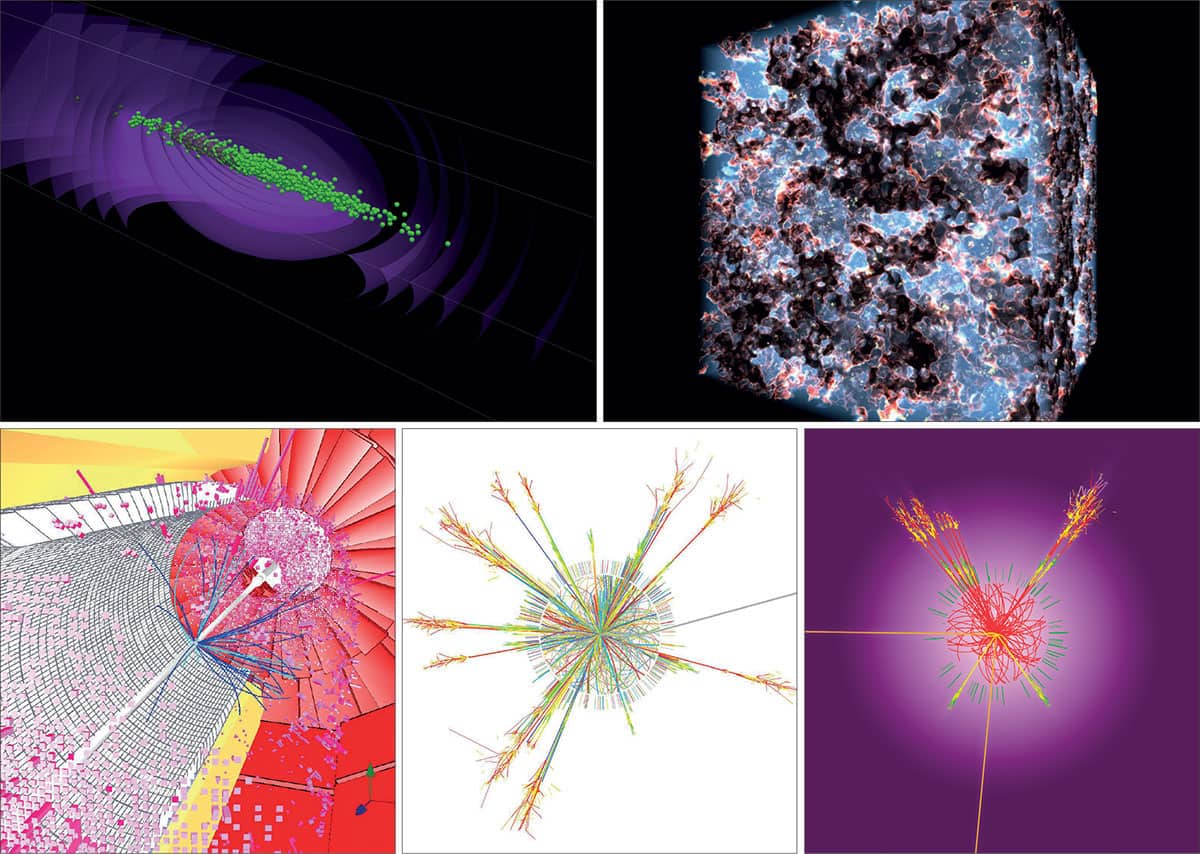

For more than half a century, computational science has expanded scientists’ toolkit far beyond what we could hope to observe with our limited vision and short lifespans. In the case of gravity, astrophysicists can now build entire simulated universes, each based on a different modification of general relativity. Powerful computers and clever codes let researchers alter the initial physical conditions in a model, which can then be run automatically from soon after the Big Bang to today and beyond.

“Computation fills in a gap between theory and experiment,” says David Ham, a computational scientist at Imperial College London in the UK. “A computation tells you what the consequences of your theory are, which facilitates experimentation and observation work because you can tell what you are supposed to look for to judge whether your theory is valid.”

But computation is not just an extra tool. It is a new way of doing science, irrevocably changing how scientists learn, experiment and theorize. In her 2009 book Simulation and its Discontents, Sherry Turkle, a sociologist of science at the Massachusetts Institute of Technology in the US, outlined some of the anxieties that scientists had from the 1980s to the 2000s over the growing pervasiveness of computation and how it was changing the nature of scientific inquiry. She summarized them as “the enduring tension between doing and doubting”, pointing to a worry that younger researchers had become “drunk with code”. They were so immersed in what they created on screen that they could no longer doubt whether their simulations truly reflected reality.

However, Matt Spencer, an anthropologist from the University of Warwick in the UK, believes that science and scientists have evolved in the decade since Turkle’s book was published. “I don’t think you’ll hear this kind of opinion that much in physics these days,” says Spencer, who studies computational practices in science. Indeed, he points to many natural phenomena – such as the behaviour of whole oceans – that simply cannot be manipulated on an experimental scale. “Through exploring a vastly greater range of possible states and processes with simulations, there may be better ways to truly understand underlying physics with simulations,” he says.

Ham agrees, noting that physicists have had to accept they will never be able to understand everything about a simulation. “It’s an inevitable consequence of the systems we study and the techniques we use getting bigger and more complicated,” he says. “The proportion of physicists who actually understand what a compiler does, for example, is vanishingly small – but that’s fine because we have a mechanism for making that OK, and it’s called maths.”

Disruptive technology

Although most physicists now view computation and simulation as an essential part of their work, many are still coming to terms with the disruption caused by this third pillar of science. One of the biggest challenges is how to verify the increasingly complex codes they produce. In other words, how can researchers be confident that their results are correct when they don’t know whether their code does what they think it does? The problem is that while programming is widely taught in undergraduate and graduate physics courses, code verification is not.

Ham voices another concern. “Something that absolutely is the enemy of progress is the ‘not-invented-here’ syndrome,” he says, referring to the belief – particularly in smaller research groups – that it is cheaper, better and faster to develop code in house than bring it in from elsewhere. “It’s a recipe for postdocs spending most of their science time badly reinventing the wheel,” Ham warns.

Because budgets for exploring better methods are limited, Ham says, researchers often find themselves using code that “doesn’t even work very well for them”. To help these teams, Ham and his colleagues on the Firedrake project at Imperial are building compilers that take the maths of a given problem from the scientists and then automatically generate the code to solve it. “We’re effectively separating out what they would like numerically to happen from how it happens,” he says.

For physicists who do develop code that fits their purpose well, it’s tempting to use it to solve other scientific questions. But this can lead to further problems. In 2015 Spencer published an account of the 18 months he spent observing a group of researchers at Imperial, in which he explored their relationship with a software toolkit called Fluidity. Developed in the UK around 1990, Fluidity started out as a small-scale fluid-dynamics program for solving problems related to the safe transport of radioactive materials for nuclear reactors. But it was soon recognized that its elegance and utility could benefit other fields too.

The Imperial group therefore started building new functions on top of Fluidity to serve more researchers and solve further problems in geophysics, ranging from oceans, coasts and rivers to the Earth’s atmosphere and mantle. “Scientific discovery often takes opportunistic pathways of investigation,” says Spencer. “Because Fluidity grew in complexity in this organic way, without foresight of how extensive it would eventually become, problems did accumulate that over time made it harder to use.”

Keeping the code flexible, readable and easy to debug eventually became a serious challenge for the researchers, to the point where one member of the Fluidity team complained that “there were so many hidden assumptions in the code that as soon as you change one detail the whole thing breaks down”.

The team responded by investing in a rewrite of Fluidity, reimplementing the algorithms using best-practice software architecture. Yet growing software is not just a technical challenge; it is also a social one. “We could think about the transition to the new Fluidity as crossing into something more akin to ‘big science’,” says Spencer. “Large-scale collaboration often has to be more bureaucratically managed, and this of course is a fresh source of tension and negotiation for researchers.”

Code that can grow

One large software project that has considered the inherent problems of extending code and collaboration from the outset is AMUSE. Short for the Astrophysical Multipurpose Software Environment, it is a free, open-source software framework, developed over more than a decade, in which existing astrophysics codes modelling stellar dynamics, stellar evolution, hydrodynamics and radiative transfer can be used together to explore deeper questions.

Like LEGO bricks, AMUSE is made up of individual codes, each of which can be added or taken away as required to create a new simulation. AMUSE is managed by a core team led by Simon Portegies Zwart at Leiden Observatory in the Netherlands, and its design allows researchers to change the physics and algorithms without affecting the global framework. AMUSE can therefore evolve while keeping its underlying structure solid.

Yet even AMUSE faces challenges. Portegies Zwart sees funding for basic software maintenance and development as the greatest threat to AMUSE and the next generation of large-scale simulations. “Compilers change, operating systems change, and the underlying codes eventually need to be updated,” he says. “It’s relatively easy to acquire funding to start a new project or to write a new code, but almost impossible if you want to update or improve an existing tool, regardless of how many people use it.”

Expert support

So where does all this leave us? We stand at a point where theoretical calculations coupled with laboratory experiments are often not enough to deal with the complex physics questions researchers are trying to address. Yet simulating this physics is usually beyond the coding skills of a solo scientist, or even of a group of scientists. As a result, computations and simulations are increasingly being developed in collaboration with expert coders who have very different and complementary skills.

To keep up the pace of scientific progress, many computational scientists feel that funders need to fully recognize that supporting this third pillar of science means supporting all of these experts. If they don’t, those who maintain and develop collaborative large-scale physics code bases could start looking to take their careers elsewhere. After all, they have many valuable and marketable skills. “There are,” Ham warns, “plenty of non-science jobs out there for them.”