Compared to scribbling mathematical expressions for entangled quantum states on a sheet of paper, producing real entanglement is a tricky task. In the lab, physicists can only claim a prepared quantum state is entangled after it passes an entanglement verification test, and all conventional testing strategies have a major drawback: they destroy the entanglement in the process of certifying it. This means that, post-certification, experimenters must prepare the system in the same state again if they want to use it – but this assumes they trust their source to reliably produce the same state each time.

In a new study, physicists led by Hyeon-Jin Kim from the Korea Advanced Institute of Science and Technology (KAIST) found a way around this trust assumption. They did this by refining conventional entanglement certification (EC) strategies in a way that precludes complete destruction of the initial entanglement, making it possible to recover it (albeit with probability < 1) along with its certification.

A mysterious state with a precise definition

Entanglement, as mysterious as it is made to sound, has a very precise definition within quantum mechanics. According to quantum theory, composite systems (that is, two or more systems considered as a joint unit) are either separable or entangled. In a separable system, as the name might suggest, each subsystem can be assigned an independent state. In an entangled system, however, this is not possible because the subsystems can’t be seen as independent; as the maxim goes, “the whole is greater than the sum of its parts”. Entanglement plays a crucial role in many fields, including quantum communication, quantum computation and demonstrations of how quantum theory differs from classical theory. Being able to verify it is thus imperative.
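
For readers who like to see definitions in action, the separable/entangled distinction can be sketched numerically for the simplest case, a pure state of two qubits. The snippet below is an illustration only and is not taken from the study: the function names are hypothetical, and it relies on the standard Schmidt decomposition, under which a pure two-qubit state is separable exactly when it has a single nonzero Schmidt coefficient.

```python
import numpy as np

def schmidt_coefficients(state):
    """Singular values of the 2x2 coefficient matrix of a two-qubit pure state."""
    coeffs = np.asarray(state, dtype=complex).reshape(2, 2)
    return np.linalg.svd(coeffs, compute_uv=False)

def is_entangled(state, tol=1e-9):
    """A pure two-qubit state is entangled if more than one Schmidt coefficient is nonzero."""
    return int(np.sum(schmidt_coefficients(state) > tol)) > 1

# Product state |0> (|0> + |1>)/sqrt(2): each qubit has its own independent state.
product = np.kron([1, 0], [1, 1]) / np.sqrt(2)

# Bell state (|00> + |11>)/sqrt(2): no independent single-qubit description exists.
bell = np.array([1, 0, 0, 1]) / np.sqrt(2)

print(is_entangled(product), is_entangled(bell))
```

Mixed states, which are what a real source produces, need more elaborate criteria, which is where the certification strategies described next come in.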

In the latest work, which they describe in Science Advances, Kim and colleagues studied EC tests involving multiple qubits – the simplest possible quantum systems. Conventionally, there are three EC strategies. The first, called witnessing, applies to experimental situations where two (or more) devices making measurements on each subsystem are completely trusted. In the second, termed steering, one of the devices is fully trusted, but the other isn’t. The third strategy, called Bell nonlocality, applies when none of the devices are trusted. For each of these strategies, one can derive inequalities which, if violated, certify entanglement.
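
As a concrete example of such an inequality, the sketch below evaluates the textbook CHSH expression, the best-known Bell inequality. The article does not say which inequalities the team used, so the choice of CHSH, the measurement settings and the function names are all assumptions made for illustration. Any separable state gives a CHSH value of at most 2, so a larger value certifies entanglement.

```python
import numpy as np

Z = np.array([[1, 0], [0, -1]], dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)

def correlator(state, a, b):
    """Expectation value of the joint observable a (qubit 1) times b (qubit 2)."""
    return float(np.real(np.vdot(state, np.kron(a, b) @ state)))

def chsh_value(state):
    """CHSH combination for one standard (assumed) choice of settings."""
    a0, a1 = Z, X
    b0, b1 = (Z + X) / np.sqrt(2), (Z - X) / np.sqrt(2)
    return (correlator(state, a0, b0) + correlator(state, a0, b1)
            + correlator(state, a1, b0) - correlator(state, a1, b1))

bell = np.array([1, 0, 0, 1], dtype=complex) / np.sqrt(2)   # entangled
product = np.array([1, 0, 0, 0], dtype=complex)             # separable |00>

print(chsh_value(bell), chsh_value(product))
```

With these settings the Bell state reaches 2√2, the largest value quantum theory allows, while the product state stays below the bound of 2.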

Weak measurement is the key

Kim and colleagues reconditioned these strategies in a way that enabled them to recover the original entanglement post certification. The key to their success was a process called weak measurement.

In quantum mechanics, a measurement is any process that probes a quantum system to obtain information (as numbers) from it, and the theory models measurements in two ways: projective or “strong” measurements and non-projective or “weak” measurements. Conventional EC strategies employ projective measurements, which extract information by transforming each subsystem into an independent state such that the joint state of the composite system becomes separable – in other words, it completely loses its entanglement. Weak measurements, in contrast, don’t disturb the subsystems so sharply, so the subsystems remain entangled – albeit at the cost of extracting less information than projective measurements do.
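
The difference between the two kinds of measurement can be sketched with a common toy model, which is an assumption here and not necessarily the model used in the study. A two-outcome measurement of one qubit is described by a pair of operators whose sharpness is set by a `strength` between 0 and 1; at 1 it is projective, and at 0 it reveals nothing. The function names are hypothetical, and the concurrence formula used is the standard one for pure two-qubit states (1 for a Bell pair, 0 for a separable state).

```python
import numpy as np

def kraus_operators(strength):
    """Measurement operators for the two outcomes of a Z-basis measurement."""
    hi, lo = np.sqrt((1 + strength) / 2), np.sqrt((1 - strength) / 2)
    return np.diag([hi, lo]), np.diag([lo, hi])

def measure_first_qubit(state, strength, outcome):
    """Return the normalised post-measurement state and the outcome probability."""
    m = kraus_operators(strength)[outcome]
    unnormalised = np.kron(m, np.eye(2)) @ state
    prob = float(np.vdot(unnormalised, unnormalised).real)
    return unnormalised / np.sqrt(prob), prob

def concurrence(state):
    """Entanglement measure for a pure two-qubit state with amplitudes (a, b, c, d)."""
    a, b, c, d = state
    return float(2 * abs(a * d - b * c))

bell = np.array([1, 0, 0, 1]) / np.sqrt(2)
weak_state, _ = measure_first_qubit(bell, 0.6, 0)
strong_state, _ = measure_first_qubit(bell, 1.0, 0)
print(concurrence(weak_state), concurrence(strong_state))
```

In this model a measurement of strength 0.6 leaves a concurrence of roughly 0.8, while the projective measurement leaves none.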

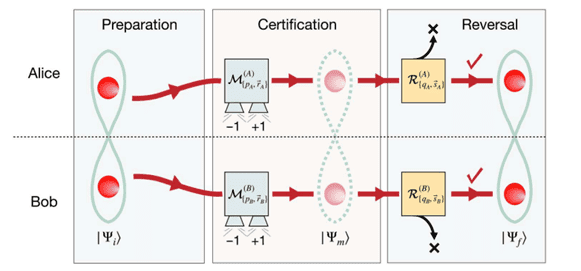

The team introduced a control parameter for the strength of measurement on each subsystem and re-derived the certifying inequality to incorporate these parameters. They then iteratively prepared their qubit system in the state to be certified and measured it with the strength parameters fixed at a value below one (that is, a weak measurement). After all the iterations, they collected statistics to check for a violation of the certification inequality. Once a violation was observed, meaning that the state is entangled, they implemented further suitable weak measurements of the same strength on the same subsystems to recover the initial entangled state with some probability R (for “reversibility”).
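
The recovery step can be illustrated with a minimal sketch, assuming a simple toy model in which a weak measurement of a given `strength` (below 1) acts on one qubit of a Bell pair. In this model a second measurement of the same strength undoes the first whenever it returns the opposite outcome, because the two operators multiply to a multiple of the identity. The function names are hypothetical, and the success probability computed here belongs to the toy model; it should not be read as the R reported in the study.

```python
import numpy as np

def kraus_operators(strength):
    """Measurement operators for the two outcomes of a Z-basis measurement."""
    hi, lo = np.sqrt((1 + strength) / 2), np.sqrt((1 - strength) / 2)
    return np.diag([hi, lo]), np.diag([lo, hi])

def measure_then_reverse(state, strength, first_outcome):
    """Weakly measure qubit 1, then measure it again and keep the opposite outcome."""
    ops = kraus_operators(strength)
    first = np.kron(ops[first_outcome], np.eye(2))
    second = np.kron(ops[1 - first_outcome], np.eye(2))
    unnormalised = second @ first @ state
    # Joint probability of seeing first_outcome and then its opposite.
    prob = float(np.vdot(unnormalised, unnormalised).real)
    return unnormalised / np.sqrt(prob), prob

def reversal_probability(state, strength):
    """Total chance of a successful reversal, summed over both first outcomes."""
    return sum(measure_then_reverse(state, strength, k)[1] for k in (0, 1))

bell = np.array([1, 0, 0, 1]) / np.sqrt(2)
recovered, _ = measure_then_reverse(bell, 0.6, 0)
fidelity = abs(np.vdot(bell, recovered)) ** 2
print(fidelity, reversal_probability(bell, 0.6))
```

When the second outcome matches the first instead, the disturbance is compounded and the attempt fails, which is why recovery only succeeds with some probability.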

Lifting the trust assumption

The physicists also demonstrated this theoretical proposal on a photonic setup called a Sagnac interferometer. For each of the three strategies, they used a typical Sagnac setup for a bipartite system that encodes entanglement into the polarization state of two photons. This involves introducing certain linear optical devices to control the measurement strength and settings for the certification and subsequent retrieval of the initial state.

As predicted, they found that as the measurement strength increases, the reversibility R goes down and the degree of entanglement decreases, while the certification level (a measure of how much the certifying inequality is violated) for each case increases. This implies the existence of a measurement strength “sweet spot” such that the certification levels remain somewhat high without too much loss of entanglement, and hence reversibility.
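
The trade-off can be made concrete with a back-of-the-envelope sketch. It assumes a toy model, not the study's actual analysis, in which a weak measurement of strength p shrinks each measured correlation by a factor p on each side, so that a Bell pair's CHSH value becomes 2√2·p² when both sides use the same strength. It further assumes that a second measurement of the same strength on one qubit undoes the first with probability (1 − p²)/2. The function names are hypothetical.

```python
import numpy as np

def chsh_value(strength):
    """Toy-model CHSH value for a Bell pair, both sides measuring at this strength."""
    return 2 * np.sqrt(2) * strength ** 2

def reversal_probability(strength):
    """Toy-model chance that one qubit's weak measurement is successfully undone."""
    return (1 - strength ** 2) / 2

def sweep(strengths):
    """Tabulate (strength, certification level, reversibility) for each strength."""
    return [(p, chsh_value(p), reversal_probability(p)) for p in strengths]

# Smallest strength at which the toy-model CHSH value reaches the bound of 2.
THRESHOLD = 2 ** -0.25

for p, s, r in sweep(np.linspace(0.80, 1.00, 5)):
    print(f"strength={p:.2f}  CHSH={s:.3f}  reversibility={r:.3f}")
```

In this model certification only becomes possible above a strength of about 0.84, while the reversal probability falls to zero as the strength approaches 1, so any useful operating point lies between the two.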

In praise of weakness

In an ideal experiment, the entanglement source would be trusted to prepare the same state in every iteration, and destroying entanglement in order to certify it would be benign. But a realistic source may not output a perfectly entangled state every time, making it vital to filter out useful entanglement soon after it is prepared. The KAIST team demonstrated this by applying their scheme to a noisy source that produces a multi-qubit mixture of an entangled and a separable state as a function of time. By employing weak measurements at different time steps and checking the value of the witness, the team certified and recovered the entanglement from the mixture, lifting the trust assumption, and then used the recovered entanglement in a Bell nonlocality experiment.
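
To give a feel for what “checking the value of the witness” means, here is a minimal sketch using a standard fidelity-based witness. The article does not specify the team's noisy state or witness, so both are assumptions: the mixture below combines a Bell state with completely random noise, and the witness is negative only when the state's overlap with the Bell state exceeds 1/2, which no separable state can achieve. The names are hypothetical.

```python
import numpy as np

bell = np.array([1, 0, 0, 1]) / np.sqrt(2)
BELL_PROJECTOR = np.outer(bell, bell)

# Witness operator: a negative expectation value certifies entanglement.
WITNESS = np.eye(4) / 2 - BELL_PROJECTOR

def noisy_state(weight):
    """Mixture of a Bell state (with this weight) and fully random two-qubit noise."""
    return weight * BELL_PROJECTOR + (1 - weight) * np.eye(4) / 4

def witness_value(rho):
    """Expectation value of the witness for the density matrix rho."""
    return float(np.real(np.trace(WITNESS @ rho)))

# A source whose output quality drifts: the Bell-state weight differs per time step.
for weight in (0.9, 0.5, 0.2):
    value = witness_value(noisy_state(weight))
    print(f"weight={weight:.1f}  witness={value:+.3f}  certified={value < 0}")
```

For this particular mixture the witness turns negative once the Bell-state weight exceeds 1/3, so time steps with a lower weight would be discarded instead of being passed on to a later experiment.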