Physicists are increasingly developing artificial intelligence and machine learning techniques to advance our understanding of the physical world, but there is rising concern about bias in such systems and their wider impact on society. Julianna Photopoulos explores the issues of racial and gender bias in AI – and what physicists can do to recognize and tackle the problem.

In 2011, during her undergraduate degree at Georgia Institute of Technology, Ghanaian-US computer scientist Joy Buolamwini discovered that getting a robot to play a simple game of peek-a-boo with her was impossible – the machine was incapable of seeing her dark-skinned face. Later, in 2015, as a Master’s student at Massachusetts Institute of Technology’s Media Lab working on a science–art project called Aspire Mirror, she had a similar issue with facial analysis software: it detected her face only when she wore a white mask. Was this a coincidence?

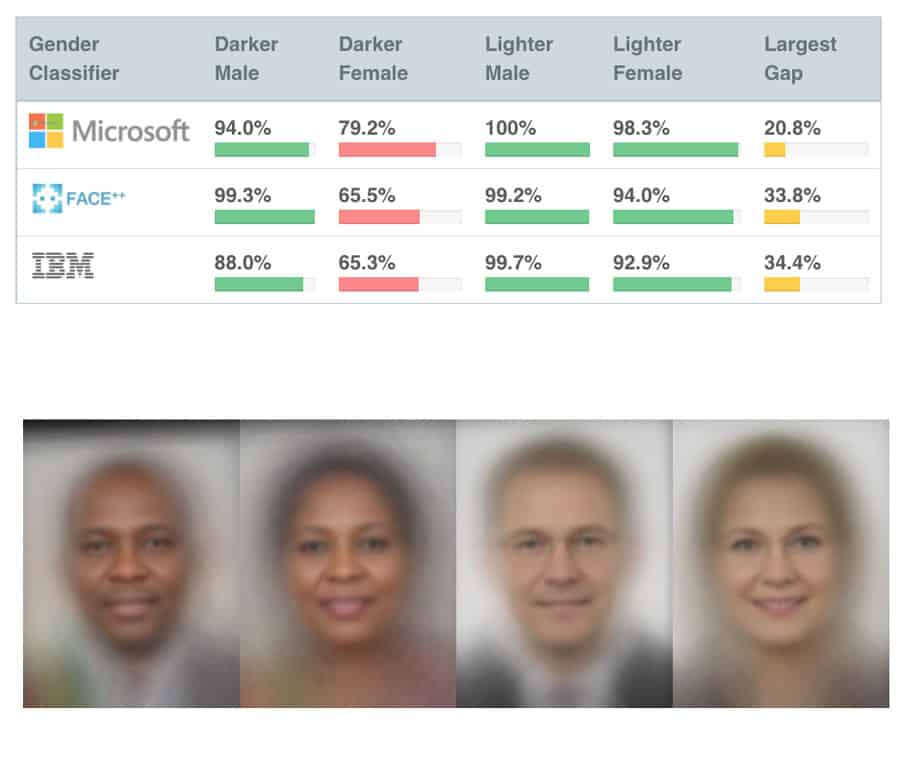

Buolamwini’s curiosity led her to run one of her profile images across four facial recognition demos, which, she discovered, either couldn’t identify a face at all or misgendered her – a bias that she refers to as the “coded gaze”. She then decided to test 1270 faces of politicians from three African and three European countries, with different features, skin tones and gender, which became her Master’s thesis project “Gender Shades: Intersectional accuracy disparities in commercial gender classification” (figure 1). Buolamwini uncovered that three commercially available facial-recognition technologies made by Microsoft, IBM and Megvii misidentified darker female faces nearly 35% of the time, while they worked almost perfectly (99%) on white men (Proceedings of Machine Learning Research 81 77).
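The disaggregated evaluation at the heart of an audit like this can be sketched in a few lines of Python. The example below is a minimal illustration, not the Gender Shades code: the records are invented, and the field names (`skin`, `gender`, `predicted`) and the helper `error_rates_by_group` are hypothetical. It shows how an overall error rate can hide a much higher error rate for one intersectional subgroup.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return {(skin, gender): error rate} for a list of record dicts.

    Each record holds the true labels ("skin", "gender") and the
    classifier's guess for gender ("predicted").
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for r in records:
        group = (r["skin"], r["gender"])
        totals[group] += 1
        if r["predicted"] != r["gender"]:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Invented records for illustration only; not data from any real audit.
records = [
    {"skin": "darker", "gender": "female", "predicted": "male"},
    {"skin": "darker", "gender": "female", "predicted": "female"},
    {"skin": "darker", "gender": "male", "predicted": "male"},
    {"skin": "lighter", "gender": "female", "predicted": "female"},
    {"skin": "lighter", "gender": "male", "predicted": "male"},
    {"skin": "lighter", "gender": "male", "predicted": "male"},
]

rates = error_rates_by_group(records)
overall = sum(r["predicted"] != r["gender"] for r in records) / len(records)

print(f"overall error rate: {overall:.2f}")
for group, rate in sorted(rates.items()):
    print(group, f"{rate:.2f}")
```

In this toy data the overall error rate is about 17%, yet every error falls on a single subgroup, whose error rate is 50%. Reporting only the aggregate figure would hide that gap.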

Machines are often assumed to make smarter, better and more objective decisions, but this algorithmic bias is one of many examples that dispel the notion of machine neutrality and show how such systems replicate existing inequalities in society. From Black individuals being mislabelled as gorillas, to a Google search for “Black girls” or “Latina girls” leading to adult content, to medical devices working poorly for people with darker skin, it is evident that algorithms can be inherently discriminatory (see box below).

“Computers are programmed by people who – even with good intentions – are still biased and discriminate within this unequal social world, in which there is racism and sexism,” says Joy Lisi Rankin, research lead for the Gender, Race and Power in AI programme at the AI Now Institute at New York University, whose books include A People’s History of Computing in the United States (2018 Harvard University Press). “They only reflect and amplify the larger biases of the world.”

Physicists are increasingly using artificial intelligence (AI) and machine learning (ML) in a variety of fields, ranging from medical physics to materials. While they may believe their research will only be applied in physics, their findings can also be applied in wider society. “As particle physicists, our main goal is to develop tools and algorithms to help us find physics beyond the Standard Model, but unfortunately, we don’t step back and anticipate how these can also be deployed in technology and used every day within society to further oppress marginalized individuals,” says Jessica Esquivel, a physicist and data analyst from the Fermi National Accelerator Laboratory (Fermilab) near Chicago, Illinois, who is working on developing AI algorithms to enhance beam storage and optimization in the Muon g-2 experiment.

What’s more, the lack of diversity that exists in physics affects both the work carried out and the systems that are being created. “The huge gender and race imbalance problem is definitely a hindrance to rectifying some of these broader issues of bias in AI,” says Savannah Thais, a particle-physics and machine-learning researcher at Princeton University in New Jersey. That’s why physicists need to be aware of their existing biases and, more importantly, need to ask as a community what exactly they should be doing.

‘Intelligent being’ beginnings

The idea that machines could become intelligent beings has existed for centuries, with myths about robots in ancient Greece and automatons in numerous civilizations. But it wasn’t until after the Second World War that scientists, mathematicians and philosophers began to discuss the possibility of creating an artificial mind. In 1950 the British mathematician Alan Turing famously asked whether machines could think and proposed the Turing Test for measuring their intelligence. Six years later, the research field of AI was formally founded during the Dartmouth Summer Research Project on Artificial Intelligence in Hanover, New Hampshire. Based on the notion that human thought processes could be defined and replicated in a computer program, the term “artificial intelligence” was coined by US mathematician John McCarthy – replacing the previously used “automata studies”.

Although the groundwork for AI and machine learning was laid down in the 1950s and 1960s, it took a while for the field to really take off. “It’s only in the past 10 years that there has been the combination of vast computing power, labelled data and wealth in tech companies to make artificial intelligence on a massive scale feasible,” says Rankin. And although Black and Latinx women in the US were discussing issues of discrimination and inequity in computing as far back as the 1970s, as highlighted by the 1983 Barriers to Equality in Academia: Women in Computer Science at MIT report, the problems of bias in computing systems have only become more widely discussed over the last decade.

The bias is all the more surprising given that women in fact formed the heart of the computing industry in the UK and the US from the 1940s to the 1960s. “Computers used to be people, not machines,” says Rankin. “And many of those computers were women.” But as they were pushed out and replaced by white men, the field changed, as Rankin puts it, “from something that was more feminine and less valued to something that became prestigious, and therefore, also more masculine”. Indeed, in the mid-1980s nearly 40% of all those who graduated with a degree in computer science in the US were women, but that proportion had fallen to barely 15% by 2010.

Computer science, like physics, has one of the largest gender gaps in science, technology, engineering, mathematics and medicine (PLOS Biol. 16 e2004956). Despite increases in the number of women earning physics degrees, the proportion of women is about 20% across all degree levels in the US. Black representation in physics is even lower, with barely 3% of physics undergraduate degrees in the US being awarded to Black students in 2017. There is a similar problem in the UK, where women made up 57.5% of all undergraduate students in 2018, but only 1.7% of all physics undergraduate students were Black women.

This under-representation has serious consequences for how research is built, conducted and implemented. There is a harmful feedback loop between the lack of diversity in the communities building algorithmic technologies and the ways these technologies can harm women, people of colour, people with disabilities and the LGBTQ+ community, says Rankin. One example is Amazon’s experimental hiring algorithms, which – based as they were on the company’s past hiring practices and applicant data – preferentially rejected women’s job applications. Amazon eventually abandoned the tool because gender bias from past hiring practices was embedded too deeply in the system and the company could not ensure fairness.

Many of these issues were tackled in Discriminating Systems – a major report from the AI Now Institute in 2019, which demonstrated that diversity and AI bias issues should not be considered separately because “they are two sides of the same problem”. Rankin adds that harassment within the workplace is also tied to discrimination and bias, noting that it has been reported by the National Academies of Sciences, Engineering, and Medicine that over 50% of female faculty and staff in scientific fields have experienced some form of harassment.

Having diverse voices in physics is essential for a number of reasons, according to Thais, who is currently developing accelerated ML-based reconstruction algorithms for the High-Luminosity Large Hadron Collider at CERN. “A large portion of physics researchers do not have direct lived experience with people of other races, genders and communities, which are impacted by these algorithms,” she says. That’s why scientists from marginalized groups need to be involved in developing algorithms, to ensure that these tools are not inundated with bias, argues Esquivel.

It’s a message echoed by Pratyusha Kalluri, an AI researcher from Stanford University in the US, who co-created the Radical AI Network, which advocates anti-oppressive technologies, and gives a voice to those marginalized by AI. “It is time to put marginalized and impacted communities at the centre of AI research – their needs, knowledge and dreams should guide development,” she wrote last year in Nature (583 169).

Role of physicists

Back at Fermilab, Brian Nord is a cosmologist using AI to search for clues about the origins and evolution of the universe. “Telescopes scan the sky in multi-year surveys to collect very large amounts of complex data, including images, and I analyse that data using AI in pursuit of understanding dark energy which is causing space–time expansion to accelerate,” he explains.

But in 2016 he realized that AI could be harmful and biased against Black people, after reading an investigation by ProPublica that analysed risk-assessment software known as COMPAS, which is used in US courts to predict which criminals are most likely to reoffend and to make decisions about bail setting. The investigation found that Black people were almost twice as likely as white people to be labelled a higher risk, irrespective of the severity of the crime committed or the actual likelihood of reoffending. “I’m very concerned about my complicity in developing algorithms that could lead to applications where they’re used against me,” says Nord, who is Black and knows that facial-recognition technology, for example, is biased against him, often misidentifies Black men, and is under-regulated. So while physicists may have developed a certain AI technology to tackle purely scientific problems, its application in the real world is beyond their control and may be used for insidious purposes. “It’s more likely to lead to an infringement on my rights, to my disenfranchisement from communities and aspects of society and life,” he says.
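One metric often used to make a disparity of this kind concrete is the false-positive rate: the share of people who did not go on to reoffend but were nonetheless labelled high risk. The sketch below is a minimal illustration under stated assumptions, not ProPublica's analysis. The rows are invented, the groups are deliberately anonymous ("A" and "B"), and the field names and the helper `false_positive_rate` are hypothetical.

```python
def false_positive_rate(rows, group):
    """Fraction of people in `group` who did NOT reoffend but were flagged high risk."""
    did_not_reoffend = [
        r for r in rows if r["group"] == group and not r["reoffended"]
    ]
    if not did_not_reoffend:
        raise ValueError(f"no non-reoffenders recorded for group {group!r}")
    flagged = sum(1 for r in did_not_reoffend if r["high_risk"])
    return flagged / len(did_not_reoffend)


# Invented rows for illustration only; these are not COMPAS records.
rows = (
    [{"group": "A", "high_risk": True, "reoffended": False}] * 2
    + [{"group": "A", "high_risk": False, "reoffended": False}] * 2
    + [{"group": "A", "high_risk": True, "reoffended": True}] * 1
    + [{"group": "B", "high_risk": True, "reoffended": False}] * 1
    + [{"group": "B", "high_risk": False, "reoffended": False}] * 3
    + [{"group": "B", "high_risk": True, "reoffended": True}] * 1
)

fpr_a = false_positive_rate(rows, "A")
fpr_b = false_positive_rate(rows, "B")
print(f"group A: {fpr_a:.2f}, group B: {fpr_b:.2f}, ratio: {fpr_a / fpr_b:.1f}")
```

In this toy data both groups contain the same number of people who reoffended and were flagged, so a tool could look equally "accurate" on that count, yet people in group A who did not reoffend are flagged twice as often as those in group B.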

Nord decided not to “reinvent the wheel” and is instead building a coalition of physicists and computer scientists to fight for more scrutiny when developing algorithms. He points to companies such as Clearview AI – a US facial-recognition outfit used by law-enforcement agencies and private institutions – that are scraping social-media data without people’s explicit consent and then selling a surveillance service to law enforcement. Countries around the world, including China, are using such surveillance technology for widespread oppressive purposes, he warns. “Physicists should be working to understand power structures – such as data privacy issues, how data and science have been used to violate civil rights, how technology has upheld white supremacy, the history of surveillance capitalism – in which data-driven technologies disenfranchise people.”

To bring this issue to wider attention, Nord, Esquivel and other colleagues wrote a letter to the entire particle-physics community as part of the Snowmass process, which regularly develops a scientific vision for the future of the community, both in the US and abroad. Their letter, which discusses the “Ethical implications for computational research and the roles of scientists”, emphasizes why physicists, as individuals or at institutions and funding agencies, should care about the algorithms they are building and implementing.

Thais also urges physicists to actively engage with AI ethics, especially as citizens with deep technical knowledge (APS Physics 13 107). “It’s extremely important that physicists are educated on these issues of bias in AI and machine learning, even though it typically doesn’t come up in physics research applications of ML,” she says. One reason for this, Thais explains, is that many physicists leave the field to work in computer software, hardware and data science companies. “Many of these companies are using human data, so we have to prepare our students to do that work responsibly,” she says. “We can’t just teach the technical skills and ignore the broader societal context because many are eventually going to apply these methods beyond physics.”

Both Thais and Esquivel also believe that physicists have an important role to play in understanding and regulating AI because they often have to interpret and quantify systematic uncertainties using methods that produce more accurate output data, which can then counteract the inherent bias in the data. “With a machine-learning algorithm that is more ‘black boxy’, we really want to understand how accurate the algorithm is, how it works on edge cases, and why it performs best for that certain problem,” Thais says, “and those are tasks that a physicist did before.”

Another researcher who is using physics to improve accuracy and reliability in AI is Payel Das, a principal research staff manager with IBM’s Thomas J Watson Research Center. To design new materials and antibiotics, she and her team are developing ML algorithms that can combine learning from both data and physics principles, increasing the success rate of a new scientific discovery by up to 100-fold. “We often enhance, guide or validate the AI models with the help of prior scientific or other form of knowledge, for example, physics-based principles, in order to make the AI system more robust, efficient, interpretable and reliable,” says Das, further explaining that “by using physics-driven learning, one can crosscheck the AI models in terms of accuracy, reliability and inductive bias”.

The real-world impact of algorithmic bias

- In 2015 a Black software developer tweeted that Google Photos had labelled images of him with a friend as “gorillas”. Google managed to fix this issue by deleting the word “gorilla”, and some others referring to primates, from its vocabulary. Because these terms are censored, the service can no longer find primates using labels such as “gorilla”, “chimp”, “chimpanzee” or “monkey”.

- When users searched for the terms “Black girls”, “Latina girls” or “Asian girls”, the Google Ad portal would offer keyword suggestions related to pornography. Searches for “boys” of those same ethnicities also mostly returned suggestions related to pornography, but searches for “white girls” or “white boys” offered no suggested terms. In June 2020 the Google Ad portal was still perpetuating the objectification of Black, Latinx and Asian people; Google has since addressed the issue by blocking results for these terms.

- Infrared technology, such as that in pulse oximeters, does not work properly on darker skin because less light passes through the skin. This can lead to inaccurate readings, which may mean that patients do not get the medical care they need. The same infrared technology has also been shown to fail to detect darker skin in automatic soap dispensers.

Auditing algorithms

In 2020 the Centre for Data Ethics and Innovation, the UK government’s independent advisory body on data-driven technologies, published a review on bias in algorithmic decision-making. It found that there has been a notable growth in algorithmic decision-making over the last few years across four sectors – recruitment, financial services, policing and local government – and discovered clear evidence of algorithmic bias. The report calls for organizations to actively use data to identify and mitigate bias, making sure to understand the capabilities and limitations of their tools. It’s a sentiment echoed by Michael Rovatsos, AI professor and director of the Bayes Centre at the University of Edinburgh. “It’s very hard to actually get access to the data or to the algorithms used,” he explains, adding that companies should be required by government to audit and be transparent about their systems applied in the real world.

Some researchers, just like Buolamwini, are trying to use their scientific experience in AI to uncover bias in commercial algorithms from the outside. They include the mathematician Cathy O’Neil, who wrote Weapons of Math Destruction in 2016 about her work on data-driven biases and who in 2018 founded a consultancy to work privately with companies and audit their algorithms. Buolamwini also continues her work trying to create more equitable and accountable technology through her non-profit Algorithmic Justice League – an interdisciplinary research institute that she founded in 2016 to understand the social implications of AI technologies.

After Buolamwini published her 2018 Gender Shades study with computer scientist Timnit Gebru, who co-founded Black in AI, the findings were sent to the companies involved. A year later, a follow-up study reran the audits and added two more companies: Amazon and Kairos (AIES ’19: Proceedings of the 2019 AAAI/ACM Conference on AI, Ethics, and Society 429). Led by Deborah Raji, a computer scientist and currently a Mozilla Foundation fellow, the follow-up found that the two newly added companies had huge accuracy errors – Amazon’s facial recognition software even failed to classify Michelle Obama’s face correctly – but the original three companies had improved significantly, suggesting their models had been trained on more diverse images.

These two studies had a profound real-world impact, leading to two US federal bills – the Algorithmic Accountability Act and No Biometric Barriers Act – as well as state bills in New York and Massachusetts. The research also helped persuade Microsoft, IBM and Amazon to put a hold on their facial-recognition technology for the police. “We designed an ‘actionable audit’ to lead to some accountability action, whether that be an update or modification to the product or its removal – something that past audits had struggled with,” says Raji. She is continuing her work on algorithmic evaluation and in 2020, with colleagues at Google, developed a framework for algorithmic audits (arXiv:2001.00973) for AI accountability. “Internal auditing is essential as it allows for changes to be made to a system before it gets deployed out in the world,” she says, adding that sometimes the bias involved can be harmful for a particular population “so it’s important to identify the groups that are most vulnerable in these decisions and audit for the moments in the development pipeline that could introduce bias”.

In 2019 the AI Now Institute published a detailed report outlining a framework for public agencies interested in adopting algorithmic decision-making tools responsibly, and subsequently released an Algorithmic Accountability Policy Toolkit. The report called for AI and ML researchers to know what they are building; to account for potential risks and harms; and to better document the origins of their models and data. Esquivel points out the importance of physicists knowing where their data has come from, especially the data sets used to train ML systems. “Many of the algorithms that are being used on particle-physics data are fine-tuned architectures that were developed by AI experts and trained on industry standard data sets – data sets that have been shown to be racist, discriminatory and sexist,” she says, using the example of MIT permanently taking down a widely used, 80-million-image AI data set because existing images were labelled in offensive, racist and misogynistic ways.

Gebru and her colleagues also recently highlighted problems with large data sets such as the Common Crawl open repository of web crawl data, where there is an over-representation of white supremacist, ageist and misogynistic views (FAccT ’21: Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency) – a paper for which she was recently fired from Google’s AI Ethics team. Consequently, Esquivel is clear that academics “have the opportunity to act as an objective third party to the development of these tools”.

Removing bias

The 2019 AI Now Institute report also recommends that AI bias research move beyond technical fixes. “It’s not just that we need to change the algorithms or the systems; we need to change institutions and social structures,” explains Rankin. From her perspective, to have any chance of removing or minimizing and regulating bias and discrimination, there would need to be “massive, collective action”. Involving people from beyond the natural sciences community in the process would also help.

Nord agrees that physicists need to work with scientists from other disciplines, as well as with social scientists and ethicists. “Unfortunately, I don’t see physical scientists or computer scientists engaging sufficiently with the literature and communities of these other fields that have spent so much time and energy in studying these issues,” he says, noting that “it seems like every couple of weeks there is a new terrible, harmful and inane machine-learning application that tries to do the impossible and the unethical.” For example, the University of Texas at Austin only recently stopped using an ML system to predict success in graduate school; the system was trained on data from previous admissions cycles and so would have carried their biases. “Why do we pursue such technocratic solutions in a necessarily humanistic space?” asks Nord.

Thais insists that physicists must become better informed about the current state of these issues of bias, and then understand the approaches adopted by others for mitigating them. “We have to bring these conversations into all of our discussions around machine learning and artificial intelligence,” she says, hoping physicists might attend relevant conferences, workshops or talks. “This technology is impacting and already ingrained in so many facets of our lives that it’s irresponsible to not contextualize the work in the broader societal context.”

Nord is even clearer. “Physicists should be asking whether they should, before asking whether they could, create or implement some AI technology,” he says, adding that it is also possible to stop using existing, harmful technology. “The use of these technologies is a choice we make as individuals and as a society.”