In less than 100 seconds, John Rarity explains why it is tricky to copy quantum information.

US telescopes faced with closure

A committee of the National Science Foundation (NSF) has recommended closing six major astronomy facilities in favour of building and supporting new telescopes. The observatories under threat include two leading radio telescopes – the Green Bank Telescope (GBT) in West Virginia and the Very Long Baseline Array (VLBA), which comprises 10 radio-dish antennas spread across the US from Hawaii to the US Virgin Islands. They are joined by four others based at Kitt Peak in Arizona – the Mayall Telescope, the Wisconsin-Indiana-Yale-National Optical Astronomy Observatory (WIYN) telescope, a 2.1 m telescope and the McMath-Pierce Solar Telescope. US astronomers fear that closing the telescopes would jeopardize the country’s position as a world leader in astronomy.

The NSF’s astronomy portfolio review, chaired by astronomer Daniel Eisenstein from Harvard University, calls for the NSF to stop supporting the six facilities within the next five years. Given tougher budget conditions for the NSF, the money saved from such closures would go towards supporting newer facilities such as the Atacama Large Millimeter/submillimeter Array (ALMA), the Advanced Technology Solar Telescope and eventually the Large Synoptic Survey Telescope.

With a “baseline” of more than 5000 miles, the VLBA is the largest instrument of its kind in the world, while the GBT sports the largest steerable radio dish, at 100 m across. However, the 17-person NSF committee believes that other radio telescopes could take on the workload of the GBT, and that the VLBA’s ability to precisely measure the positions and movements of stars was not deemed a priority in the recent Astrophysics Decadal Survey. “We were charged to recommend the best possible overall portfolio in light of the budget constraints and the Decadal Survey science priorities,” says Eisenstein. “Hard choices have to be made.”
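
To give a feel for why such a long baseline matters for precise position measurements, the short sketch below applies the order-of-magnitude diffraction estimate (angular resolution roughly equal to wavelength divided by aperture) to the two figures quoted above. The 1 cm observing wavelength is a hypothetical choice for illustration, and the estimate ignores factors of order unity.

```python
import math

MILES_TO_M = 1609.344                        # international mile in metres
RAD_TO_MAS = math.degrees(1) * 3600 * 1000   # radians -> milliarcseconds


def diffraction_limit_rad(wavelength_m, aperture_m):
    """Order-of-magnitude angular resolution: theta ~ wavelength / aperture."""
    return wavelength_m / aperture_m


wavelength_m = 0.01                    # hypothetical 1 cm observing wavelength
vlba_baseline_m = 5000 * MILES_TO_M    # "more than 5000 miles" (from the article)
gbt_dish_m = 100.0                     # GBT dish diameter (from the article)

vlba_mas = diffraction_limit_rad(wavelength_m, vlba_baseline_m) * RAD_TO_MAS
gbt_mas = diffraction_limit_rad(wavelength_m, gbt_dish_m) * RAD_TO_MAS

print(f"VLBA: {vlba_mas:.2f} milliarcseconds")
print(f"GBT:  {gbt_mas / 1000:.1f} arcseconds")
```

Under these assumptions the array resolves angles of a fraction of a milliarcsecond, tens of thousands of times finer than a single 100 m dish at the same wavelength.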

“A huge surprise”

Many astronomers, however, are not satisfied with the committee’s recommendations. “The loss of the GBT and the VLBA will be a blow to astronomers within the US and around the world,” says GBT site director Karen O’Neil. “Implementation of the recommendations will have a serious impact on the accessibility of major astronomy facilities to US observers as competition for those facilities becomes extremely high.”

Michael Kramer of the Max Planck Institute for Radio Astronomy in Bonn, which operates the German-based Effelsberg 100-m Radio Telescope, says that the GBT is one of the most important radio telescopes in the world, as its location in a radio quiet zone in the northern hemisphere is unique. “The report has come as a huge and unpleasant surprise,” says Kramer. “I cannot follow the arguments of the report and find it particularly difficult to accept the report’s conclusions.”

That view is shared by Tony Beasley, director of the National Radio Astronomy Observatory, which operates the GBT and the VLBA. “We are pleased that ALMA and the Very Large Array were highly ranked, but the loss of the GBT and VLBA would break up the quartet of the best radio facilities in the world, which cover nearly the entire range of resolution, wavelength coverage and imaging capability,” he says.

However, there may still be a lifeline for the VLBA and GBT, as well as for the other four telescopes named in the committee’s report. Although the recommendation calls for the NSF to cease funding the observatories, other sources of funding, such as private investment, could be found to keep them running in the long term. “The report recommends divestment, which need not be the same as closure,” says Eisenstein. “We are recommending an end to NSF funding but there are many possible implementations of that.”

Integrated quantum chip may help close quantum metrology triangle

Researchers in Germany have moved one step closer to closing the “quantum metrology triangle” by fabricating a proof-of-principle circuit that, for the first time, links two quantum electrical devices in series. A closed triangle – something scientists have been chasing for more than 20 years – would finally allow standardized units of voltage, current and resistance to be defined solely in terms of fundamental constants of nature.

Metrology – the science of measurement – has evolved as new and more accurate ways of standardizing measurements have been found. For example, the metre is nowadays defined in terms of the speed of light, rather than by the old platinum–iridium prototype, because even though it was held in a controlled environment, the prototype was susceptible to tiny chemical and structural changes over long timescales. Quantum metrologists seek to improve upon traditional metrological methods by looking for ways of making high-resolution measurements of physical parameters using quantum theory and trying to link measurements to nature’s fixed fundamental constants.

Uncomplicated relationships?

In the 1960s Brian Josephson discovered that when a junction between two superconductors is irradiated with microwaves, the voltage that appears across the junction is proportional to Planck’s constant (h) and inversely proportional to the electron charge (e) – two fundamental constants of nature. Since the voltage is not affected by the dimensions of the junction or the materials it is made of, this voltage standard can be reproduced anywhere at any time and will always be the same.
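
As a rough illustration of the relation described above, the sketch below uses the standard textbook form of the Josephson voltage, V = n h f / (2e), where f is the microwave frequency and n is an integer step number. The 70 GHz drive frequency is a hypothetical value, and the constants are the exact values fixed in the 2019 revision of the SI, which postdates this article.

```python
PLANCK_H = 6.62607015e-34            # Planck's constant, J s (2019 SI value)
ELEMENTARY_CHARGE = 1.602176634e-19  # electron charge magnitude, C (2019 SI value)


def josephson_voltage(frequency_hz, step=1):
    """Voltage of the n-th step of an irradiated junction: V = n h f / (2e)."""
    return step * PLANCK_H * frequency_hz / (2 * ELEMENTARY_CHARGE)


microwave_hz = 70e9                  # hypothetical microwave frequency
voltage = josephson_voltage(microwave_hz)
print(f"Step voltage: {voltage * 1e6:.2f} microvolts")
```

Nothing about the junction's size or material enters the function, which is the point of the standard: only the frequency and the two constants set the voltage.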

Similarly, a quantum standard for resistance, based on the quantum Hall effect, was discovered by Klaus von Klitzing 15 years later. He found that putting a semiconducting material at a temperature of almost absolute zero in a magnetic field 100,000 times stronger than the Earth’s renders the semiconductor’s resistance independent of the properties of the material, and again only dependent on e and h.
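
The dependence on e and h alone can be made concrete with the textbook expression for the quantized Hall resistance, R = h / (i e²), where i is an integer plateau index. The sketch below is illustrative only; it uses the 2019 SI values of the constants, and the choice of plateaus is arbitrary.

```python
PLANCK_H = 6.62607015e-34            # Planck's constant, J s (2019 SI value)
ELEMENTARY_CHARGE = 1.602176634e-19  # electron charge magnitude, C (2019 SI value)


def hall_resistance(plateau):
    """Quantized Hall resistance on plateau i: R = h / (i e^2)."""
    return PLANCK_H / (plateau * ELEMENTARY_CHARGE ** 2)


for plateau in (1, 2, 4):            # arbitrary example plateaus
    print(f"i = {plateau}: {hall_resistance(plateau):.3f} ohm")
```

The i = 1 value, about 25.8 kΩ, is the quantity usually called the von Klitzing constant.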

But, explains Bernd Kaestner of the Physikalisch-Technische Bundesanstalt, Germany, who helped build the new chip, “There is an uncertainty to e and h that is still quite large, so one can never be sure whether the quantum Hall effect and the Josephson effect are absolutely determined by these quantum relations or whether there are small corrections to them.”

Trouble with triangles

For the last 20 years, scientists have been attempting to link these two quantum-electrical standards in a triangle, defining a quantum-current standard in terms of e and h – the single-electron transport effect – as the third arm. If realized, this quantum metrological triangle would be able to test the consistency of the three electrical standards and work out whether any of the relations are in need of some fine-tuning.
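
The consistency test can be written out in a few lines. The sketch below uses the standard textbook forms of the three relations; the symbols (step number n, plateau index i, Josephson drive frequency f_J and pump frequency f_P) are notation introduced here rather than taken from the researchers' paper.

```latex
\begin{gather}
V_J = \frac{n h f_J}{2e}, \qquad R_H = \frac{h}{i e^2}, \qquad I_P = e f_P \\
V_J = R_H I_P
\;\Longrightarrow\;
\frac{n h f_J}{2e} = \frac{h f_P}{i e}
\;\Longrightarrow\;
\frac{n\, i\, f_J}{2 f_P} = 1
\end{gather}
```

If all three relations hold exactly, e and h cancel and the triangle reduces to a comparison of two frequencies and two integers. Any measured departure from unity would point to a correction in at least one of the three effects.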

“Any renormalizing factor would be incredibly small,” explains J T Janssen of the UK’s National Physical Laboratory, who was not involved in the research, “but it would also be incredibly important, because it would undermine the existing theory.”

Single chip approach

Kaestner and colleagues designed a chip that would generate discrete quantized voltages, by placing a semiconducting single-electron pump and a quantum Hall device in series. The voltage generated was dependent only on the current, which in turn was dependent only on the frequency of the single-electron pump.
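
A back-of-the-envelope version of this chain, from frequency to current to voltage, is sketched below. It assumes the pump transfers one electron per cycle (I = e f) and that the Hall device sits on the i = 2 plateau; the 500 MHz pump frequency and the plateau index are hypothetical values chosen for illustration, not figures from the experiment.

```python
PLANCK_H = 6.62607015e-34            # Planck's constant, J s (2019 SI value)
ELEMENTARY_CHARGE = 1.602176634e-19  # electron charge magnitude, C (2019 SI value)


def pump_current(frequency_hz, electrons_per_cycle=1):
    """Single-electron pump current: I = n e f."""
    return electrons_per_cycle * ELEMENTARY_CHARGE * frequency_hz


def hall_resistance(plateau):
    """Quantized Hall resistance: R = h / (i e^2)."""
    return PLANCK_H / (plateau * ELEMENTARY_CHARGE ** 2)


pump_hz = 500e6                      # hypothetical pump frequency
plateau = 2                          # hypothetical Hall plateau index

current = pump_current(pump_hz)
voltage = current * hall_resistance(plateau)   # equals h f / (i e)

print(f"Current: {current * 1e12:.1f} pA")
print(f"Voltage: {voltage * 1e6:.3f} microvolts")
```

With these inputs the current is tens of picoamps and the voltage is of the order of a microvolt, set entirely by the pump frequency and the two constants.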

“So you have now two sides of the triangle, if you like, combined in one device,” explains Kaestner. This offers an independent check on the Josephson voltage, because the Josephson effect relies on superconductor physics, and the new device on semiconductor physics. “Now one can [try to] produce exactly the same voltage with two fundamentally different physics…This is one elegant way of closing the triangle.”

Simplifying and scaling

“It is certainly a nice experiment to make these two quantum standards in one device,” comments Janssen. But the voltages it generates are only of the order of microvolts. Because quantum Hall devices produce noise in the region of nanovolts, he cautions “You would have to measure for a very long time to get any sort of resolution.”

The researchers, on the other hand, suggest that their device is easily scalable, which would allow the signal-to-noise ratio to be improved significantly. Kaestner argues that an integrated circuit is also the most logical approach, since each micron-sized chip must be kept at a given temperature – about 1 K in this case – by cryostats that occupy several cubic metres of lab space.

But Janssen questions the practicality of connecting 10 current sources and 100 quantum Hall devices in series on one tiny chip, as the researchers propose. His team favour a current amplifier, which ramps the current from a separate single-electron device by a factor of about 10,000 without introducing much error.
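
The two routes to a larger signal can be compared with a simple scaling sketch. It assumes, as an idealization, that the currents of the pumps add and that the resistances of the Hall devices add, so the output voltage grows as the product of the two counts; the amplifier route is modelled as a pure multiplication of the current from one pump. Noise and device imperfections are ignored, and the 500 MHz pump frequency and i = 2 plateau are hypothetical.

```python
PLANCK_H = 6.62607015e-34            # Planck's constant, J s (2019 SI value)
ELEMENTARY_CHARGE = 1.602176634e-19  # electron charge magnitude, C (2019 SI value)


def output_voltage(pump_hz, n_pumps=1, n_hall=1, current_gain=1.0, plateau=2):
    """Idealized V = I * R with additive pump currents and Hall resistances."""
    current = n_pumps * current_gain * ELEMENTARY_CHARGE * pump_hz
    resistance = n_hall * PLANCK_H / (plateau * ELEMENTARY_CHARGE ** 2)
    return current * resistance


pump_hz = 500e6                      # hypothetical pump frequency

single = output_voltage(pump_hz)
integrated = output_voltage(pump_hz, n_pumps=10, n_hall=100)
amplified = output_voltage(pump_hz, current_gain=10_000)

print(f"Single pump + Hall device: {single * 1e6:.2f} microvolts")
print(f"10 pumps, 100 Hall devices: {integrated * 1e3:.2f} millivolts")
print(f"Amplified current (x10,000): {amplified * 1e3:.2f} millivolts")
```

In this idealized picture the integrated chip gains a factor of 1000 and the amplifier a factor of 10,000, so the disagreement is less about the size of the signal than about which approach can be built with small enough errors.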

“It is a nice experiment they have done, it is a nice device, but it would be very difficult for this device to compete with the traditional technology that we are using at the moment…there would have to be a significant breakthrough in device replication to make this competitive,” he says.

Kaestner hopes that this initial proof-of-principle work will prompt others to think of new and innovative combinations of integrated quantum devices. “Single-electron sources are very new, and I think the semiconductor industry is not aware of the possibilities,” he explains, adding “this is really a new circuit component.”

The research is published in Physical Review Letters.

How can you use X-rays to explore the nanoworld?

In less than 100 seconds, Annela Seddon explains why X-rays are such a useful imaging tool.

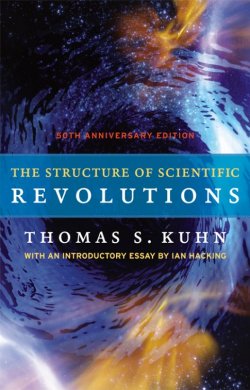

Thomas Kuhn's paradigm shift 50 years on

By Hamish Johnston

“Great books are rare. This is one. Read it and you will see.”

That’s the opening paragraph of an introductory essay included in the 50th anniversary edition of Thomas Kuhn’s book The Structure of Scientific Revolutions, which was first published in August 1962 by University of Chicago Press. About 1.4 million copies of the book have been sold and it was recently described by the Observer as “one of the most influential books of the 20th century”.

The introductory essay is written by the Canadian philosopher Ian Hacking, who explores how Kuhn’s ideas have changed our view of the scientific process over the past five decades – and how controversial they were when the book was first published.

Kuhn was an American physicist who was born in 1922 and died in 1996. His career took an important turn in the 1950s when he taught a course at Harvard University on the history of science.

At the time, science was seen as a cumulative process in which knowledge is built up gradually. As such, it should have been possible for Kuhn to look back over the ages and conclude that the ancient Greeks understood X% of mid-20th century physics, while Newton understood Y%.

Instead, he realized that the way he understood physics was fundamentally different from how an ancient Greek philosopher understood physics. Indeed, he found it impossible to compare the science of ancient Greece with that of the mid-20th century – a property he later called “incommensurability”.

Fascinated by these ideas, Kuhn gave up physics and focused first on the history of science and then its philosophy.

Central to Kuhn’s analysis is the idea that our understanding of the universe has evolved in a series of discontinuities in which an intellectual framework (or paradigm) is built up, only to be brought crashing down in a crisis in which it becomes clear that the theory is incapable of describing nature. An example familiar to physicists is the failure in the early 20th century of classical mechanics and electromagnetism to explain what we now understand as quantum physics. The two paradigms are incommensurate because quantum concepts such as superposition and entanglement simply do not exist in classical physics.

The intervals between these “paradigm shifts” – a much used and abused phrase popularized by Kuhn – are dubbed periods of “normal science”, in which scientists work within a paradigm and solve “puzzles” that are thrown up when observation doesn’t quite agree with theory. This is exactly where particle physicists have been for the last 50 years with the Standard Model. Although many hope the Large Hadron Collider (LHC) will deliver observations that will put particle physics into a period of crisis, so far it has discovered exactly what it was expected to discover.

Indeed, it must be the fear of some particle physicists that the LHC will end up being an extremely expensive puzzle solver rather than a shifter of paradigms.

Sláinte to science

By Margaret Harris

I didn’t make it over to Ireland in mid-July for the big 2012 European Science Open Forum (ESOF) conference/science party in Dublin, so I was pleased to see one of ESOF’s more unusual offshoots land in my in-tray this week.

2012: Twenty Irish Poets Respond to Science in Twelve Lines is a lightweight little book with some hefty thinking inside it. As the title implies, the book contains 20 short poems about science – each written by a different poet from the island that gave the world such scientific luminaries as John Bell, William Rowan Hamilton and George Stokes. The poems’ subject matter ranges from the cosmic to the whimsical to the mundane, and two of the entries are composed of six lines in Irish Gaelic paired with six-line English translations. One that I particularly like (even though – or perhaps because – my pronunciation skills aren’t up to speaking it in the original) is called “Manannán”, and author Gabriel Rosenstock has provided the following translation:

Ladies and gentlemen

Allow me to introduce Manannán:

A microchip which is planted in the brain

Enabling us

To speak Manx

Fluently

The book has been edited by Iggy McGovern, a physicist at Trinity College Dublin, so naturally, physics features in a number of the poems. One of the most inventive of these is Maurice Riordan’s “Nugget”, which is about – yes, really – the gold-covered lump of plutonium that Los Alamos scientists occasionally used as a doorstop during the Manhattan Project. Two others try to capture the sense of wonder found in gazing up at the night sky (with or without a telescope). All in all, it’s a lovely little book for anyone interested in Ireland, poetry or science – or better yet, all three.

What is carbon capture and storage?

In less than 100 seconds, James Verdon explains the principle of trapping carbon dioxide in rocks.

Coated quantum dots make superior solar cells

Researchers at the University of Toronto in Canada and KAUST in Saudi Arabia have made a solar cell out of colloidal quantum dot (CQD) films that has a record-breaking efficiency of 7%. This is almost 40% more efficient than the best previous devices based on CQDs.

CQDs are semiconductor particles only a few nanometres in size. They can be synthesized in solution, which means that films of the particles can be deposited quickly and without fuss on a range of flexible or rigid substrates – just as paint or ink can be.

CQDs could be used as the light-absorbing component in cheap, highly efficient inorganic solar cells. In a solar cell, photons with energies at least equal to the band gap of the photovoltaic material can produce excited electrons and holes (charge carriers). The advantage of using CQDs as the photovoltaic material is that they absorb light over a broad spectrum of wavelengths. This is possible because the band gap of a CQD can be tuned over a large energy range by simply changing the size of the nanoparticles.
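
The link between band gap and absorbed wavelengths follows from the photon-energy relation E = hc/λ: a material absorbs photons with wavelengths up to hc divided by its band gap. The sketch below evaluates that cutoff for three hypothetical band gaps, standing in for dots of different sizes (smaller dots have larger gaps); the values are illustrative and not taken from the study.

```python
HC_EV_NM = 1239.84198   # Planck's constant times the speed of light, in eV nm


def cutoff_wavelength_nm(band_gap_ev):
    """Longest wavelength a material with this band gap can absorb."""
    return HC_EV_NM / band_gap_ev


# Hypothetical band gaps for large, medium and small dots
for band_gap_ev in (0.7, 1.0, 1.3):
    cutoff = cutoff_wavelength_nm(band_gap_ev)
    print(f"Band gap {band_gap_ev:.1f} eV -> absorbs below {cutoff:.0f} nm")
```

Shifting the gap by a few tenths of an electronvolt moves the absorption edge by hundreds of nanometres, which is why changing the dot size offers such wide control over the absorbed spectrum.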

Trapped electrons

There is a snag, however – the high surface-area-to-volume ratio of nanoparticles results in bare surfaces that can become “traps” in which electrons invariably get stuck. This means that electrons and holes have time to recombine instead of being whisked apart to produce useful current. The result is a reduction in the efficiency of devices made from CQD films.

A team led by Edward Sargent at Toronto may now have come up with a solution to this annoying problem. The researchers have succeeded in passivating the surface of CQD films by completely covering all exposed surfaces using a chlorine solution that they added to the quantum-dot solution immediately after it was synthesized. “We employed chlorine atoms because they are small enough to penetrate all of the nooks and crannies previously responsible for the poor surface quality of the CQD films,” explains Sargent.

The team then spin cast the CQD solution onto a glass substrate that was covered with a transparent conductor. Next, an organic linker was used to bind the quantum dots together. This final step in the process results in a very dense film of nanoparticles that absorbs a much greater amount of sunlight.

Boosting absorption

“Our hybrid passivation scheme employs chlorine atoms to reduce the number of traps for electrons associated with poor CQD film-surface quality while simultaneously ensuring that the films are dense and highly absorbing thanks to the organic linkers,” Sargent says.

Electronic-spectroscopy measurements confirmed that the films contained hardly any electron traps at all, he adds. Synchrotron X-ray scattering measurements at sub-nanometre resolution performed by the scientists at KAUST corroborated the fact that the films were highly dense and contained closely packed nanoparticles. “Most solar cells on the market today are made of heavy crystalline materials,” explains Sargent, “but our work shows that light and versatile materials such as CQDs could potentially become cost-competitive with these traditional technologies. Our results also pave the way for low-cost photovoltaics that could be fabricated on flexible substrates, for example using roll-to-roll manufacturing (in the same way that newspapers are printed in mass quantities).”

Exploring new materials

The team is now looking at further reducing electron traps in CQD films for even higher efficiency. “It turns out that there are many organic and inorganic materials out there that might well be used in such hybrid passivation schemes,” adds Sargent, “so finding out how to reduce electron traps to a minimum would be good.”

The researchers say that they are also interested in using layers of different-sized quantum dots to make a multi-junction solar cell that could absorb over an even broader range of light wavelengths.

The research is described in Nature Nanotechnology.

Geologist claims to have found plate tectonics on Mars

A geologist in the US claims to have found the first strong evidence for plate tectonics on Mars by studying satellite images of a huge trough in the Martian surface. It had been thought, until now, that tectonic movements were only present on Earth.

An Yin, professor of geology at the University of California, Los Angeles, spotted the tectonic activity in Valles Marineris – a 4000-km-long canyon system named after the Mariner 9 Mars orbiter that discovered the system in the 1970s. Valles Marineris stretches one-fifth of the way round the Martian surface and reaches depths of up to 7 km. The Earth’s 1.6-km-deep Grand Canyon is a mere surface scratch in comparison.
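
The "one-fifth of the way round" figure is easy to check. The sketch below compares the canyon's length with the circumference of Mars, using a mean Martian radius of about 3390 km; that radius is a commonly quoted value and does not come from the article.

```python
import math

MARS_RADIUS_KM = 3389.5        # mean radius of Mars (not from the article)
CANYON_LENGTH_KM = 4000.0      # Valles Marineris length (from the article)
CANYON_DEPTH_KM = 7.0          # maximum depth (from the article)
GRAND_CANYON_DEPTH_KM = 1.6    # Grand Canyon depth (from the article)

circumference_km = 2 * math.pi * MARS_RADIUS_KM
fraction = CANYON_LENGTH_KM / circumference_km
depth_ratio = CANYON_DEPTH_KM / GRAND_CANYON_DEPTH_KM

print(f"Fraction of circumference: {fraction:.2f}")
print(f"Depth relative to Grand Canyon: {depth_ratio:.1f}x")
```

The canyon spans a little under a fifth of the planet's circumference and is more than four times as deep as the Grand Canyon.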

The formation of Valles Marineris is still not understood despite four decades of research. The most widely accepted theory is that spreading apart of the Martian surface created the system, similar to how rift valleys form on Earth, with the resulting crack being deepened by erosion. But Yin has now found evidence for a completely different process.

Looking for clues

Yin made use of high-resolution images taken by several Mars orbiters, including NASA’s Mars Odyssey and Mars Reconnaissance Orbiter. He focused particularly on the southern region of Valles Marineris, where a 2400-km-long trough connects three large canyons: the Ius, Melas and Coprates Chasmata. He painstakingly trawled through these images to look for “kinematic indicators” on the Martian surface – marks that reveal how the crust has moved. He discovered faults in the Ius-Melas-Coprates trough with a consistent, slanted orientation, which indicates a horizontal, shearing motion. He also noticed “headless” landslips at the bottom of the trough – that is, landslips without any traceable source, possibly caused by a horizontal movement of the crust since the landslips occurred.

Furthermore, Valles Marineris is exceptionally long and straight. “On Earth, there is only one kind of fault that can make a very straight and linear trace,” says Yin, “and that’s a ‘strike-slip’ fault – a fault that’s moving horizontally over a very large distance.” He also adds that the rocks on both sides of Valles Marineris are extremely flat, whereas rocks near a rift tend to be tilted.

California on Mars

Yin studied the offsets of three surface features around the fault zone to estimate the magnitude of the slip. All three measurements gave roughly the same value – 150 km – for the total distance moved by the fault. By comparison, the San Andreas Fault in California has moved around 300 km, meaning that, when scaled by the planets’ radii, the two faults are similar (the radius of Earth is around twice that of Mars).
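
The scaling argument can be made explicit by dividing each fault's slip by its planet's radius. The sketch below uses the slip values quoted above together with commonly quoted mean radii for the two planets, which are not given in the article.

```python
EARTH_RADIUS_KM = 6371.0       # mean radius of Earth (not from the article)
MARS_RADIUS_KM = 3389.5        # mean radius of Mars (not from the article)

SAN_ANDREAS_SLIP_KM = 300.0    # total slip, from the article
VALLES_MARINERIS_SLIP_KM = 150.0   # total slip, from the article

earth_scaled = SAN_ANDREAS_SLIP_KM / EARTH_RADIUS_KM
mars_scaled = VALLES_MARINERIS_SLIP_KM / MARS_RADIUS_KM

print(f"San Andreas slip / Earth radius:     {earth_scaled:.3f}")
print(f"Valles Marineris slip / Mars radius: {mars_scaled:.3f}")
```

Both ratios come out at between 4% and 5% of the planetary radius, which is the sense in which the two faults are similar.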

All of Yin’s evidence points to a strike-slip system at a plate boundary, otherwise known as a transform fault. “If you have rigid blocks on the lithosphere of a planet that move horizontally over a large distance, then that’s plate tectonics,” says Yin. He names the two plates “Valles Marineris North” and “Valles Marineris South”.

“Clearly, if the reconstruction is right, this is a large transform fault,” says Norm Sleep, professor of geophysics at Stanford University. Sleep also comments that the fault should have “a net subduction effect at one end and a net spreading effect at the other”.

“The eastern end is a ‘spreading centre’ without eruption of volcanic rocks,” Yin confirms, “whereas the western end is an extensional zone filled with volcanic rocks.”

Primitive tectonics

Yin believes that the Valles Marineris fault zone is still active today but that tremors – or “Marsquakes” – are likely to be rare occurrences. “If our history of Mars is correct, everything has evolved very slowly, tectonically,” he says, “so the fault found in Valles Marineris may wake up once every million years.”

This slow geological pace may explain why the red planet is at a primitive stage of plate tectonics compared with the Earth. Yin notes that the plate-tectonic activity on Mars is localized, covering only around 20–25% of the Martian surface – the rest of Mars reveals no signs of tectonic activity.

So why do Earth and Mars have plate tectonics but not Mercury and Venus? Yin thinks that this is related to the density of a planet’s crust during its early formation, which would determine whether fractured pieces of crust could subduct into the underlying mantle. He hopes to publish this hypothesis in a future paper.

The research is described in Lithosphere.

What is a black hole?

In less than 100 seconds, Andy Young explains why not even light can escape from black holes.