Physicists in Hong Kong have used tiny spheres to simulate what happens when a crystalline solid starts to melt. Rather than seeing crystalline defects emerge during melting, the team found that the transition from solid to liquid is driven by small groups of spheres that move co-operatively in loops.

Almost all solid objects – including ice cubes – melt from the surface in a process that is well understood by physicists. But while some materials can be heated internally by focussing a beam of light at a point well below their surface, actually seeing how the atoms or molecules make the transition from solid to liquid is extremely hard. As a result, scientists have little understanding of how internal melting occurs.

To get around this problem Yilong Han and colleagues from the Hong Kong University of Science and Technology simulated the melting process using a crystal made of tiny N-isopropylacrylamide (NIPA) spheres. These spheres all have the same radius of about 700 nm, which is about 1000 times larger than a typical unit cell in a crystalline solid. Crucially, though, this is large enough to be seen using an optical microscope.

Tracking the spheres

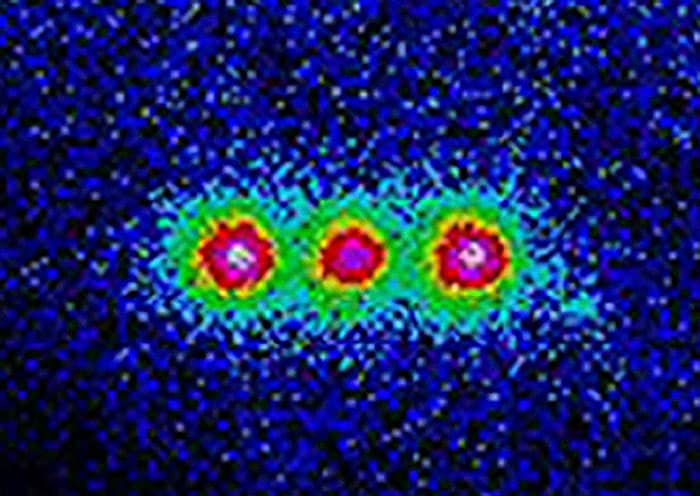

The team began by compressing a collection of these spheres to create a face-centred cubic (FCC) lattice – the crystal structure adopted by copper, aluminium and many other metals. A light was then focussed to a point about 45 μm beneath the surface, which is well within the bulk of the crystal. The researchers then watched how the spheres moved, using an optical microscope equipped with a camera that could take pictures at a rate of 15 frames per second.

In an atomic or molecular crystal, melting is driven by the increasingly vigorous random thermal motion of the constituent atoms or molecules as the material heats up. For much larger spheres, however, it would be impossible to deliver enough energy to make them move about like atoms. What makes NIPA interesting is that it shrinks when heated: the volume of the spheres drops by more than 30% as they are warmed from 26 °C to 31 °C. As the spheres shrink, the fraction of the crystal’s volume that they fill falls, giving them room to move around.

The team began with a NIPA FCC crystal in a “solid state”, in which spheres occupy more than 54.5% of the volume and water fills the rest. This “volume fraction” is much smaller than the familiar 74% of a close-packed FCC lattice of hard spheres, but Han points out that the FCC structure remains the most stable configuration until the volume fraction drops below 54.5%. Below this value, the material becomes disordered and resembles a liquid.
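The arithmetic behind the shrinking trick can be sketched in a few lines of Python. This is an illustrative back-of-the-envelope check, not a calculation from the study: it assumes the number of spheres per unit volume stays fixed while they shrink, so that the volume fraction scales with the volume of a single sphere, and it uses a hypothetical starting volume fraction of 0.60. The 74% figure is the close-packing limit π/(3√2) ≈ 0.7405.

```python
import math

# Maximum packing fraction of a close-packed FCC lattice of equal hard
# spheres: pi / (3 * sqrt(2)) ~= 0.7405, the "familiar 74%".
PHI_FCC_MAX = math.pi / (3 * math.sqrt(2))

# Volume fraction below which, per the article, the FCC crystal stops being stable.
PHI_MELT = 0.545


def shrunk_volume_fraction(phi_initial: float, volume_drop: float) -> float:
    """Volume fraction after every sphere loses a fraction `volume_drop` of its volume.

    Assumption: the number of spheres per unit volume is unchanged while they
    shrink, so the volume fraction scales directly with single-sphere volume.
    """
    return phi_initial * (1.0 - volume_drop)


def radius_ratio(volume_drop: float) -> float:
    """New radius divided by old radius for a given fractional volume drop."""
    return (1.0 - volume_drop) ** (1.0 / 3.0)


if __name__ == "__main__":
    phi0 = 0.60  # hypothetical starting volume fraction, for illustration only
    phi1 = shrunk_volume_fraction(phi0, 0.30)
    print(f"FCC close packing: {PHI_FCC_MAX:.4f}")
    print(f"volume fraction after a 30% volume drop: {phi1:.3f}")
    print(f"radius ratio: {radius_ratio(0.30):.3f}")
    print("below the 54.5% threshold:", phi1 < PHI_MELT)
```

Under these assumptions, a 30% drop in sphere volume takes the volume fraction from 0.60 to 0.42, well below the 54.5% threshold, even though each sphere’s radius shrinks by only about 11%.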

Deviating from equilibrium

Han’s team then focused a heating lamp on a spot of the crystal about 75 µm across and 20 μm deep and gently heated the spheres for about 107 minutes. The researchers quantified how far each sphere deviated from its equilibrium position by calculating its Lindemann parameter (L): the root-mean-square displacement of a particle about its equilibrium position, expressed as a fraction of the spacing between neighbouring particles.
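As a rough illustration, a per-particle Lindemann parameter can be computed from tracked trajectories as below. This is a minimal sketch assuming the textbook definition, with each sphere’s time-averaged position standing in for its equilibrium position; the study may use a variant (for example, displacements measured relative to neighbouring particles), and the function name and synthetic data are hypothetical.

```python
import numpy as np


def lindemann_parameters(positions: np.ndarray, a: float) -> np.ndarray:
    """Per-particle Lindemann parameter from tracked trajectories.

    positions: shape (n_frames, n_particles, dim), the tracked coordinates of
        every sphere in every frame.
    a: nearest-neighbour spacing of the lattice, in the same units.

    Computes L_i = sqrt(<|r_i - <r_i>|^2>) / a, using each particle's
    time-averaged position <r_i> as its equilibrium position.
    """
    mean_pos = positions.mean(axis=0)             # (n_particles, dim)
    disp = positions - mean_pos                   # deviation in every frame
    msd = (disp ** 2).sum(axis=-1).mean(axis=0)   # mean squared deviation per particle
    return np.sqrt(msd) / a


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_frames, a = 500, 1.0
    sites = np.array([[0.0, 0.0], [1.0, 0.0]])    # two hypothetical lattice sites
    # Small thermal jitter for the first sphere, much larger for the second.
    jitter = np.array([0.03, 0.2])[None, :, None]
    traj = sites[None, :, :] + jitter * rng.standard_normal((n_frames, 2, 2))
    L = lindemann_parameters(traj, a)
    print(L)
    print("high-L particles:", L > 0.1)  # 0.1 is an illustrative threshold
```

The threshold of 0.1 in the usage example echoes the classic Lindemann criterion, which associates melting with root-mean-square displacements of roughly a tenth of the lattice spacing; it is an illustrative choice, not the team’s cut-off.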

The team concluded that the melting process involves two main steps. The material begins in the solid FCC phase with low values of L. As it heats up, large domains of particles with high L emerge. Finally, a small region of liquid forms and then grows throughout the crystal. According to the team, this two-step process is consistent with a century-old principle of melting and crystallization called “Ostwald’s step rule”, which holds that a phase transformation can proceed via an intermediate, less stable state rather than going directly to the most stable one.
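One way to pick out “large domains” of high-L particles is to treat two particles as connected when both lie above an L threshold and sit closer together than a cutoff distance, then to find the connected clusters with a breadth-first search. The sketch below does this; the threshold, cutoff and function name are hypothetical, and it is not presented as the team’s own analysis.

```python
from collections import deque

import numpy as np


def high_L_domains(positions: np.ndarray, L: np.ndarray, threshold: float,
                   cutoff: float) -> list[list[int]]:
    """Group high-L particles into connected domains.

    positions: (n_particles, dim) mean positions of the spheres.
    L: per-particle Lindemann parameters, shape (n_particles,).
    threshold: L above which a particle counts as "high L" (hypothetical choice).
    cutoff: high-L particles closer than this belong to the same domain.
    Returns domains as sorted lists of particle indices, largest first.
    """
    high = np.flatnonzero(L > threshold)
    pts = positions[high]
    # Pairwise distances among high-L particles only (O(n^2), fine for a sketch).
    dist = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1)
    adjacent = dist < cutoff
    seen = np.zeros(len(high), dtype=bool)
    domains = []
    for start in range(len(high)):
        if seen[start]:
            continue
        # Breadth-first search over the neighbour graph from this particle.
        seen[start] = True
        queue, members = deque([start]), []
        while queue:
            i = queue.popleft()
            members.append(int(high[i]))
            for j in np.flatnonzero(adjacent[i] & ~seen):
                seen[j] = True
                queue.append(j)
        domains.append(sorted(members))
    domains.sort(key=len, reverse=True)
    return domains


if __name__ == "__main__":
    pos = np.array([[0.0, 0.0], [1.0, 0.0], [5.0, 0.0], [6.0, 0.0], [10.0, 0.0]])
    L = np.array([0.2, 0.2, 0.2, 0.05, 0.2])
    print(high_L_domains(pos, L, threshold=0.1, cutoff=1.5))  # [[0, 1], [2], [4]]
```

Tracking how the size of the largest domain changes from frame to frame would then give a crude picture of the intermediate high-L stage.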

When the physicists took a closer look at how melting occurred, they found that the process seemed to begin via “loop rearrangements” of the spheres. In such a rearrangement, a small number of spheres move around a closed loop, with one sphere moving into the space vacated by its neighbour and another sphere moving in behind it. Although these loops preserve the FCC lattice, they become surrounded by regions of high L, which then make the transition to the liquid state.
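If each sphere is assigned to a lattice site before and after a rearrangement, a loop of this kind shows up as a cycle in the site-to-site permutation: every sphere moves into a site vacated by another, and the chain closes on itself. The sketch below finds such cycles. It assumes every site stays occupied, as it does while the FCC lattice is intact, and the function name and the particle and site ids are hypothetical.

```python
def rearrangement_loops(site_before: dict[int, int],
                        site_after: dict[int, int]) -> list[list[int]]:
    """Find closed loops of particles that cyclically exchanged lattice sites.

    site_before / site_after map particle id -> lattice-site id at two times.
    When every site stays occupied, the map "old site -> new site" is a
    permutation. Its cycles of length >= 2 are loops in which each sphere
    moves into a site vacated by a neighbour; a cycle of length 2 is a swap.
    """
    before_sites = list(site_before.values())
    if (site_before.keys() != site_after.keys()
            or len(set(before_sites)) != len(before_sites)
            or sorted(before_sites) != sorted(site_after.values())):
        raise ValueError("occupied sites changed: not a pure rearrangement")

    particle_on = {site: p for p, site in site_before.items()}
    # For each site, the site that its earlier occupant moved to.
    next_site = {s: site_after[p] for s, p in particle_on.items()}

    visited, loops = set(), []
    for start in next_site:
        if start in visited:
            continue
        cycle, s = [], start
        while s not in visited:      # follow the permutation until it closes
            visited.add(s)
            cycle.append(particle_on[s])
            s = next_site[s]
        if len(cycle) >= 2:          # length-1 cycles are spheres that stayed put
            loops.append(cycle)
    return loops


if __name__ == "__main__":
    # Hypothetical example: spheres 10, 11 and 12 rotate around a loop of three
    # sites, spheres 13 and 14 swap, and sphere 15 stays put.
    before = {10: 0, 11: 1, 12: 2, 13: 3, 14: 4, 15: 5}
    after = {10: 1, 11: 2, 12: 0, 13: 4, 14: 3, 15: 5}
    print(rearrangement_loops(before, after))  # [[10, 11, 12], [13, 14]]
```

Framing the loops as permutation cycles means a simple swap and a longer loop are detected by the same logic, and any move that empties a site is rejected rather than miscounted.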

Surprisingly long time

Han says that the team was initially surprised to see that the large-L domains persisted for relatively long times of about 30 s. “Then we realized that particle swapping can stabilize the large-L region and the large-L region can, in turn, promote particle swapping,” he says.

One interesting phenomenon that had not been predicted by theory, but that the team did observe, was the coalescence of two or more liquid regions into a larger one. This larger domain rapidly becomes spherical because of the surface tension at the boundary between the liquid and solid phases. Conversely, one thing that some theories predict but that was not seen in the experiment is the emergence of crystalline defects, such as disclinations, during melting. Han points out, however, that a defect-mediated melting theory is “just a guess without a theoretical derivation”.

Difficult measurement

Gary Bryant of RMIT University in Australia told physicsworld.com that the new work is an important step towards a better understanding of crystal melting, adding that the study “goes a long way to measuring homogeneous melting in bulk materials, which is normally tricky because temperature variations are usually transferred from the container walls, leading to inhomogeneous or heterogeneous melting”.

Although the work provides important insights into the melting process, both Han and Bryant admit that understanding internal melting has few practical applications, because it rarely happens in real materials. “One possible application might be in selective annealing – for those trying to create large, defect-free colloidal crystals,” says Bryant. Such crystals could find use in photonics or other nanotechnology applications.

Han and colleagues are now planning to use their technique to look at the second stage of melting – when the nucleus of liquid expands rapidly. They also want to study the effect of local heating on regions of a crystal that contain a single defect, such as a dislocation or grain boundary, to see how its presence affects the melting. Studying solid–solid transitions between two crystal structures as well as solid–glass transitions are also on the researchers’ to-do list.

The research is described in Science.