The Iitate region of Japan is a beautiful place. Its pleasant roads wind through spectacular, steep-sided mountains and terraced paddy fields, and its villages are far away from the coastal areas devastated by the 2011 tsunami. But anyone who visits Iitate – as we did at the end of last year – will immediately notice a problem. There are very few people there. None of the shops were open as we drove around the region’s villages. There were no children playing in the gardens, and ours was one of only a handful of cars on the road.

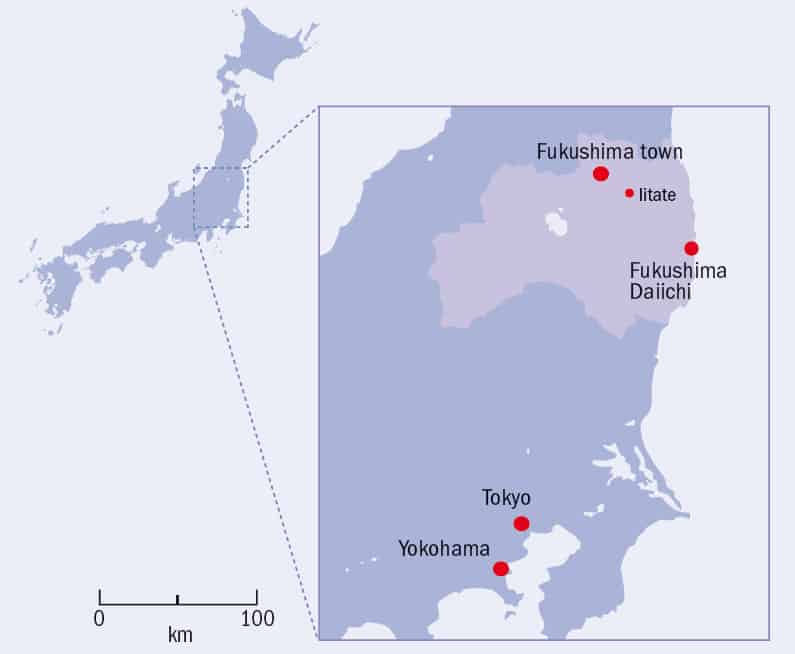

A look at a map (figure 1) reveals the reason for their absence. The Iitate region is located in Japan’s Fukushima prefecture, around 60 km from the Fukushima Daiichi nuclear power station. After the Tōhoku earthquake on 11 March, a 15 m high tsunami wiped out the station’s power supplies, sending three of the six reactors into a partial meltdown. Tens of quadrillions (10¹⁵) of becquerels of radioactive caesium were released into the air, and over the next few days, wind and rain carried these radioactive isotopes to the surrounding countryside – including the villages of Iitate.

In the months since the meltdown, much of the media attention has focused on what went wrong at the power station, and how safety measures could be improved to prevent it from happening again (see Physics World May 2011 pp12–13 and March 2012 pp19–20). But the people in areas such as Iitate and even further afield have a more immediate concern: their health. It was this concern that led us to visit the region in late 2011, in connection with a project sponsored by TUV Rheinland Japan. The company had been receiving many enquiries about safety and the import/export of goods, and wanted to be able to provide clear, accurate and independent information about the radiological situation in Japan. The goal of the project was to set up new instrumentation at TUV Rheinland’s laboratories in Yokohama to check food and soil for radioactive contamination. Before doing this, we drove across the affected region, collecting soil samples at different distances from the stricken reactor. Then, when we returned to the laboratory, we conducted detailed studies of the samples to help us devise the procedures we would be using for food and soil measurements.

Areas of concern

At first sight, the numbers we found were scary. Tests on the soil around Iitate showed nearly 100,000 caesium disintegrations per second for every kilogram of soil, 2500 times the level found in soil near nuclear power stations in the UK. Such high levels of radioactivity meant that the dose rates in the region’s villages were similar to those present in restricted areas inside nuclear power stations, which only radiation workers wearing protective equipment and dosimeters are permitted to enter. The rates were particularly high near drains and ditches, and on mossy ground – anywhere that rainwater had collected after the accident.

It would be a mistake, however, to get too caught up in the raw numbers. While 100,000 disintegrations per second sounds like a lot, it is not immediately obvious what such figures mean for human health. It is also worth remembering that detecting radioactivity is much easier than detecting other industrial pollutants. The soil in the Iitate region might be contaminated with agrochemicals and heavy metals as well, but this would be much harder to detect. Most of us are willing to delegate our worries about these harder-to-detect contaminants to authorities such as the UK’s Food Standards Agency. Why can we not seem to do the same for radioactivity? Do we not trust the authorities to act in our best interests? If not, who can we trust to keep us safe?

For the citizens of Japan, these are pressing questions. People in this earthquake-prone country are used to living under the threat of natural disasters, and coping with their aftermath is a part of everyday life. Outwardly, the reactions of authorities and individuals have been pragmatic and efficient. Thousands of people have been trained as radiation workers to help with the clean-up efforts, many organizations including supermarket chains have invested in radiation detection equipment to check foodstuffs for contamination, and many private inspection and certification firms (including TUV Rheinland) have taken on new responsibilities for checking industrial products and export cargoes for radioactivity.

But underneath the country’s calm exterior, there is real concern. Low levels of radioactivity can be detected in the soil in Tokyo, more than 200 km from the Daiichi reactors. Reports that traces of radioactive caesium have been found in the urine of children living in Fukushima town, 90 km away, have given parents some sleepless nights. Schools are particularly worried. The International School at Yokohama, for example, has tried to reassure parents by arranging regular radiation surveys and placing restrictions on where it buys food.

The risk to health

One thing that has not helped the situation is the plethora of scientific terms for measuring radioactivity. The mix of units such as sieverts and becquerels – not to mention prefixes such as milli and micro – has sown confusion among journalists and members of the public, while creating headaches for scientists and officials trying to evaluate and communicate the risks.

In the midst of this muddle, however, one thing is clear: away from the Daiichi site itself, the principal risk to health comes from an increased probability of contracting cancer caused by interactions of radiation with the body. This risk is related to the energy deposited by the radiation in tissue, a quantity known as the dose and measured in sieverts (Sv), where 1 Sv = 1 J kg⁻¹ (see “Dose”). Ionizing radiation can reach the body in two ways. One is via direct gamma radiation from, for example, radioactivity deposited on surfaces. The other is by ingesting radioactive substances (including alpha, beta and gamma emitters), for example in food.

The external gamma dose can be measured directly with a hand-held meter. The dose from ingestion, however, has to be estimated from another quantity called the activity, which is measured in units of disintegrations per second. The standard SI unit of activity is the becquerel (Bq), where 1 Bq = 1 disintegration per second. Unfortunately, this means that the cause of the problem (radioactive isotopes of caesium) is measured in becquerels, while the risk of harm (the effective dose) is measured in sieverts. To add to the communication difficulties, these are not the only units in common usage. The US nuclear industry uses the unit rem instead of sieverts to measure dose (1 Sv = 100 rem), and the curie instead of becquerels to measure activity (1 curie = 3.7 × 10¹⁰ Bq). But even if you stick to SI units, it is still confusing that the number 1 can denote both a very large quantity and a very small one: a dose of 1 Sv is enough to cause immediate radiation sickness, whereas a radioactivity content of 1 Bq is tiny, thousands of times less than the activity caused by traces of radioactive potassium in the human body. As a rule of thumb, millisieverts (mSv, 10⁻³ Sv) and megabecquerels (MBq, 10⁶ Bq) are the levels at which the risks to human health may start to become significant.
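The unit conversions quoted above can be captured in a few lines. The following is a minimal Python sketch that uses only the two conversion factors given in the text (1 Sv = 100 rem and 1 curie = 3.7 × 10¹⁰ Bq); the function names are our own and purely illustrative.

```python
# Conversion factors quoted in the text.
REM_PER_SIEVERT = 100.0   # 1 Sv = 100 rem
BQ_PER_CURIE = 3.7e10     # 1 curie = 3.7e10 Bq


def sievert_to_rem(dose_sv: float) -> float:
    """Convert a dose from sieverts to rem."""
    return dose_sv * REM_PER_SIEVERT


def rem_to_sievert(dose_rem: float) -> float:
    """Convert a dose from rem to sieverts."""
    return dose_rem / REM_PER_SIEVERT


def curie_to_becquerel(activity_ci: float) -> float:
    """Convert an activity from curies to becquerels."""
    return activity_ci * BQ_PER_CURIE


def becquerel_to_curie(activity_bq: float) -> float:
    """Convert an activity from becquerels to curies."""
    return activity_bq / BQ_PER_CURIE


if __name__ == "__main__":
    # The two "rule of thumb" levels, expressed in the non-SI units.
    print("1 mSv in rem:", sievert_to_rem(1e-3))
    print("1 MBq in curies:", becquerel_to_curie(1e6))
```

Keeping the factors as named constants makes it harder to confuse a dose conversion with an activity conversion, which is exactly the muddle described above.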

In most countries, members of the public receive a dose of about 2–3 mSv per year from a combination of cosmic rays and naturally occurring radioactivity in the environment. To quantify how much the Fukushima disaster has added to this dose, you need to take into account two factors: the external dose (as measured on the dosimeter) and the internal dose from ingesting contaminated food. For foodstuffs, the Japanese authorities initially imposed a limit of 500 Bq of caesium isotopes per kilogram. Someone who ate food at this limit for an entire year would receive, at most, an additional dose of 5 mSv.
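The step from activity eaten to dose received is a multiplication by a nuclide-specific dose coefficient. The sketch below illustrates that relationship in Python. The coefficients are an assumption on our part: they are the adult ingestion values published by the ICRP (about 1.3 × 10⁻⁸ Sv per Bq for caesium-137 and 1.9 × 10⁻⁸ Sv per Bq for caesium-134) and do not appear in the article itself. The function names are hypothetical.

```python
# Assumed adult ingestion dose coefficients in Sv per Bq
# (published ICRP values; not taken from this article).
DOSE_COEFF_SV_PER_BQ = {
    "Cs-137": 1.3e-8,
    "Cs-134": 1.9e-8,
}


def committed_dose_msv(activity_bq: float, nuclide: str) -> float:
    """Dose in mSv from ingesting `activity_bq` becquerels of `nuclide`."""
    return activity_bq * DOSE_COEFF_SV_PER_BQ[nuclide] * 1e3


def activity_for_dose_bq(dose_msv: float, nuclide: str) -> float:
    """Ingested activity in Bq that would deliver `dose_msv` millisieverts."""
    return dose_msv * 1e-3 / DOSE_COEFF_SV_PER_BQ[nuclide]


if __name__ == "__main__":
    # Dose from swallowing 1 MBq of caesium-137.
    print("1 MBq of Cs-137 ->", committed_dose_msv(1e6, "Cs-137"), "mSv")
    # Total yearly intake of caesium-137 corresponding to 5 mSv.
    print("5 mSv <-", activity_for_dose_bq(5.0, "Cs-137"), "Bq of Cs-137")
```

Under these assumed coefficients, an intake of the order of a megabecquerel corresponds to a dose of the order of ten millisieverts, which is consistent with the rule of thumb that risks start to matter at the mSv and MBq scales.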

As for the external dose, the radioactivity in some of the villages near the Fukushima nuclear site would result in an annual dose of about 70 mSv. This is high enough to pose a measurable threat to health, and is well in excess of the 20 mSv annual limit for radiation workers in the UK (in practice, occupational radiation doses are much less than this, since employers must ensure that doses are kept “as low as reasonably practicable”). If a worker is exposed to 20 mSv every year for their entire working life, experts estimate that it will increase that person’s risk of dying from cancer by about 0.1%, so exposure to 70 mSv per year increases the risk by about 0.4% – over and above the 20–25% chance we all have of dying from cancer.
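The risk figures in this paragraph follow from simple proportional scaling. The short Python sketch below reproduces the arithmetic, assuming (as the paragraph implicitly does) that excess risk grows linearly with annual dose; the constant and function names are our own.

```python
# Reference point quoted in the text: 20 mSv per year over a working
# life raises the risk of dying from cancer by about 0.1%.
REFERENCE_DOSE_MSV_PER_YEAR = 20.0
REFERENCE_EXCESS_RISK_PERCENT = 0.1


def excess_cancer_risk_percent(annual_dose_msv: float) -> float:
    """Excess risk in percent, assuming it scales linearly with annual dose."""
    return (REFERENCE_EXCESS_RISK_PERCENT
            * annual_dose_msv / REFERENCE_DOSE_MSV_PER_YEAR)


if __name__ == "__main__":
    # 70 mSv per year gives 0.35%, which the text rounds to about 0.4%.
    print(round(excess_cancer_risk_percent(70.0), 2))
```

Linear scaling is the simplest possible model, and it is used here only to show where the quoted percentages come from.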

However, few people are still living close to the Fukushima Daiichi site, and the dose rate falls off rapidly with distance from it. In major population centres such as Fukushima town, an additional dose of 1 mSv per year might be more typical, although the dose for an individual will depend heavily on diet and lifestyle factors – such as how much time a person spends near highly contaminated areas.

So in total, a person living in a “typical” village in Fukushima prefecture and eating food with the maximum allowed level of radioactive contamination may receive a dose that is roughly double that of the natural background in Japan. This sounds like a lot, but in fact it is less than the additional dose someone would receive by relocating from London to Cornwall, which has a higher natural background because of uranium-bearing ores in the rock. This is a risk that most people would probably be prepared to accept.

However, the fact remains that there are large quantities of radioactive caesium isotopes in the soil, which can be taken up by food grown in the soil, and results from studies around Chernobyl have shown that these isotopes tend to remain in the top 20 cm of soil for decades. A greater challenge than assessing the average or “typical” risk will be to check and control contaminated areas and foodstuffs to prevent higher levels of activity from affecting large populations. Some foreign governments have responded to this risk by carrying out their own measurements of foodstuffs imported from Japan. For example, the Hong Kong government has increased its food surveillance programme and last year measured 70,000 samples.

The view from the ground

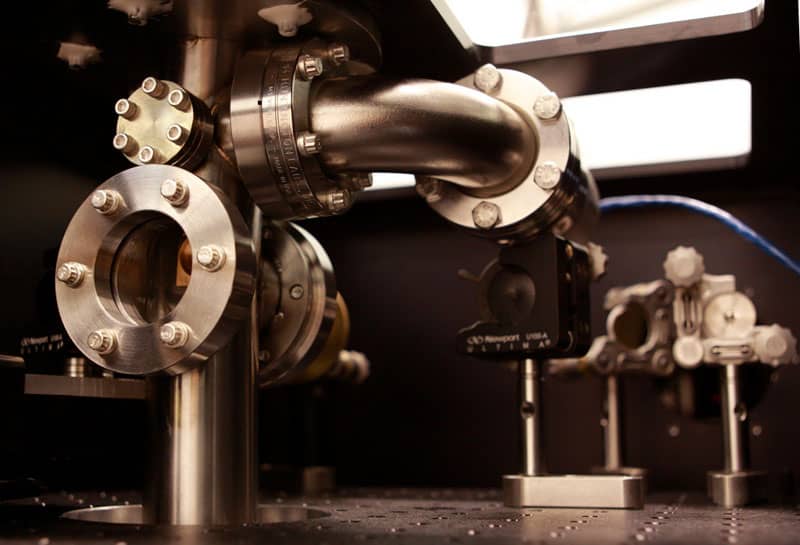

When we went to carry out checks on contaminated areas near Iitate, our task was made simpler by the fact that contamination from the Daiichi plant is almost entirely down to caesium-137 and caesium-134 isotopes, which are easy to detect using a technique called high-resolution gamma spectrometry (see “High-resolution gamma spectroscopy”). Moreover, advances in digital signal processing and software developments mean that such spectrometers can be operated by relatively inexperienced staff. In contrast, the Chernobyl disaster in 1986 involved a more complex mix of radionuclides, and the instrumentation available at the time was difficult to set up and run.

Another factor in our favour is that the accuracy of measured dose rates and radioactivity content is underpinned by a mature international measurement system. The starting point for all the measurements is a set of specialist instruments and techniques known as “primary standards”, which are held at national standards laboratories, such as the National Metrology Institute of Japan in Tsukuba and the National Physical Laboratory in the UK, and are used to measure dose rates and activity in terms of fundamental units. These primary standards are crosschecked against each other and are independent of any possible government interference. The primary standards are used to calibrate other instruments or reference materials in an unbroken chain that leads to the measurements themselves.

By and large, then, the raw measurements of the activity and dose rates should be accurate, thanks to historical work at national laboratories worldwide. The more difficult questions concern how these measurements are interpreted, and what authorities choose to do in response. Standard techniques for interpreting the results do exist thanks to work done primarily by the US Environmental Protection Agency, and the nuclear industry has adopted them for decommissioning plants and surveying contaminated land. But unfortunately, at the moment there is not enough information from the Japanese authorities in the public domain to assess how they are interpreting the measurements.

To understand why interpretations matter, consider the task of keeping radioactivity out of the food supply. At the time of our visit, the Japanese government was planning to check one sample of rice per hectare cultivated as part of its efforts to prevent contaminated foodstuffs from appearing in grocery stores. The question we often heard was “Surely this isn’t enough?” In fact, it depends on how the result is used. If a single result on a single sample of rice is used to decide whether that particular hectare of rice is below the legal limit, then no, it is not enough. Without further measurements, you cannot know the variation in the results and consequently how close one result is to the “true mean activity”. On the other hand, if that result is combined with others from many fields in a particular area, you can obtain a very accurate estimate of the true mean activity for the whole area – and thus be fairly sure whether food grown there is safe for humans.
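The statistical point can be illustrated with the standard error of the mean, which shrinks as more independent results are combined. The Python sketch below uses purely synthetic numbers in arbitrary units: the Gaussian spread, the "true mean" and the helper names are all assumptions made for illustration, not measurements from our visit or a description of the procedure used by the Japanese authorities.

```python
import math
import random
import statistics


def standard_error(values: list[float]) -> float:
    """Standard error of the mean: sample standard deviation / sqrt(n)."""
    return statistics.stdev(values) / math.sqrt(len(values))


def simulate_field_results(n_fields: int, true_mean: float,
                           spread: float, seed: int = 0) -> list[float]:
    """Synthetic one-sample-per-field results (hypothetical Gaussian scatter)."""
    rng = random.Random(seed)
    return [rng.gauss(true_mean, spread) for _ in range(n_fields)]


if __name__ == "__main__":
    # Arbitrary units; the values below are invented for the example.
    for n in (4, 25, 100, 400):
        results = simulate_field_results(n, true_mean=100.0, spread=30.0)
        print(n, "fields: mean =", round(statistics.mean(results), 1),
              "+/-", round(standard_error(results), 1))
```

A single result carries no information about its own scatter, whereas the uncertainty on the area-wide mean falls roughly as one over the square root of the number of fields sampled.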

In perspective

The problem of radioactive contamination of the environment around the Fukushima area is real, with levels of radioactivity in some places far in excess of the natural background. However, all the building blocks for limiting the risk to human health are available, in the form of quantified dose limits, accurate measurements with easy-to-use instrumentation and statistical techniques for interpreting the results. It remains to be seen how these resources will be deployed by the Japanese authorities and supported by a regulatory framework. Our impression from talking to our colleagues in Japan during our visit is that people have a palpable fear of radioactivity above and beyond the real risks, and this is bound to influence the authorities in the long term.

But regardless of the methods Japan decides to use to deal with the problem of radioactivity, the overriding impression we got from our visit is that the environmental consequences of the explosions at the Fukushima Daiichi plant are insignificant compared with the human cost of the natural disaster that preceded them. The tsunami killed more than 20,000 people and left 100,000 homeless. Large swathes of the coastal areas are still full of the foundations of former family homes, piles of building rubble and poignant sights such as lines of wrecked motorbikes in a disused school yard and small shrines to lost relatives. Such destruction is a very distressing and sobering sight, and puts into perspective our fears about radioactivity.