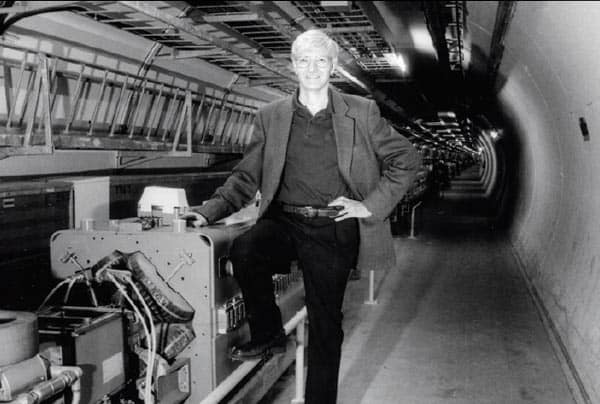

With his glasses and shock of thick, white hair, Chris Llewellyn Smith does not look like a superhero saving the world from peril. Yet in the public eye, the slim, 66-year-old physicist seems to be turning into something of a saviour. At least, that is the reaction he says he got when he recently moved house in Oxford. “I was quite surprised by my new neighbours’ knowledge of energy issues when they said ‘The world is relying on you to develop fusion!’”

Then again, Llewellyn Smith is certainly not your average physicist. During a career spanning nearly 50 years, he has held numerous high-level positions, notably director-general of CERN (see “A passion for particle physics”), provost and president of University College London (UCL), head of physics at Oxford University and director of the Culham site of the UK Atomic Energy Authority (UKAEA). Culham is home to both the UK fusion programme and the Joint European Torus (JET), currently the world’s leading fusion experiment.

Now officially retired, Llewellyn Smith is not putting his feet up. Instead, he is involved in the €5bn ITER fusion experiment currently being built in Cadarache, France, where he chairs the project’s council. He is also president of the Synchrotron-light for Experimental Science and its Applications in the Middle East (SESAME) in Jordan, and in December last year he became a vice-president of the Royal Society, a role in which he expects to brief the society’s president, Martin Rees, on energy issues.

As ITER chair, one of his functions is to advise the project’s director-general, Kaname Ikeda, on funding and strategy, but the role also involves advocating fusion as an alternative energy source, which has led Llewellyn Smith to give dozens of public lectures on energy. “The public seem to understand that nuclear fusion has the potential to provide essentially unlimited energy, in an environmentally responsible manner,” says Llewellyn Smith as we chat at the Rudolph Peierls Centre for Theoretical Physics in Oxford. “Having another major energy option would be enormously valuable.”

Star power

Nuclear fusion is the energy source that powers the Sun and the stars. Mimicking it on Earth involves heating and controlling a plasma of two hydrogen isotopes, deuterium and tritium (D–T), until it is so hot that the nuclei can overcome their mutual Coulomb repulsion and fuse, producing helium nuclei and 14 MeV neutrons. The idea for a fusion power station is then to capture the energy of the neutrons as heat, which would be used to boil water and drive a steam-powered electrical generator.
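To put the “14 MeV” figure in context, the short Python sketch below estimates how the energy released in a single D–T reaction is shared between the helium nucleus and the neutron. It is only an illustrative calculation: it assumes the reacting nuclei are essentially at rest, treats the products non-relativistically, and uses standard tabulated atomic masses rounded to six decimal places. The function name `dt_energy_split` is hypothetical.

```python
# Illustrative sketch: energy released in D + T -> He-4 + n and how it is shared.
# Assumptions: reactants at rest, non-relativistic products.
# Atomic masses in unified atomic mass units (u). Electron masses cancel,
# because both sides of the reaction carry two electrons' worth of mass.

U_TO_MEV = 931.494  # energy equivalent of 1 u, in MeV

M_D = 2.014102    # deuterium
M_T = 3.016049    # tritium
M_HE4 = 4.002602  # helium-4
M_N = 1.008665    # neutron


def dt_energy_split():
    """Return (Q, E_alpha, E_n) in MeV for D + T -> He-4 + n.

    The products fly apart with equal and opposite momenta, so their
    kinetic energies are inversely proportional to their masses.
    """
    q = (M_D + M_T - M_HE4 - M_N) * U_TO_MEV  # mass defect -> energy
    e_n = q * M_HE4 / (M_HE4 + M_N)           # neutron takes the larger share
    e_alpha = q * M_N / (M_HE4 + M_N)         # helium nucleus takes the rest
    return q, e_alpha, e_n


if __name__ == "__main__":
    q, e_alpha, e_n = dt_energy_split()
    print(f"Q = {q:.2f} MeV, helium = {e_alpha:.2f} MeV, neutron = {e_n:.2f} MeV")
```

Because the lighter neutron carries about four-fifths of the roughly 17.6 MeV released, most of the heat a power station could collect would have to come from the neutrons rather than from the helium nuclei.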

But it is not an easy task: the difficulty lies in sustaining a burning plasma for weeks at a time while getting out substantially more energy than you put in. There are currently two main approaches that could make it work: confining the plasma with magnetic fields, or “inertial confinement” using laser or particle beams. Magnetic confinement, which is how ITER will operate, is the more developed of the two and the more likely to be supplying fusion-generated electricity to the grid on a consistent basis by the middle of the century.

Yet the ITER project has endured a rough ride since 1985, when the four initial partners (the European Union, Japan, the former Soviet Union and the US) first agreed to build an experimental reactor to demonstrate the scientific and technical feasibility of fusion power. The latest setback came last year, when the reactor’s designers submitted a plan to upgrade the 2001 design. This change pushed ITER’s start-up date back by two years, to 2018, and has contributed to construction costs rising above €5bn, although Llewellyn Smith is unwilling to put a specific figure on the increase.

One of the main design changes involves a new method of controlling potentially damaging discharges of plasma onto ITER’s giant 1000 m² reactor wall. At fixed temperature, the fusion rate is proportional to the square of the plasma pressure. It was originally thought that the pressure falls off smoothly to zero at the edge of the plasma, but in the early 1980s physicists discovered a way of operating a fusion device in which the pressure drops very steeply at the edge, so that the pressure throughout the interior is raised by the “height” of this drop. The higher pressure boosts the fusion rate, but this mode of operation also produces instabilities at the plasma’s edge, known as “edge-localized modes” or ELMs, that spit globs of plasma onto the reactor wall.
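The statement that, at fixed temperature, the fusion rate goes as the square of the pressure follows from a short argument, sketched below under simplifying assumptions: a 50:50 deuterium–tritium mix with total ion density $n$, a quasi-neutral plasma (electron density $n_e = n$), and equal ion and electron temperatures $T$. Here $P_{\mathrm{fus}}$ is the fusion power per unit volume, $\langle \sigma v \rangle$ is the temperature-dependent reactivity and $E_{\mathrm{fus}} \approx 17.6$ MeV is the energy released per reaction.

```latex
\begin{align}
P_{\mathrm{fus}} &= n_{\mathrm{D}}\, n_{\mathrm{T}}\, \langle \sigma v \rangle\, E_{\mathrm{fus}},
  \qquad n_{\mathrm{D}} = n_{\mathrm{T}} = \tfrac{n}{2}, \\
p &= (n + n_e)\, k_{\mathrm{B}} T = 2 n k_{\mathrm{B}} T
  \;\Rightarrow\; n = \frac{p}{2 k_{\mathrm{B}} T}, \\
P_{\mathrm{fus}} &= \frac{n^2}{4}\, \langle \sigma v \rangle\, E_{\mathrm{fus}}
  = \frac{\langle \sigma v \rangle\, E_{\mathrm{fus}}}{16\, k_{\mathrm{B}}^2 T^2}\, p^2
  \;\propto\; p^2 \quad \text{at fixed } T.
\end{align}
```

Since $\langle \sigma v \rangle$ depends only on $T$, anything that lifts the pressure inside the plasma at a given temperature, such as a steep pressure drop confined to the very edge, raises the fusion power as the square of that pressure.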

The original 2001 design envisaged firing frozen pellets of deuterium into the plasma from outside the reactor to trigger many small ELMs rather than a few large ones. However, plasma physicists have since realized that this may not be enough to do the job completely, so the new design incorporates an additional way of taming ELMs: applying a weak, irregular magnetic field using small coils inside the reactor near the plasma edge. Accommodating the new coils means redesigning the inside of the reactor, and the snag is that this will cost much more than the original design.

The redesigns now need to be funded by ITER’s seven members (since 2001, China, India and South Korea have joined, and the US, which had pulled out, has rejoined). Llewellyn Smith points out that while the new design increases the cost, it is much more likely to achieve ITER’s goal. However, ITER’s price-tag has risen for other reasons too. “People hadn’t been careful enough in tracking the cost increases of commodities, which have gone up much more than general inflation, and they grossly underestimated the difficulty of setting up an international laboratory from zero,” he says. “When the initial costing was done, there were three parties in ITER, but now there are seven.” He points out that since all the members want to obtain technical know-how in a wide range of areas, construction of many of the components is being split between several different countries and companies, which adds to the cost.

Such delays have left critics repeating the well-worn phrase that fusion is always 30 years away. Indeed, Llewellyn Smith says it would not surprise him if there were yet more delays beyond the 2018 “first plasma” start date. “That date, of course, is a big public-relations goal,” he says, “but I think the emphasis on the first plasma is wrong.” This is because ITER will initially run only on hydrogen, to avoid activating (making radioactive) the magnets and walls; tritium will not be introduced until five or six years later. “The first plasma can be whenever you like as long as you don’t delay, or jeopardise, the success of the first D–T plasma,” says Llewellyn Smith. The first D–T plasma is officially planned for 2023, and he insists that any further redesigns or delays should avoid pushing this date back further than absolutely necessary.

Although Llewellyn Smith is confident that ITER will achieve its main goal of generating more power than it consumes, what if it does not work? “What might happen then would depend on why it failed,” he says. Whether governments would still be interested in pursuing fusion if ITER failed is a big question, but one that Llewellyn Smith thinks they will have to address. “When we see the lights go out as fossil fuels become increasingly scarce, people will think differently about investing in developing new energy sources,” he says.

Opening SESAME

Although ITER would seem a big enough job for someone in retirement, Llewellyn Smith has also, for the past few months, been president of the council of SESAME, the synchrotron being built in Jordan. The facility aims to foster science and technology in the Middle East and to use science to forge closer ties between researchers across the region (see Physics World April 2008 pp16–17, print edition only). Most of his time on this project is spent trying to raise the funding to complete SESAME, which will produce X-rays for a range of experiments from condensed-matter physics to biology.

Despite his initial reluctance and his lack of expertise in synchrotron science, Llewellyn Smith could see why he was suited to the role. “It needed a president from outside the region who is politically neutral,” he says, “but also someone who knows about running big science projects, and knows people in Brussels and bodies such as the Department of Energy in Washington and UNESCO.”

Now it is up to Llewellyn Smith to take the lead in finding the funding for the remaining piece of the jigsaw: the storage ring, which keeps the electrons circulating while they produce X-rays. Apart from Jordan itself, Germany and the UK are the biggest contributors to the project. Germany provided the injector system, which feeds electrons into the storage ring and is based on the old BESSY synchrotron in Berlin, while the UK donated some of the beamlines from the recently shut-down Synchrotron Radiation Source at the Daresbury Laboratory in Cheshire.

Llewellyn Smith is looking not only to SESAME’s members and the European Union but also to charitable organizations and philanthropists to fill the gap in these “capital costs”, which amounts to about $15m. On top of that, running costs will grow to $4–5m a year, putting further pressure on the tight science budgets of SESAME’s 10 member states, which include Israel, Iran and the Palestinian Authority. Despite the many potential stumbling blocks, Llewellyn Smith is hopeful that the synchrotron will be operational within five years.

Despite his prowess in running large research projects, Llewellyn Smith’s career has not always been rosy. He had a difficult time after leaving CERN to become provost and president of UCL in 1999. “I didn’t enjoy the job and you don’t do your best when you don’t enjoy it,” he admits. He also concedes that “problems such as how to restructure UCL’s faculties were not what I wanted to think about 24 hours a day”.

It is obvious that particle physics is Llewellyn Smith’s real passion, and indeed he is currently writing a book on the LHC with James Gillies, head of public relations at CERN. Rather than starting with the first chapter, they have already written the last one, entitled “Is it worth it?” As far as the LHC is concerned, Llewellyn Smith would undoubtedly say yes. Whether the same is true for ITER remains to be seen.

In person

Born: Giggleswick, Yorkshire, 1942

Education: University of Oxford (BA and DPhil)

Career: University of Oxford (1974–1998); director-general of CERN (1994–1998); provost and president of UCL (1999–2002); director of UKAEA Culham (2003–2008)

Family: married, one son, one daughter

Hobbies: reading and singing (having recently joined a choir)