Dark matter, the elusive substance that makes up most of the matter in the universe, may be far more complex than physicists had previously thought. Indeed, it may even be influenced by a hitherto unknown “dark force” that acts exclusively on dark matter particles. That’s the claim of a team of US cosmologists, who believe that their new theory of dark matter could explain the intriguing and anomalous results recently reported by several high-profile searches for the first direct evidence of dark matter.

Physicists believe that there is about five times more dark matter in the universe than normal matter — the latter being the familiar stuff that makes up planets and stars. While dark matter appears to interact via gravity and has a strong influence on the motion of massive objects such as galaxies, it does not interact with light and has proven very difficult to detect directly — let alone study in any detail.

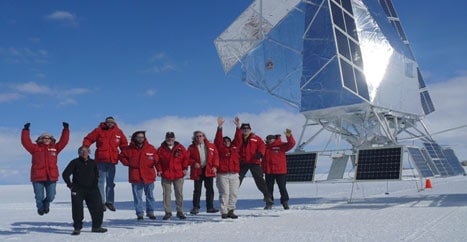

In recent years, several different experiments have found unusual results that might be linked to dark matter. Researchers operating a balloon-borne cosmic-ray detector called ATIC published a paper last week detailing an unexpected excess of electrons at energies of about 300–800 GeV. The results cannot be explained by standard models of cosmic-ray origin and propagation in the galaxy and instead suggest a nearby and hitherto unknown “source” of high-energy electrons.

Growing evidence of new physics?

Earlier this year it was revealed that the PAMELA satellite found an excess of positrons at around 10–100 GeV, which is also unexpected for high-energy cosmic rays interacting with the interstellar medium. And the INTEGRAL satellite discovered an unexpected excess of low-energy positrons at the galactic centre. While it is not yet clear whether these results are fully consistent with each other, they all seem to point to “new physics”.

One possibility is that these excess particles are caused by the annihilation of weakly interacting massive particles (WIMPs) — one of the leading candidates for dark matter. In theory, WIMPs can collide and annihilate each other, producing electron-positron pairs. However, the annihilation rate required to explain the observed excesses is far higher than expected from standard theories of dark matter.

Now, Douglas Finkbeiner at the Harvard-Smithsonian Center for Astrophysics and colleagues believe they may have an answer (arXiv:0810.0713). “If we believe dark matter annihilation may be the culprit for the ATIC and PAMELA excesses, we come straight to a couple of interesting conclusions,” explained Finkbeiner. “First, dark matter must annihilate to electrons or muons, either directly or indirectly, and second, it does so about 100 times more readily than expected. Both can be accomplished with a theory containing a new force in the dark sector.”

A new force and particle

According to Finkbeiner and his colleagues, their proposed new fundamental force is felt only by dark matter and is mediated by a new particle, “phi”, in much the same way that another fundamental force, electromagnetism, is mediated by photons. Crucially, the force is attractive: it draws slow-moving dark matter particles together much more effectively, leading to a greater annihilation rate than otherwise expected. Dark matter particles would collide and annihilate to produce phi particles, with each phi then decaying to produce the electrons, positrons and other particles observed by experiments.
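To get a feel for how an attractive force can boost annihilation at low speeds, the sketch below evaluates the textbook Sommerfeld enhancement factor in its simplest limit, that of a massless force carrier (a Coulomb-like potential). It is an illustration only and not the team's calculation: the article does not name this formula, the function name `sommerfeld_coulomb` and the coupling value `alpha` are assumptions chosen for illustration, and references differ by a factor of two in how they define the velocity.

```python
import math


def sommerfeld_coulomb(alpha: float, v: float) -> float:
    """Boost to the annihilation rate from an attractive Coulomb-like force.

    alpha -- hypothetical dark-force coupling strength (an assumed value)
    v     -- particle velocity in units of the speed of light (convention assumed)

    Uses S = x / (1 - exp(-x)) with x = pi * alpha / v.
    """
    if alpha <= 0 or v <= 0:
        raise ValueError("alpha and v must be positive")
    x = math.pi * alpha / v
    # -expm1(-x) equals 1 - exp(-x) but stays accurate when x is very small.
    return x / -math.expm1(-x)


if __name__ == "__main__":
    alpha = 0.03  # illustrative coupling, not taken from the paper
    for v in (1.0, 0.1, 0.01, 0.001):
        print(f"v = {v:g} c  ->  boost = {sommerfeld_coulomb(alpha, v):.2f}")
```

With these illustrative inputs the boost stays close to 1 for fast particles and grows roughly as 1/v for slow ones, which is the qualitative behaviour described above. A realistic treatment with a massive phi would make the enhancement level off at very low speeds, something this sketch does not capture.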

“This theory is partially a synthesis, but in bringing all the ideas together and realizing how simply they could fit together in a single framework, it is much more than just that,” says team member Neal Weiner of the Center for Cosmology and Particle Physics at New York University. “What’s remarkable is that we found how easily the different elements supported each other and can explain a number of different anomalies simultaneously.”

Others are more cautious. “The detections by ATIC and PAMELA are compelling but it is far too early to say that we have detected dark matter,” says Dan Hooper at Fermilab in Illinois. “Moreover, there are several possibilities to explain a dark matter signal and this is only one of them. There is no compelling reason why the universe has to be this way.”

Finkbeiner, however, is optimistic. “All we have given up is the relative simplicity of recycling the same old forces we already know about,” he says. “But we have no philosophical problem with this. Can we really expect to discover a whole new ‘dark sector’ of particles and not find any new forces at all?”

Call for better measurements

Hooper believes that the question can ultimately be resolved only with more precise measurements of the electron bump and more data to pin down what scientists are seeing. Finkbeiner and Weiner agree, pointing out that further astrophysical signals, dark matter scattering off nuclei in underground experiments and news from particle accelerators could all help to probe dark matter further.

“It will require many pieces, probably, to figure it out,” says Weiner. “We’re really just at the beginning of thinking about these things.”