Richard Muller’s new book Physics for Future Presidents: The Science Behind the Headlines is both fascinating and frustrating. On the fascinating side is the wide variety of poorly appreciated, presidentially useful facts he includes. For example, did you know that petrol has 15 times the energy of TNT per unit mass, and that just one of the 9/11 aircraft carried the energy equivalent of almost 1 kilotonne of TNT? No wonder that the terrorists’ planes did so much damage to a particularly vulnerable part of New York’s infrastructure. And did you know that because growing corn requires so much energy and fertiliser, ethanol fuel produced from corn in the US reduces greenhouse-gas emissions by only 13% compared with petrol, whereas ethanol produced from sugar cane in Brazil reduces emissions by 90%?
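The aircraft figure can be checked with one line of arithmetic. The sketch below assumes only the 15:1 energy ratio quoted above. The function names and the 60-tonne example fuel load are illustrative assumptions, not numbers taken from Muller's book.

```python
# Energy of petrol relative to TNT per unit mass (ratio quoted in the review).
PETROL_TO_TNT_RATIO = 15


def tnt_equivalent_tonnes(fuel_tonnes, ratio=PETROL_TO_TNT_RATIO):
    """Tonnes of TNT with the same chemical energy as the given fuel mass."""
    return fuel_tonnes * ratio


def fuel_needed_tonnes(tnt_tonnes, ratio=PETROL_TO_TNT_RATIO):
    """Fuel mass whose energy matches the given TNT mass."""
    return tnt_tonnes / ratio


if __name__ == "__main__":
    # Fuel needed to match a full kilotonne (1000 tonnes) of TNT.
    print(f"{fuel_needed_tonnes(1000):.1f} tonnes of fuel per kilotonne of TNT")
    # Hypothetical fuel load of 60 tonnes, chosen only for illustration.
    print(f"{tnt_equivalent_tonnes(60):.0f} tonnes of TNT for 60 tonnes of fuel")
```

On these assumptions, "almost 1 kilotonne" corresponds to a fuel load somewhat below 67 tonnes.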

Other facts are more fun but of less immediate presidential usefulness. In a section on space, Muller, a physicist at the University of California at Berkeley in the US, notes that if you plan to travel to a spot one light-year away, it makes a lot of sense to accelerate at 1g (the standard acceleration due to gravity at the Earth’s surface) up to the halfway point and then decelerate at about 1g for the rest of the way. The result is a pleasant near-1g of gravity in your spaceship throughout the whole journey, and a respectably relativistic average speed.
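The "respectably relativistic" claim can be checked with the standard special-relativity formulas for constant proper acceleration. The sketch below is not Muller's own calculation. It assumes exactly 1g on both legs, a start and finish at rest, and a Julian year of 365.25 days; the function name `flip_and_burn` is made up for this example.

```python
import math

C = 299_792_458.0            # speed of light, m/s
G = 9.80665                  # standard gravity, m/s^2
YEAR = 365.25 * 24 * 3600    # Julian year, s
LIGHT_YEAR = C * YEAR        # m


def flip_and_burn(distance_m, accel=G):
    """Accelerate for half the distance, then decelerate for the other half.

    Returns Earth-frame time, ship (proper) time, average speed and peak
    speed, with times in years and speeds as fractions of c.
    """
    half = distance_m / 2
    # Earth-frame time for one half: t^2 = (d/c)^2 + 2d/a
    t_half = math.sqrt((half / C) ** 2 + 2 * half / accel)
    # Ship time for one half: tau = (c/a) * acosh(1 + a*d/c^2)
    tau_half = (C / accel) * math.acosh(1 + accel * half / C**2)
    # Speed at the midpoint: v = a*t / sqrt(1 + (a*t/c)^2)
    v_peak = accel * t_half / math.sqrt(1 + (accel * t_half / C) ** 2)
    earth_time = 2 * t_half
    return {
        "earth_years": earth_time / YEAR,
        "ship_years": 2 * tau_half / YEAR,
        "avg_speed_c": distance_m / earth_time / C,
        "peak_speed_c": v_peak / C,
    }


if __name__ == "__main__":
    for name, value in flip_and_burn(LIGHT_YEAR).items():
        print(f"{name}: {value:.2f}")
```

Under these assumptions the trip takes a little over two years as measured on Earth and slightly less on board, with an average speed a bit under half the speed of light.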

Frustratingly, however, Muller has a tendency to talk down to his readers. In his book The First Three Minutes, the Nobel laureate Steven Weinberg wrote that he pictured his reader as a smart lawyer — not a physicist with Weinberg’s training, but an intellectual peer. Muller, unfortunately, does not follow this model. At one point he criticizes another physicist for being patronizing (“a common tone that some physicists affect”). Yet three pages later, after pointing out that you can warm your bedroom with a 1 kW electric heater for a dollar a night, he notes helpfully that “it does add up. A dollar per night is $365 per year”.

Later, the author explains that the “di” in carbon dioxide is due to the molecule having two oxygen atoms per carbon atom. Furthermore, he seems to feel that future presidents do not need to be comfortable with the metric system that is used all over the world, so in the book we do physics in degrees Fahrenheit, pounds and feet. Perhaps he needed this approach to make his course for non-scientists at Berkeley (which forms the basis for this book, and shares its name) so popular, but even the UK has largely abandoned imperial units. You may also want to take a moment to check the author’s facts. For example, his estimate of 450 feet for the blast radius from a 1 kilotonne nuclear weapon is less than the conventionally accepted value.

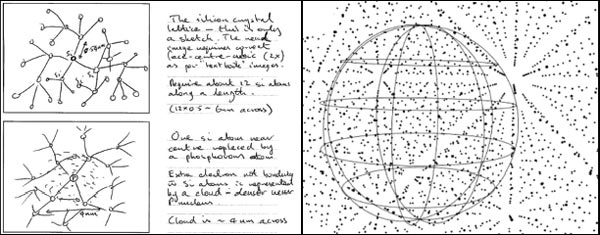

Such lapses are a pity, because there is valuable and fairly deep analysis in this book. For example, consider the linear hypothesis for how cancer deaths vary with radiation exposure. This hypothesis starts from what we know about cancer deaths due to high radiation levels and assumes that the risk of death from cancer is linearly proportional to the amount of radiation exposure, with no threshold below which radiation is harmless. On this basis, Muller calculates that the Chernobyl nuclear accident should have resulted in 4000 additional deaths from cancer.

This number of deaths is a terrible tragedy, comparable to 9/11. However, Muller points out that given that a fifth of all people die from cancer, the total additional cancer deaths due to Chernobyl are epidemiologically immeasurable against the background of hundreds of thousands of deaths that would have occurred naturally in the area he considered. The only cases that can be clearly attributed to Chernobyl are the cases of thyroid cancer, due to radioactive iodine.

Another possibility is that the body’s natural ability to repair cells makes low doses of radiation (below a certain threshold value) less lethal than the linear hypothesis indicates, or even completely safe. For now, however, epidemiological studies cannot help us to evaluate the linear hypothesis. There is not even full agreement on linear projections for Chernobyl. I found a 1996 study in the International Journal of Cancer that put the projection for Chernobyl at more than 15,000 cancer deaths in all of Europe through to 2065, against a background of several hundred million. A president who has to make decisions about the disposal of nuclear waste and the risks of nuclear terrorism has to determine policy in an atmosphere of scientific uncertainty. She or he should understand the issues at this depth.
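The difference between the linear hypothesis and a threshold model, and the reason a projected excess is hard to see against background, can be shown with a toy calculation. This is only a sketch: the dose units, the risk coefficient, the exposure groups and the 2.5 million population are hypothetical values chosen for illustration. Only the 4000 deaths and the one-in-five cancer fraction come from the discussion above, and the threshold model here is the crudest possible version (no risk at all below the threshold).

```python
def excess_linear(groups, risk_per_dose):
    """Linear hypothesis: deaths scale with total dose, however it is spread.

    groups is a list of (number_of_people, dose_per_person) pairs.
    """
    return sum(n * dose * risk_per_dose for n, dose in groups)


def excess_threshold(groups, risk_per_dose, threshold):
    """Simplified threshold model: doses below the threshold cause no deaths."""
    return sum(n * dose * risk_per_dose for n, dose in groups if dose >= threshold)


def relative_increase(excess_deaths, population, cancer_fraction=0.2):
    """Excess deaths as a fraction of background cancer deaths."""
    return excess_deaths / (population * cancer_fraction)


if __name__ == "__main__":
    # Hypothetical groups with the same collective dose (arbitrary units):
    # a few people with a high dose, and many people with a very low dose.
    groups = [(1_000, 1.0), (1_000_000, 0.001)]
    risk = 0.04  # hypothetical deaths per unit dose

    print("linear:   ", excess_linear(groups, risk))
    print("threshold:", excess_threshold(groups, risk, threshold=0.01))

    # 4000 projected deaths against a hypothetical population of 2.5 million.
    print("relative increase:", relative_increase(4000, 2_500_000))
```

In the linear model both groups contribute equally, because only the collective dose matters. In the threshold model the large, lightly exposed group contributes nothing. With the assumed population, the 4000 projected deaths amount to less than a 1% rise over background.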

Muller unfortunately does not dig as deeply when he discusses climate change. He starts by dismissing some of the arguments presented by former Vice-President Al Gore in the movie An Inconvenient Truth as cherry-picking or even distortion. He argues that loss of Antarctic ice contradicts the climate-model prediction of increased snowfall in that region. He also points out that hurricane damage in the US, when expressed in dollars adjusted for inflation, has not increased over the last century — unlike the chart in as-spent dollars that Gore shows in his film. On the other hand, Muller expresses support for the Intergovernmental Panel on Climate Change’s judgement that the 1 °F global warming in the last 50 years is very unlikely to stem from known natural causes alone. But then, frustratingly, he largely skips over the crucial questions of how severe the impacts of climate change are likely to be, and when and how vigorously we need to act. This is a critical linking step to policy decisions.

Personally, I think climate change could have a serious impact, and the time scale for action combines the qualities of a sprint and a marathon. It is a long-standing joke that when former President Bill Clinton went out to jog he would start slowly and then ease off — perhaps even stopping for a hamburger. On this issue we need to start quickly, and since we will need a rapidly growing supply of clean energy to reduce emissions while meeting growing demand, we actually need to speed up over time.

On specific solutions to the issues of energy and environment, there are some frustrating lacunae. The sections on wind turbines and solar energy, for example, fail to mention the problems that arise from the fact that the wind blows intermittently, and the Sun does not shine at night. The book also omits the fact that wind power, unlike solar energy, is already almost cost competitive with fossil-fuel energy sources.

When discussing coal, Muller fails to mention that carbon-sequestration schemes may have trouble finding suitable underground storage sites all over the world. Safety and public acceptance also pose questions. We know that carbon sequestration can cause small earthquakes. What will happen when one-third of a billion tonnes of carbon dioxide is buried near a power plant?

Although the book does present many of the complexities of nuclear power and nuclear weapons, it does not dig deeply enough into the risks of nuclear proliferation from the greatly expanded use of nuclear power. What would a world that burns two million kilograms of plutonium a year look like, when the Nagasaki bomb needed only six kilograms?

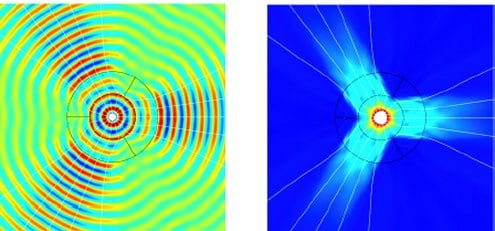

Meanwhile, as a nuclear-fusion researcher, I can confirm that Muller is right to say that fusion will not be putting electricity on the grid in 20 years, but he might be wrong to say that this is the key information a president needs to know on the subject. Overall, nuclear power presents very complicated questions, which require more depth and care than this book provides.

My own advice to aspiring future presidents (and to President-elect Obama) is that you should treat this book as a starting point for understanding how physics affects many issues of importance to society. But more importantly, you should appoint a presidential science advisor with great stature and perspective, and quickly put together a broad and respected Council of Advisors on Science and Technology. You provide the policy goals, these thoughtful scientific leaders provide accurate scientific information, and your staff help you with the analysis needed to bring the two together. The outcome of this interaction, as a House Science Committee staff member once suggested to me, is good decisions.