It’s easy to forget that until recently cosmology was largely a theoretical science. Thanks in particular to the Wilkinson Microwave Anisotropy Probe (WMAP), which was launched by NASA in 2001 to study the cosmic microwave background, researchers are now able to talk about the first instants of the universe with the kind of certainty normally associated with a bench-top experiment.

With the analysis of two further years of WMAP data announced last week, that view of the early universe has just got even more detailed. As well as placing tighter constraints on parameters such as the age and content of the universe, the five-year WMAP data provide new, independent evidence for a cosmic neutrino background. The detection of such low-energy neutrinos, wrote Steven Weinberg in 1977 in his famous book The First Three Minutes, “would provide the most dramatic possible confirmation of the standard model of the early universe” — yet at the time no-one knew how to detect such a signal.

Ghostly radiation

WMAP measures the cosmic microwave background: a cold blanket of photons which, according to the new data, hails from 375,900 years after the Big Bang (give or take about 3100 years). This was the moment when the universe had expanded, and thus cooled, enough for hydrogen atoms to form, allowing photons to escape what had previously been a dense plasma of charged particles. However, neutrinos, which interact much more weakly than photons, should have been liberated in the same way long before this “decoupling” of matter and radiation took place: just a second or two after the Big Bang. The result would be a shroud of even colder cosmic neutrino radiation.

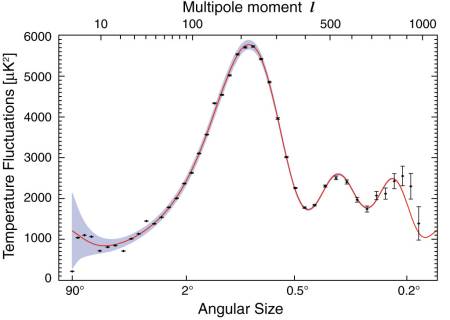

The first year of WMAP data, which was announced in February 2003, measured the tiny temperature fluctuations in the cosmic background photons (thought to have been produced by the same density perturbations in the primordial plasma that led to the formation of galaxies) in unprecedented detail. The observations were a huge success for the standard model of cosmology, which describes a flat, homogeneous universe dominated by dark matter (unidentified gravitating but non-luminous matter) and dark energy (a mysterious entity speeding up the expansion of the universe).

By March 2006, having collected three times as much data, the WMAP team had measured the polarization of the background photons. This provided rare, if not yet rigorous, constraints on models of inflation: a period of enormous expansion thought to have taken place 10⁻³⁵ seconds after the Big Bang, and a key component of the standard cosmological model.

The five-year data, which were collected between August 2001 and August 2006, determine the temperature fluctuations at small angular scales more precisely. In particular, the theoretical prediction of the third peak in the “angular power spectrum”, which shows the relative strength of the temperature variations as a function of their angular size, matches the data only if the very early universe was bathed in a vast number of neutrinos, which would have very slightly smoothed out the density perturbations. The neutrino background was first inferred in 2005 from WMAP data in conjunction with galaxy surveys, but this is the first time the incredibly faint signal has been measured from the cosmic microwave background alone.

As a result, the cosmic microwave background provides an independent estimate of the number of neutrino “families” in nature: 4.4 ± 1.5. Despite having been inferred from a completely different cosmological epoch, this value agrees both with constraints from Big-Bang nucleosynthesis (the first few minutes of the universe, during which light nuclei were manufactured) and with precision measurements at particle accelerators, which fix the number of families at three. The WMAP5 data also constrain the combined mass of all types of neutrino to be less than 0.61 eV.
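To see what “agrees” means in numbers, here is a rough worked check. It assumes the ± 1.5 is a symmetric, Gaussian 1σ uncertainty, which the figure above does not state explicitly, and compares the WMAP5 estimate with the accelerator value of three:

$$
\frac{4.4 - 3}{1.5} \approx 0.93\,\sigma
$$

A difference of less than one standard deviation is what is usually meant by statistical agreement. The large error bar is also why the microwave-background estimate cannot yet rival the accelerator measurements in precision.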

“The discovery of the neutrino background tells us that our models are pretty much right,” says cosmologist Pedro Ferreira of the University of Oxford. “Stuff from particle physics that you’re not putting in by hand just drops out of them — that’s pretty cool if you ask me.”

Polarizing results

The buzz surrounding the release of the three-year WMAP results in 2006 (the five-year release has been a lower-key affair) was partly due to their impact on inflation. By measuring the incredibly weak polarization signal of the photons, the WMAP3 data were able to tighten the limits on the “spectral index” of the fluctuations, nₛ. This is a central parameter in inflationary models which describes the slope of the angular power spectrum once its oscillatory features have been removed: the WMAP3 data favoured a “tilted” spectrum (nₛ < 1), which is a natural feature of simple inflation models. WMAP5 strengthens this picture: nₛ = 0.960 ± 0.014.
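How firmly does the five-year value favour a tilt? A back-of-the-envelope estimate, again assuming the quoted ± 0.014 is a Gaussian 1σ error (an assumption, not something the figure above states), measures its distance from the untilted value n_s = 1:

$$
\frac{1 - 0.960}{0.014} \approx 2.9\,\sigma
$$

On that reading, the WMAP5 spectral index sits roughly three standard deviations below an exactly scale-invariant spectrum.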

Another hallmark of inflation is gravitational waves, which would have been produced by quantum fluctuations and then blown up during inflation. “The five-year results put tighter limits on the gravitational wave amplitude,” says Gary Hinshaw of NASA’s Goddard Space Flight Center in Maryland, who heads the data analysis for the WMAP science team. “Now gravity waves can contribute no more than 20% to the total temperature anisotropy [corresponding to a “tensor–scalar ratio”, r = 0.2], as opposed to r = 0.3 for the three-year result.” The new combined limits on spectral index and gravitational waves rule out a swathe of inflation models.

A third big result from the five-year data concerns the origin of stars and galaxies. Because the polarization of the cosmic background photons is affected by scattering off free electrons in ionized gas, WMAP provides new insights into the end of the “cosmic dark ages”, when the first generation of stars began to shine.

“We now know that the first stars needed to come earlier than the first billion years in order to give a large enough polarization signal in the CMB,” says WMAP team member Joanna Dunkley. “There is evidence [from WMAP3] that this started at around 400 million years, but since quasars at later times indicate that the universe was still partly neutral at around 1 billion years, the lighting up process was likely quite extended.”

Weaving threads

Among the tens of other cosmological parameters that have been tightened by the new WMAP results are the age of the universe (13.73 ± 0.12 billion years) and its content, reinforcing the unsettling fact that 95% of the universe is made of stuff that science cannot explain. According to WMAP, neutrinos made up 10% of the universe at the time of recombination, atoms 12%, dark matter 63% and photons 15%, while dark energy was negligible. Today, by contrast, 4.6% of the universe is made of atoms, 23% dark matter, 72% dark energy and less than 1% neutrinos.
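The percentages above hang together, and a few lines of arithmetic show how. The sketch below, in Python, uses only the rounded WMAP figures quoted in this article; the dictionary and variable names are illustrative, and because of rounding the sums are approximate.

```python
# Rounded WMAP5 composition figures quoted in the article, in percent.
at_recombination = {
    "neutrinos": 10,
    "atoms": 12,
    "dark matter": 63,
    "photons": 15,
    "dark energy": 0,  # described as negligible at recombination
}
today = {"atoms": 4.6, "dark matter": 23, "dark energy": 72}

# The recombination-era shares should account for the whole universe.
total_recombination = sum(at_recombination.values())

# Dark matter plus dark energy: the "95% ... that science cannot explain".
unexplained_today = today["dark matter"] + today["dark energy"]

# What is left over today once atoms and the dark sector are counted;
# this is consistent with "less than 1%" for neutrinos.
remainder_today = round(100 - sum(today.values()), 1)

print(total_recombination)  # 100
print(unexplained_today)    # 95
print(remainder_today)      # 0.4
```

The leftover 0.4% is simply what the rounded figures leave unaccounted for, not an independent measurement of the neutrino share.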

“The WMAP5 data don’t change anything we thought, they change what we know,” says Hinshaw. “Despite the enigmas of dark matter and dark energy, the more different threads we can weave together [such as determining the number of neutrinos based on different mechanisms that occur at separate cosmic epochs], the more confident we become in the basic picture.”

• The five-year data are reported in seven papers submitted to The Astrophysical Journal