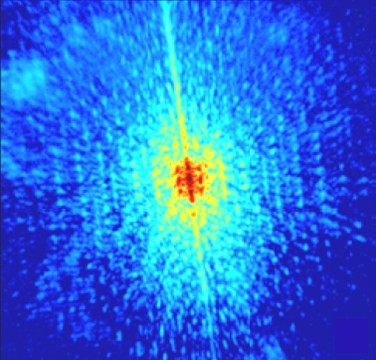

A TEM works by sending a focused beam of electrons through a thin sample and capturing the image on a detector on the other side. Such instruments can resolve far smaller objects than an optical microscope because the wavelength of the electrons is much shorter than that of visible light. A STEM is similar to a TEM except that the electron beam is scanned across the sample, which allows individual atoms to be imaged and identified.
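To give a sense of scale for the wavelength argument, the short sketch below computes the relativistic de Broglie wavelength of an electron for a given accelerating voltage. The voltages of 80 kV and 300 kV are an assumption, taken from the "80-300" in the Titan's model name; the function name is purely illustrative. Visible light, by comparison, has wavelengths of several thousand Angstroms.

```python
import math

# Physical constants (SI units, CODATA values)
H = 6.62607015e-34       # Planck constant, J s
M_E = 9.1093837015e-31   # electron rest mass, kg
Q_E = 1.602176634e-19    # elementary charge, C
C = 299792458.0          # speed of light, m/s


def electron_wavelength_angstrom(accel_voltage_volts):
    """Relativistic de Broglie wavelength of an electron, in Angstroms.

    Illustrative helper (not from the article): the electron is assumed
    to start at rest and gain kinetic energy e*V from the accelerating
    voltage V.
    """
    energy = Q_E * accel_voltage_volts  # kinetic energy in joules
    # Relativistic momentum: p^2 = 2*m*E * (1 + E / (2*m*c^2))
    momentum = math.sqrt(2 * M_E * energy * (1 + energy / (2 * M_E * C**2)))
    return H / momentum * 1e10          # metres -> Angstroms


# Assumed operating voltages, inferred from the "80-300" model name.
for kilovolts in (80, 300):
    wavelength = electron_wavelength_angstrom(kilovolts * 1e3)
    print(f"{kilovolts} kV: {wavelength:.4f} Angstrom")
```

Under these assumptions the wavelength comes out at a few hundredths of an Angstrom, far below the half-Angstrom resolution discussed here. This suggests that the practical limit is set by lens aberrations rather than by the wavelength itself.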

However, even electron microscopes struggle to resolve very small objects, and physicists have had great difficulty pushing their resolution below one Angstrom, which is smaller than the distance between individual atoms in a solid. One barrier to sub-Angstrom resolution is spherical aberration, which is the unavoidable blurring of images by the cylindrically symmetric lenses used to focus the electrons.

The TEAM instrument is based on FEI’s Titan 80-300 S/TEM microscope, which has been available commercially since 2005. TEAM reached half-Angstrom resolution using new aberration-correction technologies designed by CEOS and built into the microscope’s probe, sample stage and the region between the sample and the electron detector. These technologies were integrated with an aberration correction system that was already used on the electron lenses of the microscope.

The Titan was already the best in the world for TEM, having been able to study samples with a resolution of 0.7 Angstrom. The previous record for a STEM was 0.63 Angstrom set by a competitor’s microscope. While the move to half an Angstrom may not seem like a significant improvement, it had taken the partners in the collaboration three years to get there from 0.7 Angstrom.

According to Dominique Hubert, who is general manager of FEI’s NanoResearch division, reaching 0.5 Angstrom was particularly challenging because it was achieved in an instrument that can do both TEM and STEM. This, according to Hubert, required the researchers to overcome significant challenges in designing an instrument that is optimized to perform both types of microscopy.

TEAM was developed in FEI’s research and development lab in Oregon and will be installed later this year at the National Center for Electron Microscopy at Lawrence Berkeley National Laboratory. It could be in use by the third quarter of 2008 to study how atoms combine to form materials and how the growth of crystals and other materials is affected by external factors. Hubert told physicsworld.com that technology developed for TEAM will eventually be used in commercial Titan microscopes.

The researchers behind TEAM now want to correct for chromatic aberration, which is caused by electrons of different energies being focused to slightly different points by the microscope.