A soft, self-powered robot capable of swimming in the deepest regions of Earth’s oceans has been created by researchers in China. Inspired by the hadal snailfish, the team, led by Guorui Li at Zhejiang University, designed its device to feature flapping fins and decentralized electronics encased in a deformable silicone body. The team has successfully demonstrated the design in the Mariana Trench, and its innovations could lead to new ways of exploring some of the most remote regions of the oceans.

The scope of human exploration has extended to even the most inhospitable environments on land, but the deepest regions of Earth’s oceans remain almost entirely unexplored. At depths below 3000 m, the extreme pressures experienced by exploration vessels make it very difficult to design the robust electronic components required for onboard power, control, and thrust. If these components are closely packed together on a rigid circuit board, pressure-induced shear stresses can cause them to fail at their interfaces.

To overcome these challenges, researchers are seeking inspiration from the many organisms that thrive at such depths. In their study, Li’s team considered the hadal snailfish, which was recently discovered at depths exceeding 8000 m in the Pacific Ocean. These strange creatures have several features that give them high adaptability and mobility even at extreme pressures, including a distributed, highly deformable skull and flapping pectoral fins.

Decentralized electronics

Imitating these features, the researchers designed a pressure-resilient electronics system that could be fully encased in a soft silicone body. Taking their cue from the snailfish’s skull, they decentralized the components of this system, either by increasing the distances between components or by separating them into several smaller circuit boards. This reduced the maximum shear stresses at component interfaces by 17%, making the components far more resilient to extreme pressures.

To imitate the bird-like flapping fins of the snailfish, Li and colleagues designed artificial muscles using dielectric elastomers: rubber-like materials that convert electrical energy into mechanical work. By sandwiching a compliant electrode between two dielectric elastomer membranes, the researchers could generate flapping in two silicone films, which they supported using elastic frames.
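
For readers curious about how a dielectric elastomer turns voltage into motion, the standard textbook model treats the film as a soft capacitor: a voltage V applied across a film of thickness z squeezes it with an effective electrostatic (Maxwell) pressure p = ε0·εr·(V/z)². The Python sketch below evaluates that relation together with a crude linear-elastic estimate of the resulting thinning. It is a generic illustration, not the model used by Li’s team, and every number in it (5 kV, a 100 µm film, εr = 3, a 1 MPa Young’s modulus) is a hypothetical value chosen only for illustration.

```python
# Minimal sketch of the textbook dielectric-elastomer model (not the authors' model).
# All parameter values used below are hypothetical, chosen only for illustration.

VACUUM_PERMITTIVITY = 8.854e-12  # F/m


def maxwell_pressure(voltage_v: float, thickness_m: float, relative_permittivity: float) -> float:
    """Effective compressive pressure (Pa), p = eps0 * eps_r * (V / z)**2,
    exerted by the electrodes on an elastomer film of thickness z."""
    if thickness_m <= 0:
        raise ValueError("thickness must be positive")
    field = voltage_v / thickness_m  # electric field across the film, V/m
    return VACUUM_PERMITTIVITY * relative_permittivity * field ** 2


def small_strain_thickness_change(pressure_pa: float, youngs_modulus_pa: float) -> float:
    """Linear-elastic estimate of thickness strain (negative means thinning).
    Only meaningful when the strain is small."""
    return -pressure_pa / youngs_modulus_pa


if __name__ == "__main__":
    # Hypothetical film: 5 kV across 100 micrometres of silicone (eps_r ~ 3, E ~ 1 MPa).
    p = maxwell_pressure(5_000.0, 100e-6, 3.0)
    s = small_strain_thickness_change(p, 1.0e6)
    print(f"Maxwell pressure: {p / 1e3:.1f} kPa, thickness strain: {s:.3f}")
```

Because the pressure scales with the square of the electric field, doubling the voltage (or halving the film thickness) quadruples it, which helps explain why such actuators need a high-voltage supply like the amplifier the team carried on board.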

Li’s team tested the performance of their robot at the bottom of the Mariana Trench, some 10,900 m beneath the ocean surface. The device was powered by an onboard lithium-ion battery and fitted with a high-voltage amplifier, video cameras, and LED lights. Even at pressures exceeding 1000 atmospheres, it maintained a flapping motion for 45 min. Further tests in the South China Sea, alongside experiments in a pressure chamber, demonstrated that the device could swim freely and robustly at speeds exceeding 5 cm/s.
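
As a back-of-envelope check on the “exceeding 1000 atmospheres” figure, the absolute pressure at depth h is roughly P = P_atm + ρgh. The Python sketch below takes the 10,900 m depth from the article but assumes a constant seawater density of 1025 kg/m³ and g = 9.81 m/s²; both are assumptions, and because real seawater is slightly compressed at depth, the result is a modest underestimate.

```python
# Back-of-envelope hydrostatic pressure estimate.
# Assumes constant seawater density and gravity (both assumptions); real seawater
# becomes slightly denser with depth, so this slightly underestimates the pressure.

SEAWATER_DENSITY = 1025.0  # kg/m^3, assumed typical surface value
GRAVITY = 9.81  # m/s^2
ATMOSPHERE_PA = 101_325.0  # one standard atmosphere, Pa


def absolute_pressure_pa(depth_m: float, density: float = SEAWATER_DENSITY) -> float:
    """Surface atmospheric pressure plus the hydrostatic term rho * g * h."""
    return ATMOSPHERE_PA + density * GRAVITY * depth_m


def pressure_in_atm(depth_m: float) -> float:
    """Absolute pressure at the given depth, expressed in standard atmospheres."""
    return absolute_pressure_pa(depth_m) / ATMOSPHERE_PA


if __name__ == "__main__":
    depth = 10_900.0  # m, depth quoted for the Mariana Trench test
    print(f"{depth:.0f} m -> {absolute_pressure_pa(depth) / 1e6:.1f} MPa "
          f"~ {pressure_in_atm(depth):.0f} atm")
```

At 10,900 m this gives about 110 MPa, or roughly 1080 atmospheres, consistent with the figure quoted above; evaluating the same function at 3000 m gives roughly 300 atmospheres.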

Li and colleagues now hope that their design could be extended to enable more complex tasks, including sensing and communications. They plan to focus next on developing new materials and structures that enhance the intelligence, versatility, and efficiency of soft robots, further improving their ability to operate in extreme conditions.

The robot is described in Nature.

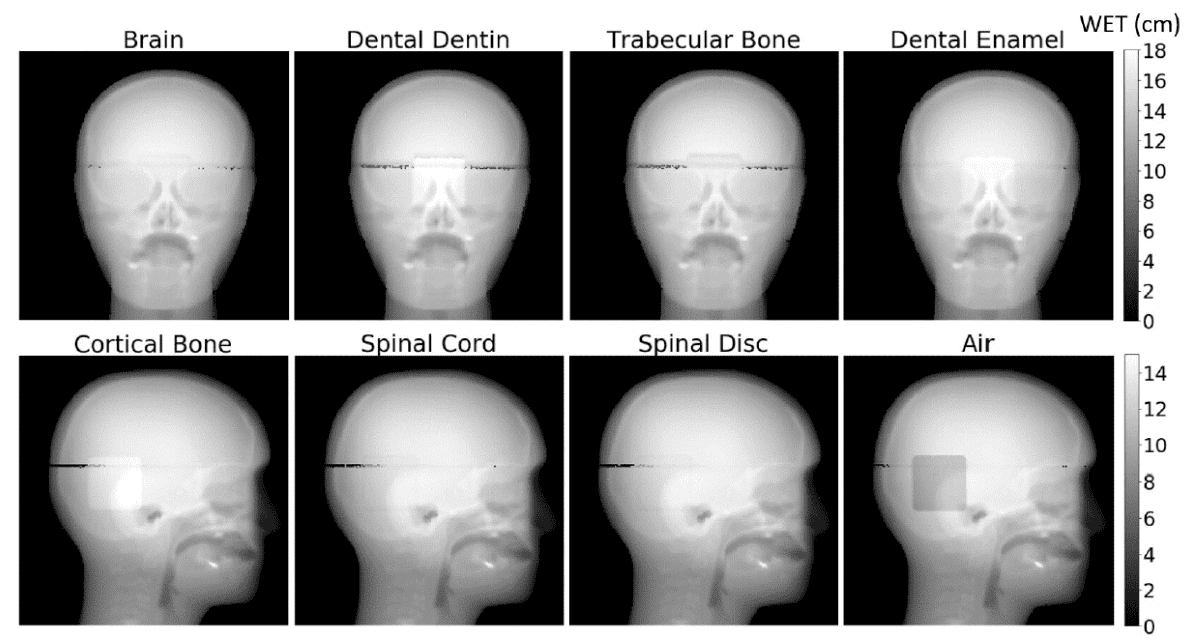
