The time it takes for an atom to quantum-mechanically tunnel through an energy barrier has been measured by Aephraim Steinberg of the University of Toronto and colleagues. The team observed ultracold atoms tunnelling through a laser beam, and their experiment provides important clues in a long-standing mystery in quantum physics.

Quantum tunnelling involves a particle passing through an energy barrier despite lacking the energy that classical physics says it needs to overcome the barrier. The phenomenon is not fully understood theoretically, yet it underpins practical technologies ranging from scanning tunnelling microscopy to flash memories.

There has been a long-running controversy about the length of time taken to cross the barrier – a process that cannot be described as a classical trajectory. This problem arises because quantum mechanics itself provides no prescription for it, explains Karen Hatsagortsyan of the Max Planck Institute for Nuclear Physics in Heidelberg, Germany. “Many definitions have been invented, but they describe the tunnelling process from different points of view”, he says, “and the relationship between them is not simple and straightforward.”

Angular streaking

Hatsagortsyan was involved in one of several recent experiments that looked at electrons escaping from atoms by light-induced ionization in a strong electric field – a process that involves the electrons tunnelling through a barrier as “wave packets” with a range of velocities. These experiments use a phenomenon called angular streaking, which establishes a kind of “clock” that can measure tunnelling with a precision of attoseconds (10⁻¹⁸ s).

Because the peak of the wave packet is produced by interference effects, its behaviour does not follow our classical intuitions: it can seem to move from one side of the barrier to the other faster than light, in defiance of special relativity. This is because “there is ‘no law’ connecting an incoming and an outgoing peak”, says Steinberg. “Even if the peak appears at the output before the input even arrives, that doesn’t mean anything travelled faster than light.”

The method does not, however, really correspond to any previously defined picture of what the tunnelling time is, says Alexandra Landsman of the Max Planck Institute for the Physics of Complex Systems in Dresden, who led some of the other “attoclock” ionization studies of tunnelling. Rather, it was a way to “pick out a ‘correct’ physical definition of tunnelling time among a number of competing proposals”, she says.

More controversy than consensus

But these experiments seem to have created more controversy than consensus – partly because it is not clear how to define the time at which tunnelling “starts”. Recently a team based mostly at Griffith University in Nathan, Australia, concluded from the same approach that particles might tunnel more or less instantaneously.

It may all be a question of definitions. “Sometimes”, says Steinberg, “there’s a quantity you can measure a number of different ways, and since they all give the same answer classically, we think these different measurements are probing the same thing.” But they’re not necessarily – in which case “two different measurements both of which we expected to reveal ‘the tunnelling time’ can have different results.”

But “even if there isn’t one tunnelling time, neither is there an infinite number of options”, Steinberg adds. “There are maybe two or three timescales, and we need to work to understand what each one describes.”

Steinberg’s team approached the problem by measuring a tunnelling time defined by a kind of internal clock in the particles themselves. The particles are a cloud of about 5000 to 10,000 ultracold rubidium atoms, propelled gently towards a barrier induced by a light beam.

Spinning clocks

The atoms each have a spin that, when placed in a magnetic field, will rotate (precess) at a known frequency, which serves as the ticking clock. The apparatus is arranged so that the particles will only experience an effective magnetic field inside the barrier itself. By measuring how much the orientation of the spin has changed when atoms exit the barrier, the researchers obtain a measure of how long the particles spent “inside” the barrier.
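The spin-clock idea can be sketched in a few lines: if a spin precesses at a known angular frequency while it is in the field, the rotation angle divided by that frequency gives the time spent there. The function name and the numbers below are hypothetical, chosen only for illustration, and are not the values used in the experiment.

```python
import math

def dwell_time_from_rotation(theta_rad, larmor_freq_hz):
    """Time spent in the field region, from theta = omega_L * tau.

    theta_rad      -- measured spin rotation angle in radians
    larmor_freq_hz -- precession (Larmor) frequency in hertz
    """
    omega_l = 2 * math.pi * larmor_freq_hz  # angular frequency, rad/s
    return theta_rad / omega_l

# Hypothetical illustration values (assumptions, not from the experiment).
larmor_freq_hz = 100.0  # assumed precession frequency
theta_rad = 0.39        # assumed measured rotation angle

tau = dwell_time_from_rotation(theta_rad, larmor_freq_hz)
print(f"Inferred time in the field region: {tau * 1e3:.2f} ms")
```

This simple proportionality assumes the spin rotates uniformly for the whole time it is in the field and not at all outside it.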

The experiment was proposed more than 50 years ago by two Russian physicists. Steinberg’s team implemented it using atoms that adopt a collective quantum state called a Bose-Einstein condensate (BEC). The atoms have relatively long quantum wavelengths of a micron or so, which means they can penetrate relatively wide barriers. The resulting passage times are long, about a millisecond, and can be measured precisely. “We want particles with a well-controlled starting state and a very long wavelength”, says Steinberg. “A BEC is an ideal way to produce this.”

“Honestly, when we started these experiments”, he adds, “partly I just wanted to see with our own eyes that a composite particle with 87 nucleons and 37 electrons [that is, rubidium atoms] could really tunnel all the way across a barrier 10,000 times larger than an atom itself”.

Laser tweezer

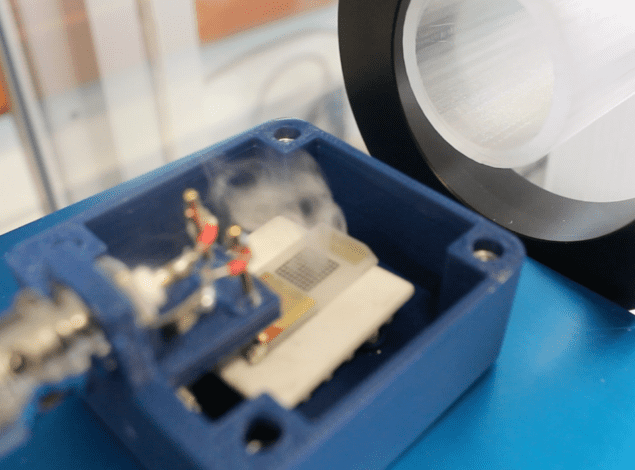

To create the barrier and have the atoms impinge on it, the team used two laser beams. “We Bose-condense them inside an attractive ‘laser tweezer’ beam, which acts as an optical waveguide”, says Steinberg. Then they use a magnetic field to give the atoms a little push towards the barrier, so that they move at a few millimetres per second.

The barrier is created by a blue laser beam, focused to be about a micron wide, with a frequency slightly greater than that of one of the resonances in the atoms. The atoms interact with this laser’s intense electromagnetic field. But “the atoms can’t keep up, oscillate out of phase with the field, and end up in a higher-energy state”, says Steinberg. This means that the blue laser acts as a repulsive potential, about 1.3 microns wide, through which just a few percent of the atoms can tunnel. To reduce the atoms’ random thermal motions, the researchers chill the system to a temperature of about 1 nK.
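To get a feel for the scales involved, the sketch below estimates the de Broglie wavelength of a rubidium-87 atom and the time a free particle would take to cross a 1.3 micron region. The speed of 4 mm/s is an assumption standing in for “a few millimetres per second”, and the free-flight time is only a classical yardstick, not a prediction of the tunnelling time.

```python
H = 6.62607015e-34       # Planck constant in J s (exact SI value)
AMU = 1.66053906660e-27  # atomic mass unit in kg
M_RB87 = 86.909 * AMU    # approximate mass of a rubidium-87 atom in kg

def de_broglie_wavelength(mass_kg, speed_m_s):
    """Wavelength lambda = h / (m * v) of a particle with the given speed."""
    return H / (mass_kg * speed_m_s)

def free_flight_time(width_m, speed_m_s):
    """Time a free particle at constant speed takes to cross a given width."""
    return width_m / speed_m_s

speed = 4e-3            # assumed atom speed in m/s ("a few mm/s")
barrier_width = 1.3e-6  # barrier width quoted in the article, in m

wavelength = de_broglie_wavelength(M_RB87, speed)
crossing = free_flight_time(barrier_width, speed)

print(f"de Broglie wavelength: {wavelength * 1e6:.2f} microns")
print(f"free-flight crossing time: {crossing * 1e3:.2f} ms")
```

With these assumed inputs the wavelength comes out at roughly a micron, comparable to the barrier width, which is the regime in which tunnelling through such a wide barrier becomes observable.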

Deducing the tunnelling time is then a matter of measuring how much the spin angles of the atoms have changed by the time they exit the barrier. In this way, says Steinberg, “we are probing the dwell time of transmitted atoms in the barrier.” They find that this is about 0.62 ms.

“This is a remarkable experiment,” says Hatsagortsyan. It is “especially nice because the quantity [tunnelling time] it measures is well defined”, says Landsman. “The findings may have practical implications for tunnelling devices, since the measured time seems to correspond to the time the electron actually spends inside the barrier”.

Steinberg adds that the technique could reveal something about the trajectory within the barrier itself. “We hope in the future to restrict our effective magnetic field to regions even smaller than the barrier”, he says, “so that when we look at the final spin, we’re measuring not how much time the atom spent somewhere ill-defined in the barrier, but in one particular region.” According to one theoretical description, he says, it looks as though a particle “appears on the far side without ever crossing the middle. This is what we’d like to test.”

The research is described in Nature.