The Planck mission gave us the most precise value of the Hubble constant to date by measuring the cosmic microwave background. But studies made since using different methods provide different values. Keith Cooper investigates the discrepancies and asks what they might mean for cosmology.

When astronomer Edwin Hubble realized that the universe is expanding, it was the greatest cosmological discovery of all time. The breakthrough acted as a springboard to learning about the age of the cosmos, the cosmic microwave background (CMB) radiation, and the Big Bang.

Hubble was the right man in the right place at the right time. In April 1920 his fellow astronomers Harlow Shapley and Heber D Curtis famously debated the size of the universe, and the nature of spiral nebulae, at the Smithsonian Museum of Natural History in the US. Within four years, Hubble had the answer for them.

Using the 100-inch (2.5 m) Hooker Telescope at Mount Wilson Observatory in California, Hubble was able to identify Cepheid variables – a type of star whose period–luminosity relationship allows accurate distance determination – in the spiral nebulae. The distances he measured showed that the nebulae lie far outside the Milky Way. The finding meant the Milky Way was not the entire universe, but just one galaxy among many.

Soon, Hubble had discovered that almost all of these galaxies are moving away from us – their light is redshifted via the Doppler effect. These observations were seized upon by the Belgian cosmologist Georges Lemaître, who realized that they implied that the universe is expanding. Independently, both Hubble and Lemaître derived a mathematical relationship to describe this expansion, subsequently known as the Hubble–Lemaître law. It says that the recession velocity (v) of a galaxy is equal to its distance (D) multiplied by the Hubble constant (H0), which describes the rate of expansion at the current time.

In the nearly 100 years since Hubble’s discovery, we’ve built up a detailed picture of how the universe has developed over time. Granted, there are still some niggles, such as the identities of dark matter and dark energy, but our understanding reached its peak in 2013 with the results from the Planck mission of the European Space Agency (ESA).

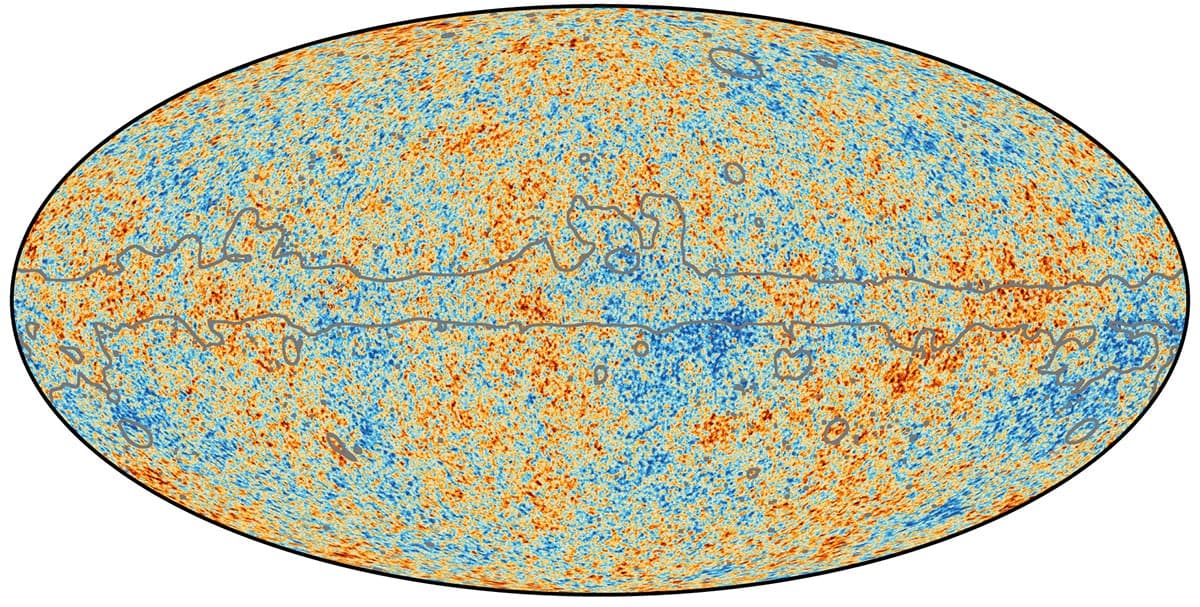

Launched in 2009, Planck used microwave detectors to measure the anisotropies in the CMB – the slight temperature variations corresponding to small differences in the density of matter just 379,000 years after the Big Bang. The mission revealed that only 4.9% of the universe is formed of ordinary baryonic matter. Of the rest, 26.8% is dark matter and 68.3% is dark energy. From Planck’s observations, scientists also deduced that the universe is 13.8 billion years old and confirmed that it is flat. They also showed how baryon acoustic oscillations (BAOs) – sound waves rippling through the plasma of the very early universe, resulting in the anisotropies – neatly matched the large-scale structure of matter in the modern universe, which we know has expanded according to the Hubble–Lemaître law.

Planck’s view of the CMB is the most detailed to date. Plus, by mapping our best cosmological models to fit Planck’s observations, a whole host of cosmological parameters have come tumbling out, including H0. In fact, scientists were able to extrapolate the value of H0 to the greatest precision ever, finding it to be 67.4 km/s/Mpc, measured to an uncertainty of less than 1%. In other words, every stretch of space a million parsecs (3.26 million light-years) wide is expanding by a further 67.4 km every second.
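To get a feel for these numbers, the Hubble–Lemaître law (v = H0 × D) can be evaluated directly. The short Python sketch below is illustrative only: the function names are invented for this example, and it ignores the peculiar motions of real galaxies, so it is meaningful only for the smooth expansion of relatively nearby space.

```python
# Hubble-Lemaitre law: v = H0 * D (illustrative sketch, not a cosmology library)
H0_PLANCK = 67.4  # km/s/Mpc, the Planck value quoted in the article


def recession_velocity(distance_mpc, h0=H0_PLANCK):
    """Recession velocity in km/s of a galaxy at distance_mpc megaparsecs."""
    return h0 * distance_mpc


def distance_from_velocity(velocity_km_s, h0=H0_PLANCK):
    """Invert the law: distance in Mpc for a given recession velocity in km/s."""
    return velocity_km_s / h0


# Print the velocity implied by the law at a few example distances.
for d in (1.0, 10.0, 100.0):
    print(f"D = {d:6.1f} Mpc  ->  v = {recession_velocity(d):8.1f} km/s")
```

Swapping in a different value for `h0` shows how directly the inferred velocity (or distance) depends on the constant.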

With Planck’s results, we thought that this picture was complete, and that we knew exactly how the universe had expanded over those 13.8 billion years. Yet it turns out that we may be wrong (see box “Not a constant constant over time”).

Not a constant constant over time

Describing H0 as “the Hubble constant” is a little bit of a misnomer. It is true that it is a constant at any given point in time since it describes the current expansion. However, the expansion rate itself has changed throughout cosmic history – H0 is just the current value of a broader quantity that we call the Hubble parameter, H, which describes the expansion rate at other times. Therefore, while the local measurement, H0, should only have one value, H can take on different values at different points in time. Prior to the discovery of dark energy, it was assumed that the expansion of the universe was slowing down, and hence H would be larger in the past than in the future. On the face of it, since dark energy is accelerating the expansion, you might therefore expect H to increase with time, but that’s not necessarily the case. Rearranging the Hubble–Lemaître law such that H = v/D, where v is an object’s recession velocity, we see that H depends strongly on distance D, since in a universe with accelerating expansion, the distance is increasing at an exponential rate. The bottom line is that H is likely to be decreasing with time, which is what we think is happening, even though the recession velocity and hence the expansion are still increasing with distance.

Moving parts

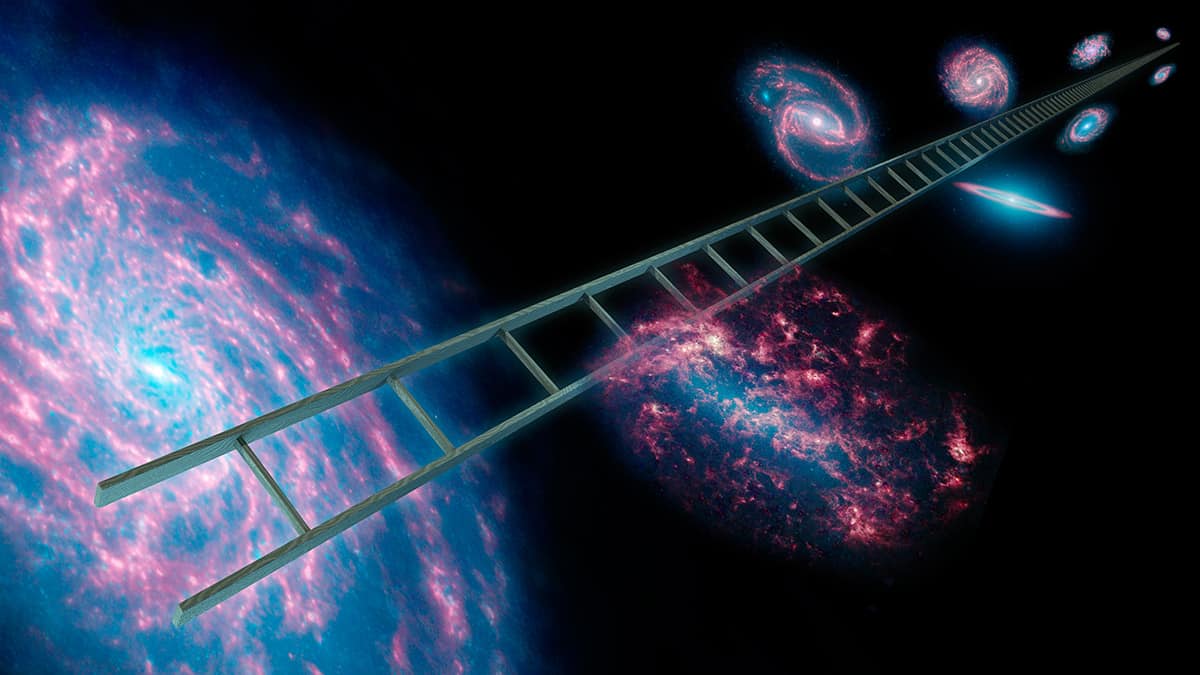

Traditionally, H0 is determined by measuring the distance and recession velocity of galaxies using “local” standard candles within those galaxies. These include type Ia supernovae – the explosions of white-dwarf stars with a certain critical mass – and Cepheid variable stars. The latter have a robust period–luminosity relationship (discovered by Henrietta Swan Leavitt in 1908) whereby the longer the period of the star’s variability as it pulses, the intrinsically brighter the star is at peak luminosity. But as astrophysicist Stephen Feeney of University College London explains, these standard candles have many “moving parts”, including properties such as stellar metallicity, differing populations at different redshifts, and the mechanics of Cepheid variables and type Ia supernovae. All the moving parts lead to uncertainties that limit the accuracy of these observations, and we have seen over the years how the resulting calculations of H0 can vary quite a bit.

On the other hand, Planck’s value of H0 is a relatively straightforward measurement – even if it does depend on the assumption that lambda cold dark matter (ΛCDM) cosmology – which incorporates the repulsive force of dark energy (Λ) and the attractive gravitational force of cold dark matter (CDM) – is correct. Still, when all the known uncertainties and sources of error are taken into account, Planck’s value of H0 is more precise than any before it, with an uncertainty of just 1%.

Although the Planck value of H0 is calculated from measurements of the CMB, it is important to note that it is not the expansion rate at the time of the CMB’s creation. Rather, “think of the CMB measurement as a prediction instead”, says Feeney. It extrapolates from what the CMB tells us the universe was like 379,000 years after the Big Bang, factoring in what we know of how the universe would have expanded in the time since based on the Hubble–Lemaître law and ΛCDM, to arrive at an estimate of how fast the universe should be expanding today. In other words, however we measure H0, whether it be with the CMB or more local measurements of Cepheid variables and supernovae, we should get the same answer.

A spanner in the works

In 2013, when the Planck measurement was revealed, this wasn’t a problem. Although local measurements differed from the Planck value, their uncertainties were still large enough to accommodate the differences. The expectation was that as the uncertainties became smaller over time with more sophisticated measurements, the local measured value would converge on the Planck value.

However, in 2016 a major milestone was reached in local measurements of H0 that brought our understanding of the universe into question. It involved Adam Riess of Johns Hopkins University, US, who in 1998 had co-discovered dark energy using type Ia supernovae as standard candles to measure the distance to receding galaxies based on how bright the supernovae appeared. He was now leading the cumbersomely named SH0ES (Supernova, H0, for the Equation of State for dark energy) project, which was set up to calibrate type Ia supernovae measurements to determine H0 and the behaviour of dark energy. In order to provide this calibration, the SH0ES team used a lower rung on the “cosmic distance ladder”, namely Cepheid variables. The project aimed to identify these pulsating stars in nearby galaxies that also had type Ia supernovae, which would mean that the Cepheid distance measurement could then be used to calibrate the supernova distance measurement, and this new calibration could in turn be used on supernovae in more distant galaxies. The method produced a value of H0 with an uncertainty of just 2.4%.

Riess and his group were stunned by their result, however. Using the local cosmic distance ladder, they inferred a value of H0 as 73.2 km/s/Mpc. With the vastly reduced uncertainties, there was no way this could be reconciled with Planck’s measurement of 67.4 km/s/Mpc. If these results are correct, then there is something deeply wrong with our knowledge of how the universe works. As Sherry Suyu of the Max Planck Institute for Astrophysics in Garching, Germany, says, “We may need new physics.”
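A rough way to see why the two numbers cannot be reconciled is to express their difference in units of the combined uncertainty. The sketch below assumes, purely for illustration, that both errors are Gaussian and independent, and it takes Planck’s uncertainty as a full 1% (the article quotes “less than 1%”, so the true tension is somewhat larger). The function name is invented for this example.

```python
import math


def tension_sigma(value_a, frac_unc_a, value_b, frac_unc_b):
    """Difference between two measurements in units of their combined 1-sigma error.

    Assumes independent Gaussian uncertainties, given as fractions of each value.
    """
    sigma_a = value_a * frac_unc_a
    sigma_b = value_b * frac_unc_b
    # Independent errors add in quadrature.
    return abs(value_a - value_b) / math.hypot(sigma_a, sigma_b)


# SH0ES (73.2 km/s/Mpc, 2.4%) versus Planck (67.4 km/s/Mpc, taken here as 1%)
shoes_vs_planck = tension_sigma(73.2, 0.024, 67.4, 0.01)
print(f"Tension: about {shoes_vs_planck:.1f} sigma")
```

With these inputs the gap comes out at roughly three standard deviations, which is why it is hard to dismiss as a statistical fluke.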

Rather than assume Planck’s high-precision H0 is wrong and therefore rush to take apart the fundamentals of our best cosmological models, a scientist’s first instinct should be to test whether there has been an experimental mistake that means something about our measurement of the cosmic distance ladder is awry.

“That’s where my instinct still lies,” admits Daniel Mortlock of Imperial College London, UK, and Stockholm University, Sweden. Mortlock works in the field of astro-statistics – that is, drawing conclusions from incomplete astrophysical data, and accounting for various kinds of uncertainty and error in the data. It’s worth remembering there are two types of error in any measurement. The first is statistical error – errors in individual measurements, for example read-out noise from the detector, or uncertainties in the sky background brightness. Statistical errors can be reduced by simply increasing your sample size. But the other kind of errors – systematic errors – aren’t like that. “It doesn’t matter if you have a sample five times, or 10 times, or 50 times as large, you just get this irreducible uncertainty,” says Mortlock. An example of a systematic error might be the reddening of a star’s light by intervening interstellar dust – no matter how often you measure the star’s brightness, the star’s light would always be obscured by the dust, and its effect would not diminish however many times you measure it.
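The difference between the two kinds of error can be illustrated with a toy simulation. In the Python sketch below, every number is made up for the purpose: a “true” brightness of 100 units, random noise of 5 units per measurement (the statistical error) and a constant dimming of 3 units standing in for dust (the systematic error).

```python
import random
import statistics


def simulate_mean(n, true_value=100.0, noise_sigma=5.0, bias=-3.0, seed=0):
    """Average n noisy measurements that all share the same systematic offset."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    samples = [true_value + bias + rng.gauss(0.0, noise_sigma) for _ in range(n)]
    return statistics.fmean(samples)


# The scatter of the mean shrinks as n grows, but the offset from 100 remains.
for n in (10, 1_000, 100_000):
    error = simulate_mean(n) - 100.0
    print(f"n = {n:>7}: mean is off by {error:+.2f}")
```

As the sample grows, the average settles ever more tightly around 97 rather than 100: the random scatter averages away, but the shared offset does not.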

Mortlock has considered that this could be what is happening with the local measurements of H0 – there may be some systematic error that astronomers have not yet identified, and if found, the tension between the Planck measurement and the local measurement of H0 could go away. However, Mortlock acknowledges that the evidence for the discrepancy in the H0 values being real “has been steadily growing more convincing”.

Einstein to the rescue

Presented with such an exceptional result, astronomers are double-checking by measuring H0 with other, independent means that would not be subject to the same systematic errors as the Cepheid variable and type Ia supernovae measurements.

One of these can be traced back to 1964, when a young astrophysicist by the name of Sjur Refsdal at the University of Oslo, Norway, came up with a unique way to measure the Hubble constant. It involved using a phenomenon predicted by Einstein but which at the time had not been discovered: gravitational lenses.

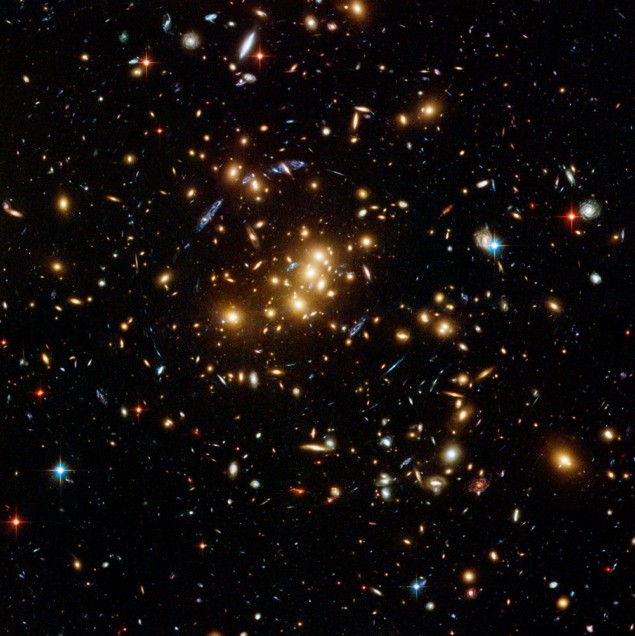

The general theory of relativity describes how mass warps space, and the greater the mass, the more space is warped. In the case of so-called “strong gravitational lensing”, massive objects such as galaxies, or clusters of galaxies, are able to warp space enough that the path of light from galaxies beyond is bent, just like in a glass lens. Given the uneven distribution of mass in galaxies and galaxy clusters, this lensing can result in several light paths, each of slightly different length.

Refsdal realized that if a supernova’s light passes through a gravitational lens, then its change in brightness would be delayed by different amounts in each of the lensed images depending on the length of their light path. So, image A might be seen to brighten first, followed a few days later by image B, and so on. The time delay would tell astronomers the difference in the length of the light paths, and the expansion of space during those time delays would therefore allow H0 to be measured.

Unfortunately, even after the first gravitational lens was discovered in 1979, it turned out that gravitationally lensed supernovae are exceptionally rare. Instead, quasars – luminous active galactic nuclei that also exhibit brightness variations – have been found to be the more common lensed object. That was what led Suyu to launch a project in 2016 to study lensed images of quasars in order to provide an independent measure of H0. It goes under the even more cumbersome name of H0LiCOW, which stands for H0 Lenses in COSMOGRAIL’s Wellspring, where COSMOGRAIL refers to a programme called the COSmological MOnitoring of GRAvitational Lenses, led by Frédéric Courbin and Georges Meylan at the École Polytechnique Fédérale de Lausanne.

Throughout this analysis, Suyu and team kept the final result hidden from themselves – a technique known as blind data analysis – to avoid confirmation bias. It was only at the very end of the process, once they had completed all their data analysis and with their paper describing their observations almost completely written, that they revealed to themselves the value of H0 that they had measured. Would it come out in favour of Planck, or would it boost the controversial SH0ES result?

The value that they got was 73.3 km/s/Mpc, with an uncertainty of 2.4%. “Our unblinded result agrees very well with the SH0ES measurement, adding further evidence that there seems to be something going on,” Suyu tells Physics World.

It’s still too early to claim the matter settled, however. The initial H0LiCOW analysis involved only six lensed quasars, and efforts are being made to increase the sample size. Suyu is also returning to Refsdal’s original idea of using lensed supernovae, the first example of which was discovered by the Hubble Space Telescope in 2014, followed by a second in 2016. Hundreds are expected to be discovered by the Vera C Rubin Observatory in Chile, formerly known as the Large Synoptic Survey Telescope, which will begin scientific observations in October 2022.

“It would be really interesting if the H0LiCOW measurements can be shown to be correct and in agreement with the Cepheid measurements,” says Feeney, who for his part is pursuing another independent measurement of H0, using another phenomenon predicted by Einstein: gravitational waves.

On 17 August 2017 a burst of gravitational waves resulting from the collision of two neutron stars in a galaxy 140 million light-years away triggered the detectors at the Laser Interferometer Gravitational-Wave Observatory (LIGO) in the US and the Virgo detector in Italy. That detection has allowed Feeney and a team of other astronomers including Mortlock and Hiranya Peiris, also of University College London, to revive an idea, originally proposed by Bernard Schutz in 1986, to use such events to measure the expansion rate of the universe.

The strength of the gravitational waves indicates how distant a neutron-star merger is, but the merger also produces a handy burst of light known as a kilonova. This can be used to pinpoint the host galaxy, the redshift of which in turn provides the galaxy’s recession velocity. Feeney and Peiris have estimated that a sample of 50 kilonovae would be required to derive an accurate determination of H0, but Kenta Hotokezaka of Princeton University, US, and colleagues have found a way to speed this up. They point out that we would see the gravitational waves from a neutron-star merger at their strongest if we looked perpendicular to the plane of the collision. The merger produces a relativistic jet that’s also moving perpendicular to the plane, so measuring the angle at which we see the jet will tell us how large our viewing angle is from the plane, and therefore allow a determination of the true strength of the gravitational waves and hence the distance. Hotokezaka estimates that a sample size of just 15 kilonovae studied in this fashion will be enough to provide an accurate assessment of H0. Unfortunately, so far astronomers have observed just one kilonova, and based on that sample of one, Hotokezaka calculates H0 to be 70.3 km/s/Mpc, with a big uncertainty of 10%.

Other approaches abound

Cepheid variables and type Ia supernovae are common rungs on the cosmic distance ladder that are used to find a local value for Hubble’s constant. But researchers led by Wendy Freedman of the University of Chicago have used another. They looked at the brightness of red giant stars that have begun fusing helium in their cores, to measure the distance to galaxies in which these stars can be seen. Initially they calculated H0 as 69.8 km/s/Mpc – but then things got complicated. Their data was reanalysed by Wenlong Yuan and Adam Riess to account for dust reddening, resulting in a revised measurement of 72.4 km/s/Mpc with an uncertainty of 1.45%. However, Freedman’s team have performed the same reanalysis and got a value of 69.6 km/s/Mpc, with an uncertainty of 1.4%, so the jury is still out on that one.

Meanwhile, the Megamaser Cosmology Project makes use of radio observations tracking water masers in gas orbiting supermassive black holes at the centres of distant galaxies. The angular distance that the masers traverse on the sky allows for a straightforward geometrical distance measurement to their host galaxy, producing a value of 73.9 km/s/Mpc for H0 with an uncertainty of 3%.
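One simple way to see what several independent measurements say together is an inverse-variance weighted mean, in which more precise results count for more. The Python sketch below applies it to four of the local values quoted in this article. It is an illustration, not a published combination: it assumes the measurements are independent with Gaussian errors, leaves out the disputed red-giant values, and uses names invented for this example.

```python
import math

# (label, H0 in km/s/Mpc, fractional uncertainty) as quoted in the article
LOCAL_MEASUREMENTS = [
    ("SH0ES", 73.2, 0.024),
    ("H0LiCOW", 73.3, 0.024),
    ("Megamasers", 73.9, 0.03),
    ("Kilonova", 70.3, 0.10),
]


def weighted_mean(measurements):
    """Inverse-variance weighted mean and its 1-sigma uncertainty."""
    weights = [1.0 / (value * frac) ** 2 for _, value, frac in measurements]
    total = sum(weights)
    mean = sum(w * value for w, (_, value, _) in zip(weights, measurements)) / total
    return mean, 1.0 / math.sqrt(total)


mean_h0, sigma_h0 = weighted_mean(LOCAL_MEASUREMENTS)
print(f"Combined local H0: {mean_h0:.1f} +/- {sigma_h0:.1f} km/s/Mpc")
```

Under these assumptions the combined value sits close to 73 km/s/Mpc, with the single kilonova contributing very little because of its large uncertainty.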

Changing the game

Taken together, all the available evidence seems to be pointing towards the dichotomy between the local measurements and the Planck measurement being real – and not some unidentified systematic error. However, a greater sample size is required before these results can be considered truly robust. With new observatories coming online, we could have the required observations within 10 years.

“If either the gravitational waves or the lensing methods give very strong results that match the SH0ES result, then I think that will change the game,” says Mortlock.

However, don’t expect a speedy resolution. After all, we’re still grappling with the nature of dark matter and dark energy, and current research is focused on trying to identify the dark-matter particle and trying to characterize the behaviour of dark energy. “Whatever is going on with the Hubble constant is still several steps behind those,” says Mortlock. “People are still debating whether the effect is real.”

One way or another, figuring out whether the discrepancy in our measurements of H0 is real or not will have significant repercussions for cosmology. Feeney describes the local and Planck values of H0 as “a really potent combination of measurements, because you are constraining the universe now and how it looked 13.8 billion years ago, so you pin down the universe at both ends of its evolution”.

The value of H0 will have many consequences. It will dictate the age of the universe (a higher H0 would mean the universe might be substantially younger than 13.8 billion years, which would contradict the age of some of the oldest stars that we know). It would also influence the history of how the universe expanded and allowed large-scale structure to form. And if new physics is needed, as Suyu suggests, then it’s impossible as yet to say how dramatic an effect that would have on cosmology, since we don’t yet know what shape that new physics might take.
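The link between H0 and age can be sketched with the “Hubble time”, 1/H0, which sets the rough timescale of the expansion. It is not the true age of the universe, which also depends on the mix of matter and dark energy, but it shows the direction of the effect. The constants and function name below are chosen for this illustration.

```python
KM_PER_MPC = 3.0857e19        # kilometres in one megaparsec
SECONDS_PER_GYR = 3.15576e16  # seconds in a billion (Julian) years


def hubble_time_gyr(h0):
    """Hubble time 1/H0 in billions of years, for H0 given in km/s/Mpc."""
    seconds = KM_PER_MPC / h0  # (km/Mpc) / (km/s/Mpc) = s
    return seconds / SECONDS_PER_GYR


# Compare the Planck and SH0ES values quoted in the article.
for label, h0 in (("Planck", 67.4), ("SH0ES", 73.2)):
    print(f"{label}: H0 = {h0} km/s/Mpc -> 1/H0 = {hubble_time_gyr(h0):.1f} Gyr")
```

Going from the Planck value to the SH0ES value shortens this timescale by more than a billion years.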

“It would be something in addition to our current ΛCDM model,” says Suyu. “Maybe we are missing some new, very light and relativistic particle, which would change Planck’s measurement of H0. Or it could be some form of early dark energy that’s not in our current model.”

Or it could be neither of these, but instead something we’ve not thought of yet. The prospect is tantalizing researchers, but Suyu warns about jumping the gun.

“First we need to get the uncertainties down to the 1% level on multiple methods to see if this tension is real,” she says. So we need to be a little bit patient, but if we come back in 10 years’ time, we may find that the universe is suddenly a very different place indeed.