Dunes of sand and other granular materials can be found in large fields on Earth, Mars and beyond. Dune fields tend to self-order into spatial patterns, but the physics underlying this self-organization is poorly understood. Now, Karol Bacik and colleagues at the University of Cambridge in the UK have shown that as dunes move, they repel dunes ahead of them via fluid turbulence, preventing collisions and stabilizing dune fields.

Theoretical models usually assume that dunes act as autonomous, self-propelled agents that exchange mass with other dunes either remotely – via air or water flow – or through collisions. But the latest research, described in Physical Review Letters, suggests that dunes can interact without exchanging mass.

Bacik told Physics World that the dynamics of dune fields are an intriguing and fundamental physical problem, “because we’ve got these large landscapes with isolated dunes, and the question is how would they evolve”. He adds that for their simplified experiment the team took the dune field apart and “did a pairwise interaction with two dunes only”.

Rotating paddles

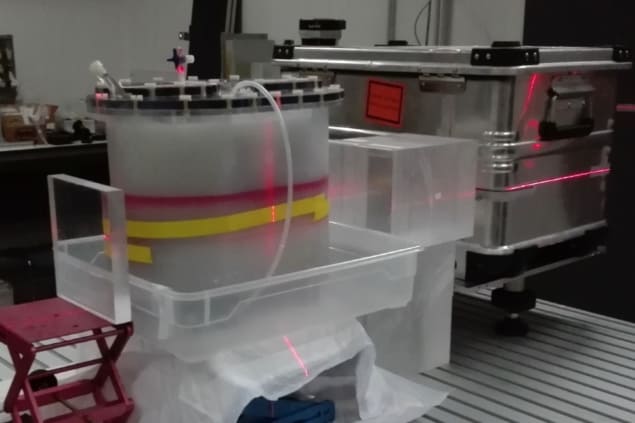

The researchers set up their two-dune system in a circular water-filled tank fitted with a set of rotating paddles submerged near the surface. Two cameras recorded changes in dune shape, sediment transport and water flow. To create the dunes, they placed two 2.25 kg piles of glass beads on the base of the tank (see video). When they rotated the paddles, the flow quickly shifted the piles into a characteristic dune shape – a steep downstream face and a shallow upstream face. The dunes then started to migrate downstream.

The researchers found that although the dunes had equal mass, they did not move at the same speed. The upstream dune – the one first hit by the flow – moved at a constant speed, but the downstream dune did not. Initially it moved faster than the upstream dune, but as the gap between the dunes increased it began to slow. Eventually an equilibrium was reached, with both dunes moving at the same speed.

Upstream wake

Analysis showed this dune-dune interaction was created by the fluid flow and the turbulent structures generated in the wake of the upstream dune. The wake created by fluid flowing over the upstream dune pushes the downstream dune away.

“It looks like repulsion, because this interaction means they space out, rather than the opposite, which would be that they collide,” Bacik says. He adds that although the interaction is there all the time, “the strength of this wake decays away from the dune, so as the downstream dune keeps escaping, the interaction is weaker and weaker”. And when the dunes initially shift, or there is a surge in the flow, the interaction increases and pushes the downstream dune a bit harder – “it gives it an extra kick”.
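The qualitative picture described here, a push that weakens as the gap grows, can be illustrated with a toy calculation. This is only a sketch and not the researchers' model: the exponential decay of the wake, the function name `simulate_gap` and all parameter values (`v_up`, `kick`, `decay_length`) are assumptions chosen for illustration, in arbitrary units.

```python
import math


def simulate_gap(gap0, v_up=1.0, kick=0.5, decay_length=2.0, dt=0.01, steps=5000):
    """Toy two-dune model (illustrative assumptions, not the published analysis).

    The upstream dune moves at constant speed v_up. The downstream dune gets
    an extra push from the upstream wake, assumed here to decay exponentially
    with the gap between the dunes.
    Returns a list of (time, gap, downstream_speed) tuples.
    """
    gap = gap0
    history = []
    for i in range(steps):
        # Assumed form: the wake-induced speed-up weakens as the gap grows.
        v_down = v_up + kick * math.exp(-gap / decay_length)
        history.append((i * dt, gap, v_down))
        # The gap widens at the difference between the two speeds.
        gap += (v_down - v_up) * dt
    return history


if __name__ == "__main__":
    run = simulate_gap(gap0=1.0)
    for t, gap, v_down in run[::1000]:
        print(f"t={t:5.1f}  gap={gap:5.2f}  downstream speed={v_down:5.3f}")
```

In this sketch the downstream dune starts out faster than the upstream one, the gap widens, and the speed difference shrinks towards zero, which mirrors the behaviour reported for the equal-mass dunes.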

The experiment revealed that dune-dune repulsion is a robust phenomenon. If the dunes were close enough to start with, dune separation occurred at all flow rates explored. It even persisted when the dunes were different sizes. When the researchers created a downstream dune that was 2.5 times larger than the upstream dune, they found that initially the smaller upstream dune migrated faster, but as the gap closed the wake-induced repulsion grew stronger and the larger downstream dune sped up. Again, the two dunes eventually began to move at the same speed.

Satellite images

Although the experimental dunes were underwater, Bacik is confident that dunes on land behave in the same way, with the interaction mediated by air turbulence. “Both gases and liquids are fluids from the physical point of view, so they obey the same equation,” he explains. Bacik adds that satellite images studied by other researchers suggest a similar interaction between desert dunes. These movements, however, occur over decades, not hours. “The bigger the dunes the slower they move,” he says.

Hans Herrmann, a physicist at ESPCI Paris, is not surprised by the findings, but is impressed by the work. He told Physics World, “I never had any doubt that there exists a hydrodynamic interaction between dunes irrespective if they are in water or in air. But it is extremely difficult to include such an interaction in a model. Therefore, all models do neglect them.” Herrmann adds that the work “is a beautiful experimental measurement of the consequences of these forces and thus a very useful and very important contribution”.

Next, the team hope to conduct a more thorough analysis of satellite images of dune fields to see how prominent this interaction is – and how it works – in more complex, real-world environments.