Optical sensors that work in the mid- to long-wavelength infrared part of the electromagnetic spectrum have a host of applications, including gas sensing, thermal imaging and detecting hazards in the environment. Such sensors are, however, costly, and they rely either on toxic mercury-containing compounds or on epitaxial quantum-well or quantum-dot infrared photodetectors that are difficult and time-consuming to fabricate. Researchers at the Institute of Photonic Sciences in Spain have now overcome these drawbacks by constructing a mercury-free colloidal quantum dot (CQD) device that can detect light across these difficult-to-access wavelengths. The new detector is based on lead sulphide (PbS) and is compatible with standard CMOS manufacturing technologies.

“Although previous PbS CQD photodetectors had shown compelling performance in the visible/short-wave infrared (VIS/SWIR) range, we now show that they can also cover the mid-wavelength/long-wavelength infrared (MWIR/LWIR) range,” explains team leader Gerasimos Konstantatos. “This makes PbS CQDs the only semiconducting material to cover such a broad spectral range.”

From interband to intraband transitions

CQDs are semiconductor particles only a few nanometres in size. They can be synthesized in solution, which means that CQD films can readily be deposited on a range of flexible or rigid substrates. This ease of manufacture makes them a cost-competitive, high-performance photodetector material that integrates readily with CMOS technologies.

PbS CQDs have recently emerged as a promising basis for detectors in the SWIR (1-2 µm) wavelength range. They do have a drawback, however, in that they rely on interband absorption of light. This means that incident photons excite charge carriers (electrons) across the electronic bandgap of the material (the energy difference between the bottom of the conduction band and the top of the valence band). The size of this bandgap thus places a lower limit on the photon energies at which the technology can operate.

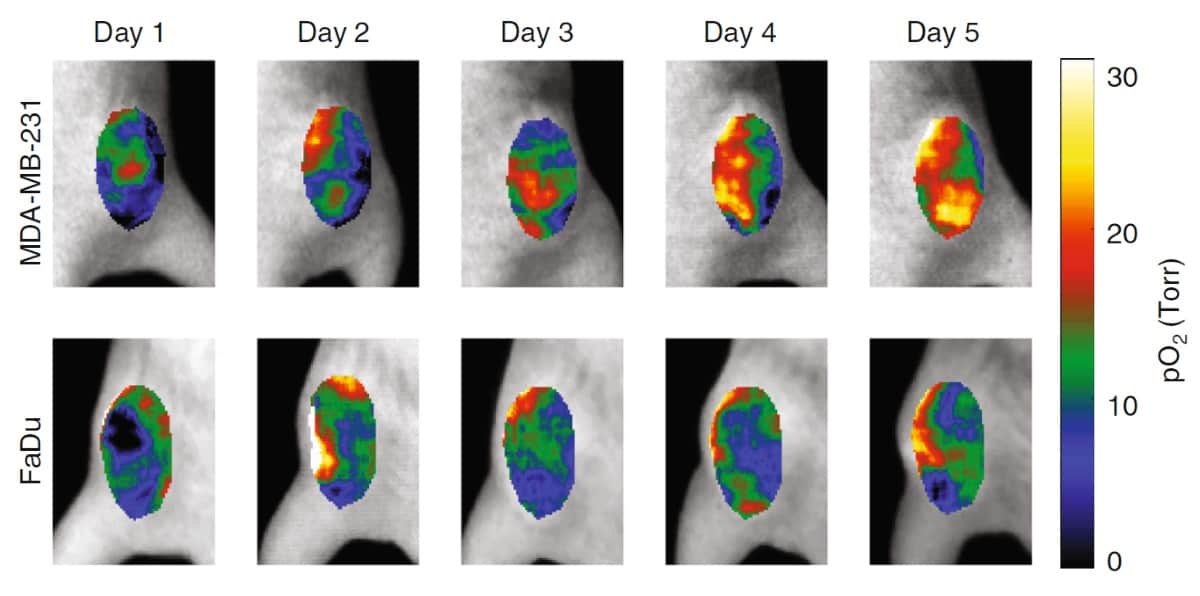

For PbS, this limit was thought to be 0.3 eV, but Konstantatos and co-workers have now reduced it to just above 0.1 eV by heavily doping their PbS CQDs with iodine. The presence of large amounts of iodine facilitates electronic transitions between higher excited states, known as inter-sub-band (or intraband) transitions, instead of interband ones. This makes it possible to excite electrons using photons with much lower energies than before, in the mid- to long-wavelength IR (5-10 µm) range.
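To see how these energy limits map onto wavelengths, the standard relation λ = hc/E can be evaluated directly. The short Python sketch below is illustrative only: the function name `cutoff_wavelength_um` is hypothetical, and the two energies are simply the round figures quoted above, not values taken from the team's measurements.

```python
# Physical constants (exact values under the 2019 SI definitions)
PLANCK_J_S = 6.62607015e-34         # Planck constant, J s
LIGHT_SPEED_M_S = 299_792_458.0     # speed of light in vacuum, m/s
ELECTRON_VOLT_J = 1.602176634e-19   # one electronvolt in joules


def cutoff_wavelength_um(energy_ev: float) -> float:
    """Return the wavelength (in microns) of a photon with the given energy (in eV)."""
    if energy_ev <= 0:
        raise ValueError("photon energy must be positive")
    wavelength_m = PLANCK_J_S * LIGHT_SPEED_M_S / (energy_ev * ELECTRON_VOLT_J)
    return wavelength_m * 1e6  # metres -> microns


if __name__ == "__main__":
    # Illustrative inputs: the two energy limits quoted in the article.
    for label, energy_ev in [("previous interband limit", 0.3),
                             ("new intraband limit", 0.1)]:
        print(f"{label}: {energy_ev} eV -> {cutoff_wavelength_um(energy_ev):.1f} um")
```

A limit of 0.3 eV corresponds to a cut-off wavelength of about 4 µm, whereas 0.1 eV corresponds to about 12 µm, which is why lowering the limit opens up the 5-10 µm range.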

First robust electron doping in PbS CQDs

The researchers’ doping method, which they developed in 2019, involves substituting iodine atoms for the sulphur in PbS using a simple ligand-exchange process. Konstantatos explains that this heavy doping means they can populate the first excited state of the conduction band (1Se) in the CQDs with charge carriers at a rate of more than one electron per dot. When they illuminate their material with low-energy light, these electrons are excited from the 1Se to the second excited state in the conduction band (1Pe). This modulates the material’s conductivity, rendering it sensitive to mid- and long-wave IR radiation.

In their most recent experiments, the researchers synthesized PbS CQD films using a wet colloidal chemistry technique, which creates a colloidal suspension of the dots in a liquid. They followed this with simple spin coating and a ligand exchange with iodine molecules. The ligand exchange occurs on the surface of the dots, and the resulting doping renders the CQD films conductive. “To our knowledge, this is the first time that robust electronic doping has been achieved in this material,” says Konstantatos.

Doping is more effective in larger dots because these contain more exposed sulphur atoms. Indeed, for dots smaller than 4 nm in diameter, the 1Se band is almost empty, while for dots with a diameter between 4 and 8 nm, heavy doping occurs and the 1Se band is partially populated. For dots bigger than 8 nm, the 1Se band is nearly completely filled with roughly eight electrons per QD. In such heavily doped QDs, the populated conduction band bleaches interband photon absorption and intraband absorption then becomes possible. This means that the larger the QDs, the longer the wavelength of IR they can absorb.
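The three size regimes described above can be summarized in a few lines of Python. This is only a rough sketch: the function name `doping_regime` is hypothetical, the 4 nm and 8 nm thresholds are the approximate figures quoted in this article, and treating dots of exactly 4 nm or 8 nm as "partially populated" is an assumption, since real dots will not switch regime abruptly.

```python
def doping_regime(diameter_nm: float) -> str:
    """Classify the 1Se occupancy of an iodine-doped PbS dot by its diameter.

    Thresholds (4 nm and 8 nm) are the approximate values quoted in the
    article; the handling of the exact boundary values is an assumption.
    """
    if diameter_nm <= 0:
        raise ValueError("diameter must be positive")
    if diameter_nm < 4.0:
        return "1Se almost empty"
    if diameter_nm <= 8.0:
        return "1Se partially populated"
    return "1Se nearly filled"


if __name__ == "__main__":
    # Illustrative diameters, one from each regime.
    for diameter in (3.0, 6.0, 10.0):
        print(f"{diameter} nm: {doping_regime(diameter)}")
```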

Using transmission measurements on two iodine-exchanged PbS QD samples, one heavily doped and the other undoped, the team confirmed that strong light absorption occurred at an interband (1Sh → 1Se) transition in the undoped sample and at a strong intraband (1Se → 1Pe) peak in the doped one. The intraband peak appears because the 1Se state in the doped sample is partially populated with electrons.

Towards hyperspectral imaging

Such ultrabroadband operation could make it possible to perform multispectral or hyperspectral imaging, which provides not only visual but also compositional (chemical) information on an object or scene, as well as its temperature, says Konstantatos. “Until now, this could only be achieved by using several image sensors utilizing different technologies, with the IR part being very expensive,” he tells Physics World. “With a CQD technology now covering the full range from the visible to the LWIR, low-cost broadband photodetectors may now be possible.”

The researchers, who report their work in Nano Letters, are now planning to improve the performance of their detectors. They would also like to achieve efficient intraband absorption in smaller dots.