The question in this headline has been on my mind since Friday, when I travelled to London for the annual UK National Quantum Technologies Showcase. The showcase is now in its fifth year, and I’m reliably informed that the first such event – which took place not long after the UK government released an initial £270m tranche of funding for R&D in applied quantum science – had a definite “community” flavour, with lots of exciting ideas but little in the way of anything concrete, never mind actual commercial products.

Now, however, the line between industry and community is starting to blur. Most exhibitors in the QE2 conference centre were showing off devices, rather than proposals or preliminary data. Take Elena Boto, a research fellow at the University of Nottingham. She and her colleagues have developed a wearable magnetoencephalography (MEG) scanner that uses optical magnetometers rather than bulky and expensive superconducting sensors to monitor magnetic activity in the brain. Their technology makes it possible to build an MEG device that fits over the head like a cycling helmet, and can scan people while they are moving. That’s useful for kids and adults with medical conditions that mean they cannot keep still, and also for studying the brains of people while they are doing motion-based tasks.

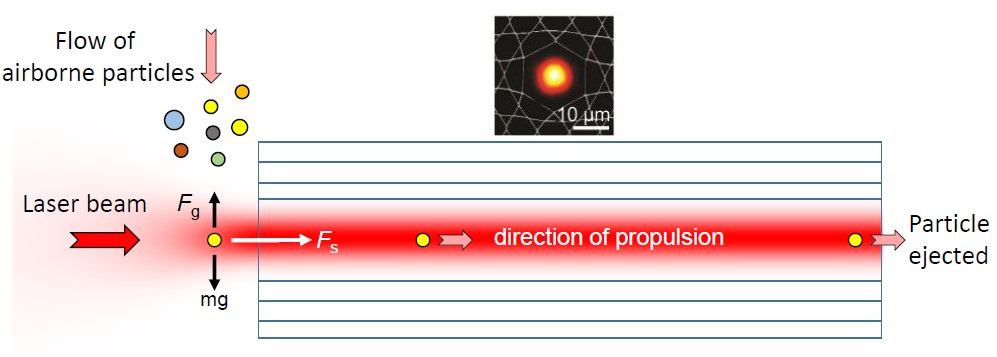

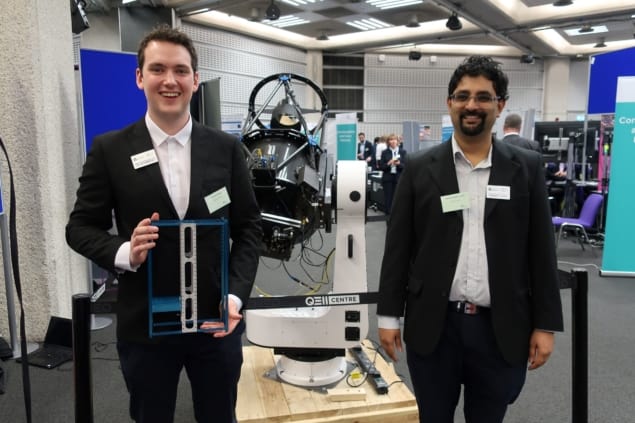

Another intriguing technology at the showcase, this time in the field of quantum communications, was the receiver for a satellite-based quantum key distribution (QKD) network. The receiver was built by members of the University of Bristol’s Quantum Engineering Technology Labs, and Siddarth Joshi, a senior research associate on the project, told me that the satellite part of this new secure-communications network is due to be launched in two to three years.

The quantum sensing sector is also seeing some notable advances. The device in the photo below is a quantum gravimeter made by M Squared Lasers, and it uses cold rubidium atoms to detect small changes in the local gravitational field. The M Squared team developed it in partnership with physicists at the University of Birmingham, and shortly before the showcase, they loaded it onto a barge and sent it around London’s waterways to see how it performed in the field. Sensors of this type could help utility companies detect underground pipes and other types of infrastructure without the need to dig, saving money and time while also making life easier for people who live or commute near sites undergoing maintenance.

But for all the progress on show in the crowded exhibit hall, there is still a big gap between exhibition-worthy gadgets and saleable products, and most of the devices on display fell squarely in the former category. M Squared’s chief executive, Graeme Malcolm, told me that his Glasgow-based firm plans to test its gravimeter with Shell, the multinational oil and gas company, to help refine its business model. Similarly, Diviya Devani, a systems engineer at Teledyne e2V, said that her company will begin trialling its miniature atomic clock with a defence firm, Leonardo, sometime in 2020. But near-commercial devices like these were very much the exception, not the rule, and the demographics of the exhibit hall reflected this. Around three-quarters of the booths were showcasing the work of researchers in university or government labs, not private companies.

The question of how to build a profitable industry on top of a successful research base came up repeatedly in a series of panel discussions at the showcase. For David Delpy, a veteran science administrator and current honorary treasurer at the Institute of Physics (which publishes Physics World), the missing ingredient is a “supply chain” of people with the right skills. “If you have an industry you need the equivalent of technicians and apprentices,” Delpy told the audience. “The EPSRC [Engineering and Physical Sciences Research Council] cannot fund that.”

Another panellist, Paul Warburton, an engineer and nanoelectronics expert at University College London, highlighted the need for more diversity in the field – not only in terms of race and gender, but also in disciplinary backgrounds. In Warburton’s view, the nascent quantum technologies industry would benefit from having more engineers and computer scientists, and perhaps fewer physicists. “All the science has been done in quantum technologies,” he declared. “The challenges for the next 5-10 years are all engineering problems.”

To my mind, though, the most perceptive suggestion came from Mike Muller. As the former chief technology officer of ARM Holdings, the semiconductor and software firm that he co-founded in 1990 and that was sold for £23.4bn in 2016, Muller is a self-described outsider in the quantum world. He based his advice, instead, on the rise of exascale computing – that is, supercomputers that can perform 10¹⁸ calculations per second. Building computers in this class required R&D in several fields, including fundamental physics. But instead of simply handing out grants to researchers, Muller said that the US government also acted as the first customer for their innovations. “Customers are wonderful things for any technology,” he observed. Because developers needed to deliver concrete advances to a paying customer, he explained, they had to make compromises rather than simply making their product better in the abstract. What is more, he added, “The act of buying something encouraged lots of people to work together.”

Over the next few years – and thanks to hefty injections of cash in several countries, not just the UK – I expect to see more quantum technologies move out of the lab and into the marketplace. For now, though, my verdict is that the field of quantum technologies exists in a superposition of industry and community, with some of the characteristics of each.