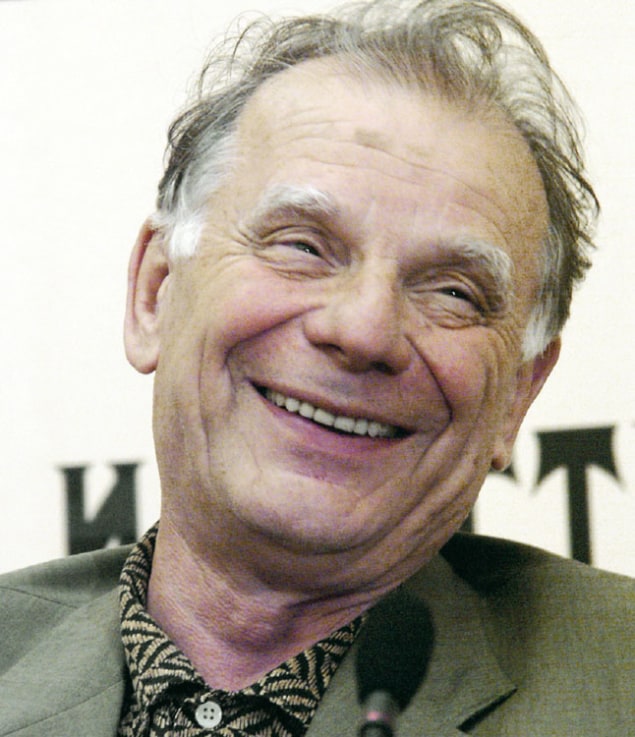

The first ever observations of chiral surface excitons have been made by Girsh Blumberg and colleagues at Rutgers University in the US. The team made their discovery after detecting circularly-polarized light emerging from the surface of a topological insulator. Their work could have a range of applications including lighting, electronic displays, and solar panels.

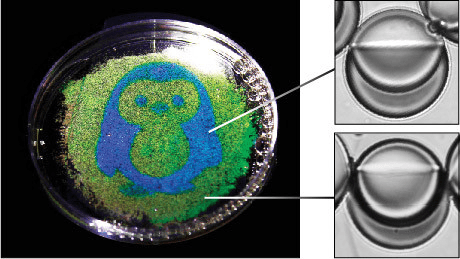

An exciton is a particle-like excitation that occurs in semiconductors. It comprises a bound electron-hole pair, which can be created by firing light at the surface of a semiconductor. After a short period of time, the electron and hole will annihilate and create a photon of photoluminescent light. Photoluminescence spectroscopy is an established technique for studying the electronic properties of conventional semiconductors and is now being used to study topological insulators – a family of crystalline materials with highly conductive surfaces and insulating interiors.

The surfaces of topological insulators contain 2D gases of Dirac electrons, which behave much like photons with no mass. Theoretical calculations suggest that when such electrons are excited, the resulting holes have mass. Until now, however, physicists have had scant experimental information about excitons comprising massless Dirac electrons and massive holes.

Spiralling electrons

In their study, Blumberg’s team looked at the surface of bismuth selenide, which is a well-known topological insulator. Using a broad range of energies to excite surface excitons, the physicists found that circularly-polarized photoluminescent light is emitted when the excitons decay. This, they say, indicates that the electrons are spiralling towards the holes as they recombine. Since spirals cannot be superimposed onto their mirror images, Blumberg’s team describe the quasiparticles as chiral excitons.


The researchers believe that this chirality is preserved as a result of strong spin-orbit coupling, which affects both electrons and holes. The coupling locks the spins and angular momenta of the electrons and holes together, which prevents the excitons from interacting with thermal vibrations on the surface – even at room temperature. Conventional excitons, by contrast, lose their chirality rapidly through thermal interactions and therefore do not emit circularly-polarized light.

The precise dynamics of chiral excitons are still not entirely clear. In future research, Blumberg’s team hopes to study the quasiparticles using ultrafast imaging techniques. The researchers also believe that chiral excitons may be found in materials other than bismuth selenide.

From a technological point of view, Blumberg and colleagues say that circularly-polarized photoluminescence could make topological insulators well suited to a wide variety of photonic and optoelectronic technologies. They say that bismuth selenide optical coatings would be easy to mass-produce, making them ideal for applications ranging from ultra-clear television screens to highly efficient solar cells.

The research is described in Proceedings of the National Academy of Sciences.