A cardiac hybrid imaging technique that fuses SPECT myocardial perfusion imaging (MPI) with coronary CT angiography (CCTA) scans can help predict major adverse cardiac events in patients suspected of having coronary artery disease, according to a study published in Radiology.

In a retrospective study, researchers from Switzerland used software-based cardiac hybrid image fusion to analyse patients who underwent CCTA and SPECT MPI exams. They discovered that patients with abnormal findings on cardiac hybrid imaging had a significantly greater risk of experiencing a major adverse cardiac event, including death (Radiology 10.1148/radiol.2018171303).

The comprehensive assessment of coronary artery disease offered by cardiac hybrid imaging may optimize treatment decision-making and minimize unnecessary invasive intervention, senior author Philipp Kaufmann, chair of nuclear medicine and director of cardiac imaging at University Hospital Zurich, told AuntMinnie.com.

“For risk stratification, a hybrid image confers more information than any other modality, particularly in those with a pathologic finding,” he said. “The most important implication for evaluation of known or suspected stable coronary artery disease is that [cardiac hybrid imaging] allows patients to be evaluated non-invasively.”

Two modalities are better than one

Although recent research has confirmed the high diagnostic yield of CCTA for obstructive coronary artery disease, its adoption as a first-line test has been slow. A drawback of CCTA is its limited ability to identify haemodynamically relevant stenoses, which may partly explain its considerably lower usage rate compared with MPI. On the other hand, nuclear stress testing via SPECT MPI or PET MPI is specifically geared toward identifying ischemia, but it often overestimates the need for invasive procedures.

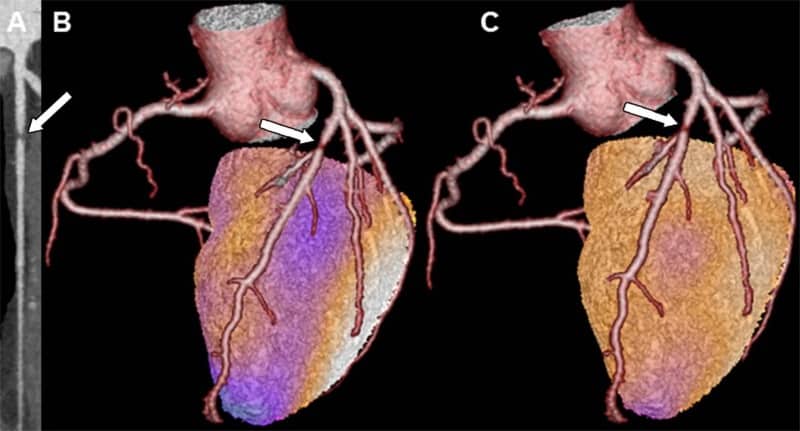

One method that may compensate for the shortcomings of each individual imaging modality is cardiac hybrid imaging, which fuses images from CCTA and MPI and provides the anatomic and functional information of both at once, Kaufmann noted. Several studies have demonstrated the technique’s increased diagnostic value over examining images from each modality alone or side by side.

“Particularly in patients with multiple lesions or complex coronary anatomy, it is, in many cases, very difficult to correctly identify the culprit lesion,” he said. “Only a comprehensive assessment of both pieces of information with hybrid imaging allows [physicians] to correctly assign a coronary artery with a lesion to the ischemic territory.”

Exploring the prognostic potential of cardiac hybrid imaging, Kaufmann and colleagues evaluated patients who underwent both CCTA and SPECT MPI at their institution between May 2005 and December 2008. To fuse the MPI and CCTA datasets, they used cardiac imaging fusion software running on a postprocessing workstation (CardIQ Fusion; Advantage Workstation 4.3, GE Healthcare).

They separated the resulting hybrid imaging data into three distinct categories:

- Cases with both 50% or greater stenosis on CCTA and evidence of ischemia on MPI suggesting coronary artery disease

- Cases with either stenosis on CCTA or ischemia on MPI, but not both

- Cases with normal findings on CCTA and MPI
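The three-way grouping above can be written as a short rule. The following is only an illustrative sketch, not the researchers' software: the function and category names are hypothetical, it assumes the 50% stenosis threshold applies to every category, and it ignores whether the ischemic territory spatially matches the stenosed vessel, which the fused image is meant to show.

```python
from enum import Enum


class HybridFinding(Enum):
    """Hypothetical labels for the three categories described in the article."""
    BOTH = "stenosis on CCTA and ischemia on MPI"
    ONE = "stenosis on CCTA or ischemia on MPI, but not both"
    NORMAL = "normal CCTA and MPI"


# Threshold taken from the article: 50% or greater stenosis on CCTA.
STENOSIS_THRESHOLD_PCT = 50.0


def classify(max_stenosis_pct: float, ischemia_on_mpi: bool) -> HybridFinding:
    """Assign a case to one of the three groups.

    Assumption: max_stenosis_pct is the most severe stenosis seen on CCTA,
    and ischemia_on_mpi is a simple yes/no reading of the SPECT MPI exam.
    """
    stenosis = max_stenosis_pct >= STENOSIS_THRESHOLD_PCT
    if stenosis and ischemia_on_mpi:
        return HybridFinding.BOTH
    if stenosis or ischemia_on_mpi:
        return HybridFinding.ONE
    return HybridFinding.NORMAL
```

For example, under these assumptions `classify(70, True)` falls in the first group, `classify(70, False)` and `classify(20, True)` in the second, and `classify(20, False)` in the third.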

The researchers compared the effectiveness of each scenario for predicting major adverse cardiac events, including death, heart attack, unstable chest pain and coronary revascularization. In all, there were 160 cardiac events recorded in the study population within the 10-year follow-up period.

Long-term prognostic value

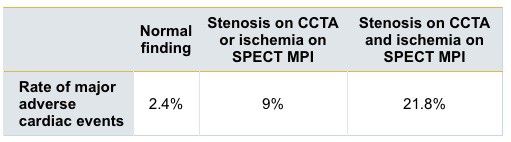

Among 375 patients, cardiac hybrid imaging showed that 46 had both 50% or greater stenosis and ischemia, 113 had only one or the other finding, and 216 had entirely normal findings.

The group found that a matched finding, i.e., indicating both stenosis and ischemia, was associated with more than five times the risk of a major adverse cardiac event compared with normal findings. The presence of only one of the abnormal findings was associated with over three times the risk of a cardiac event compared with normal findings.

The results consistently demonstrated that patients whose cardiac hybrid imaging data identified both stenosis and ischemia had a considerably worse outcome than patients with either one of the signs alone or neither of the abnormal signs. Patients with altogether normal imaging test results had a very favourable long-term prognosis.

These findings confirm the excellent risk stratification ability of cardiac hybrid imaging in patients who are suspected of having coronary artery disease, Kaufmann said. They also support the use of CCTA as an initial, non-invasive evaluation of such patients, followed by MPI only for patients with abnormal CCTA results.

“We should start with a coronary CT angiography exam and, if normal, we can stop testing there,” he said. “But if there is a lesion, we should assess ischemia with a nuclear scan (SPECT or PET MPI), and if there is an ischemia, we should take full advantage of both modalities by fusing the results together to make a hybrid image.”

Radiologists may be key

Ultimately, the extensive assessment of coronary artery disease provided by cardiac hybrid imaging optimizes treatment decision-making and minimizes unnecessary invasive angiographies, according to the authors. What’s more, using this technique could potentially improve the low yield of diagnostic invasive coronary angiography and facilitate evidence-based coronary interventions.

A conspicuous limitation of cardiac hybrid imaging is that it requires an increase in effective radiation dose (roughly 10 mSv in all) because it involves two imaging exams rather than just one, the authors noted. However, clinicians may be able to lower this elevated radiation dose by applying reconstruction algorithms.

Another major barrier seems to be the limited knowledge of guidelines and proper implementation of methods for evaluating patients with stable coronary artery disease, Kaufmann said.

“Unfortunately, structures of hospitals do not always facilitate hybrid imaging, because CT is ‘owned’ by one department and SPECT or PET by another, which may be an obstacle for the combination of datasets from different modalities,” he said. “Radiologists may be key in helping clinicians to refer to the best non-invasive test by knowing the technical and clinical guidelines in general (and not only our own for radiology), and by being involved in the multidisciplinary boards.”

In the near future, the researchers hope to conduct a trial demonstrating the positive impact hybrid imaging can have on patient outcomes. They also plan to develop a “triple hybrid” imaging technique that combines CCTA and SPECT MPI scans with information about coronary artery shear stress. They believe that adding shear stress information to hybrid imaging could help identify lesions that do not yet cause ischemia but may in the future.

- This article was originally published on AuntMinnieEurope.com © 2018 by AuntMinnieEurope.com. Any copying, republication or redistribution of AuntMinnieEurope.com content is expressly prohibited without the prior written consent of AuntMinnieEurope.com.