Over the past few years, 3D printing has become one of the most hyped topics in 21st-century technology. Research into 3D-printing techniques has already given scientists new freedom in designing components and devices, while the ability to manufacture highly individualized parts cost-effectively is finding new applications all the time.

So far, much of the hype has centred on macroscopic objects such as 3D-printed implants or prototype parts for the automotive industry. However, a logical extension of the technology is to exploit the unique potential of 3D printing for microstructures. This is of particular interest to optical scientists who wish to enhance the functionality of micro-optical devices such as highly integrated cameras, or even to build entirely new categories of devices that cannot be made with traditional techniques such as UV or electron-beam lithography.

Macro vs micro

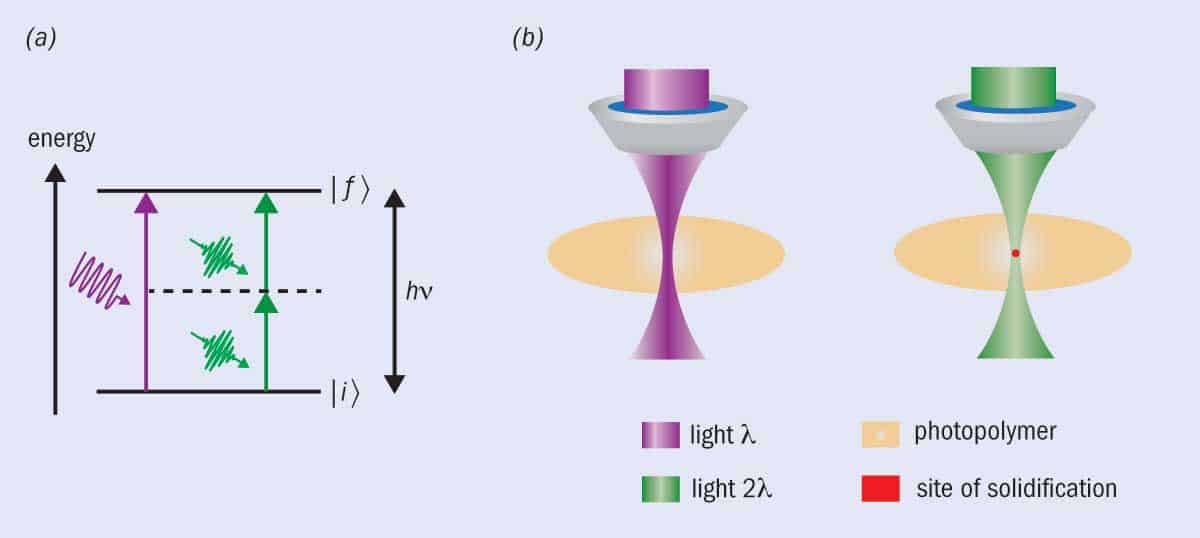

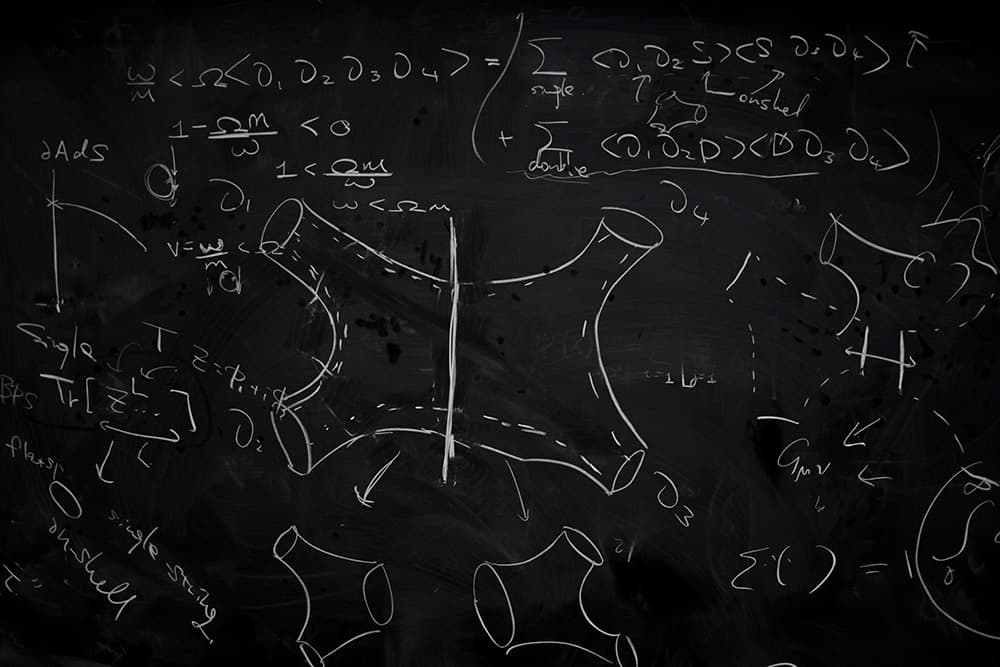

Macro-scale 3D printing relies on several well-established techniques, including selective melting of metal powders, inkjet printing, stereolithography and fused deposition modelling. In contrast, 3D microprinting is dominated by a single method: two-photon polymerization (2PP). In some ways 2PP resembles conventional UV lithography, in which a (usually) liquid photopolymer is illuminated at an appropriate wavelength, the polymer solidifies where the light is absorbed, and a combination of masks and subsequent removal of the unexposed polymer is used to create highly sophisticated structures. The main difference is that in 2PP, solidification is triggered inside the focal volume of a tightly focused femtosecond laser pulse.

The physical phenomenon that drives this process is known as two-photon absorption (TPA), and it occurs only if the laser light is very intense: focusing all of the sunlight falling on the city of Würzburg, Germany, onto a single grain of sand would give an intensity equivalent to that used for 2PP. Under these extreme conditions, two simultaneously absorbed photons with wavelengths in the visible part of the spectrum (such as 515 nm) can trigger the same chemical reaction as a single UV photon. Because the TPA rate scales with the square of the light intensity, the reaction is negligible at the lower intensities outside the focus, so solidification is strongly confined to the focal volume and the polymer is essentially unaffected by out-of-focus light. The site of solidification can then be scanned in three dimensions to generate a 3D microstructure, as with normal 3D printing.
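The confinement of 2PP to the focus can be checked with a short calculation. The sketch below is an illustration, not Fraunhofer ISC's own model: it assumes an ideal Gaussian beam and compares how much light is absorbed in a thin slice of resin at different distances from the focus. One-photon absorption scales with the intensity I, so the absorption per slice is proportional to the total beam power and is the same in every plane. Two-photon absorption scales with I², and integrating I² over a Gaussian cross-section gives P²/(πw²), which falls as the beam widens away from the focus. The helper names, the unit beam waist and the unit Rayleigh range are assumptions chosen for illustration, and the model ignores photoinitiator depletion and the polymerization threshold. It also checks that two 515 nm photons carry the same energy as one 257.5 nm UV photon.

```python
import math

PLANCK_J_S = 6.62607015e-34      # Planck constant (exact SI value)
LIGHT_SPEED_M_S = 2.99792458e8   # speed of light in vacuum (exact SI value)
ELECTRON_VOLT_J = 1.602176634e-19


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of one photon of the given vacuum wavelength, in eV."""
    return PLANCK_J_S * LIGHT_SPEED_M_S / (wavelength_nm * 1e-9) / ELECTRON_VOLT_J


def beam_radius(z: float, waist: float = 1.0, rayleigh_range: float = 1.0) -> float:
    """1/e^2 radius w(z) of an ideal Gaussian beam at distance z from the focus."""
    return waist * math.sqrt(1.0 + (z / rayleigh_range) ** 2)


def absorption_per_slice(z: float, power: float = 1.0) -> tuple[float, float]:
    """Relative one- and two-photon absorption in a thin slice at position z.

    For I(r) = 2P / (pi w^2) * exp(-2 r^2 / w^2):
      integral of I   over the plane = P              (independent of z)
      integral of I^2 over the plane = P^2 / (pi w^2) (largest at the focus)
    """
    w = beam_radius(z)
    one_photon = power
    two_photon = power ** 2 / (math.pi * w ** 2)
    return one_photon, two_photon


if __name__ == "__main__":
    # Two 515 nm photons deliver the energy of one 257.5 nm (UV) photon.
    print(f"515 nm photon: {photon_energy_ev(515):.2f} eV")
    print(f"two photons:   {2 * photon_energy_ev(515):.2f} eV")

    _, two_at_focus = absorption_per_slice(0.0)
    for z in (0.0, 1.0, 3.0, 10.0):  # distance from the focus in Rayleigh ranges
        one, two = absorption_per_slice(z)
        print(f"z = {z:4.1f} z_R  one-photon {one:.2f}  two-photon {two / two_at_focus:.3f}")
```

In this idealized model the relative two-photon absorption falls as 1/(1 + (z/z_R)²), halving at one Rayleigh range, while the one-photon absorption stays constant. Integrated along the beam, the one-photon total therefore grows with the thickness of the resin, whereas the two-photon total stays finite, which is the essence of 3D confinement.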

The concept of 2PP has been known for at least 20 years, and several research groups have used it to generate objects such as photonic crystals (essentially semiconductors for light), 3D structures for life-science applications and micro-optical devices. However, before 2PP can be exploited commercially, some technological challenges need to be resolved. First, as with many additive manufacturing processes, 2PP processes the material with a single focal volume. This "serial" process is inherently slow, comparable to painting an entire wall with a small brush instead of a roller. Second, few photopolymers can both be processed by 2PP and provide superior functionality in 3D micro-optical devices. Some photopolymers, for example, have been optimized for the 2PP process itself, but their chemical and physical properties leave something to be desired in terms of the performance of the finished device.
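Why serial writing is slow can be made concrete with a rough estimate. The sketch below assumes that a solid part is filled line by line with a single focus: the total scan path is the part volume divided by the cross-section assigned to each line (hatch distance × slice distance), and the writing time is that path divided by the scan speed. The hatch distance, slice distance and scan speed are hypothetical illustrative values, not figures from this article, and acceleration, turnaround and stage-movement overheads are ignored. The hypothetical `n_foci` parameter shows how sharing the path among several foci, the "roller" in the analogy, would divide the time.

```python
def serial_write_time_s(
    volume_um3: float,
    hatch_um: float,
    slice_um: float,
    speed_mm_s: float,
    n_foci: int = 1,
) -> float:
    """Rough time to fill a solid volume by scanning focal spots line by line.

    Each scanned line 'owns' a cross-section of hatch_um x slice_um, so the total
    path length is volume / (hatch * slice). Overheads (acceleration, jumps,
    settling) are ignored, so this is a lower bound for the assumed parameters.
    """
    path_um = volume_um3 / (hatch_um * slice_um)
    path_mm = path_um * 1e-3
    return path_mm / speed_mm_s / n_foci


if __name__ == "__main__":
    # Hypothetical process parameters, for illustration only.
    params = dict(hatch_um=0.2, slice_um=0.5, speed_mm_s=10.0)
    for edge_um in (100.0, 1000.0):
        t = serial_write_time_s(edge_um ** 3, **params)
        print(f"{edge_um:6.0f} um cube, 1 focus: {t / 3600:8.2f} h")
    t_many = serial_write_time_s(1000.0 ** 3, n_foci=100, **params)
    print(f"  1000 um cube, 100 foci: {t_many / 3600:8.2f} h")
```

Because the time scales with volume, a tenfold larger edge length takes a thousand times longer: with these assumed parameters a 100 µm cube needs roughly 17 minutes and a 1 mm cube more than 11 days with a single focus.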

Industrial optimization

Within the optics and electronics department at the Fraunhofer Institute for Silicate Research (Fraunhofer ISC) in Würzburg, scientists are working to optimize 2PP processing for industrial applications. A key aspect of this research is developing hybrid polymers that combine inorganic, glass-like components with organic groups that undergo photochemical reactions (such as the one described above), thereby uniting the favourable mechanical properties of glass with the processability of purely organic polymers. These hybrid polymers, known commercially as Ormocers®, are liquid resins consisting of an inorganic [Si-O]n backbone carrying various organic side groups. The most important side groups are polymerizable ones such as acrylates or epoxides, because they allow the resin to be solidified photochemically. The choice of precursors and the processing conditions determine the conformation of the interlinked organic and inorganic networks, and thus the chemical and physical properties of the final material. The main benefits of Ormocer chemistry are excellent thermal stability, resistance to chemical attack and superior mechanical properties (such as stiffness). In addition, many properties can be tailored to meet the requirements of different applications.

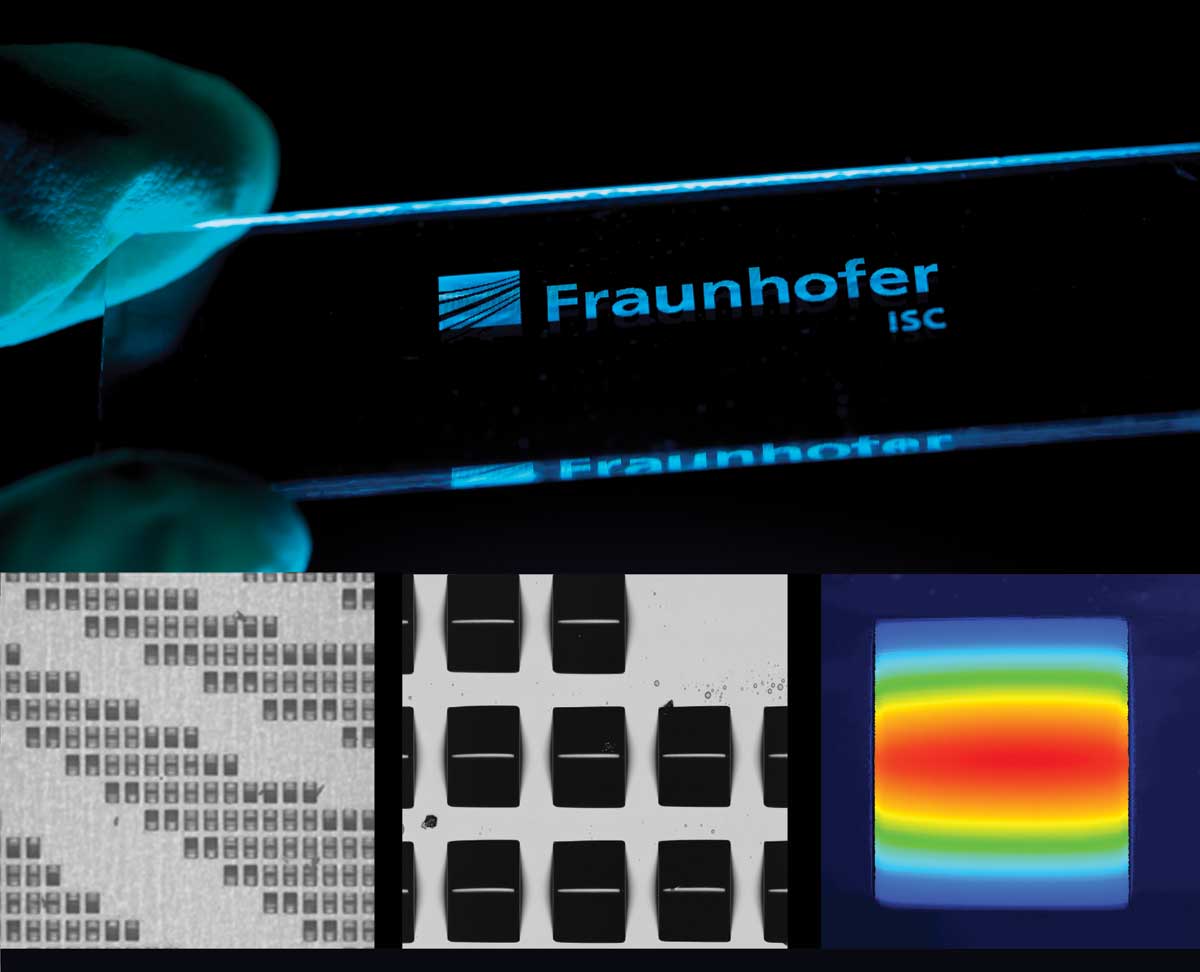

Recently, researchers at Fraunhofer ISC have worked to improve the optical properties and stability of parts 3D-microprinted from Ormocers. For example, we have shown that newly synthesized high-refractive-index hybrid polymers do not turn yellow even after exposure to high-intensity UV LED light and temperatures of 150 °C for 72 hours. Temperature stability has also been demonstrated with 2PP-printed microlenses that kept their shape after more than 1.5 hours at 200 °C. Such robustness matters because it means that 2PP-fabricated Ormocer microstructures can withstand the autoclaving used to sterilize medical equipment, such as an endoscope with sophisticated micro-optical lenses at the end of an optical fibre.

Another key benefit of these materials is their biocompatibility. Ormocer composites are used as filling materials in dentistry, and many other modifications of the material are compatible with several human cell types. Some are even biodegradable, which is important when selecting materials for 3D-printed medical implants or scaffolds for tissue engineering. A micro-patterned hybrid polymer can mimic the extracellular matrix, allowing human cells to be grown on it either in vitro or in vivo. Once the new tissue has formed, the scaffold material can then be resorbed by the body.

Active interest

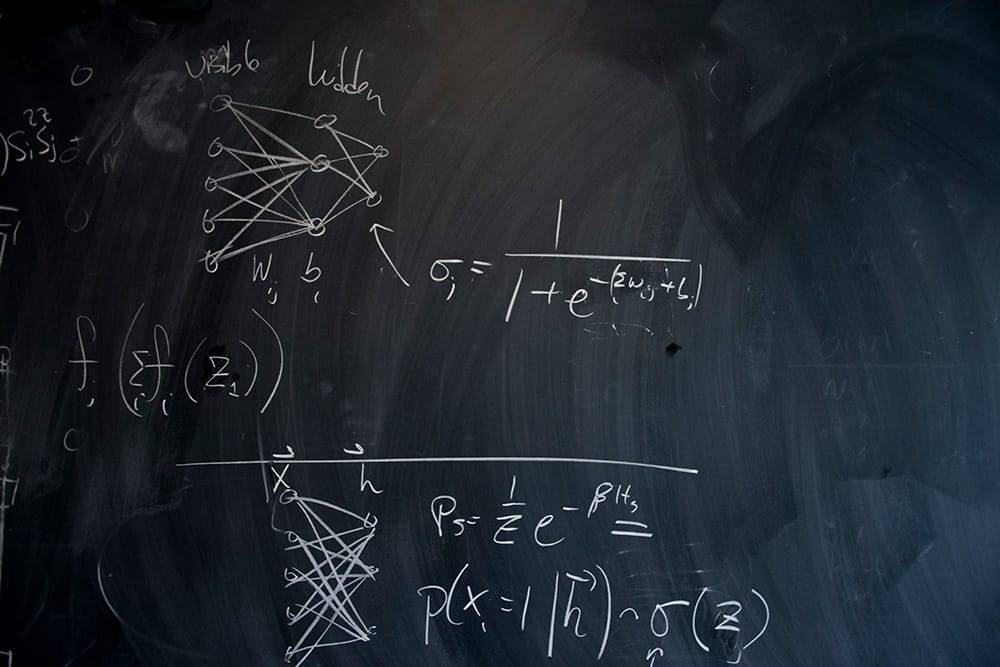

Despite their advantages over conventional polymers, hybrid polymers are still "passive" materials, meaning that their properties, which are defined by their chemical composition and processing, do not change in response to external stimuli. To fulfil the potential of 2PP-written microstructures, scientists are also working to develop "active" materials. Such materials might, for example, act as optical gain media, convert light of one wavelength into another, or respond mechanically to external electric or magnetic fields. Gain media have a number of applications, including the amplification of signals in optical data communication. Mechanical responses are important for actuators, for example in human–machine interfaces, and for miniaturized energy harvesters.

There are two main routes to achieving these properties. One is to incorporate active components during the chemical synthesis itself, for example by linking optically active ions directly to the polymeric network. The other is a "guest–host" approach, in which active nanoparticles are introduced into a (hybrid) polymer matrix. This route can be very straightforward as long as the guest nanoparticles "match" the host system, that is, as long as they can be dispersed homogeneously and retain their active properties inside the matrix.

Foundations

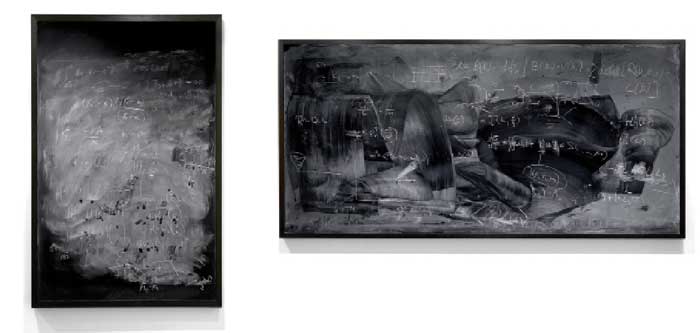

Researchers at Fraunhofer ISC recently demonstrated that material systems of the latter type, so-called "nanocomposites", can be 3D microprinted using 2PP. In a proof-of-principle experiment, they introduced silica nanoparticles with diameters of 48 nm and 380 nm into an Ormocer matrix and studied the material's behaviour under femtosecond laser illumination. Example results are shown in the "Fine structure" figure: a complex 3D pattern written in the 48 nm nanocomposite (left image pair) and the institute's initials written in the system containing 380 nm particles (right image pair). The hope is that such experiments will lay the foundation for structures containing more sophisticated particles.

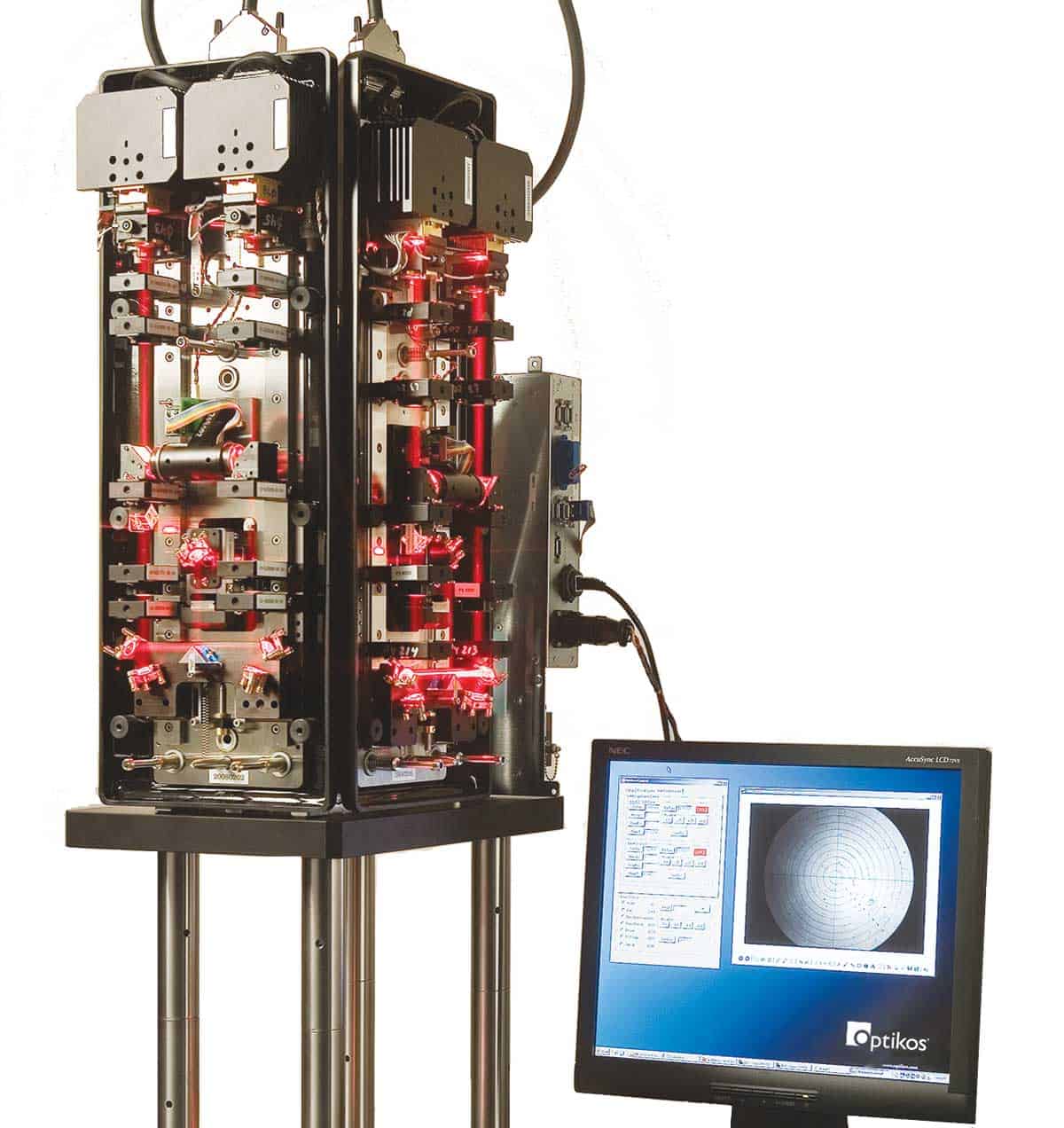

In addition to new materials, another key area of development for 3D microprinting is the optics that control the position of the laser focus during printing. The goal is to position the focus both quickly and accurately. For this reason, "galvoscanner" mirrors have become increasingly popular: their moving masses are much lower than those of high-accuracy linear stages, so the focal spot can be accelerated and positioned rapidly within the focal plane.
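The link between moving mass and positioning speed can be illustrated with a simple translational model. The sketch below assumes a mass driven by a fixed maximum force through a point-to-point move, with no velocity limit or settling time. A real galvoscanner rotates a mirror and is limited by torque and moment of inertia rather than force and mass, and the jump distance, force and masses used here are hypothetical values chosen only to show the scaling.

```python
import math


def min_move_time_s(distance_m: float, force_n: float, moving_mass_kg: float) -> float:
    """Shortest point-to-point move under a bounded drive force (no speed limit).

    Bang-bang profile: accelerate at a = F / m for half the distance, then
    decelerate for the other half, so d/2 = a t_half^2 / 2 and t = 2 * sqrt(d / a).
    """
    acceleration = force_n / moving_mass_kg
    return 2.0 * math.sqrt(distance_m / acceleration)


if __name__ == "__main__":
    # Hypothetical values: a 100 um jump, the same 10 N drive force, two moving masses.
    hop_m = 100e-6
    for label, mass_kg in (("heavy linear stage", 1.0), ("light scanner", 1e-3)):
        t = min_move_time_s(hop_m, 10.0, mass_kg)
        print(f"{label:18s}: {t * 1e3:.2f} ms")
```

Since the move time scales with the square root of the moving mass at fixed force, a part a thousand times lighter completes the same jump about 32 times faster in this idealized model.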

The capability of galvoscanner technology for rapid 3D micropatterning is shown in the "Tiny prisms" image. Here, the Fraunhofer ISC emblem is composed of 10 000 individual microprisms, each with a base area of 50 × 60 µm² and a height of 25 µm. Printing a single microprism takes only a few seconds, even without thoroughly optimizing the process in terms of applied photon dose and writing velocity. This result indicates that areas of several square centimetres can be filled with micron-sized elements, meaning that such 3D-printed microprism arrays could, in principle, be used in displays and other commercial technologies that rely on redirecting light with low optical losses. Furthermore, the arrangement shown can be replicated easily, because it is only a so-called "2.5-dimensional" pattern with no undercuts.
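The figures quoted for the emblem allow a rough throughput estimate. The sketch below takes the prism count and base area from the text; the gap-free tiling and the value of 3 s per prism (standing in for "a few seconds") are assumptions, and the time spent moving between prisms is ignored.

```python
PRISM_BASE_UM2 = 50.0 * 60.0   # base area from the text: 50 x 60 um^2
PRISM_COUNT = 10_000           # prisms in the emblem, from the text
UM2_PER_CM2 = 1e8              # 1 cm^2 = (1e4 um)^2


def footprint_cm2(n_prisms: int) -> float:
    """Area covered by n prisms, assuming they tile the surface with no gaps."""
    return n_prisms * PRISM_BASE_UM2 / UM2_PER_CM2


def prisms_needed(area_cm2: float) -> float:
    """Number of gap-free prisms needed to cover the given area."""
    return area_cm2 * UM2_PER_CM2 / PRISM_BASE_UM2


def write_hours(n_prisms: float, seconds_per_prism: float) -> float:
    """Total serial writing time, ignoring moves between prisms."""
    return n_prisms * seconds_per_prism / 3600.0


if __name__ == "__main__":
    SECONDS_PER_PRISM = 3.0  # assumption standing in for "a few seconds"
    print(f"emblem footprint: {footprint_cm2(PRISM_COUNT):.2f} cm^2")
    print(f"emblem writing time: {write_hours(PRISM_COUNT, SECONDS_PER_PRISM):.1f} h")
    per_cm2 = prisms_needed(1.0)
    print(f"prisms per cm^2: {per_cm2:.0f}, "
          f"about {write_hours(per_cm2, SECONDS_PER_PRISM):.1f} h per cm^2")
```

Under these assumptions the 10 000-prism emblem covers about 0.3 cm² and takes roughly 8 hours to write, and each further square centimetre needs about 33 000 prisms. Because the process had not yet been optimized, any gain in writing speed would shorten these times proportionally.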

Forging ahead

The full potential of 3D microprinting by two-photon polymerization will be realized only when materials are available that are not only compatible with the printing process but also exhibit new functional properties, either active or passive. Strategies for accelerating the printing process are also highly desirable, and galvoscanner mirror technology is already being used today. Both approaches, new materials and faster fabrication, will help to ensure that 2PP is adopted on a larger scale in the future.

By Matin Durrani
