Experimental particle physicist Jessica Esquivel explores the beneficial collaboration between artificial intelligence and particle physics that is advancing both fields

As a postdoc at the Fermi National Accelerator Laboratory (Fermilab), I was interested to find out that we have a long history of applying neural networks, a subset of AI, to particle-physics data. In May 1990, when I was a two-year-old focused on classifying the sounds of the various animals on Old McDonald’s farm, physicist Bruce Denby was hosting the Neural Network Tutorial for High Energy Physicists conference. Fast forward to 2016, when the NOvA neutrino experiment became the first particle-physics collaboration to publish work (JINST 11 P09001) using “convolutional neural networks” (CNNs), a type of image-recognition neural network inspired by the human visual system. My own graduate research, “Muon/pion separation using convolutional neural networks for the MicroBooNE charged current inclusive cross section measurement” (DOI: 10.2172/1437290), published in 2018, also featured CNNs.

Over the last three decades, the particle-physics community has welcomed AI with open arms. High-energy physicists Matthew Feickert and Benjamin Nachman have even set up a collection of all particle-physics research that exploits machine-learning (ML) algorithms, A Living Review of Machine Learning for Particle Physics, which now includes more than 350 papers. ML algorithms are also being applied to particle identification in experiments ranging from the gigantic detectors at CERN’s Large Hadron Collider to neutrino experiments such as NOvA and MicroBooNE. Indeed, the ML algorithm that NOvA implemented improved the experiment as much as collecting 30% more data would have.
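One way to read the 30% figure, assuming a statistics-limited measurement in which the statistical uncertainty σ scales as 1/√N with the number of recorded events N (an assumption for illustration; real analyses also carry systematic uncertainties):

$$
\frac{\sigma_{1.3N}}{\sigma_{N}} = \sqrt{\frac{N}{1.3\,N}} = \frac{1}{\sqrt{1.3}} \approx 0.88
$$

In other words, under that assumption the gain is comparable to shrinking the statistical uncertainty by roughly 12% without running the detector any longer.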

But the benefits of AI in particle physics don’t stop there. We use simulations of particle interactions to develop data-analysis tools, such as tracking and calibration, and to compare theoretical models of interactions within the Standard Model of particle physics. By using AI to optimize models, and by building in knowledge of “gauge symmetries”, Massachusetts Institute of Technology physicist Phiala Shanahan has achieved significant gains in the efficiency and precision of numerical calculations (Phys. Rev. Lett. 125 121601).

AI has also, in turn, benefited from the particle-physics community. CNNs are very good at pattern recognition on flat, 2D images, but falter on curved surfaces and in higher dimensions. To help CNNs detect patterns on 3D shapes such as spheres and other curved surfaces, Taco Cohen at the University of Amsterdam and colleagues have built a new theoretical “gauge-equivariant” CNN framework that can identify patterns on any kind of geometric surface. Gauge CNNs resolve the 2D-to-3D problem by encoding gauge covariance, a standard theoretical assumption in particle physics. This ever-expanding partnership between AI and particle physics is benefiting both fields tremendously.
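Gauge-equivariant CNNs generalize a property that ordinary CNNs already have on flat grids: translation equivariance, meaning that shifting the input simply shifts the output. The sketch below demonstrates only that simpler flat-space case with a hypothetical 1D circular convolution (the function names are illustrative); it is not Cohen and colleagues’ gauge-equivariant framework, which extends the idea to curved surfaces.

```python
def circular_conv1d(signal, kernel):
    """Circular 1D cross-correlation: the sliding-filter operation a CNN layer applies."""
    n, k = len(signal), len(kernel)
    return [sum(kernel[j] * signal[(i + j) % n] for j in range(k)) for i in range(n)]


def shift(signal, s):
    """Cyclically shift a signal to the right by s positions."""
    n = len(signal)
    return [signal[(i - s) % n] for i in range(n)]


signal = [0.0, 1.0, 3.0, 2.0, 0.0, 0.0, 5.0, 1.0]
kernel = [1.0, -1.0, 0.5]

# Equivariance: convolving a shifted input gives the same result
# as shifting the convolved output.
lhs = circular_conv1d(shift(signal, 3), kernel)
rhs = shift(circular_conv1d(signal, kernel), 3)
print(lhs == rhs)  # True
```

A pattern is detected the same way wherever it sits on the grid; gauge CNNs aim for the analogous guarantee on surfaces where there is no single global way to orient the filter.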

What are the disadvantages of using AI?

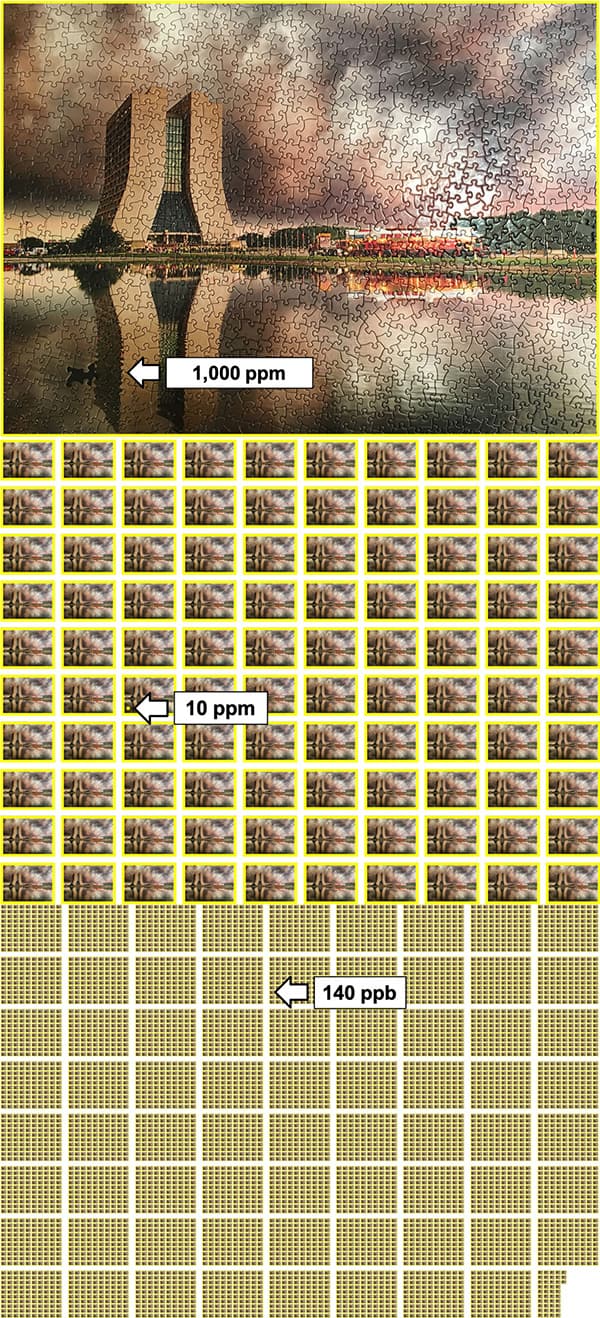

Many of the ML algorithms that have been developed focus on point predictions. As you train a neural network, it learns to map each datapoint to its associated label. Through this training, a generalized predictor is created that can be used on datapoints outside the training set. For example, say we have a data sample of images and associated labels. The images would be my X dataset, from X₁ to Xₙ, and the labels would be my Y dataset, from Y₁ to Yₙ. After training a neural network, we’d have a predictor that has learned to map X to Y. We can then use this predictor on any new datapoint Xₙ₊₁, and it will make a prediction Yₙ₊₁. The issue is that this prediction is only a best guess at the label of Xₙ₊₁: it does not come with a quantified uncertainty. Being able to calculate uncertainties is crucial in particle physics. For instance, on the Muon g-2 experiment that I am currently working on, we are trying to measure the muon’s magnetic moment with an uncertainty of only 140 parts per billion. That’s like having 7128 puzzles, each with 1000 pieces, and only one piece missing in total.
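The puzzle analogy checks out: one missing piece out of 7128 puzzles of 1000 pieces each is a fractional shortfall of

$$
\frac{1}{7128 \times 1000} = \frac{1}{7.128 \times 10^{6}} \approx 1.40 \times 10^{-7} = 140\ \text{parts per billion}
$$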

Our expertise in quantifying uncertainties could be of great benefit to the AI community, just as their expertise in building AI architectures would be useful to us. The lack of uncertainty attached to predictions has become an increasingly prominent concern in the AI community, as highlighted by racist faux pas such as Google Photos labelling photos of Black individuals as gorillas; the tech conglomerate has yet to implement a long-term fix for this issue. Attaching uncertainties to prediction labels, such as the label “gorilla”, could potentially have prevented this mislabelling. Efforts to quantify uncertainties are under way, such as the work by statistician Rina Barber and colleagues, which trains N predictors, each with one datapoint removed from the training dataset (Ann. Statist. 49 486). This method of uncertainty quantification is computationally expensive, but particle physicists are experts in navigating big datasets. As we have a vested interest in implementing uncertainties in AI tools, we may have insight into how to optimize such algorithms.
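The leave-one-out approach of Barber and colleagues is known as the “jackknife+”. The minimal sketch below assumes a simple least-squares line as a stand-in for a trained neural network, and the function names are hypothetical. Each of the n predictors is trained with one datapoint removed, its error on that held-out point is recorded, and those errors are combined with each predictor’s guess for the new datapoint to produce an interval rather than a single number.

```python
import math


def fit_line(xs, ys):
    """Least-squares fit y = a + b*x; a stand-in for a trained neural network."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = my - b * mx
    return lambda x: a + b * x


def jackknife_plus_interval(xs, ys, x_new, alpha=0.1):
    """Jackknife+ interval for the label of x_new (guaranteed coverage >= 1 - 2*alpha)."""
    n = len(xs)
    k_lo = math.floor(alpha * (n + 1))
    k_hi = math.ceil((1 - alpha) * (n + 1))
    if k_lo < 1 or k_hi > n:
        raise ValueError("too few datapoints for this alpha")
    lows, highs = [], []
    for i in range(n):
        # Train predictor i with datapoint i removed: n fits in total,
        # which is what makes the method computationally expensive.
        model = fit_line(xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:])
        residual = abs(ys[i] - model(xs[i]))  # error on the held-out point
        pred = model(x_new)
        lows.append(pred - residual)
        highs.append(pred + residual)
    lows.sort()
    highs.sort()
    # k_lo-th smallest lower value and k_hi-th smallest upper value (1-indexed)
    return lows[k_lo - 1], highs[k_hi - 1]


# Toy data: y = 2x + 1 with a small alternating offset standing in for noise
xs = list(range(20))
ys = [2.0 * x + 1.0 + (0.5 if x % 2 else -0.5) for x in xs]

point = fit_line(xs, ys)(10.0)                     # point prediction only
low, high = jackknife_plus_interval(xs, ys, 10.0)  # prediction with an interval
print(f"point: {point:.2f}, interval: [{low:.2f}, {high:.2f}]")
```

The point prediction alone says nothing about how far off it might be; the interval does, at the cost of training n models instead of one.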

Another disadvantage of particle physicists using AI, as Charles Brown and I wrote in an online article for Physics World last year, is the lack of ethical discussions about the impact our work in AI may have on society. It’s easy for the particle-physics community to believe the false narrative that the research we do and the tools we develop will only be used for particle-physics research, when historically we’ve seen time and time again – be it the Manhattan Project or the development of the World Wide Web – that the work we do translates to society as a whole. As US theoretical cosmologist Chanda Prescod-Weinstein says, “I’m talking about integrating into a scientific culture that has accepted the production of death as a tangential, necessary evil in order to gain funding. One that will march for science without asking what science does for or to the most marginalized people. One that still doesn’t teach ethics or critical history to its practitioners.”

Beyond the practical disadvantages of using AI in particle physics, such as the difficulty of quantifying uncertainties, there is a broader one: if we focus our collective intellect on advancing and developing new AI tools without discussing their moral implications, we run the risk of contributing to the oppression of marginalized people globally.

How do you use AI in your research?

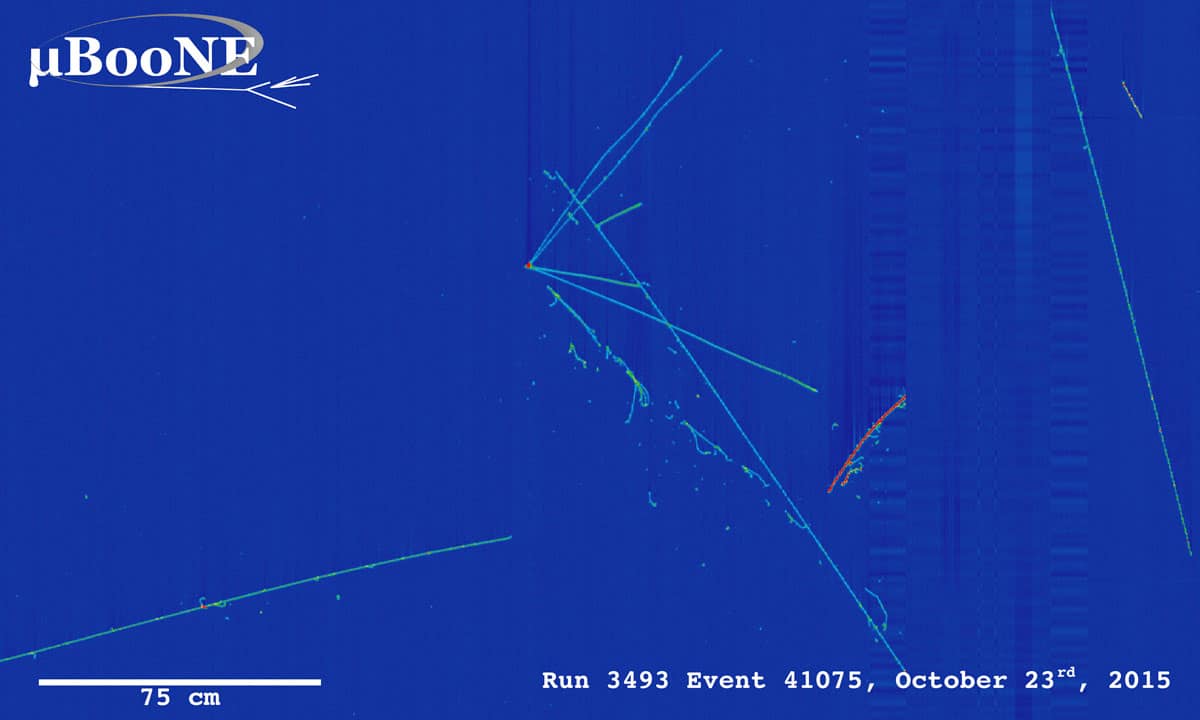

My graduate research used CNNs to classify a type of neutrino–argon interaction called a charged-current neutrino interaction. This signal is characterized by multiple tracks emerging from a common point (called the vertex), one of which is a relatively long track made by a muon. A background that contaminates this signal at low energies is neutral-current neutrino interactions, where the long track is instead made by a pion. Before my work, the only way of separating this signal from background was to apply a track-length cut of 75 cm, which is the pion stopping distance (see image, above). My research focused on training a CNN on muon and pion data to separate signal from background, so that charged-current neutrino interactions with tracks shorter than the standard 75 cm cut could be recovered. I’m now working on developing AI algorithms for better beam storage and optimization in the Muon g-2 experiment, which recently hit the headlines for showing a disparity between the predicted and measured values of the muon’s magnetic moment.
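The difference between the two selection strategies can be sketched as follows. This is a hypothetical illustration, not the actual MicroBooNE analysis: the `Event` fields, the function names and the 0.9 score threshold are all assumptions, and the CNN is represented only by a precomputed muon score.

```python
from dataclasses import dataclass

PION_STOPPING_CUT_CM = 75.0  # track-length cut quoted in the text


@dataclass
class Event:
    longest_track_cm: float  # length of the longest track leaving the vertex
    muon_score: float        # hypothetical CNN output in [0, 1]: "this track is a muon"


def passes_length_cut(event: Event) -> bool:
    """Baseline selection: only tracks longer than the pion stopping distance count as muons."""
    return event.longest_track_cm > PION_STOPPING_CUT_CM


def passes_cnn_selection(event: Event, threshold: float = 0.9) -> bool:
    """Keep what the length cut keeps, and recover shorter tracks the CNN calls muon-like."""
    return passes_length_cut(event) or event.muon_score > threshold


events = [
    Event(longest_track_cm=120.0, muon_score=0.40),  # long track: kept by both selections
    Event(longest_track_cm=40.0, muon_score=0.95),   # short, muon-like: recovered by the CNN
    Event(longest_track_cm=40.0, muon_score=0.20),   # short, pion-like: rejected by both
]
for e in events:
    print(e, passes_length_cut(e), passes_cnn_selection(e))
```

The length cut alone discards every event below 75 cm regardless of what made the track; the classifier score lets some of those short-track signal events back in.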

In the future, in which specific areas of particle physics will AI be most useful and most crucial?

In August 2020 the US Department of Energy (DOE) committed $37m of funding to support research and development in AI and ML methods to handle data and operations at DOE scientific facilities, including high-energy physics sites. This new investment perfectly highlights the union of AI and particle physics, and I’m sensing this marriage will stand the test of time. At Fermilab, there are nine particle accelerators on site, each of which has countless subsystems with data output that needs to be monitored in real time to make sure the many experimental collaborations receive the data necessary to answer the biggest questions of our generation.

As Fermilab moves towards developing the Proton Improvement Plan II, a concerted effort is under way to consolidate the monitoring of hundreds of thousands of subsystems, increase accelerator run time, and improve the quality of each particle accelerator, with AI as the powerhouse that will turn all this into reality. “Different accelerator systems perform different functions that we want to track all on one system, ideally,” says Fermilab engineer Bill Pellico. “A system that can learn on its own would untangle the web and give operators information they can use to catch failures before they occur.”
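A very simple form of “catching failures before they occur” is flagging readings that drift far from a subsystem’s recent behaviour. The sketch below uses a rolling z-score on a single monitored signal; it is a generic illustration under that assumption, not Fermilab’s monitoring system, and the function name, window size and threshold are hypothetical.

```python
import statistics
from collections import deque


def flag_anomalies(readings, window=20, z_threshold=4.0):
    """Return indices of readings that deviate strongly from the recent baseline."""
    history = deque(maxlen=window)  # the most recent `window` readings
    flagged = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mean = statistics.fmean(history)
            std = statistics.pstdev(history)
            # Skip perfectly flat baselines, where a z-score is undefined.
            if std > 0 and abs(value - mean) / std > z_threshold:
                flagged.append(i)
        history.append(value)
    return flagged


# A steady, slightly periodic signal with one injected spike
readings = [100.0 + 0.1 * (i % 5) for i in range(50)]
readings[40] = 103.0
print(flag_anomalies(readings))  # [40]
```

A learning system of the kind Pellico describes would go well beyond a fixed threshold on one signal, for example by modelling correlations across many subsystems at once.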

Not only will AI improve data collection and processing, but these algorithms could also alert accelerator operators to potential issues before a system fails, thereby increasing beam up-time. Beyond particle-accelerator monitoring, AI will become critical in storing and analysing data from the next wave of particle detectors, such as the Deep Underground Neutrino Experiment, which will generate more than 30 petabytes of data per year, equivalent to 300 years of HD movies. Those figures don’t even take into account the massive data surge that would arise from, say, a supernova event, should one be captured. Over the coming decades, AI algorithms will undoubtedly take a leading role in data processing and analysis across particle physics.
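To put the 30 petabytes per year in perspective, a quick back-of-the-envelope calculation, assuming decimal (SI) petabytes and a 365.25-day year, gives the average data rate and the video bitrate implied by the “300 years of HD movies” comparison:

```python
PETABYTE = 10**15                    # decimal (SI) petabyte, assumed
SECONDS_PER_YEAR = 365.25 * 24 * 3600
HOURS_PER_YEAR = 365.25 * 24

dune_bytes_per_year = 30 * PETABYTE  # "more than 30 petabytes of data per year"

# Sustained average rate needed to keep up with that volume
rate_gb_per_s = dune_bytes_per_year / SECONDS_PER_YEAR / 1e9

# Video size implied by "300 years of HD movies": gigabytes per hour of footage
implied_gb_per_video_hour = dune_bytes_per_year / (300 * HOURS_PER_YEAR) / 1e9

print(f"{rate_gb_per_s:.2f} GB/s")                              # 0.95 GB/s
print(f"{implied_gb_per_video_hour:.1f} GB per hour of video")  # 11.4 GB per hour of video
```

That is close to a gigabyte every second, around the clock, before any supernova burst is added on top.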