Whole-body exposure to stray radiation can now be calculated accurately and efficiently for patients undergoing radiotherapy. Researchers in the US and Germany modified a treatment planning system (TPS) – the software used to predict patient dose distribution – to include unwanted doses from scattered and leaked radiation. The additional calculation, which adds an average of 7% to the computation time required for a standard treatment plan, could lead to better radiation treatments that avoid radiogenic secondary cancers and other side effects later in life. This is especially important for survivors of childhood cancer (Med. Phys. 10.1002/mp.14018).

External-beam radiotherapy has come a long way since its inception in the first half of the last century. In that time, most developments have aimed to improve how the technique targets tumours – typically by delivering greater doses with ever-increasing accuracy. Nowadays, patients undergoing radiotherapy can usually expect to survive their primary cancers, with five-year survival rates exceeding 70%.

But as post-treatment lifespans grow longer, late side effects of radiotherapy – such as damage to the heart, fertility issues and secondary cancers – are becoming increasingly prevalent. These side effects can be caused by radiation that is delivered to non-target tissue outside of the main therapeutic beam, much of which is not modelled by clinical TPSs.

To address this shortcoming and model the stray-radiation dose for the whole body, Lydia Wilson at Louisiana State University (LSU) and colleagues (also from LMU Munich, PTB and BfS) set out to modify the research TPS CERR (Computational Environment for Radiotherapy Research).

“Typically, commercial systems are proprietary and we can’t get sufficient access to the source code to integrate our algorithms,” says Wayne Newhauser, at LSU and Mary Bird Perkins Cancer Center. “CERR is open and we can get our grubby little hands on every line of code.”

Various methods exist for calculating treatment doses. The most accurate way is to model the process using a Monte Carlo simulation, but the computational expense of this technique limits its utility, particularly for stray radiation doses to the whole body. As these are exactly what Wilson and colleagues intended to calculate, they chose a much more efficient method – a physics-based analytical model.

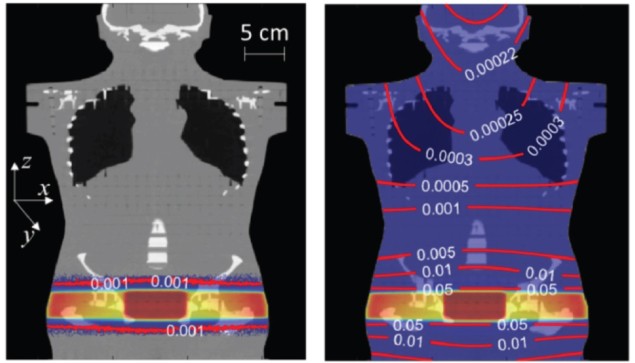

The team’s algorithm calculates, for every location, a total dose that is the sum of four components: the primary therapeutic dose intended for the tumour; the dose contributed by radiation scattered from the head of the linear accelerator (linac); the dose from radiation leaking from inside the linac; and the dose from radiation scattered in the patient’s own body.
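Written as a formula, the sum described above might look like the following. The symbols are illustrative assumptions chosen here, not notation taken from the paper; $\mathbf{r}$ stands for a location in the patient.

```latex
D_{\text{total}}(\mathbf{r}) =
    D_{\text{primary}}(\mathbf{r})
  + D_{\text{head scatter}}(\mathbf{r})
  + D_{\text{leakage}}(\mathbf{r})
  + D_{\text{patient scatter}}(\mathbf{r})
```

The last three terms together make up the stray-radiation dose.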

For regions within the primary radiation field, the researchers used the dose calculated by the baseline TPS. For regions far from the target, not modelled by the unmodified system, they calculated the dose with their analytical algorithm alone. For regions near to the target, they used a combination of the two.
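The three-region scheme can be illustrated with a minimal Python sketch. The article does not say how the two dose estimates are combined near the target, so the linear weighting below is an assumption made purely for illustration, as are the function name `combined_dose`, the distance thresholds and the choice of distance from the field edge as the deciding quantity. None of these come from CERR-LSU.

```python
def combined_dose(tps_dose, analytical_dose, distance_cm,
                  near_cm=0.0, far_cm=10.0):
    """Pick or blend two dose estimates according to distance from the field edge.

    Hypothetical illustration only: the linear blend and the default
    thresholds are assumptions, not the published method.

    distance_cm <= near_cm  -> in-field: use the baseline TPS dose
    distance_cm >= far_cm   -> far from target: use the analytical dose
    in between              -> near the target: weighted mix of the two
    """
    if far_cm <= near_cm:
        raise ValueError("far_cm must be greater than near_cm")
    if distance_cm <= near_cm:
        return tps_dose
    if distance_cm >= far_cm:
        return analytical_dose
    # Weight rises from 0 at near_cm to 1 at far_cm (assumed linear ramp).
    w = (distance_cm - near_cm) / (far_cm - near_cm)
    return (1.0 - w) * tps_dose + w * analytical_dose


if __name__ == "__main__":
    # Example doses in arbitrary units at three hypothetical locations.
    for d in (-1.0, 5.0, 20.0):
        print(d, combined_dose(tps_dose=1.0, analytical_dose=0.2, distance_cm=d))
```

A smooth weighting of this kind avoids a sudden jump in the calculated dose where the two models hand over, although the real algorithm may handle the transition differently.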

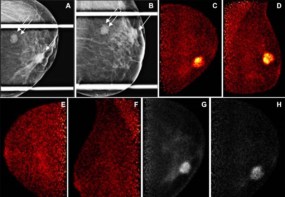

To compare the performance of the baseline CERR and their extended version (which they call CERR-LSU), the researchers used both systems to calculate dose distributions for two X-ray energies – 6 and 15 MV – and two phantom geometries: a simple water phantom and a prostate-cancer treatment delivered to a realistic, human-shaped phantom. They then implemented the treatment plans on physical versions of the phantoms and measured the delivered doses at various locations.

Where the dose predictions of the two systems diverged – in regions outside of the treatment field – the team found that CERR-LSU was more accurate in every case. The locations where CERR-LSU offered the least accuracy were those within the treatment field, where both systems used the baseline dose calculations. The improved performance of CERR-LSU came at the cost of only a modest increase in computation time: the extended system took 30% longer than CERR in the most extreme case.


So when can we expect these improvements to show up in the commercial TPSs used in the clinic? Newhauser thinks that it all depends on the priorities of the TPS vendors and their customers. When the demand is there, however, adoption could be quick – given that the physics is now well understood and other algorithms have been integrated successfully into multiple TPSs.

“The biggest challenge to commercialization has been the continuation of a historical focus on short-term outcomes,” says Newhauser. “As disease-specific survival rates gradually continue to increase, patients, clinicians and vendors will eventually become more interested in treatment-planning features that improve long-term outcomes. We could begin to see basic capabilities appear in commercial systems in two to three years.”