Next-generation steering technologies for lidar are making machine vision cheaper and more portable. Janelle Shane describes how these advances could apply to autonomous vehicles.

Today’s self-driving cars are hard to miss, thanks to the striking structures that emerge from their roofs. These structures – big backward fins, prominent domes or rotating turrets that resemble frozen yogurt containers – house an array of cameras and sensors that constantly look for hazards in all directions. One of the most important sensors in this array, but also currently one of the largest and most expensive, is known as light imaging, detection and ranging, or lidar (when the light comes from a laser, it is sometimes called ladar). This range-finding method uses reflected light to measure the distance to faraway objects, much like a bat or a dolphin uses reflected sound, or a radar station uses radio waves. And despite the size and expense of lidar units, all but one of the nearly 20 companies currently pursuing self-driving cars – Tesla is the notable exception – include lidar somewhere in the array of gizmos sticking out of their vehicles.

Depth measurement is undoubtedly an important capability for machine vision, especially on machines that are meant to avoid obstacles approaching at high speeds. But why is lidar so vital? There are, after all, other methods of measuring depth, and most of them are cheaper and less bulky than today’s lidar systems. The answer is that lidar is uniquely good at providing high-resolution 3D imaging at highway speeds. In fact, it is very well suited to machine vision in all kinds of fast-moving situations – not just self-driving cars, but also drones, spacecraft, factory automation and gaming. Compact, low-cost lidar systems could therefore bring a giant leap in the accuracy of machine vision.

Strengths and weaknesses

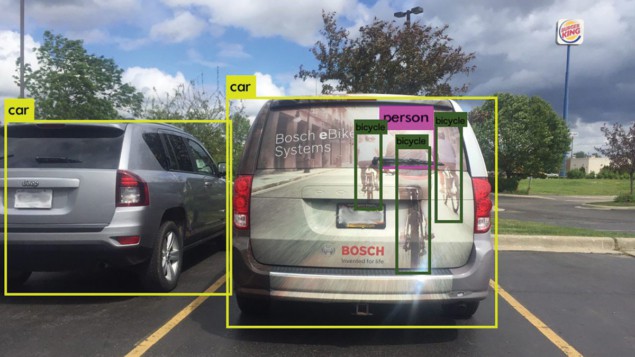

Most self-driving cars use a combination of methods to sense depth. Cameras, for example, can deduce depth with the help of image-processing algorithms, and they offer high-resolution sensing at a much lower cost than lidar. However, their measurements are subject to uncertainty. Is that open sky ahead or a reflection from a shiny obstacle? Is that a real bicycle or a mural? How about non-sequiturs such as emus or people in costumes? Humans mostly get along fine with vision alone, but machine vision has a long way to go before it can handle all of the ambiguities that people can. This is why a “ground-truth” depth measurement – rather than inferring depth from 2D images – is useful.
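Cameras can estimate depth in several ways, and the text does not say which algorithms any given vehicle uses. One common approach, stereo triangulation, illustrates why camera-derived depth grows uncertain with distance: depth follows Z = fB/d, so a fixed error in the disparity d produces a depth error that grows roughly with the square of distance. This is a minimal sketch assuming two rectified cameras; the focal length, baseline and half-pixel matching error are hypothetical values chosen for illustration.

```python
def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point from its disparity between two rectified images: Z = f * B / d."""
    return focal_px * baseline_m / disparity_px

def depth_error_m(focal_px: float, baseline_m: float, depth_m: float,
                  disparity_error_px: float) -> float:
    """First-order depth uncertainty from a small disparity error: dZ = Z**2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_error_px / (focal_px * baseline_m)

FOCAL_PX = 1400.0   # hypothetical focal length, in pixels
BASELINE_M = 0.3    # hypothetical 30 cm separation between the two cameras
MATCH_ERR_PX = 0.5  # hypothetical half-pixel error when matching features

# Smaller disparities mean farther objects, and a much larger depth uncertainty.
for disparity_px in (42.0, 8.4, 4.2):
    depth = stereo_depth_m(FOCAL_PX, BASELINE_M, disparity_px)
    err = depth_error_m(FOCAL_PX, BASELINE_M, depth, MATCH_ERR_PX)
    print(f"disparity {disparity_px:4.1f} px -> depth {depth:5.1f} m, "
          f"uncertainty about +/-{err:.2f} m")
```

A direct time-of-flight measurement sidesteps this matching step entirely, which is part of why a ground-truth depth sensor is valuable.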

For bats, dolphins and submarines, the depth-sensing strategies of choice are sonar and ultrasound, which both rely on reflected sound waves. In self-driving cars, these methods are widely used for parking assistance and close-range obstacle avoidance. However, the slower speed of sound compared with light, and the fall-off of reflected signal intensity with distance, mean that they don’t work well at driving speeds or over distances of more than a few meters.
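A quick calculation shows why the speed of sound matters at driving speeds. This sketch compares the echo delay from a target 50 m away, assuming sound travels at about 343 m/s in air and the car moves at a hypothetical 30 m/s (roughly 108 km/h); it ignores the signal fall-off mentioned above.

```python
SPEED_OF_SOUND_M_S = 343.0          # approximate, dry air at about 20 degrees C
SPEED_OF_LIGHT_M_S = 299_792_458.0  # vacuum value; light in air is only ~0.03% slower

def round_trip_time_s(distance_m: float, speed_m_s: float) -> float:
    """Time for a pulse to reach a target and return."""
    return 2.0 * distance_m / speed_m_s

TARGET_M = 50.0
CAR_SPEED_M_S = 30.0  # hypothetical, roughly 108 km/h

t_sound = round_trip_time_s(TARGET_M, SPEED_OF_SOUND_M_S)
t_light = round_trip_time_s(TARGET_M, SPEED_OF_LIGHT_M_S)

# How far the car travels while it waits for each echo.
print(f"sound echo: {t_sound * 1e3:.0f} ms, car moves {CAR_SPEED_M_S * t_sound:.2f} m")
print(f"light echo: {t_light * 1e9:.0f} ns, car moves {CAR_SPEED_M_S * t_light * 1e6:.1f} um")
```

Under these assumptions the car covers several meters while waiting for a single acoustic echo, but only micrometers while waiting for a light echo.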

Radar, in contrast, is fast, inexpensive, and great for measuring speeds and detecting large obstacles like cars, ships and planes. It penetrates rain, snow and fog relatively well, and (unlike cameras) it works just as well without artificial illumination. However, the relatively long radio wavelength means that it doesn’t resolve fine detail well, and it usually only scans in 2D.
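The link between wavelength and fine detail can be made concrete with the Rayleigh criterion for a circular aperture, θ ≈ 1.22λ/D. The 77 GHz radar frequency, 905 nm lidar wavelength and shared 10 cm aperture below are assumptions chosen for illustration. Real sensors rarely reach the diffraction limit, so treat the numbers as best cases rather than product specifications.

```python
import math

C = 299_792_458.0  # speed of light, m/s

def rayleigh_limit_rad(wavelength_m: float, aperture_m: float) -> float:
    """Diffraction-limited angular resolution of a circular aperture (Rayleigh criterion)."""
    return 1.22 * wavelength_m / aperture_m

aperture_m = 0.10               # hypothetical 10 cm aperture for both sensors
range_m = 50.0
radar_wavelength_m = C / 77e9   # assumed 77 GHz automotive radar, about 3.9 mm
lidar_wavelength_m = 905e-9     # assumed 905 nm near-infrared lidar

for name, wavelength_m in [("radar", radar_wavelength_m), ("lidar", lidar_wavelength_m)]:
    theta = rayleigh_limit_rad(wavelength_m, aperture_m)
    # Smallest feature separation resolvable at range_m (small-angle approximation).
    print(f"{name}: {math.degrees(theta):.5f} deg, about {range_m * theta * 100:.2f} cm "
          f"at {range_m:.0f} m")
```

With the same aperture, the resolution ratio is simply the wavelength ratio, a factor of several thousand in favor of near-infrared light.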


This is where lidar comes in. Like radar, lidar uses time of flight for high-speed distance measurement, but its operating wavelength in the near infrared gives it a higher spatial resolution. This means that lidar can resolve details fine enough to recognize obstacles, or even determine which way a pedestrian is facing. However, lidar is currently heavy and expensive; indeed, Tesla cites high cost as the chief factor behind its decision, alone among self-driving car developers, not to pursue it.
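Time-of-flight ranging reduces to d = ct/2, because the pulse covers the distance twice. The sketch also shows two consequences of that relation: each nanosecond of timing uncertainty corresponds to roughly 15 cm of range, and the pulse repetition rate caps the unambiguous range. The 400 ns echo and 1 MHz pulse rate are hypothetical values, and the speed of light in air is approximated by its vacuum value.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s (air is ~0.03% slower; ignored here)

def range_from_tof(round_trip_s: float) -> float:
    """Distance to a target from the round-trip time of its reflected pulse."""
    return C * round_trip_s / 2.0

def range_resolution(timing_resolution_s: float) -> float:
    """Smallest range difference distinguishable with a given timing resolution."""
    return C * timing_resolution_s / 2.0

def max_unambiguous_range(pulse_rate_hz: float) -> float:
    """Farthest target whose echo returns before the next pulse is fired."""
    return C / (2.0 * pulse_rate_hz)

print(f"echo after 400 ns: target at {range_from_tof(400e-9):.2f} m")
print(f"1 ns timing resolution: {range_resolution(1e-9):.3f} m range resolution")
print(f"1 MHz pulse rate: unambiguous out to {max_unambiguous_range(1e6):.1f} m")
```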

The main reason lidar systems are currently so large and expensive is that they need to capture a large field of view. Cameras can gather information about an entire scene at once using a pixelated sensor and the ambient illumination from sunlight, room lights or headlights. Lidar, however, must produce its own illumination – usually carefully timed pulses of near-infrared light – and this creates some challenges. Illuminating an entire scene at once (an approach called flash lidar) takes a lot of light, especially if the reflected signal must be strong enough to compete with ambient lighting. Filtering the collected light by wavelength can remove some of the ambient background, but narrowband filters don’t work well at the higher incidence angles needed for wide-angle viewing. Increasing the illumination strength can help, but it makes systems consume a lot of power, and splashing lots of light around is undesirable in many situations. Another problem is resolution: although high-resolution camera sensors are readily available, high-resolution lidar sensors are much more expensive. This means that flash lidar systems can only capture relatively narrow fields of view at high resolution.
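The filter problem comes from the fact that a thin-film bandpass filter’s passband moves to shorter wavelengths as light arrives more obliquely, approximately λ(θ) = λ₀√(1 − (sin θ / n_eff)²). This sketch assumes a filter centered on a 905 nm laser, a hypothetical effective index of 2.0 and a hypothetical 10 nm flat-top passband; real filters have sloped edges and design-specific indices.

```python
import math

def filter_center_nm(center_normal_nm: float, angle_deg: float, n_eff: float) -> float:
    """Approximate center wavelength of a thin-film bandpass filter at oblique incidence."""
    s = math.sin(math.radians(angle_deg)) / n_eff
    return center_normal_nm * math.sqrt(1.0 - s * s)

CENTER_NM = 905.0        # assumed laser wavelength; filter centered on it at normal incidence
N_EFF = 2.0              # hypothetical effective index of the filter coating stack
HALF_BANDWIDTH_NM = 5.0  # hypothetical 10 nm wide passband, treated as a flat top

for angle in (0, 10, 20, 30, 45):
    shift_nm = CENTER_NM - filter_center_nm(CENTER_NM, angle, N_EFF)
    status = "passes" if shift_nm <= HALF_BANDWIDTH_NM else "is blocked"
    print(f"{angle:>2} deg: passband moves {shift_nm:5.1f} nm shorter, "
          f"so the laser return {status}")
```

Widening the passband to accept steeper angles lets in more ambient light, which is the trade-off the text describes.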

The scanning solution

Scanning is a natural solution to the field-of-view difficulty. Even human vision, often thought of as a gold standard for high-resolution imaging, only has the resolution required for 20/20 vision in the central 1.5–2 degrees of its field of view. Frequent, rapid eye movements – called saccades – scan this high-resolution area across a wide-field scene, and our brains fill in the details to give us a unified picture. Likewise, the lidar systems used in self-driving cars scan discrete illumination points to fill in a larger field of view. The spinning turret found on the top of many of these vehicles, for example, houses a 64-laser device from Velodyne that rotates several times a second, filling in a 360 × 27 degree field of view.

This device, however, weighs about 13 kg and costs around $80,000, and although a 16-laser version weighs less than 1 kg and costs around $4000, the resolution is correspondingly lower. These sizes and costs are prohibitive for mass deployment, and competing methods of mechanical lidar scanning also carry significant overheads in cost, weight and size. NASA’s DAWN (Doppler Aerosol WiNd lidar) mission, for example, carries a rotating wedge scanner that takes up 16 kg of the transceiver’s 34 kg payload. Mechanical lidar scanning is also sensitive to vibration, acceleration and impact; consumes a lot of power; and can transfer impulses to the device the scanner is mounted on. The last two problems are particularly significant for spacecraft and for small, light vehicles such as drones.
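One way to see why fewer lasers means lower resolution is to estimate the vertical gap between scan lines at range. This sketch assumes the beams are evenly spread over the same 27 degree vertical field for both the 64- and 16-laser cases; real units are often not evenly spaced, and the 16-laser product may cover a different field. The 1.7 m pedestrian height is a hypothetical target.

```python
import math

def line_spacing_m(vertical_fov_deg: float, n_beams: int, range_m: float) -> float:
    """Approximate vertical gap between adjacent scan lines near the horizon,
    assuming the beams are evenly spaced across the vertical field."""
    step_deg = vertical_fov_deg / (n_beams - 1)
    return range_m * math.tan(math.radians(step_deg))

VERTICAL_FOV_DEG = 27.0    # quoted for the 64-laser unit; reused for 16 beams as a hypothetical
PEDESTRIAN_HEIGHT_M = 1.7  # hypothetical target height

for n_beams in (64, 16):
    for range_m in (20.0, 50.0):
        gap_m = line_spacing_m(VERTICAL_FOV_DEG, n_beams, range_m)
        print(f"{n_beams} beams at {range_m:.0f} m: {gap_m:.2f} m between lines, "
              f"about {PEDESTRIAN_HEIGHT_M / gap_m:.1f} lines across a pedestrian")
```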

To lower the weight, and especially the cost, of lidar systems, a new class of lidar steering is emerging. Rather than using large mechanical motions to scan the lidar’s field of view, so-called solid-state lidar methods use non-mechanical technologies. For example, several companies (including Luminar, Innoviz and Infineon, as well as Velodyne) are developing microelectromechanical systems (MEMS) for lidar steering. As their name implies, MEMS are not strictly non-mechanical, but the motion is performed by microscopic mirrors, so these systems can be small and light and consume very little power. They are still somewhat sensitive to vibration and shock, but one of their major advantages is low cost, because MEMS mirror devices have already been produced in large quantities for cinema and consumer projectors.
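Part of the reason a microscopic mirror can cover a useful field of view is that reflection doubles angles: tilting a mirror by α turns the reflected beam by 2α. The ±10 degree tilt below is a hypothetical figure for illustration, not a specification of any product named above, and the sketch ignores any extra optics a real MEMS lidar might use to widen its field.

```python
import math

def optical_deflection_deg(mechanical_tilt_deg: float) -> float:
    """A mirror tilted by some angle turns the reflected beam by twice that angle."""
    return 2.0 * mechanical_tilt_deg

def spot_offset_m(mechanical_tilt_deg: float, range_m: float) -> float:
    """Sideways offset of the beam spot on a flat target facing the sensor."""
    return range_m * math.tan(math.radians(optical_deflection_deg(mechanical_tilt_deg)))

MAX_TILT_DEG = 10.0  # hypothetical +/-10 deg mechanical tilt of a MEMS micromirror

full_sweep_deg = 2 * optical_deflection_deg(MAX_TILT_DEG)  # from -20 deg to +20 deg
print(f"+/-{MAX_TILT_DEG:.0f} deg of tilt sweeps a {full_sweep_deg:.0f} deg optical field")
print(f"at 50 m the spot reaches {spot_offset_m(MAX_TILT_DEG, 50.0):.1f} m off-axis")
```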

A different steer

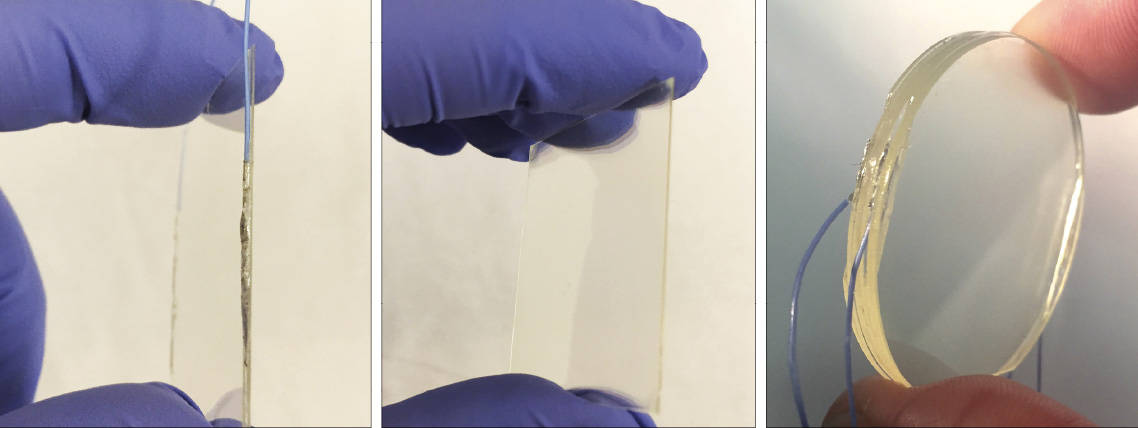

Another alternative is to use liquid crystal polarization gratings (LCPGs) to perform the beam-steering action. This relatively new method is truly non-mechanical, with no moving parts: it relies on the ubiquitous liquid crystal (LC) technology found in a wide range of devices, from smartphone screens to car dashboards. Each LCPG stage consists of a passive patterned LC grating plus an active LC switch. By changing the polarization handedness of the light entering the grating, the switch determines whether the grating diffracts that light into the +1 or the –1 diffraction order, with >99.5% efficiency. Since each switch/grating stage can be less than 1 mm thick, stages can be cascaded for discrete, highly repeatable steering in 2D over a >90 degree field of regard (the total area that a movable sensor can “see”). The LC response times range from tens of microseconds to a few milliseconds and, unlike with mechanical devices, there is no “ringing” while the device settles between movements.
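The cascading idea can be sketched with the grating equation. At normal incidence, each stage adds mλ/Λ to the sine of the beam angle, where Λ is the grating period and m is +1 or –1 depending on the handedness set by the switch. The 1550 nm wavelength, the three stages and their binary-weighted periods are hypothetical; the sketch covers a single steering axis and ignores stage spacing and losses. Three stages with two states each give 2³ = 8 discrete angles.

```python
import itertools
import math

def lcpg_angles_deg(wavelength_m: float, periods_m: list[float]) -> list[float]:
    """All output angles reachable by a cascade of +/-1-order LCPG stages
    (one axis, normal incidence, stage spacing and losses ignored)."""
    angles = set()
    for orders in itertools.product((+1, -1), repeat=len(periods_m)):
        # Each stage adds m * wavelength / period to the sine of the beam angle.
        s = sum(m * wavelength_m / p for m, p in zip(orders, periods_m))
        if abs(s) <= 1.0:  # beyond this the diffracted order does not propagate
            angles.add(round(math.degrees(math.asin(s)), 6))
    return sorted(angles)

WAVELENGTH_M = 1.55e-6  # assumed 1550 nm lidar source
# Hypothetical periods chosen so each stage deflects twice as far as the previous one.
base_sine = math.sin(math.radians(2.5))
periods = [WAVELENGTH_M / (base_sine * 2 ** k) for k in range(3)]

angles = lcpg_angles_deg(WAVELENGTH_M, periods)
print(len(angles), "discrete angles:", [f"{a:.1f}" for a in angles])
```

Binary weighting makes every combination of switch states land on a distinct angle; covering a wider field of regard would, under this model, mean adding stages or using finer periods.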

One major advantage of LCPG steering is that these devices can be made with very large apertures – 15 cm or more. Because most targets scatter the illumination in all directions, only a small fraction of it returns toward the lidar, so a larger aperture collects more of the returning signal. In addition, LCPGs steer the lidar unit’s excitation and collection optics at the same time, so signal is only collected from the area that is being actively illuminated. This contrasts with MEMS systems, which steer a narrow illumination beam but collect from a fixed wide-aperture area – meaning that they not only collect more background light but are also susceptible to collecting confusing multi-bounce signals from cars and other reflective objects. More efficient signal collection and background rejection mean that LCPG-based lidar can use lower-power sources, potentially lowering its cost and weight compared with MEMS-based systems.
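The benefit of a large aperture follows from an idealized link budget for a diffusely (Lambertian) reflecting target that fills the beam: P_r ≈ P_t ρ A_r / (πR²), so the collected power scales with aperture area, or diameter squared. The 1 W transmit power, 10% reflectivity, 100 m range and 2.5 cm comparison aperture are hypothetical; the 15 cm figure comes from the text. Atmospheric and optical losses are ignored.

```python
import math

def received_power_w(transmit_w: float, reflectivity: float,
                     aperture_diameter_m: float, range_m: float) -> float:
    """Echo power from an extended Lambertian target that fills the beam.

    Idealized: P_r = P_t * rho * A_r / (pi * R^2), with receiver area
    A_r = pi * D^2 / 4. Atmospheric and optical losses are ignored.
    """
    area_m2 = math.pi * aperture_diameter_m ** 2 / 4.0
    return transmit_w * reflectivity * area_m2 / (math.pi * range_m ** 2)

P_T_W = 1.0     # hypothetical 1 W peak transmit power
RHO = 0.1       # hypothetical 10% reflective target
RANGE_M = 100.0

small = received_power_w(P_T_W, RHO, 0.025, RANGE_M)  # hypothetical 2.5 cm receiver
large = received_power_w(P_T_W, RHO, 0.15, RANGE_M)   # 15 cm LCPG aperture from the text
print(f"2.5 cm aperture: {small:.3e} W")
print(f"15 cm aperture:  {large:.3e} W ({large / small:.0f}x more signal)")
```

Read the other way, in this idealized model the larger aperture would reach the same echo power with about 1/36 of the transmit power, which is the kind of trade-off that allows lower-power sources.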

Although LCPG technology is relatively new, at Boulder Nonlinear Systems we have already used these devices to steer flash lidar, synthetic aperture lidar and Doppler lidar – mostly for aerospace applications, where large apertures not only increase the collected signal but, crucially, allow lidar excitation beams to be more highly collimated, increasing their accuracy and range. We are also using LCPGs to refocus microscope systems (LCPGs can be made in lens form too), where modern objectives are too large to work well with other remote focusing methods.
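Why a larger aperture allows a more collimated beam can be illustrated with ideal Gaussian-beam optics: the far-field divergence half-angle is λ/(πw₀), so a wider beam waist w₀ spreads less. This sketch assumes a 1550 nm source, a hypothetical 10 km aerospace range, and compares a 1 cm waist radius with a 7.5 cm waist radius roughly filling a 15 cm aperture; aperture truncation and atmospheric effects are ignored.

```python
import math

def beam_radius_m(waist_m: float, wavelength_m: float, distance_m: float) -> float:
    """1/e^2 radius of an ideal Gaussian beam at a distance z from its waist."""
    rayleigh_range_m = math.pi * waist_m ** 2 / wavelength_m
    return waist_m * math.sqrt(1.0 + (distance_m / rayleigh_range_m) ** 2)

WAVELENGTH_M = 1.55e-6  # assumed 1550 nm source
DISTANCE_M = 10_000.0   # hypothetical 10 km aerospace range

for waist_m in (0.01, 0.075):  # hypothetical small beam vs one roughly filling a 15 cm aperture
    divergence_urad = WAVELENGTH_M / (math.pi * waist_m) * 1e6
    spot_diameter_m = 2 * beam_radius_m(waist_m, WAVELENGTH_M, DISTANCE_M)
    print(f"waist radius {waist_m * 100:.1f} cm: divergence {divergence_urad:.1f} urad, "
          f"spot diameter at 10 km {spot_diameter_m:.2f} m")
```

A smaller spot at range concentrates the illumination on the intended target, which is one way the larger aperture can translate into better accuracy and range.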

These initial applications in aerospace and microscopy are much more sensitive to aperture, weight and vibration than to cost. However, LCPGs have the same potential to be made cheaply in volume as regular LC devices, and therefore show promise for more cost-sensitive lidar applications such as self-driving cars. Their lifetimes and ability to withstand thermal cycling will still have to be characterized and optimized, but their truly non-mechanical nature means they may be well suited to the rugged, high-vibration environments of future machine vision.