A quirk of human vision known as the vergence-accommodation conflict has emerged as one of the thorniest problems in virtual reality optics. Margaret Harris assesses strategies for solving it

When the Eyephone (no, that isn’t a typo) made its debut in Silicon Valley in 1987, it was meant to be the wave of the future. This early virtual reality (VR) headset was the brainchild of the computer scientist and technology philosopher Jaron Lanier, who popularized the term “virtual reality” and became, with his company VPL Research, one of the field’s pioneers. But despite the Eyephone’s impressive pedigree and futuristic appeal, its flaws soon became apparent. Its headset consisted of a pair of 3-inch-wide liquid-crystal displays, placed near the focal length of a pair of Fresnel lenses and held in place by a headband. Bulky and awkward to wear, it gave users the rough equivalent of 6/60 vision. Worse, it gave them headaches, nausea and dizziness that sometimes persisted for hours after they had left the virtual world behind. And to top it off, a complete Eyephone system set early adopters back almost $50,000 – in late 1980s dollars.

More than 30 years later, and towards the end of a day-long VR symposium held in San Francisco as part of the 2018 Photonics West conference, Lanier reflected on that first, failed dawn of the VR era. “There’s this crazy thing that in the history of technology, sometimes the first people who come into the field can see further,” he mused. Early VR systems garnered some significant successes, Lanier added, noting that his wife’s life was recently saved by a doctor who trained in a surgical simulator. But when it came to the Eyephone and one of its spin-offs, the PowerGlove, he didn’t mince his words. “It was total crap – are you kidding?” he said, laughing. “We sold thousands of PowerGloves and let us say they were not necessarily great.”

Three decades of intermittent innovation and investment have ironed out many of the Eyephone’s flaws. Consumer devices such as the Oculus Rift VR headset are relatively compact and retail for hundreds of dollars, not thousands. Refresh rates on VR displays often exceed 60 Hz, far better than the handful of frames per second typical of the late 1980s and early 1990s. But reports of user discomfort have not gone away, and as companies such as Microsoft, Google and Intel pour money into VR, it is becoming apparent that some of the technology’s limitations have as much to do with the physics of the human eye as they do with device engineering and design.

Real eyes, virtual world

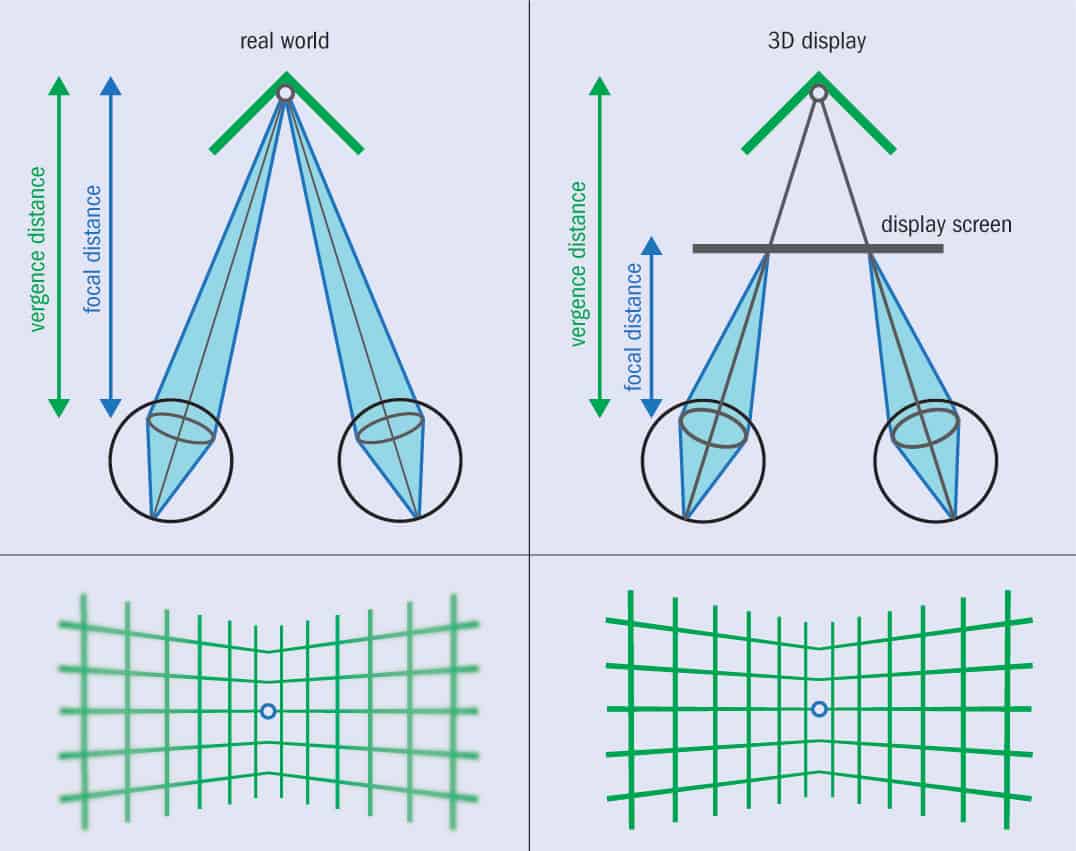

“Hold your finger up in front of you and look at it,” directs Gordon Love, a physicist who heads the computer science department at Durham University in the UK. “When you do that, a number of things have to happen in your eyes. First of all, you have to focus on your finger. Then you have to verge – that is, point your eyes towards it.” In the real world, Love explains, the location at which your eyes focus, or accommodate, is the same as where they point, or verge: your finger, in this case. In a VR display, though, regardless of whether you are examining a virtual object up close, or marvelling at the lush scenery in the background, your eyes are always focused on the display itself, which is typically a few centimetres away (see figure). Vergence and accommodation are therefore decoupled, which is not the normal state of affairs. “You’re effectively forcing the eye to do something that it never has to do in the real world,” Love says.
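The size of the mismatch Love describes can be put into numbers. The sketch below is illustrative only: it assumes a typical interpupillary distance of 63 mm, measures focus in dioptres (the reciprocal of distance in metres), and uses hypothetical function names and distances that do not come from any headset manufacturer.

```python
import math

def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two lines of sight when both eyes point at a target.

    ipd_m is the interpupillary distance; 63 mm is an assumed typical value.
    """
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))

def accommodation_dioptres(distance_m):
    """Focusing demand for a target at the given distance (1 / metres)."""
    return 1.0 / distance_m

def conflict_dioptres(vergence_distance_m, focal_distance_m):
    """Mismatch between where the eyes point and where they focus."""
    return abs(accommodation_dioptres(vergence_distance_m)
               - accommodation_dioptres(focal_distance_m))

# Real world: the finger is both the vergence and the focus target.
real = conflict_dioptres(0.5, 0.5)

# Headset: a virtual finger at 0.5 m, with focus held at an assumed 2 m.
virtual = conflict_dioptres(0.5, 2.0)
```

In the real-world case the mismatch is zero by construction. In the headset case it stays non-zero for every virtual distance other than the one at which the optics hold the eyes' focus.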

The vergence-accommodation conflict, as it’s known, is not the only source of eyestrain and nausea among VR users. However, decades of research on VR and human vision – dating back to the Eyephone era and even before it – show that it is a major contributor. It is also a problem that industry scientists are working on in earnest. At the Photonics West symposium, Douglas Lanman of Oculus Research – the R&D division of the company behind the Rift headset – placed it third on his list of challenges for VR developers, after improving the resolution of displays and widening their field of view. “Vergence-accommodation is a good research topic, because focusing in a headset is way down developers’ list of priorities,” Lanman explains, adding that the conflict needs to be addressed before VR can achieve its full potential.

Computing tricks

Proposed solutions to the vergence-accommodation conflict come in many forms. Of these, the computational approach is the simplest. To understand how it works, try staring at your finger again. In the real world, if you do this, your finger will be in focus, while everything in the background will be blurred. In a typical VR display, though, all objects appear to be in focus regardless of where your eyes are pointing. The makers of 3D films – who, like VR developers, simulate depth by providing slightly different views of a scene to each eye – correct for this computationally, by rendering the important elements in each scene sharply while blurring the background. “Look at a still image from the film Avatar,” Love suggests. “You’ll see that the main character is in focus, while other parts are blurred. And 99% of the time, that’s fine – at least for a film.”
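How much blur a renderer might add to the background can be estimated with a standard small-angle approximation: the angular size of the blur is roughly the pupil diameter multiplied by the defocus in dioptres. The sketch below assumes a 4 mm pupil and uses a hypothetical function name; it is not taken from any film or VR rendering pipeline.

```python
import math

def blur_angle_arcmin(object_m, focus_m, pupil_m=0.004):
    """Approximate angular diameter of the blur for an out-of-focus object.

    Small-angle approximation: blur (radians) = pupil diameter (m) x defocus (D).
    pupil_m = 4 mm is an assumed value; real pupils vary with lighting.
    """
    defocus_dioptres = abs(1.0 / object_m - 1.0 / focus_m)
    return math.degrees(pupil_m * defocus_dioptres) * 60.0

# Eyes focused on a finger at 0.5 m, background scenery at 5 m.
background_blur = blur_angle_arcmin(object_m=5.0, focus_m=0.5)

# The finger itself is at the focus distance, so it stays sharp.
finger_blur = blur_angle_arcmin(object_m=0.5, focus_m=0.5)
```

A renderer following this idea would blur each object by an amount that grows with its dioptric distance from the chosen focus point.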

For VR, however, the problem is more complex, because users want to explore and interact with virtual environments and objects rather than passively consuming a pre-recorded scene. Some VR developers have tried blurring images selectively, depending on where the user is looking, but Pierre-Yves Laffont, a computer-vision expert at VR start-up Lemnis Technologies, explains that computational tricks either don’t affect how our eyes accommodate, or they come with significant drawbacks, such as making the entire image blurry. “It’s a cosmetic change,” he says. “It does not really solve the problem.”

Optics to the rescue

The alternative is to tackle the vergence-accommodation conflict optically, by creating VR systems that more closely mimic how light behaves in the real world. One approach, first suggested in the mid-1990s, is to use multiple displays, each at a different focal plane, to show different parts of a virtual scene. Unfortunately, the additional displays add bulk, and because they are stacked in front of each other, contrast is often lost. “In optics, there is no free lunch and there is no Moore’s Law,” warns Jerry Carollo, an optical architect at Google. “In software, you can do anything, but every time you add an optical adjustment, you’re adding weight and affecting the comfort.”

Another approach is to replace the passive, single-focus optical components found in most VR displays with adaptive multifocal optics. This is the idea that drew Love, whose background is in adaptive optics for astronomy applications, into VR. In 2009 his group worked with Martin Banks’ vision-science lab at the University of California, Berkeley to develop a switchable lens that could change its focal length in less than a millisecond. By placing this lens between the user’s eye and a display, and then rapidly cycling the lens through a handful of different focal lengths, they produced a more realistic accommodation experience, with test subjects reporting fewer negative visual effects.

The main disadvantage with this system, Love says, is that it places strict requirements on the user’s head position. “When your head moves around in real life, you start to see around objects,” he explains. “In our display, when you do that all you see is these different focal planes kind of moving apart, which is really horrible – much worse than the thing you’re trying to correct.” In lab experiments, test subjects bite down on a bar to help keep their heads in place; whether that level of control is possible in a standard VR head-mounted display is, Love says, “an open question”.


A more practical option, at least in the near term, might look something like the prototype that Lemnis Technologies showed off at Photonics West in January 2018. This Singapore-based start-up was founded in 2017, and its team has developed software that can determine, in real time, the optimal location for the focus in each frame of a virtual scene. The Lemnis prototype also incorporates an infrared eye-tracker to monitor where the user is looking, and this information is fed to mechanical actuators that adjust the position of one or more lenses inside the headset – thereby giving users a more “real-world” visual experience. The company is working with undisclosed partners to incorporate its technology into a consumer device, and Laffont, its co-founder and CEO, is naturally confident about its future. “I believe that eye-tracking is a major component of the next generation of VR headsets,” he says, adding that many start-ups in the eye-tracking field have recently been acquired by tech giants such as Facebook, Google and Apple.
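The general idea of steering a lens from eye-tracking data can be sketched with textbook geometry. The code below is not Lemnis's method, which the company has not described in detail here. It assumes the tracker reports each eye's inward rotation in degrees, a 63 mm interpupillary distance and an ideal thin lens, and all function names are hypothetical.

```python
import math

def fixation_depth_m(left_inward_deg, right_inward_deg, ipd_m=0.063):
    """Distance at which the two lines of sight cross.

    Angles are each eye's horizontal rotation towards the nose.
    Returns infinity if the eyes are parallel or diverging.
    """
    total = (math.tan(math.radians(left_inward_deg))
             + math.tan(math.radians(right_inward_deg)))
    if total <= 0:
        return math.inf
    return ipd_m / total

def display_to_lens_distance_m(virtual_image_m, focal_length_m):
    """Display-to-lens spacing that puts the virtual image at the given depth.

    From the thin-lens equation for a virtual image: 1/s - 1/d = 1/f.
    """
    if math.isinf(virtual_image_m):
        return focal_length_m  # image at infinity: display sits at the focal plane
    return (virtual_image_m * focal_length_m) / (virtual_image_m + focal_length_m)

# Both eyes rotated inwards by about 3.6 degrees: fixation near 0.5 m.
depth = fixation_depth_m(3.605, 3.605)

# With an assumed 40 mm lens, the actuator would move the display to this spacing.
spacing = display_to_lens_distance_m(depth, focal_length_m=0.040)
```

With these assumed numbers the spacing changes by only a few millimetres between a near target and a distant one, so an actuator in such a scheme would need fine positional control rather than long travel.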

Wide-open field

Another strategy for resolving the vergence-accommodation conflict eschews adaptive optics in favour of light-field technology. Conceptually, light-field VR displays work by mapping multiple views of virtual objects onto a single display, with two or more light rays emerging from each incremental area on the object and manifesting as pixels on a screen. The technology is based on a system demonstrated in 1908 by the optical physicist (and Nobel laureate) Gabriel Lippmann, who used an array of small lenses to record a scene as it appeared from many different locations, and additional arrays to reconstruct composite images for each eye. The result was a 3D image that accurately incorporated parallax and perspective shifts – an attractive attribute for VR developers.

There is, however, a problem: the more rays you add, the more pixels you need in your display. “You end up building a low-resolution display, and that’s a big trade to make,” Love explains. Nevertheless, several prototype light-field VR systems have been demonstrated, notably by Nvidia Research. There is also a strong feeling in the industry that light-field technology needs to be perfected for both VR and its newer cousin, augmented reality (AR), to have mass-market applications. In AR, users interact with virtual and real objects simultaneously, and the vergence-accommodation conflict is, if anything, even more bothersome than it is in VR. “Imagine you want to put a virtual object in your hand just in front of you,” explains Laffont. “With today’s AR devices, either your hand will appear sharp or the virtual object will appear sharp, but you won’t be able to focus on both of them at the same time.”
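The pixel budget behind the resolution trade-off Love describes comes down to simple division. The sketch below assumes a lenslet-style design in which each point of the image is served by a fixed grid of views. The panel size and view counts are made-up illustrative numbers, not the specification of any real prototype.

```python
def spatial_resolution(panel_w, panel_h, views_x, views_y):
    """Image resolution left over once the panel's pixels are shared among views.

    Each image point consumes views_x * views_y panel pixels, one per ray direction.
    """
    return panel_w // views_x, panel_h // views_y

# A hypothetical 1280 x 720 panel with an 8 x 8 grid of views per image point.
width, height = spatial_resolution(1280, 720, 8, 8)

# Doubling the number of views in each direction halves the resolution again.
width_16, height_16 = spatial_resolution(1280, 720, 16, 16)
```

Under these assumptions the perceived image shrinks from 1280 × 720 to 160 × 90 with an 8 × 8 grid of views, which is why adding rays is such an expensive way to improve focus cues.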

At the Photonics West symposium, Ed Tang, co-founder and CEO of Avegant, a VR developer, was bullish about light-field’s capacity to get around such problems – and, eventually, to dominate the crowded field of VR technology. “Light-field, at the end of the day, is required for the experiences users demand,” he declared. “I would even go so far as to be sceptical that VR will become accepted without light-field.” Others are more cautious. Lanman, who was a senior research scientist at light-field specialists Nvidia until 2014, called light-field displays “academically interesting but very far away”. Laffont echoes this view. “I think light-field displays are really exciting and definitely the future,” he says. “The problem is that the current technology does not allow you to achieve a high field of view and a high resolution together with a low computational expense. And if we have to trade the resolution or the field of view, that will prevent certain applications from being realized.”

For now, both light-field-based VR devices and their adaptive-optics counterparts still look more like modern Eyephones than, well, modern iPhones. A case in point is the Avegant Glyph, a light-field-based video headset that entered the market in 2016. One prominent reviewer, Dieter Bohn of tech site The Verge, summed up the Glyph as “ridiculous-looking, kind of uncomfortable, and pretty awesome”. Two years later, that description remains valid for most – perhaps all – VR headsets available to consumers. “VR initially took off much more slowly than expected,” says Laffont. “We are still at the very early stage of its history.” Despite significant progress and a promising future, the field of VR has a way to go before the virtual becomes truly real.