Particle physicists are planning the successor to CERN’s Large Hadron Collider – but how will they deal with the deluge of data from a future machine and the proliferation of theoretical models? Michela Massimi explains why a new scientific methodology called “model independence” could hold the answer

It’s been an exciting few months for particle physicists. In May more than 600 researchers gathered in Granada, Spain, to discuss the European Particle Physics Strategy, while in June CERN held a meeting in Brussels, Belgium, to debate plans for the Future Circular Collider (FCC). This giant machine – 100 km in circumference and earmarked for the Geneva lab – is just one of several different projects (including those in astroparticle physics and machine learning) that particle physicists are working on to explore the frontiers of high-energy physics.

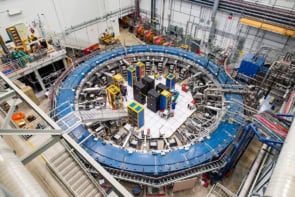

CERN’s Large Hadron Collider (LHC) has been collecting data from vast numbers of proton–proton collisions since 2010, first at energies of 7 and 8 TeV during its first run and then at 13 TeV during its second. These data enabled scientists on the ATLAS and CMS experiments at the LHC to discover the Higgs boson in 2012, and have also shed light on other vital aspects of the Standard Model of particle physics.

But, like anything else, colliders have a lifespan, and it is already time to plan the next generation. With the amount of information obtainable from the current machine expected to peak with LHC Run 3 in 2023, a major upgrade has already begun during the present shutdown period. The High-Luminosity LHC (HL-LHC), which will run from the mid-2020s to the mid-2030s, will collide protons at a centre-of-mass energy of 14 TeV and gather datasets 10 times larger than those of the current LHC, allowing much more precise measurements.
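Why should a tenfold larger dataset bring higher precision? A back-of-the-envelope sketch, assuming the uncertainty is dominated by Poisson counting statistics (real analyses are often limited by systematic uncertainties, which do not shrink this way): the relative uncertainty on N counted events goes as 1/√N, so 10 times more data shrinks it by a factor of √10. The event counts and function names below are hypothetical, chosen only to show the scaling.

```python
import math

def relative_stat_uncertainty(n_events: float) -> float:
    """Poisson counting: the uncertainty on N events is sqrt(N), so the relative uncertainty is 1/sqrt(N)."""
    return 1.0 / math.sqrt(n_events)

def statistical_gain(dataset_ratio: float) -> float:
    """Factor by which the purely statistical relative uncertainty shrinks when the dataset grows by dataset_ratio."""
    return math.sqrt(dataset_ratio)

# Hypothetical event counts, used only to illustrate the 1/sqrt(N) scaling.
n_now = 1_000
n_later = 10 * n_now

print(f"{relative_stat_uncertainty(n_now):.1%} -> {relative_stat_uncertainty(n_later):.1%}")
print(f"improvement factor: {statistical_gain(10):.2f}")
```

This prints 3.2% -> 1.0% and an improvement factor of 3.16: ten times the data buys roughly a threefold gain in statistical precision, not a tenfold one.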

Particle physicists hope the upgraded machine will increase our understanding of key fundamental phenomena – from strong interactions to electroweak processes, and from flavour physics to top-quark physics. But even the HL-LHC will only take us so far, which is why particle physicists have been feverishly hatching plans for a new generation of colliders to take us to the end of the 21st century.

Japan has plans for the International Linear Collider (ILC), which would begin by smashing electrons and positrons at 250 GeV, but could ultimately achieve collisions at energies of up to 1 TeV. China has a blueprint for a Circular Electron Positron Collider that will reach energies of up to 250 GeV – with the possibility of converting the machine at some later point into a second-generation proton–proton collider. At CERN, two options are currently on the table: the Compact Linear Collider (CLIC) and the aforementioned FCC (see box).

CERN’s Future Circular Collider

Along with the Compact Linear Collider (CLIC), CERN has another option for the next big machine in particle physics. The Future Circular Collider (FCC) would require a new 100 km tunnel to be excavated below France and Switzerland, almost four times longer than the LHC’s. The FCC would run in two phases. The first, starting in around 2040, would be dedicated to electron–positron collisions (FCC-ee) in the newly built tunnel. The second phase, running from around 2055 to 2080, would involve dismantling FCC-ee and reusing the same tunnel to carry out proton–proton collisions (FCC-hh) at energies of up to 100 TeV. The update of the European Particle Physics Strategy is due to conclude by publishing priorities for the field, which will eventually inform the CERN Council’s decision on whether to move forward with a technical design report.

Setting targets

Now, if you’re not a particle physicist, you might be wondering why we want to upgrade the LHC, let alone build even more powerful colliders. What exactly do we hope to achieve from a scientific point of view? And, more importantly, how can we most effectively achieve those intended goals? Having attended the Brussels FCC conference in June, I was struck by one thing. While recent news stories about next-generation colliders have concentrated on finding dark matter, the real reason for these machines lies much closer to home. Quite simply, there is lots we still don’t know about the Standard Model.

A key task for any new collider will be to improve our understanding of Higgs physics (see box below for just one example), allow very precise measurements of a number of electroweak observables, improve sensitivity to rare phenomena, and expand the discovery reach for heavier particles. Electron–positron colliders could, for example, more precisely measure the relevant interactions of the Higgs boson (including interactions not yet tested). Future proton–proton colliders, meanwhile, could serve as a “Higgs factory” – with the Higgs boson becoming an “exploration tool” – to study, among other things, how the Higgs interacts with itself and perform high-precision measurements of rare decays.

Understanding the Higgs mass

The Higgs boson was discovered at CERN in 2012, but we’re still not sure why it has such a low measured mass of just 125 GeV. Known as the “naturalness problem”, this puzzle is linked to the fact that the Higgs boson is the manifestation of the Higgs field, with which virtual particles in the quantum vacuum interact. As a result of all these interactions, the squared mass of the Higgs boson receives additional energy contributions. But for the Higgs mass to be as low as 125 GeV, the contributions from different virtual particles at different scales have to cancel out precisely. As CERN theoretical physicist Gian Giudice once put it, this “purely fortuitous cancellation at the level of 10³², although not logically excluded, appears to us disturbingly contrived” (arXiv:0801.2562v2). He likens the situation to balancing a pencil on its tip: perfectly possible in principle, but highly unlikely in practice, as you have to fine-tune its centre of mass so that it lies precisely above its tip. Indeed, Giudice says that the precise cancellation required for the measured mass of the Higgs boson to be 125 GeV is like balancing a pencil as long as the solar system on a tip a millimetre wide.

A further task would be to shed light on neutrinos. Physicists are keen to understand the mechanism that generates the masses of the three species of neutrino (electron, muon and tau), a question linked to the physics of the early universe. And then, of course, there’s the physics of the dark sector, which includes the search for a variety of possible dark-matter candidates. Any evidence for these at new colliders would have to be combined and cross-checked with data from direct dark-matter detection searches and from a variety of cosmological observations.

More generally, there’s the physics that goes Beyond the Standard Model (BSM). BSM physics includes (but is not limited to) familiar supersymmetric (SUSY) models, in which every boson (a particle with integer spin) has a fermion “superpartner” (with half-integer spin), while each fermion has a boson superpartner. The BSM landscape extends well beyond SUSY, and features a number of possible exotic options, ranging from possible new resonances at high energy to extremely weakly coupled states at low masses.

An interesting methodological approach

But one thing is certain in this bewildering array of unanswered questions: how we approach these challenges matters as much as what kind of machine we should build. And that’s why it’s crucial for particle physicists – and for philosophers of science such as myself – to discuss scientific methodology. Theorists have tried to find a successor to the Standard Model, but it’s still the best game in town. New methodological approaches are therefore vital if we want to make progress.


I can understand why most current research is still firmly grounded in the Standard Model, despite the many theoretical options currently explored for BSM physics. Why jump ship if the vessel’s still going strong, even though we don’t fully understand how it functions? The trick will be to learn how to navigate the vessel in the uncharted, higher-energy waters, where no-one knows if – or where – new physics might be. And this trick has a name: “model independence”.

Model independence, which is nowadays routinely used in particle physics and cosmology, has been prompted by two main changes. The first is the immense wealth of data emerging from particle colliders such as the LHC and from cosmology projects such as the Dark Energy Survey. This new era of “big data” is forcing scientists to do fundamental research in a way that is no longer railroaded along pre-defined paths but is more open-ended, more exploratory and more sensitive to data-driven methods and phenomenological approaches.

The other main factor behind the growth of model-independent approaches has been the proliferation of theoretical models designed to capture possible new BSM physics. Similarly, the increasing popularity of model independence in cosmology has been driven by the many different modified-gravity models proposed to tackle open questions about the standard ΛCDM model, which postulates the existence of cold dark matter (CDM) and dark energy (Λ).

But what exactly is model independence? Surely scientific inquiry depends on models all the way down – so how can scientific inquiry ever be independent of a model? Well, first let’s be clear what we mean by models. To understand how, say, a pendulum swings, you model it using the principles of Newtonian physics. But you also have to devise what philosophers of science call “representational models” associated with the theory. In the case of a pendulum, these representational models are built using Newtonian physics to represent specific phenomena, such as the displacement of the pendulum from equilibrium.

The reason why we build such models is to see if a real system matches the representational model. In the case of a pendulum, we do this by collecting data about how it swings (model of the data) and checking for any systematic error in the way it functions (model of the experiment). But if models are so ubiquitous even for something as simple as a pendulum, how can there be any “independence from models” when it comes to particle physics or cosmology? And why does this question even matter?
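The pendulum example can be made concrete with a toy sketch. It assumes a small-angle Newtonian pendulum released from rest, g = 9.81 m/s², and invented length, amplitude and noise values; all function names are hypothetical. `pendulum_angle` plays the role of the representational model, `toy_measurements` generates synthetic data (no real measurements are involved), and comparing residuals against the model flags a deliberately injected constant offset, a stand-in for the kind of systematic error a model of the experiment is meant to catch.

```python
import math
import random

G = 9.81  # m/s^2, assumed gravitational acceleration

def pendulum_angle(t, length_m, theta0_rad):
    """Representational model: small-angle Newtonian pendulum released from rest,
    theta(t) = theta0 * cos(sqrt(g / L) * t)."""
    return theta0_rad * math.cos(math.sqrt(G / length_m) * t)

def toy_measurements(times, length_m, theta0_rad, noise_rad, offset_rad=0.0, seed=1):
    """Toy model of the data: model values plus Gaussian read-out noise and an
    optional constant offset standing in for a systematic error (e.g. a misaligned scale)."""
    rng = random.Random(seed)
    return [pendulum_angle(t, length_m, theta0_rad) + offset_rad + rng.gauss(0.0, noise_rad)
            for t in times]

def residuals(measured, times, length_m, theta0_rad):
    """Data minus representational model, point by point."""
    return [m - pendulum_angle(t, length_m, theta0_rad) for m, t in zip(measured, times)]

def reduced_chi_square(res, noise_rad):
    """About 1 if the data agree with the model within the noise; much larger otherwise."""
    return sum((r / noise_rad) ** 2 for r in res) / len(res)

times = [0.05 * i for i in range(200)]   # s
L, THETA0, NOISE = 1.0, 0.1, 0.002       # m, rad, rad (all hypothetical)

clean = residuals(toy_measurements(times, L, THETA0, NOISE), times, L, THETA0)
biased = residuals(toy_measurements(times, L, THETA0, NOISE, offset_rad=0.01), times, L, THETA0)

for label, res in (("clean", clean), ("biased", biased)):
    mean_res = sum(res) / len(res)
    print(f"{label}: mean residual {mean_res:+.4f} rad, reduced chi^2 {reduced_chi_square(res, NOISE):.1f}")
```

The clean run should give a reduced χ² close to 1 and a mean residual near zero; the offset run gives a mean residual close to 0.01 rad and a much larger χ², signalling a systematic mismatch between data and model rather than random scatter.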

Model independence matters in fields where research is more open-ended and exploratory in nature. It is designed to “bracket off” – basically ignore – certain well-entrenched theoretical assumptions of the Standard Model of particle physics or the ΛCDM model in cosmology. Model independence makes it easier to navigate your way through uncharted territories – higher energies in particle physics, or modified gravity in the case of cosmology.

To see what this “bracketing-off” means in practice, let’s look at how it’s been used to search for a particular example of BSM physics at the LHC through the phenomenological Minimal Supersymmetric Standard Model (pMSSM). Like any SUSY model, this model assumes that each quark has a “squark” superpartner and each lepton has a “slepton” superpartner – particles that are entirely hypothetical as of today. Involving only a handful of theoretical parameters – 11 or 19 depending on which version you use – the pMSSM gives us a series of “model points” that are effectively snapshots of physically conceivable SUSY particles, with an indication of their hypothetical masses and decay modes. These model points bracket off many details of fully fledged SUSY theoretical models. Model independence manifests itself in the form of fewer parameters (masses, decay modes, branching ratios) that are selectively chosen to make it easier for experimentalists to look for relevant signatures at the LHC and to exclude, with a high confidence level, a large class of these hypothetical scenarios.

In 2015, for example, members of the ATLAS collaboration at CERN summarized their experiment’s sensitivity to supersymmetry after Run 1 at the LHC in the Journal of High Energy Physics (10 134). Within the boundaries of a series of broad theoretical constraints for the pMSSM-19, infinitely many model points are physically conceivable. Out of this vast pool of candidates, as many as 500 million of them were originally randomly selected by the ATLAS collaboration. Trying to find experimental evidence at ATLAS for any of these hypothetical particles under any of these physically conceivable model points is like looking for a needle in a haystack. So how do particle physicists tackle the challenge?

What members of the ATLAS collaboration did was to gradually trim down the sample, step by step reducing the 500 million model points to just over 310,000 that satisfied a set of broad theoretical and experimental constraints. By sampling enough model points, the researchers hoped that some of the main features of the full pMSSM might be captured. The final outcome of this sampling takes the form of conceivable SUSY sparticle spectra that are then checked against ATLAS Run 1 searches. And as more data were brought in at LHC Run 2, more and more of these conceivable candidate sparticles were excluded, leaving only live contenders (which nonetheless remain purely hypothetical as of today).
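The trimming procedure described above is, at heart, a scan-and-filter algorithm: sample many model points, keep those that satisfy broad constraints, then let each round of searches remove whole regions of parameter space at once. The toy sketch below illustrates only that logic. Its three parameters (hypothetical squark, gluino and neutralino masses), their ranges, the constraint and the “mass reach” values are all invented for illustration; they are not the pMSSM-19 parameters or the ATLAS selection.

```python
import random

def sample_model_points(n, seed=0):
    """Draw n toy model points uniformly from a hypothetical 3-parameter space (masses in GeV)."""
    rng = random.Random(seed)
    return [
        {
            "m_squark": rng.uniform(100.0, 4000.0),
            "m_gluino": rng.uniform(100.0, 4000.0),
            "m_neutralino": rng.uniform(1.0, 2000.0),
        }
        for _ in range(n)
    ]

def passes_broad_constraints(point):
    """Hypothetical stand-in for broad theoretical/experimental constraints:
    the neutralino must be the lightest of the three toy sparticles."""
    return point["m_neutralino"] < min(point["m_squark"], point["m_gluino"])

def excluded_by_search(point, mass_reach_gev):
    """Hypothetical search: every point whose lighter coloured sparticle lies
    below the search's mass reach is excluded in one go."""
    return min(point["m_squark"], point["m_gluino"]) < mass_reach_gev

def surviving(points, mass_reach_gev):
    """Keep only the points a given search has not excluded."""
    return [p for p in points if not excluded_by_search(p, mass_reach_gev)]

points = sample_model_points(100_000)
viable = [p for p in points if passes_broad_constraints(p)]
alive_after_first_run = surviving(viable, mass_reach_gev=1500.0)                   # hypothetical reach
alive_after_second_run = surviving(alive_after_first_run, mass_reach_gev=2000.0)  # hypothetical reach

print(len(points), len(viable), len(alive_after_first_run), len(alive_after_second_run))
```

Note that no individual point is tested “by hand”: a single cut from a search discards every point in an entire region, which is the needle-in-a-haystack shortcut the article describes.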

In other words, instead of testing a multitude of fully fledged SUSY theoretical models one by one to see if any data emerging from LHC might support one of them, model independence recommends looking at fewer, indicative parameters in simplified models (such as pMSSM-19). These are models that have been reduced to the bare bones, so to speak, in terms of theoretical assumptions, and are therefore more amenable to being cross-checked with empirical data.

The main advantage of model independence is that if no evidence is found for these simplified models, an entire class of fully fledged and more complete SUSY theoretical models can be discarded at a stroke. It is like searching for a needle in a haystack without having to turn and twist every single straw, but instead being able to discard big chunks of hay at a time. This is, of course, only one example. Model independence manifests itself more profoundly and pervasively in many other aspects of contemporary research in particle physics: from the widespread use of effective field theories to the increasing reliance on data-driven machine-learning techniques, just to mention two other examples.

Cosmological concerns

Model independence has led to a controversy surrounding cosmological measurements of the Hubble constant, which tracks the expansion rate of the universe. The story began in 2013 when researchers released the first data from the European Space Agency’s Planck mission, which had been measuring anisotropies in the cosmic microwave background since 2009. When these data were combined with the ΛCDM model of the early universe, cosmologists found a relatively low value for the Hubble constant, which was confirmed by further Planck data released in 2018 to be just 67.4 ± 0.5 km/s/Mpc.

Problems arose when estimates for the Hubble constant were made using data from pulsating Cepheid variable stars and type Ia supernovae, which offer more model-independent probes of the Hubble constant. These model-independent measurements led to a revised value of the Hubble constant of 73.24 ± 1.74 km/s/Mpc (arXiv:1607.05617). Additional research has only increased the “tension” between the value of the Hubble constant from Planck’s model-dependent early-universe measurements and that from more model-independent late-universe probes. In particular, members of the H0LiCOW (H0 Lenses in COSMOGRAIL’s Wellspring) collaboration, using a further set of model-independent measurements of light from distant quasars that is gravitationally lensed by foreground galaxies, have recently measured the Hubble constant at 73.3 ± 1.7 km/s/Mpc.

And to complicate matters still further, in July Wendy Freedman from the University of Chicago and collaborators used measurements of luminous red giant stars to give another new value of the Hubble constant at 69.8 ± 1.9 km/s/Mpc, which is roughly halfway between Planck and the H0LiCOW values (arXiv:1907.05922). More data on these stars from the upcoming James Webb Space Telescope, which is due to launch in 2021, should shed light on this controversy over the Hubble constant, as will additional gravitational lensing data.
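The size of the “tension” and the “roughly halfway” claim can be checked with simple arithmetic. The sketch below uses only the values quoted in this section and assumes independent, symmetric Gaussian errors, so the difference between two measurements is expressed in units of their combined uncertainty, √(σ₁² + σ₂²). This is a simplification: published analyses use asymmetric errors and fuller statistical treatments. The function and dictionary names are illustrative.

```python
import math

# Values quoted in the text, in km/s/Mpc: (central value, symmetric 1-sigma error).
PLANCK = "Planck (CMB + LambdaCDM)"
MEASUREMENTS = {
    PLANCK: (67.4, 0.5),
    "Cepheids + type Ia supernovae": (73.24, 1.74),
    "H0LiCOW (lensed quasars)": (73.3, 1.7),
    "Red giants (Freedman et al.)": (69.8, 1.9),
}

def tension_sigma(a, b):
    """|difference| divided by the combined error, assuming independent Gaussian errors."""
    (va, ea), (vb, eb) = a, b
    return abs(va - vb) / math.hypot(ea, eb)

def fraction_between(low, high, value):
    """Position of value on the line from low (0.0) to high (1.0)."""
    return (value - low) / (high - low)

for name, m in MEASUREMENTS.items():
    if name != PLANCK:
        print(f"{name}: {tension_sigma(MEASUREMENTS[PLANCK], m):.1f} sigma from Planck")

frac = fraction_between(
    MEASUREMENTS[PLANCK][0],
    MEASUREMENTS["H0LiCOW (lensed quasars)"][0],
    MEASUREMENTS["Red giants (Freedman et al.)"][0],
)
print(f"Red-giant value sits {frac:.0%} of the way from Planck to H0LiCOW")
```

With these inputs the Cepheid/supernova and H0LiCOW values each sit a little over 3σ from Planck (3.2σ and 3.3σ), while the red-giant value sits about 1.2σ from Planck and 41% of the way towards H0LiCOW, consistent with “roughly halfway”.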

Let’s get philosophical

Model independence is an example of what philosophers like myself call “perspectival modelling”, which, metaphorically speaking, involves modelling hypothetical entities from different perspectives. It means looking at the range of allowed values for key parameters and devising exploratory methods, for example in the form of simplified models (such as pMSSM-19) that scan the space of possibilities for what these hypothetical entities could be. It is an exercise in conceiving the very many ways in which something might exist, with an eye to discovering whether any of these conceivable scenarios is in fact objectively possible. Ultimately, the answer lies with experimental data. If no supporting evidence is found, large swathes of this space of possibilities can be ruled out in one go, following a more data-driven, model-independent approach.

As a philosopher of science, I find model independence fascinating. First, it makes clear that philosophers of science must respond to – and be informed by – the specific challenges that scientists face. Second, model independence reminds us that scientific methodology is an integral part of how to tackle the challenges and unknowns lying ahead, and advance scientific knowledge.

Model independence is becoming an important tool for both experimentalists and theoreticians as they plan future colliders. The Conceptual Design Report for the FCC, for example, mentions how model independence can help “to complete the picture of the Higgs boson properties”, including high-precision measurements of rare Higgs decays. Such model-independent searches are a promising (albeit obviously not exclusive or privileged) methodological tool for the future of particle physics and cosmology. Done wisely, the scientific exercise of physically conceiving particular scenarios becomes an effective strategy to find out what there might be in nature.