The discovery that the universe is expanding with increasing speed may have bagged a Nobel prize, but some cosmologists are still not sure if dark energy is the explanation for it. Keith Cooper looks at the arguments for and against this mysterious phenomenon

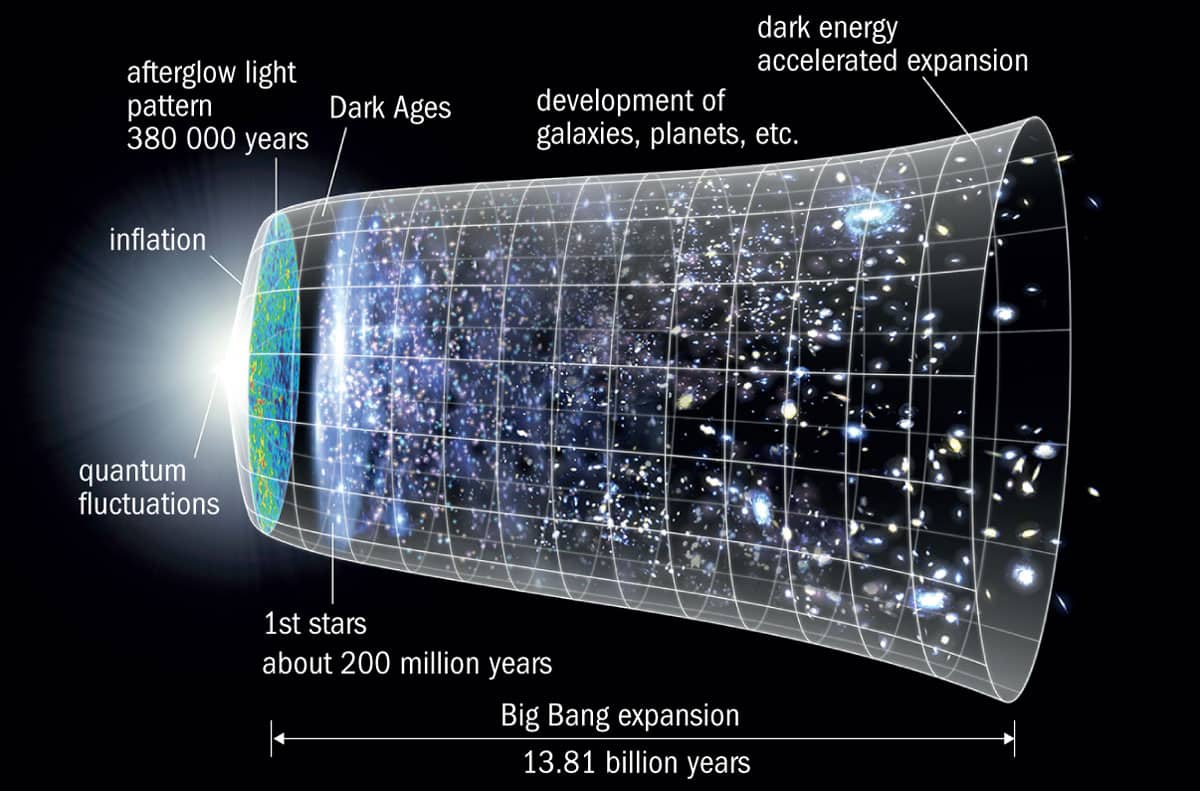

It was the most profound discovery in cosmology since the detection of the faint radio hiss from the cosmic microwave background (CMB). In 1998 two teams of researchers, locked in a fierce rivalry to be the first to measure the expansion rate of the universe, independently announced that they had arrived at the same startling conclusion: the expansion of the universe is not slowing down as expected, but is speeding up. The discovery led to the 2011 Nobel Prize for Physics being awarded to the two team leaders – Brian Schmidt of the High-Z Supernova Search Team and Saul Perlmutter of the Supernova Cosmology Project – as well as Schmidt’s teammate, Adam Riess, who was the first to plot the data and realize that the universe is not behaving as it should.

The discovery was a “terrifying” moment, admits Riess, who is now at Johns Hopkins University in the US. At the time he was fresh out of his PhD and charged with plotting the supernovae data that the High-Z team had been collecting. Because all type Ia supernovae – the thermonuclear destruction of a white dwarf star – explode with very similar luminosities and light curves, they can be calibrated to act as “standardized candles” by which cosmic distances can be measured. Comparing those distances to the redshift of the supernovae tells us how fast the universe is expanding. Riess’s conclusion that the data implied the expansion is accelerating was so counter-intuitive that he was sure he’d made a mistake.
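
The standard-candle idea can be made concrete with the distance modulus, m − M = 5 log10(d / 10 pc), which links an object's apparent magnitude m and absolute magnitude M to its distance d. The sketch below is illustrative only: the peak absolute magnitude of −19.3 is an assumed round figure rather than a value from the supernova teams, and the function name is hypothetical. It also ignores dust extinction, light-curve corrections and redshift effects.

```python
# Assumed peak absolute magnitude of a standardized type Ia supernova.
# Illustrative value only; it is not taken from the article.
ASSUMED_PEAK_ABS_MAG = -19.3


def luminosity_distance_mpc(apparent_mag, absolute_mag=ASSUMED_PEAK_ABS_MAG):
    """Distance in megaparsecs from the distance modulus m - M = 5 log10(d / 10 pc)."""
    distance_modulus = apparent_mag - absolute_mag
    distance_pc = 10.0 ** (distance_modulus / 5.0 + 1.0)
    return distance_pc / 1.0e6


if __name__ == "__main__":
    # Fainter supernovae (larger apparent magnitude) are further away.
    for m in (14.0, 19.0, 24.0):
        print(f"m = {m:4.1f}  ->  d ~ {luminosity_distance_mpc(m):10.1f} Mpc")
```

Every five magnitudes of dimming corresponds to a tenfold increase in distance, which is why small errors in the calibration matter so much for the most distant supernovae.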

However, when Perlmutter’s team revealed that it had found the same thing, history was made, with the discovery of the accelerated expansion documented in two breakthrough papers – one by the Supernova Cosmology Project (Astrophys. J. 517 565) and the other by the High-Z team (Astron. J. 116 1009). To explain this acceleration, an old idea was reborn: Einstein’s cosmological constant, which describes the energy density of empty space – and with it the notion of “dark energy”. The latest measurement from the Planck mission suggests the cosmos is made of roughly 68% dark energy, along with 5% ordinary matter and 27% dark matter. However, the exact nature of dark energy remains mysterious.

The supporting arguments

In the subsequent 20 years, two major advances have been made. The first is independent confirmation that the observed acceleration is a real effect. This confirmation has come from several different avenues, in particular the baryonic acoustic oscillations (BAOs) in the CMB. These oscillations originate from the early universe, less than 380,000 years after the Big Bang, when space was filled with an ocean of plasma dense enough to allow acoustic waves to oscillate through. The sound waves had peaks and troughs represented by hot and cold spots – the anisotropies – in the CMB, and shared a characteristic wavelength. Over the aeons the hot spots became the nucleation sites for matter to condense into galaxies and, as the universe expanded, so did the characteristic wavelength. Today, the average distribution of galaxies reflects the size of the BAOs in the CMB. Just as type Ia supernovae are “standard” candles, so BAOs are standard rulers by which to measure the expansion of the universe. They support the finding that the expansion is accelerating.

Further evidence that the acceleration is real centres on the geometry of space itself, which the CMB indicates is “flat”. In such a universe, Euclidean geometry applies: if you draw two parallel lines and extend them to infinity, they will always remain parallel, whereas in a curved universe the lines would diverge or converge. A flat universe has a critical matter/energy density that requires 68.3% of all the mass and energy in the universe to be made from dark energy, a value that can be derived from the magnitude and spacing of the acoustic peaks in the CMB.
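
For a sense of scale, the critical density of a flat universe follows from the standard relation ρ_crit = 3H₀² / (8πG). The sketch below is a rough illustration, not the CMB analysis itself: it assumes the Planck value of the Hubble constant quoted later in this article (67.3 km/s/Mpc), uses rounded physical constants, and the function and variable names are hypothetical.

```python
import math

G = 6.674e-11               # gravitational constant, m^3 kg^-1 s^-2 (rounded)
METRES_PER_MPC = 3.0857e22  # metres in one megaparsec (rounded)


def critical_density(h0_km_s_mpc):
    """Critical density in kg/m^3 for a Hubble constant given in km/s/Mpc."""
    h0_si = h0_km_s_mpc * 1.0e3 / METRES_PER_MPC  # convert to s^-1
    return 3.0 * h0_si ** 2 / (8.0 * math.pi * G)


H0_ASSUMED = 67.3             # km/s/Mpc; assumed here for illustration
DARK_ENERGY_FRACTION = 0.683  # share of the critical density quoted above

rho_crit = critical_density(H0_ASSUMED)
rho_dark_energy = DARK_ENERGY_FRACTION * rho_crit

if __name__ == "__main__":
    print(f"critical density    ~ {rho_crit:.2e} kg/m^3")
    print(f"dark-energy density ~ {rho_dark_energy:.2e} kg/m^3")
```

The result is of the order of 10⁻²⁶ kg per cubic metre, equivalent to a few hydrogen atoms per cubic metre of space.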

The other big development of the last two decades, says Riess, is the measurement of dark energy’s equation of state, which describes the ratio of its pressure to its energy density. Because it is causing the expansion to speed up rather than slow down, dark energy is said to have negative pressure, or “tension”, hence the solution to its equation of state has a minus value. In theory, the equation of state for a universe dominated by the cosmological constant would have a solution of –1, but in truth any solution more negative than minus one-third results in a universe that undergoes accelerating expansion.
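
The one-third threshold comes from the acceleration equation of standard Friedmann cosmology. As a sketch, using symbols that are not in the article (a for the scale factor, ρ for the mass density, p for the pressure and w for the equation-of-state parameter), and assuming a single dominant component:

$$
\frac{\ddot{a}}{a} = -\frac{4\pi G}{3}\left(\rho + \frac{3p}{c^{2}}\right),
\qquad
p = w\,\rho\,c^{2}
\;\;\Longrightarrow\;\;
\frac{\ddot{a}}{a} = -\frac{4\pi G}{3}\,\rho\,(1 + 3w)
$$

For positive ρ, the expansion accelerates only when 1 + 3w is negative, that is, when w is more negative than −1/3. The cosmological constant corresponds to w = −1.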

It turns out that the solution to the equation of state for our universe is almost bang on –1 (±0.05). This is exactly the value, to within 5%, that one would expect in a universe dominated by the cosmological constant. On the face of it, this would seem to rule out alternatives to the cosmological constant such as a scalar field called quintessence, in which dark energy varies across time and space. Because the cosmological constant is a fixed value across the universe, it implies that dark energy will always have the same strength. Since it is the energy of space itself, then as space expands more dark energy comes into the universe, causing the expansion to accelerate ever faster. If dark energy is left unchecked, it is a scenario that could ultimately result in a Big Rip that would tear the fabric of space–time apart.

The other side

So, case closed? Not exactly. Dark energy could be merely mimicking the cosmological constant, a scalar field changing so slowly that we have not yet been able to detect it. Or (whisper it quietly) perhaps dark energy does not even exist.

The discovery of what appears to be an accelerating expansion is undisputed, but are we being tricked by nature? Riess is, perhaps surprisingly, open to the possibility. “I don’t think the phenomenon of dark energy has to be real,” he says.

One of the stumbling blocks is the staggering discrepancy between the predicted strength of dark energy and its observed strength. Quantum field theory calculates a value that is 10¹²⁰ times larger than what we observe. If dark energy really were that strong, it would expand space so fast that individual atoms would be separated by vast distances and stars and galaxies would not be able to form. Clearly, we seem to be missing something fundamental.

As such, this discrepancy has led some scientists to consider other, somewhat controversial, possibilities instead. Before we delve into them, it is important to recognize the difference between the accelerating expansion and dark energy. The former has been shown by observations, but the latter is just the interpretation of those observations.

Any interpretation has to take into account all the observations: the supernovae results, the BAOs, the CMB and its acoustic peaks, and the growth of galaxy clusters. Riess has already been involved in a public skirmish along these lines. In 2016 Subir Sarkar of the University of Oxford, Jeppe Nielsen of the Niels Bohr Institute at the University of Copenhagen and Alberto Guffanti of the University of Torino published a paper (Scientific Reports 6 35596) in which they argued that the evidence for dark energy was weaker than had been thought, based on their statistical analysis of data from 740 type Ia supernovae.

Riess disagreed with their assessment. “I think it had serious flaws,” he says, describing their analysis of the supernovae data as “non-standard”. Indeed, a re-analysis of the Sarkar results by David Rubin and Brian Hayden of the Lawrence Berkeley National Laboratory (ApJL 833 L30) demonstrates what they say are errors in Sarkar and colleagues’ analysis. Sarkar, however, disputes this, saying such criticism is “disingenuous”, not only because his team was using a common statistical method called the Maximum Likelihood Estimator, but also because this method does not assume that the standard Λ-CDM (the paradigm of cold dark matter and dark energy) model of the universe is the correct model, and is therefore unbiased. Sarkar is sceptical of this standard cosmological model, saying that it has “never been rigorously tested”.

Another criticism Riess raises of Sarkar’s work is that it did not include all the evidence for accelerating expansion from BAOs, the CMB and so on. “I don’t know why one would ignore all the other confirming evidence,” says a bemused Riess. Sarkar counters this by arguing that some controversial analyses, such as that by Isaac Tutusaus in a 2017 paper in Astronomy and Astrophysics (602 10.1051/0004-6361/201630289), claim to see no evidence for acceleration in BAO data. However, the consensus remains among cosmologists that BAOs are strong evidence for accelerated expansion.

The great voids

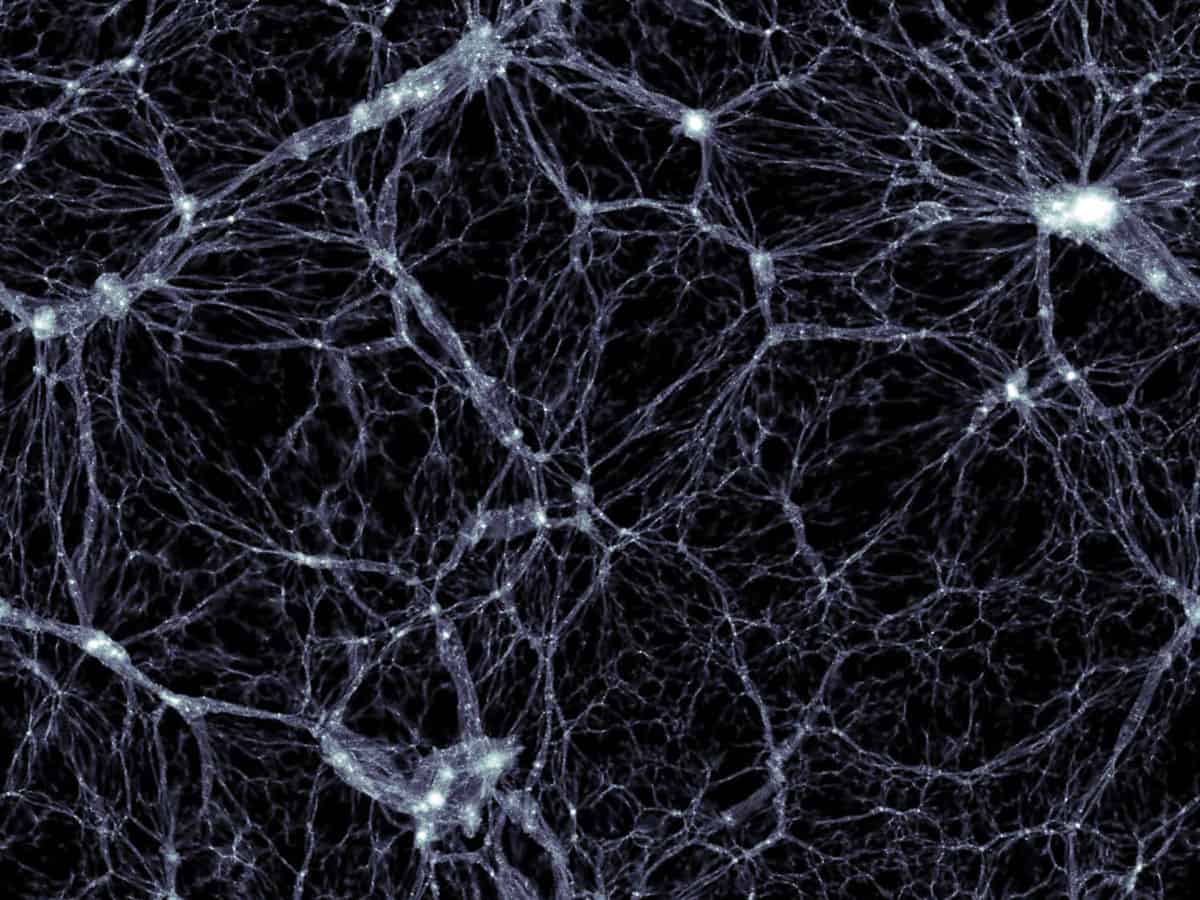

Indeed, challenges to the existence of dark energy often focus on our most precious cosmological models. The Cosmological Principle states that the distribution of matter in the universe is both homogeneous and isotropic. However, on smaller scales matter is lumpy, arranged into galaxies and clusters of galaxies, which form great chains and walls of clusters that stretch hundreds of millions of light-years. Crucially, though, these largest structures, such as the Sloan Great Wall, are not gravitationally bound. In between these islands of matter are vast voids where the density of matter is far lower. Gravity will affect the expansion of space differently depending on whether you are in a cluster or a void.

The $64,000 question, according to István Szapudi of the University of Hawaii, is not whether structure influences the expansion of the universe – “It’s clear that it does,” he says – but what is the size of that effect?

Szapudi co-wrote a paper published in 2017 (MNRAS 469 L1) that argues that the Λ-CDM model fails to take into account the changing structure – manifest in the voids and clusters – as one travels through the universe. Models of the expansion of the universe are typically based around the Friedmann–Lemaître–Robertson–Walker (FLRW) metric. This is an exact solution of Einstein’s field equations of general relativity for an expanding universe consistent with the Cosmological Principle; its evolution is governed by the Friedmann equation, and the curvature of space, which is zero, is the same everywhere. However, using their AvERA algorithm, Szapudi and his colleagues, led by Gábor Rácz of Eötvös Loránd University in Budapest, found that their simulated expansion takes place at different rates depending on the surrounding structure. Because the universe is dominated by voids where the lower gravity allows the universe to expand faster, it is only by averaging all the different rates of expansion that it would seem like the expansion is accelerating.

Long vs short scales

Another contentious alternative to dark energy that also acknowledges structure but takes a different tack to Szapudi and Rácz’s approach, is David Wiltshire’s “timescape cosmology”. Based at the University of Canterbury in New Zealand, Wiltshire is dubious about the validity of the FLRW metric. In particular, he is critical of the fact that in FLRW cosmology, the scales that matter are the largest scales that ignore the coarse graininess of individual galaxies and clusters. “On what scales are matter and geometry coupled by Einstein’s equations?” he asks. “My answer is that short scales take precedence.”

On scales less than 450 million light-years, the universe is lumpy, filled with those voids and clusters that affect space and its expansion differently. The largest voids, at over 160 million light-years across, occupy 40% of the volume of the observable universe in total. Add in all the smaller voids, and they account for more than half the universe, so they have a big say in how the universe appears to expand.

In timescape cosmology, clocks run faster in voids than in more densely populated regions of space. A clock running in the Milky Way would therefore be about 35% slower than the same clock in the middle of a large cosmic void. Billions more years would have passed in voids than in galaxy clusters, and in those extra billions of years there will have been more expansion of space. Averaging the expansion rate across all of space – that is, the voids and the clusters – makes it seem like the expansion is getting faster because the voids dominate.

Back-up needed

It’s mind-blowing stuff, but Riess isn’t ready to down tools and give up researching dark energy just yet. “I don’t really take things like this too seriously until other people can independently verify it,” he says. “People have their pet way of looking at the problem, but in the cases that I’ve seen nobody has been able to reproduce what they have done.”

One of the problems that timescape cosmology currently faces is that it’s not yet as well-developed as models of dark energy. Wiltshire says his group has just begun work tackling the challenge of reducing the BAO data without assuming the FLRW metric, and says that the initial results show promise.

Fitting the heights of the acoustic peaks in the CMB data is even more of a challenge, as it requires rewriting the mathematics that describes the growth of the tiny anisotropies that are the seeds of cosmic structures. To do so with the same accuracy as the FLRW metric relies on something referred to as “backreaction”.

In standard cosmology, the FLRW metric is assumed to exactly describe the average growth of the universe on arbitrarily large scales. However, in a generally inhomogeneous universe as described in timescape cosmology, this is no longer the case. Even if the deviations from homogeneity are small, as the anisotropies of the CMB show, their average growth may not exactly follow the Friedmann equation on large scales. These differences are called backreaction.

“No-one has ever considered backreaction in the primordial plasma [of the CMB] before,” says Wiltshire. “This is a very hard problem, but I doubt anyone else will want to do it unless the Friedmann equation is shown to fail.”

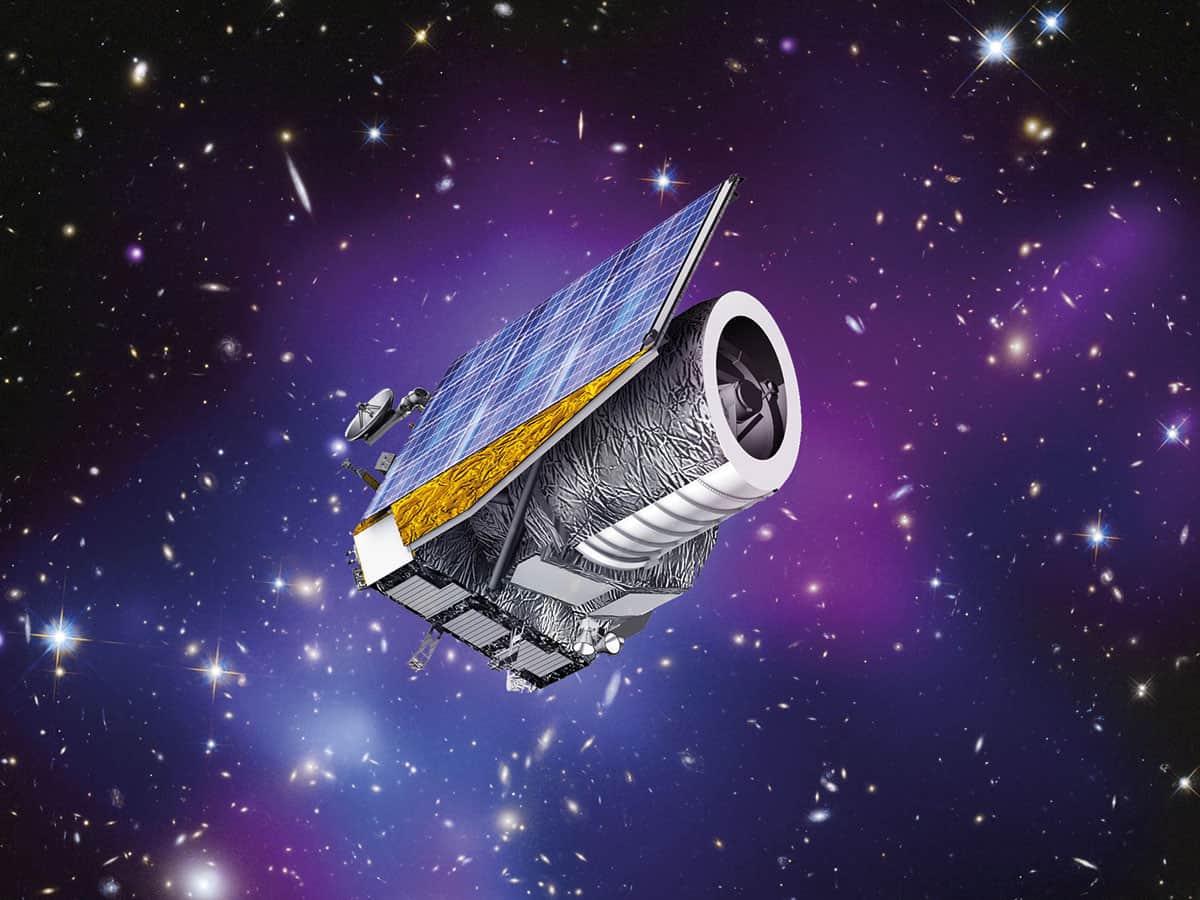

Wiltshire, however, has the FLRW metric in his sights. Euclid, which is a European Space Agency mission launching in the next decade to study dark matter, dark energy and the geometry of space, will be able to put FLRW on the spot using a method developed by Chris Clarkson, Bruce Bassett and Teresa Hui-Ching Ku in 2007. It looks for a relation between the Hubble constant – a measure of the expansion of space – and the luminosity distance (a relation between the absolute and apparent magnitude) of an object. This relation holds only for a universe where the curvature of space is the same everywhere, as per the Friedmann equation. If the test supports the predictions of the FLRW metric, then timescape cosmology is probably wrong. On the other hand, if it disproves the FLRW metric, “it will be game on”, as Wiltshire puts it.

Not so constant constant

The Hubble constant, which is so fundamental to the expansion of space, is also a source of consternation. In 2016 Riess led a team making the most precise measurement of the Hubble constant in the local universe. As with his discovery of the accelerating expansion 20 years ago, Riess made this measurement using type Ia supernovae, initially those that had exploded in galaxies that also host visible Cepheid variable stars – another cosmic yardstick with which to measure stellar distances. By calibrating the supernovae distances with the accurate distances as measured by the Cepheids’ period-luminosity relation, his team then applied that calibration to 300 other type Ia supernovae in more distant galaxies to produce an accurate measurement.

The resulting Hubble constant that Riess’s group measured was 73 km/s/Mpc (in other words, a galaxy recedes 73 kilometres per second faster for every additional megaparsec of distance). However, the measurement of the constant in the local universe seems to differ from that inferred from the very early universe, as measured by the European Space Agency’s Planck mission, which found a value of 67.3 km/s/Mpc.
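
To put numbers on the mismatch, the two values can be fed into Hubble’s law, v = H₀ × d, which is a good approximation only for relatively nearby galaxies. The sketch below uses the two figures quoted above; the function names and the 100 Mpc example distance are illustrative choices, not part of either team’s analysis.

```python
H0_LOCAL = 73.0    # km/s/Mpc, local supernova-based value quoted above
H0_PLANCK = 67.3   # km/s/Mpc, Planck value quoted above


def recession_velocity(distance_mpc, h0):
    """Recession velocity in km/s from Hubble's law, v = H0 * d (low redshift only)."""
    return h0 * distance_mpc


def fractional_gap(h0_a, h0_b):
    """Fractional difference of h0_a relative to h0_b."""
    return (h0_a - h0_b) / h0_b


if __name__ == "__main__":
    d = 100.0  # Mpc, arbitrary example distance
    print(f"local value : {recession_velocity(d, H0_LOCAL):.0f} km/s at {d:.0f} Mpc")
    print(f"Planck value: {recession_velocity(d, H0_PLANCK):.0f} km/s at {d:.0f} Mpc")
    print(f"gap         : {100 * fractional_gap(H0_LOCAL, H0_PLANCK):.1f}%")
```

The gap is only a few per cent, but it matters because each measurement claims an uncertainty smaller than the difference between them.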

Riess draws an analogy with the growth of a human body. A doctor might measure the height of a child and plot that on a growth chart to predict how tall that child will be when they are an adult. The local measurement of the Hubble constant is like measuring the height of the adult, and Planck’s measurement of the Hubble constant is like measuring their height when they were a child. “Our cosmological model, which includes dark energy and dark matter, predicts what the final height of the child will be,” he says. “It doesn’t appear to be correct.”

So why the difference in the Hubble constant at the opposite ends of history? One possibility is that our assumptions about the early universe are wrong. Perhaps dark-matter particles are less stable or interact more than we thought, which would affect the properties of the CMB. Perhaps there was an earlier spurt of dark energy sometime in the first billion years. “We’re all scratching our heads about this, trying to figure out what could cause it,” says Riess.

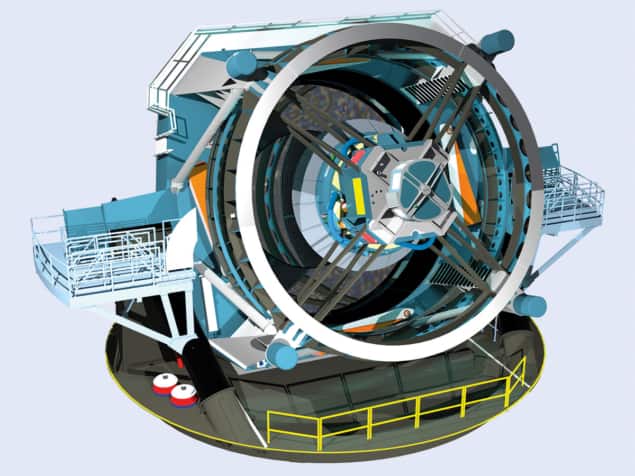

For his part, Szapudi thinks that the discrepancy in the Hubble constant can be explained by a small difference between the standard Λ-CDM model and how the AvERA algorithm depicts the expansion of the universe. “If nothing else, our alternative theory has illustrated that the Hubble constant discrepancy could be a tell-tale sign of a slightly different expansion history,” he says. “Quite independently of the details of our theory, it is an indication that future surveys by Euclid, WFIRST [the Wide-Field Infrared Survey Telescope] and LSST [Large Synoptic Survey Telescope] are likely to find something very interesting when mapping the expansion history.”

Still controversial

Make no mistake, dark energy – be it the cosmological constant or quintessence – is the leading theory with plenty of observational evidence to support it. The alternatives remain highly controversial. Yet those nagging questions – like that huge 10¹²⁰ discrepancy – just won’t go away. Upcoming surveys will either solidify dark energy theory’s position further, or produce a surprise by pulling the rug out from under it. With the Dark Energy Survey – an international collaboration using a 570-million-pixel camera called DECam on the Blanco 4 m telescope at the Cerro Tololo Inter-American Observatory in Chile – releasing its first data from a survey of 300 million galaxies, these are exciting times.

“What makes this a really fun field to be in is that I don’t know what the next big step will be,” concludes Riess. “We’re just in the middle of our initial reconnaissance, and I don’t think we should be surprised by surprises.”