“Hello, World!” is a simple, commonly used program for introducing people to a programming language. Saying “Hello, World!” out loud, however, while instinctive for most adults, involves a complex series of respiratory, phonetic and resonance tasks to produce sound and articulate the correct words.

Speech originates in the brain, with neural signals from the cerebral cortex effortlessly coordinating the vocal tract muscles to communicate. However, for paralysed patients who have lost the ability to articulate speech (a condition called anarthria) due to a brain injury or neurological disorder, verbal communication is especially challenging. This can restrict their autonomy and reduce their quality of life, even though their cognitive function remains intact.

A team of neuroscientists and engineers at the University of California San Francisco (UCSF) used deep-learning and natural-language models to decode the brain waves of a clinical trial participant with severe paralysis and anarthria as he attempted to produce words and sentences. The study, published in the New England Journal of Medicine, showed a promising median decoding rate of 15.2 words per minute (with a median word error rate of 25.6%). This is approximately three times faster than the computer-based typing interface that the participant normally relies on for communication.
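For readers unfamiliar with the metric, word error rate is conventionally computed as the word-level edit distance (substitutions, insertions and deletions) between the decoded sentence and the intended sentence, divided by the number of intended words. The sketch below illustrates that standard definition; the function name and example sentences are illustrative assumptions, not the study's evaluation code.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words.

    Assumes a non-empty reference sentence.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


# One wrong word out of four intended words gives a 25% word error rate.
print(word_error_rate("bring my glasses please", "bring my nurse please"))  # 0.25
```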

“We think that an ideal speech neuroprosthesis would allow a paralysed user to voluntarily and autonomously engage the system to communicate and interact with their personal devices,” explains David Moses, a postdoctoral fellow at UCSF and one of the lead authors of the study.

Recording the brain – restoring the speech link

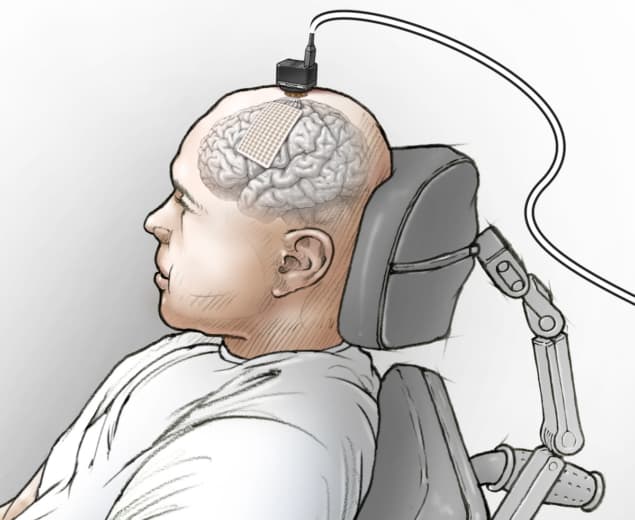

To capture the cortical signals associated with speech in real time, the team used a high-density rectangular electrode array (67 × 35 × 0.51 mm) implanted on the surface of the brain to record electrical activity in several cortical regions involved in speech formulation.

During speech tasks, a percutaneous connector secured to the participant’s cranium transmits the analogue brain signals – using a detachable link and cable – from the electrode array to a computer for signal processing and filtering.

Translating the filtered, speech-correlated cortical signals into words restored the participant’s ability to communicate in real time. Throughout the 81-week study period, the neural implant provided highly stable cortical signals without the need for frequent recalibration, a desirable property for long-term neuroprosthetic applications.

Decoding speech – words library

The researchers used a set of 50 common English words as the vocabulary from which to form grammatically and semantically correct sentences. The participant attempted to produce individual words that appeared on a monitor and, in a subsequent task, to string words together into sentences such as “hello how are you”, “I like my nurse” and “bring my glasses please”.

A variation of the speech tasks required the participant to formulate a unique response to prompts displayed on the monitor, actively engaging him in a conversation.

The neural signals recorded throughout the isolated-word task were used to train word detection and classification models using deep learning. During sentence decoding, the word error rate using only the deep-learning models was 60.5%. When accounting for the next-word probabilities using a natural-language model, the word error rate dropped below 30%, making the neuroprosthetic suitable for real-time speech decoding.
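To see why next-word probabilities help, consider a toy decoder that combines per-word classifier probabilities with a language model and searches for the most likely word sequence using the Viterbi algorithm. This is a minimal sketch under stated assumptions: the bigram language model, the four-word vocabulary, all probability values and the function name `viterbi_decode` are hypothetical and chosen for illustration; the article does not describe the study's actual models at this level of detail.

```python
import math


def viterbi_decode(emissions, bigram, vocab, floor=1e-6):
    """Return the most likely word sequence.

    emissions: one dict per detected word, mapping word -> classifier probability.
    bigram:    dict mapping (previous_word, next_word) -> P(next | previous).
    floor:     small probability used for any pair or word not listed.
    """
    # best[w] = (log-probability of the best path ending in w, that path)
    best = {w: (math.log(emissions[0].get(w, floor)), [w]) for w in vocab}
    for probs in emissions[1:]:
        new_best = {}
        for w in vocab:
            # Pick the predecessor that maximises path score plus transition score.
            score, path = max(
                (best[p][0] + math.log(bigram.get((p, w), floor)), best[p][1])
                for p in vocab
            )
            new_best[w] = (score + math.log(probs.get(w, floor)), path + [w])
        best = new_best
    return max(best.values())[1]


vocab = ["bring", "my", "glasses", "nurse"]

# Hypothetical classifier outputs for three attempted words ("bring my glasses").
emissions = [
    {"bring": 0.70, "my": 0.10, "glasses": 0.10, "nurse": 0.10},
    {"bring": 0.05, "my": 0.40, "glasses": 0.05, "nurse": 0.50},  # classifier favours the wrong word
    {"bring": 0.05, "my": 0.05, "glasses": 0.60, "nurse": 0.30},
]

# Hypothetical next-word probabilities.
bigram = {
    ("bring", "my"): 0.80, ("bring", "nurse"): 0.05,
    ("my", "glasses"): 0.50, ("my", "nurse"): 0.40,
    ("nurse", "glasses"): 0.05,
}

# Classifier alone: take the most probable word at each position.
greedy = [max(step, key=step.get) for step in emissions]
print(greedy)                                    # ['bring', 'nurse', 'glasses']
print(viterbi_decode(emissions, bigram, vocab))  # ['bring', 'my', 'glasses']
```

In this toy case the classifier alone mistakes the second word, but because “bring nurse” is an unlikely word pair, the combined search recovers the intended sentence.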

The next step for this technology involves validation with other clinical trial participants. “We need to show that this method of decoding attempted speech from brain activity works with more than just one person and ideally with individuals who have other severe conditions, such as locked-in syndrome,” Moses tells Physics World.