There’s no escaping the laws of physics – even at the movies. Michael Brooks reveals why they’re vital in creating the best possible visual effects

It’s almost ironic that, decades after failing to attend many of his own undergraduate physics lectures, Tim Webber found himself teaching his colleagues the physics they needed to do their job. As chief creative officer at London-based Framestore – one of the world’s leading visual effects (VFX) studios – he’d worked on blockbusters such as Harry Potter and the Goblet of Fire (2005) and The Dark Knight (2008). But it was his Oscar-winning work leading the visual effects on the Alfonso Cuarón movie Gravity (2013) that forced him to share his physics insights.

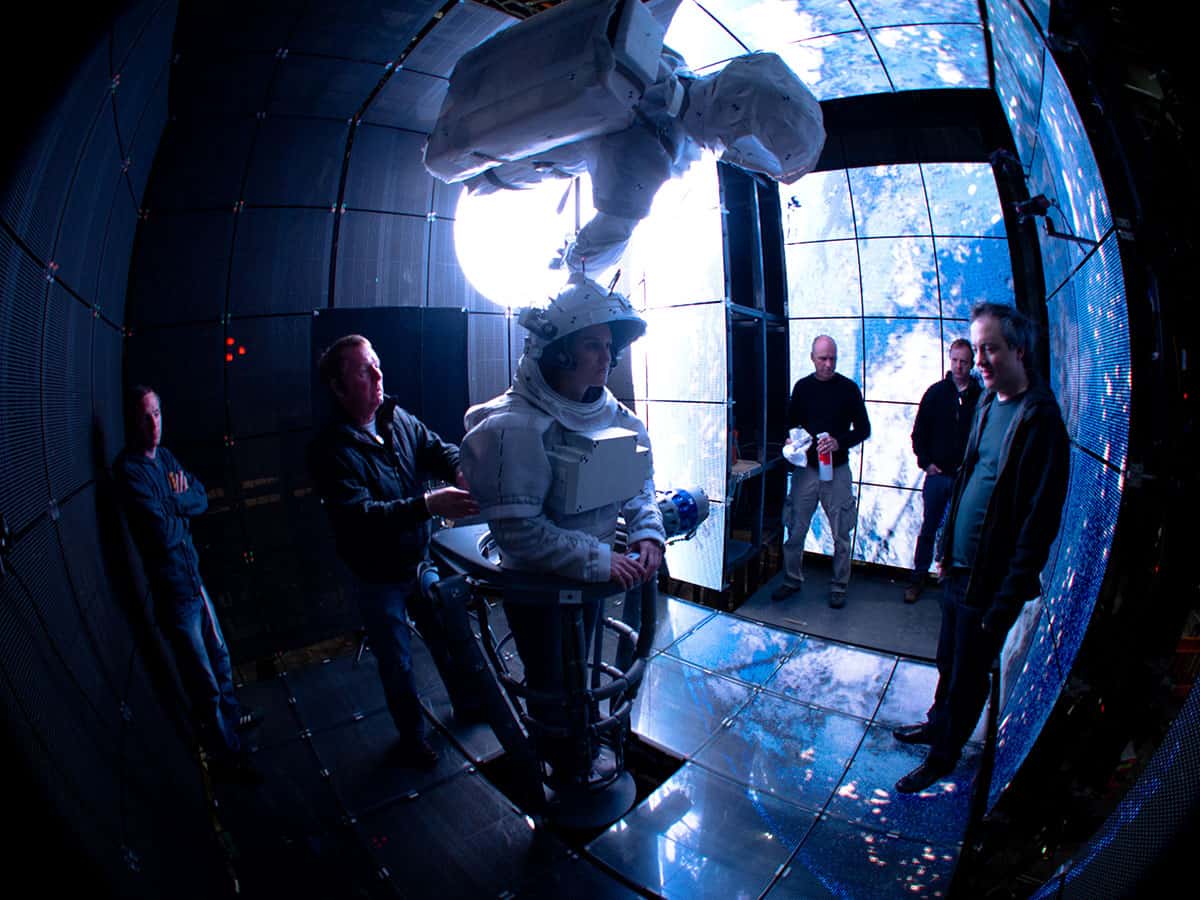

Gravity featured Sandra Bullock and George Clooney as space-shuttle astronauts fighting for their lives in a zero-gravity environment after their craft gets hit by space debris. According to Webber, the problem was that animators spend years developing the skill of creating virtual beings that don’t just look good, but also move in a way that suggests they have weight. “Suddenly,” he says, “they had to animate things that didn’t have weight, but still had mass.” It was a concept that Webber’s team struggled to get their heads around. “So I got them into a room and gave them physics lectures.”

His tutorials paid off. Webber – plus his colleagues Chris Lawrence, Dave Shirk and Neil Corbould – won the 2014 Academy Award for Best Visual Effects for their work on the movie. But then Webber has always had a creative bent. As an undergraduate at the University of Oxford in the early 1980s, he’d spend more time in arts studios than physics labs. Indeed, he’s one of many similarly inclined people who use their training in physics, engineering and maths in the VFX industry. And no wonder. When it comes to recreating a believable world on screen, physics is everything. “Maths and physics feature very heavily,” says Webber’s Framestore colleague Eugénie von Tunzelmann.

Before working at Framestore, von Tunzelmann – an engineer and computer scientist by training – was a visual-effects designer at another London VFX firm called Double Negative. While there, she worked on Christopher Nolan’s epic sci-fi movie Interstellar (2014) and ended up co-authoring a scientific research paper about Gargantua – the black hole that’s the focus of the film (Class. Quant. Grav. 32 065001). She wrote the paper with Paul Franklin, who had co-founded Double Negative, and another colleague from the firm, Oliver James. The trio had collaborated with Caltech physicist Kip Thorne (the fourth author on the paper) to create as realistic a simulation of a supermassive black hole as possible. The simulation won plaudits from physicists and Hollywood critics alike – and led to Franklin sharing the VFX Oscar in 2015.

From humble beginnings

Things have certainly come a long way since the iconic – but less-than-realistic – VFX of King Kong (1933) or Jason and the Argonauts (1963). The transition to computer-generated imagery (CGI) in films such as TRON (1982) and Jurassic Park (1993) was a game-changer, but there was still plenty that was unrealistic about the way light behaved, or creatures moved. This, though, is an industry that never stands still. “New techniques are constantly being developed,” says Sheila Wickens, who originally studied computer visualization and is now a VFX supervisor at Double Negative. “It is very much a continually evolving industry.”

These days, the industry has embraced what is known as “physically based rendering” whereby physics is “hard-wired” into the CGI. Industry-standard software now includes physics-based phenomena, such as accurately computed paths for rays of light. “The complex maths used in ray-tracing is in part based on maths developed for nuclear physics decades ago,” says Mike Seymour, a VFX producer and researcher at the University of Sydney whose background is in mathematics and computer science.


Other phenomena captured by today’s CGI include life-like specular reflection, which means that materials such as cotton and cardboard – which in the past did not reflect light in CGI scenes – are now modelled more realistically. A similar thing has happened with the inclusion of Fresnel reflection so that image-makers can account for the fact that the amount and wavelengths of light reflected depend on the angle at which the light hits a surface. Indeed, it’s no longer acceptable to make things up, or break the laws of physics, says Andrew Whitehurst, VFX supervisor at Double Negative, who won an Oscar in 2016 for his work on Alex Garland’s artificial-intelligence-focused thriller Ex Machina.

“When I began in the industry a little over 20 years ago, we cheated at almost everything,” Whitehurst admits. “Now, surfaces are more accurately simulated, with reflectometer research being implemented into code that describes the behaviour of a variety of materials. Our metals now behave like metals and, by default, obey the laws of energy conservation. Fire and water are generally simulated using implementations of the Navier–Stokes equations: the tools we use in VFX are not dissimilar to those used by researchers needing to compute fluid simulations.”

In fact, Whitehurst says, it’s hard to see how many things can be made any more realistic. “We can blow anything up we want, we can make anything fall down that we want, we can flood anything we want, and we can make things as hairy as we would like.”
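To make the angle dependence of Fresnel reflection mentioned earlier concrete, here is a minimal Python sketch using Schlick's approximation, a widely used shortcut for the Fresnel equations. It is an illustration only, not the method of any studio named in this article. The function name `schlick_reflectance` and the refractive indices (air at 1.0, a glass-like material at 1.5) are assumptions chosen for the example, and a single index is used, so any wavelength dependence is ignored.

```python
import math

def schlick_reflectance(cos_theta, n1=1.0, n2=1.5):
    """Approximate fraction of light reflected at an interface.

    cos_theta is the cosine of the angle between the incoming ray and the
    surface normal. n1 and n2 are illustrative refractive indices (assumed).
    """
    # Reflectance at normal incidence (ray hitting the surface head-on).
    r0 = ((n1 - n2) / (n1 + n2)) ** 2
    # Schlick's approximation: reflectance climbs towards 1 at grazing angles.
    return r0 + (1.0 - r0) * (1.0 - cos_theta) ** 5

# Print the reflected fraction for a few angles of incidence.
for angle_deg in (0, 45, 80, 89):
    cos_theta = math.cos(math.radians(angle_deg))
    print(angle_deg, round(schlick_reflectance(cos_theta), 3))
```

The reflected fraction is small when light strikes the surface head-on and approaches one at grazing angles, which is why even dull materials pick up a sheen when viewed edge-on.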

Much of this accuracy comes out of deliberate research programmes, either in academia or within the studios themselves. The fact that filmmakers can now accurately model curly hair, for example, owes a debt to researchers at the renowned US animation studio Pixar, who, in the early 2010s, developed a physics-based model for the way it moves. Their model is described in a Pixar technical memo (12-03a) entitled “Artistic simulation of curly hair” by a team led by Hayley Iben, a software engineer who originally did a PhD at the University of California, Berkeley, on modelling how cracks grow in mud, glass and ceramics.

Modelling the movement of curly hair for animations, it turns out, is best done by representing hair as a system of masses on springs. The technique Iben and her team developed was used to great effect in the animation of the curly-haired hero Merida of Brave (2012), and later in films such as Finding Dory (2016) and Incredibles 2 (2018). Admittedly, it’s more accurate to model hair as infinitesimally thin elastic rods, but this, the Pixar group says, straightens out the hair too much when in motion. Increase the stiffness to avoid this, and the hair takes on an unrealistic wiriness as the character moves their head.
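The general idea of "masses on springs" can be sketched in a few lines of Python. This is not Pixar's model, which is considerably more elaborate; it is a bare-bones 2D chain of point masses joined by stretch springs, advanced with a semi-implicit Euler step. The function name `step_strand` and every parameter value (stiffness, mass, damping, time step) are hypothetical choices made for illustration.

```python
import math

def step_strand(pos, vel, rest_len, k, mass, damping, g, dt):
    """Advance a 2D chain of point masses joined by springs by one time step.

    pos and vel are lists of [x, y]; particle 0 is the root, pinned in place.
    """
    n = len(pos)
    # Start with gravity and simple velocity damping on every particle.
    forces = [[-damping * vel[i][0], -mass * g - damping * vel[i][1]]
              for i in range(n)]
    # Add a Hooke's-law force for each spring between neighbouring particles.
    for i in range(n - 1):
        dx = pos[i + 1][0] - pos[i][0]
        dy = pos[i + 1][1] - pos[i][1]
        length = math.hypot(dx, dy)
        if length == 0.0:
            continue  # direction undefined; skip this spring for this step
        f = k * (length - rest_len)  # positive when the spring is stretched
        fx, fy = f * dx / length, f * dy / length
        forces[i][0] += fx
        forces[i][1] += fy
        forces[i + 1][0] -= fx
        forces[i + 1][1] -= fy
    # Semi-implicit Euler: update velocity first, then position.
    for i in range(1, n):  # index 0 is the pinned root and never moves
        vel[i][0] += dt * forces[i][0] / mass
        vel[i][1] += dt * forces[i][1] / mass
        pos[i][0] += dt * vel[i][0]
        pos[i][1] += dt * vel[i][1]
    return pos, vel

# A strand of five particles, initially held out horizontally, then released.
rest_len = 0.05
pos = [[i * rest_len, 0.0] for i in range(5)]
vel = [[0.0, 0.0] for _ in range(5)]
for _ in range(5000):  # 5 seconds of simulated time at dt = 0.001
    step_strand(pos, vel, rest_len, k=50.0, mass=0.01, damping=0.02,
                g=9.81, dt=0.001)
print(pos[-1])  # position of the strand's tip after it has swung and settled
```

Because the springs here resist only stretching, the strand has no preferred curl; holding a curly shape in motion requires extra terms, and that is the kind of problem the Pixar memo addresses.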

Such compromises are important. After all, it’s the movie director who gets the final say in whether a visual effect works. “Being able to make something 100% real is actually just a stepping stone to making something cinematic,” says Seymour at the University of Sydney. “It is often critical to be able to create something real, and then depart from it in a believable way.”

Sometimes the departure doesn’t even have to be believable. Back at Framestore in London, von Tunzelmann recalls being asked to create fantasy fire where the flames curled in spirals. “There was no software that can do that, so we wrote a new fluids solver that measured the curl of the field and exaggerated it,” she explains. Everyone in the VFX industry, it seems, wants someone with a background in physics or engineering on their side (see box below).
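The article does not describe how Framestore's solver worked, but the first step von Tunzelmann mentions, measuring the curl of a velocity field, can be sketched with finite differences. The function name `curl_2d`, the grid and the test field below are assumptions for illustration; the sketch uses NumPy's `np.gradient` on a 2D field stored as arrays indexed by row (y) and column (x).

```python
import numpy as np

def curl_2d(u, v, dx, dy):
    """Scalar curl dv/dx - du/dy of a 2D velocity field sampled on a grid.

    u and v are the x- and y-components, indexed as [row (y), column (x)].
    """
    dv_dx = np.gradient(v, dx, axis=1)  # change of v along x (columns)
    du_dy = np.gradient(u, dy, axis=0)  # change of u along y (rows)
    return dv_dx - du_dy

# Illustrative field: rigid rotation about the origin, u = -y, v = x.
xs = np.linspace(-1.0, 1.0, 21)
ys = np.linspace(-1.0, 1.0, 21)
X, Y = np.meshgrid(xs, ys)
u, v = -Y, X
spacing = xs[1] - xs[0]
vorticity = curl_2d(u, v, spacing, spacing)
print(vorticity.min(), vorticity.max())
```

Regions where this quantity is large are where the flow is swirling, so a solver that wants more pronounced spirals has a clear target for where to add rotation.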


The same conflict between aesthetics and reality occurred in Interstellar. Whitehurst, who helped develop some of the VFX techniques used in the film, suggests that the movie was both realistic and not. The team had to rewrite the ray-tracing software to account for the intense curvature of space around a black hole – and also had to dial down Gargantua’s brightness for the audience. “You can see exciting detail in it, and you probably wouldn’t be able to [in reality] because it would be so staggeringly bright,” Whitehurst points out. “Even in things where we are trying to play by the rules, we are going to bend them here and there.”

That human factor

For all the progress in making movie animations look as realistic as possible, one challenge still looms large: how best to represent human beings. Getting human features to look right for movie-goers is about more than just simple physics. We can make a human face that is photographically perfect – the issues of light transport through skin and modelling how wrinkles work have largely been solved. The difficulty is in the subtleties of how a face changes from moment to moment. The problem, Framestore’s Webber reckons, is that evolution has trained the human eye to analyse faces and work out if someone is lying or telling the truth, is healthy or unhealthy.

“Being able to recreate faces and fool the human eye is exceptionally tricky,” he says. And the truth is that we don’t even know what it is we see in a face that tells us something is awry: it’s a subconscious reaction. That means VFX designers don’t know how to fix a wrong-looking face and cannot simply generate a convincing one from scratch, let alone recreate the particular emotion that a character’s face needs to portray at a given moment. “The last thing you want is a character saying ‘I love you’ when the eyes are saying the opposite,” Webber says.

And if you want to make life for a visual-effects designer even harder, try asking them to put a human face underwater, Seymour suggests. “The skin and mass of the face moves with gravity mitigated by buoyancy,” he says. “If they then quickly move a limb underwater near their face, the current produced by the simulated flesh of their hand needs to inform a water simulation that will affect their hair, their face-flesh simulation and any tiny bubbles of air in the water. These multiple things all interact and have to be simulated together.”

For now, animators compromise by combining CGI with motion capture, whereby an actor does their performance with dozens of dots glued to their face so that the image-processing software can track all the muscle movements from the recorded scene. VFX designers then use this information to create a virtual character who might need larger-than-life qualities (quite literally, in the case of the Incredible Hulk). Finally, they overlay some of the original footage to re-introduce facial movements. “This brings back subtleties that you just can’t animate by hand,” Webber says.

It turns out that our eye is more forgiving when it comes to CGI representations of animals. That’s why we have seen a slew of movies led by computer-generated “live-action” animals, from Paddington (2014) to the recent remakes of Disney’s The Jungle Book (2016) and The Lion King (2019). Framestore has recently been working on Disney’s upcoming remake of Lady and the Tramp. Due out later this month, it mixes footage of real and CGI dogs – and the VFX are so realistic that many in-house animators can’t tell which is which, according to Webber. “People in the company have asked why a particular shot is in our showreel when it’s a real dog, and they have to be told – and convinced – that it isn’t!”


Some things in movies, however, will never be truly realistic. Directors in particular want their monsters to move quickly because that’s more exciting. However, the laws of physics dictate that massive creatures move slowly – think how lumbering an elephant is compared to a horse – and our subconscious knows it. So when we watch a giant monster scurry across the screen, it can feel wrong – as if the creature has no mass.

“If Godzilla or a Transformer were actually to try to move at the speed they do in the movies, they would likely tear themselves apart, as F = ma last time I checked,” Whitehurst says. “This is a fight that I always have, and that everyone always has. But ultimately a director wants something exciting, and a Pacific Rim robot moving in something that looks like ultra-ultra-slow motion doesn’t cut it.” It’s a point echoed by Sheila Wickens, who studied computer visualization and animation at Bournemouth University and is now VFX supervisor on the BBC’s flagship Doctor Who series. “We usually start out trying to make something scientifically correct – and then we end up with whatever looks good in the shot,” she says.


That fight between directors wanting visual excitement and animators wanting visual accuracy is what made working on Gravity so special for Webber. He says the film was the highlight of his career to date – but also “by far the most challenging movie” he’s worked on. “All we were filming was the actors’ faces, everything else was made within the computer,” he says. The team had to write computer simulations of what would happen in microgravity when one character is towing another on a jet pack, and the result became a plot point. “We found that they bounced around in a very chaotic and uncontrollable way,” Webber says. “It’s literally down to F = ma, but Alfonso, who was working with us, really loved it and folded that into the script.”

That was quite a moment, Webber says. Suddenly, all his physics lectures – given and received – had been worth it.

Paths to success in the visual-effects industry

If you’re a physicist who wants to work in the visual-effects (VFX) industry, what opportunities are available and what skills do you need – beyond a willingness to lecture your colleagues about the finer points of F = ma?

Yen Yau, a Birmingham-based project manager who trains newcomers in the world of film

Having worked on careers publications for ScreenSkills – the industry-led skills body for the UK’s screen-based creative industries – Yau says there is huge diversity in the paths people can take. “Certainly, physics is going to be more important in some roles, but there are numerous routes in for all types of backgrounds and experiences of applicants.”

Eugénie von Tunzelmann, a visual-effects designer at London VFX firm Framestore

While most workers in the VFX industry don’t have a background in science, technology, engineering and mathematics (STEM) subjects, von Tunzelmann says there is a need for people with skills in those areas. Anyone working in a job that involves programming a software plugin that, say, defines how light bounces off a surface will almost inevitably have a background in physics or optics. If you were trying to model fluid flow, “you’d need to have an understanding of thermodynamics”, she says.

Andrew Whitehurst, Oscar-winning VFX designer

Describing himself as “an artist with an interest in physics and engineering”, Whitehurst says that many people in the VFX industry are physicists or engineers with a creative itch that won’t go away. “I work with people with physics doctorates or engineering doctorates and art-school dropouts but we all meet in the middle. I have no formal background in physics, but I have a reasonable passing knowledge of a lot of physics and engineering principles. I need to know why camera lenses do what they do, for example, so that we can mimic their behaviour.” But scientists working in VFX will have to learn how to compromise, he notes. “We use a lot of science and engineering, but we are not in the business of scientific visualization. I am an enormous respecter of science, but if I can make a more beautiful picture that tells the story better, I’m going to do it.”

Tim Webber, physicist who is now chief creative officer at Framestore in London

Even if you don’t need to understand the maths hidden in the software that the VFX industry uses, Webber feels it helps to understand the principles of what the equations are doing, pointing to his experience early in his career working on a 1996 Channel 4 TV mini-series dramatizing Gulliver’s Travels and starring Ted Danson. “There are lots of small people and big people and we had to work out the angles, where to put the camera, so that the perspective would match what it would match in the other scale. I was using bits of paper and rulers and protractors and calculators.”