A combined analysis of the concentrations of radon and one of its shorter-lived isotopes, known as “thoron”, could potentially allow impending earthquakes to be predicted without interference from other environmental processes, according to new work by researchers in Korea. The team monitored the concentrations of both isotopes for about a year and observed unusually large peaks in the thoron concentration only in February 2011, preceding the Tohoku earthquake in Japan, while large radon peaks were observed in both February and the summer. Based on their analyses, the researchers suggest that the anomalous peaks observed in February were precursory signals of the earthquake that struck the following month.

Earthquake prediction remains the holy grail of geophysics, and an oft-proposed but highly contested method for quake forecasting revolves around the detection of abnormal quantities of certain gaseous tracers in soil and groundwater. These are believed to be released through pre-seismic stress and the micro-fracturing of rock in the period immediately before an earthquake.

Cloudy with a chance of tremors?

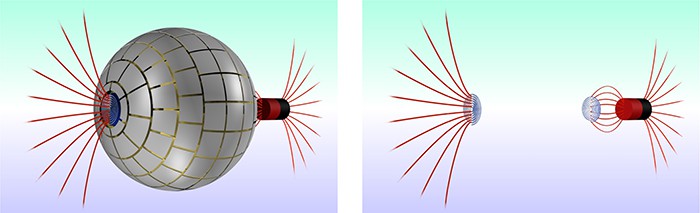

While a number of such precursors have been proposed – including radon, chloride and sulphate – their application to earthquake forecasting has not been realized. The problem is that abnormal concentrations of these tracers can also arise from other environmental processes. For example, signals from radon (222Rn) – an easy-to-detect radioactive gas whose short half-life of 3.82 days makes it highly sensitive to short-term fluctuations – can be disrupted by meteorological phenomena and tidal forces. Radon has no stable isotopes, but it has a host of radioactive ones, including a very short-lived isotope called thoron (220Rn, half-life = 55.6 s).

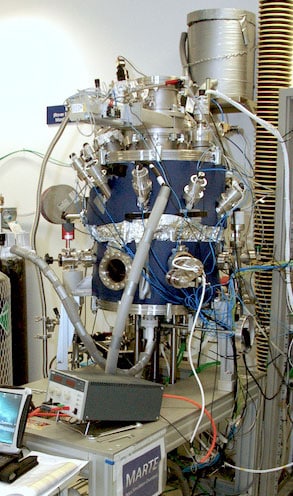

In a new study, Guebuem Kim and Yong Hwa Oh of Seoul National University propose that an underground, dual-tracer analysis – using both radon and thoron – might be able to overcome these limitations. Because thoron has a half-life of only 56 seconds, its measured activity in the stagnant air of a cave should typically be very low if the detector is placed sufficiently far (0.2 m) from the cave floor. “Thoron – through diffusive flows – decays away before it reaches the detector,” explains Kim. “Thus, at an optimum position, only advective flows of thoron – earthquake precursors – reach the detector.”
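Kim's argument can be illustrated with a back-of-the-envelope estimate. The sketch below is not the researchers' own calculation: it assumes simple one-dimensional, steady-state diffusion with radioactive decay in still air, for which the concentration falls off as exp(−z/L) with a diffusion length L = √(D/λ). The half-lives are those quoted above, while the diffusion coefficient `DIFFUSION_COEFF_M2_S` is an assumed, approximate textbook value for radon in air, and the function names are purely illustrative.

```python
import math

THORON_HALF_LIFE_S = 55.6           # from the article
RADON_HALF_LIFE_S = 3.82 * 86400    # 3.82 days, from the article
DIFFUSION_COEFF_M2_S = 1.1e-5       # ASSUMPTION: approximate value for radon in still air
DETECTOR_HEIGHT_M = 0.2             # detector height above the cave floor, from the article


def decay_constant(half_life_s):
    """Radioactive decay constant lambda = ln(2) / half-life, in 1/s."""
    return math.log(2) / half_life_s


def diffusion_length(half_life_s, d=DIFFUSION_COEFF_M2_S):
    """Characteristic distance sqrt(D / lambda) an atom diffuses before decaying."""
    return math.sqrt(d / decay_constant(half_life_s))


def surviving_fraction(height_m, half_life_s, d=DIFFUSION_COEFF_M2_S):
    """Steady-state 1D diffusion with decay: C(z) / C(0) = exp(-z / L)."""
    return math.exp(-height_m / diffusion_length(half_life_s, d))


if __name__ == "__main__":
    for name, half_life in (("thoron", THORON_HALF_LIFE_S), ("radon", RADON_HALF_LIFE_S)):
        length = diffusion_length(half_life)
        fraction = surviving_fraction(DETECTOR_HEIGHT_M, half_life)
        print(f"{name}: diffusion length {length:.3f} m, "
              f"fraction reaching {DETECTOR_HEIGHT_M} m: {fraction:.4f}")
```

Under these assumptions, thoron's diffusion length comes out at roughly 3 cm, so only about a thousandth of the floor-level concentration would reach a detector 0.2 m up by diffusion alone. Radon's diffusion length is a couple of metres, so it is barely attenuated over the same distance. A real cave is of course messier than this idealized picture.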

To test this concept, the researchers took hourly measurements of the radon and thoron concentrations in the Seongryu Cave, in eastern Korea’s Seonyu Mountain, over a period of 13 months. The cave – which formed around 250 million years ago – is around 330 m long and varies from 1 to 13 m in height. Recordings were taken in a part of the cave that is isolated from the flow of outside air, ruling out any thoron anomalies that might arise from a wind-induced surface flow along the cave floor.

Unexpected peaks

An unusually large peak in thoron concentration – above those caused by seasonal variations or daily temperature fluctuations, and not explicable by any precipitation event – was recorded in February 2011, a month before the magnitude-9.0 Tohoku earthquake struck Japan, 1200 km away. In contrast, radon peaks were observed not only during February but also in the preceding summer period, when atmospheric stratification is believed to better trap radon within the cave system. While the thoron measurements alone are capable of recording earthquake signals, Kim says, the anomalous peaks detected were clearer when plotted in tandem with radon activity.

The single station used in the study would not be able to localise an impending earthquake or assess its magnitude, but the team suggest that this might be done using a large network of such detectors. Though the measurements were made in a natural limestone cave system, the researchers report that the principle could also be applied to man-made caverns, since the method does not depend on a particular lithology of rock.

Heiko Woith, a hydrogeologist at the Helmholtz-Zentrum Potsdam in Germany who was not involved in the Korean team’s work, is sceptical about the new method. “The length of the time series is too short to judge the reliability of a precursor,” he says, cautioning that a non-tectonic origin for the thoron anomaly still cannot be ruled out. “Certainly, the radon–thoron approach is interesting to follow in future studies, but it is premature and misleading to call it a new ‘reliable earthquake precursor’ at this stage,” he concludes.

With this initial study complete, the researchers are now looking to further explore the potential of their radon–thoron technique by setting up a remote monitoring system within an artificial cave, powered by a solar panel on the surface. Ultimately, Kim suggests, such systems might be deployed on a larger scale.

The research is described in Scientific Reports.