When you sell a microscope that focuses electrons into a 0.1 nm-diameter beam, which must then be held steady on a single column of atoms, you don’t just drop the instrument at your customer’s door and let them get on with it. Indeed, long before the system is packed for shipping, customer and supplier will have already collaborated on preparing an appropriate location where the instrument will perform at its best. Some of the customer’s personnel will even have been trained in how to use the instrument.

But according to Mike Hepburn, who is managing director of the UK subsidiary of the Japanese microscope maker JEOL, some of the firm’s customers actually help the company to develop microscopes at its factory in Japan.

Take Angus Kirkland, a materials scientist at the University of Oxford in the UK. JEOL is working with Kirkland’s research group to develop ultrahigh-resolution transmission electron microscopes (TEMs). This has involved development staff from the firm’s manufacturing facility in Japan visiting Kirkland’s lab for several months at a time.

Hepburn told physicsworld.com that both parties gain from this type of interaction, with the Oxford group even publishing a number of research papers on the development of “super resolution” techniques for TEM. For its part, JEOL can now offer technology developed in collaboration with Oxford on the TEMs that it sells to other customers, says Hepburn.

Making new science possible

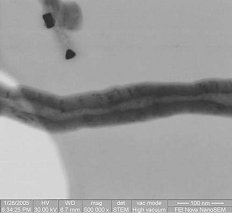

According to Hepburn, a key achievement of the collaboration is that the TEM — which was originally specified to have a 0.12 nm resolution — is now operating at 0.07 nm. “That doesn’t seem like a huge difference numerically”, he says, “but in terms of the new science it makes possible, it really is a big difference”.

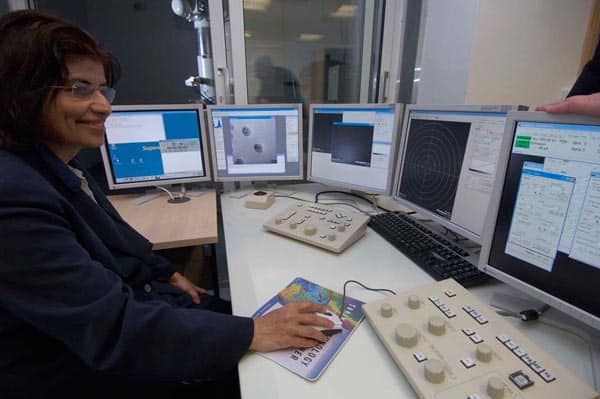

Other collaborations between the firm and UK universities focus more on developing new applications for JEOL instruments. This includes the company’s current collaboration with Pratibha Gai, who is JEOL Professor of Electron Microscopy at the University of York in the UK. Gai is one of the leading authorities on environmental TEM and co-director of the York JEOL Nanocentre, a £5.5m facility that opened in April 2007 and was partially funded by JEOL.

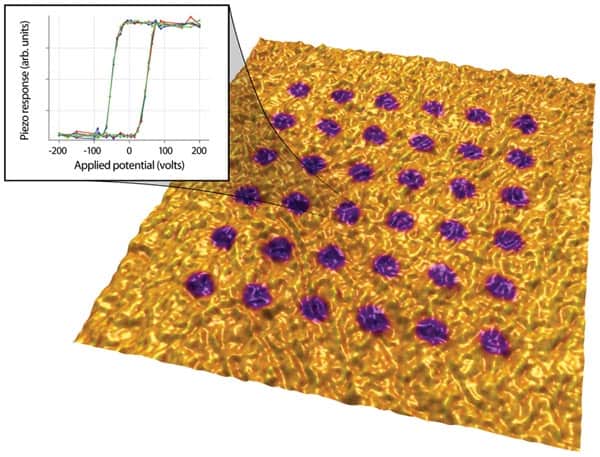

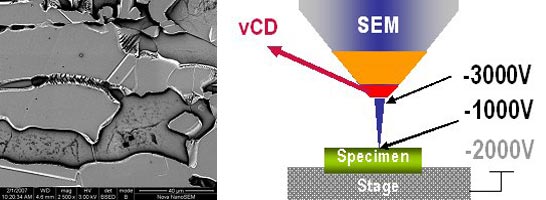

According to Hepburn, the centre is home to one of the world’s most powerful electron microscopes. This is a “double aberration-corrected microscope”, which is fitted with aberration correctors for both imaging (TEM) and probe-forming (scanning TEM) optics. It can obtain images at 0.1 nm resolution and resolve columns of atoms at a resolution better than 0.2 nm.

The York researchers are using the instrument to develop new techniques for environmental TEM, in which the sample is placed in a small cell that, unlike the specimen region of an ordinary electron microscope, need not be held under high vacuum and can instead operate at ambient pressures. This technique allows researchers to see, for example, chemical reactions occurring in real time. “We help our customers through advanced training to understand the instrument so they can develop new applications,” explains Hepburn.

Elsewhere in the UK, JEOL maintains an ongoing relationship with Peter Goodhew, a materials engineer at the University of Liverpool and director of the SuperSTEM project at the nearby Daresbury Laboratory. SuperSTEM is developing two aberration-corrected microscopes: an existing TEM that has been retrofitted with an aberration-correction system, and a second instrument that was delivered earlier this year by the US-based firm NION.

Corrected columns

While JEOL is not directly involved in the SuperSTEM project, Hepburn said that it is important for the company to be associated with one of the key groups working in this area. “[Liverpool] is one of the leading groups for using corrected columns,” he explains. “We are very excited to be working with them.”

JEOL UK also collaborates with Tony Cullis, a semiconductor physicist at the University of Sheffield. The Sheffield group recently ordered an ultrahigh-resolution TEM from JEOL, which is now developing the instrument and expects to deliver it by mid-2009. To ensure that Cullis’s team will be able to use the new instrument as soon as it arrives, JEOL is installing an interim instrument on which the staff will be trained.

“Our collaborations often begin with the development of leading-edge instrumentation and establish a close working relationship between the user and our development team”, says Hepburn. He believes that this delivers advantages to both parties. “We get feedback from the researchers back into the factory for future design and development and they benefit from getting advanced information about new developments,” he says. “In some cases we arrange for researchers to visit our factory in Japan to have discussions about design. It is a genuine win-win situation for both sides.”

Selling instruments

While collaborations with high-profile researchers help the company to develop new technologies, Hepburn emphasizes that JEOL is in the business of selling instruments to a wide range of users. He believes that there is much more to a successful sale than simply delivering the instrument. “It is one thing to encourage an institute to buy your instrument, but if it is not sited correctly, or their staff are not trained, then they are going to be disappointed.”

As a result, the company works very closely with the customer to ensure that its microscope is installed in the best possible location. “We get involved at the very beginning by having our engineers attend the customer’s site meetings,” explains Hepburn. JEOL engineers will even talk to architects and builders about alterations to existing buildings and the design of new facilities. This matters because environmental factors such as vibration can seriously degrade the performance of an electron microscope.

“We have been lucky enough at Oxford to have a new purpose-built building, but that is the exception rather than the rule,” he explains. “Normally we have to work within the confines of an existing building, which means that sometimes a hole has to be dug, then insulated from its surrounding area and filled with a solid concrete block” to minimize vibrations.

“Within JEOL UK we have several people who project-manage the installation process from a technical point of view, and our engineers have many years’ experience in creating the best location for an instrument,” says Hepburn. Although he admits that knowing what the correct environment should be is “a little bit of a black art”, Hepburn insists that the company will always get the right answer.

40th anniversary

JEOL UK has about 40 employees, while its parent company, JEOL Ltd of Japan, employs 3250 people worldwide. JEOL Ltd was established in Japan in 1949, and JEOL UK was launched 40 years ago this year. According to Hepburn, the firm has installed more than 1000 electron microscopes in the UK, along with several hundred of the NMR instruments and mass spectrometers that the company also makes.

The vast majority of the company’s sales and service staff have scientific or engineering backgrounds, and many have been students or technicians in university labs that use JEOL instruments. This includes Hepburn, who worked in the Materials Department at the University of Surrey, where he used a JEOL TEM.

Hepburn says that the firm is seeking to boost its ranks by hiring people at the postdoc level, who will help expand its user-training and applications-development programmes. The company is also looking to sponsor the research of up-and-coming scientists who use its equipment. In doing so, the firm hopes to encourage the next generation of microscope users, as well as to benefit from the drive and enthusiasm of less established researchers.