Physicists have deployed a Bose–Einstein condensate (BEC) as a “quantum microscope” to study phase transitions in a high-temperature superconductor. The experiment marks the first time a BEC has been used to probe such a complicated condensed-matter phenomenon, and the results – a solution to a puzzle involving transition temperatures in iron pnictide superconductors – suggest that the technique could help untangle the complex factors that enhance and inhibit high-temperature superconductivity.

A BEC is a state of matter that forms when a gas of bosons (particles with integer quantum spin) is cooled to such low temperatures that all the bosons fall into the same quantum state. Under these conditions, the bosons are highly sensitive to tiny fluctuations in the local magnetic field, which perturb their collective wavefunction and create patches of greater and lesser density in the gas. These variations in density can then be detected using optical techniques.

The new instrument, known as a scanning quantum cryogenic atom microscope (SQCRAMscope), puts this magnetic field sensitivity to practical use. “Our SQCRAMscope is basically like a microscope – a big lens, focusing light down on a sample, looking at the reflected light – except right at the focus we have a collection of quantum atoms that transduces the magnetic field into a light field,” explains team leader Benjamin Lev, a physicist at Stanford University in the US. “It’s a quantum gas transducer.”

Making a practical probe

The SQCRAMscope grew out of Lev’s earlier work on atom chips. In these devices, clouds of ultracold atoms are confined within a vacuum chamber to isolate them from their environment and levitated with magnetic fields generated by a miniaturized circuit, or chip.

One possible application of atom chips is to use these suspended atom clouds as sensitive, high-resolution probes of magnetic fields. But there was a problem: whenever researchers wanted to change the configuration of their chips, or bring a new sample of material near the atoms, they needed to break the vacuum, remove various optical components, take the chip out, and then put everything back. “It would take literally months to change anything,” Lev says. “It was not very good for rapid trials.”

A further complication is that running current through an atom chip causes the chip to heat up, affecting the temperature of any nearby sample in ways that are hard to predict or control. This, Lev observes, is “not ideal if you want to study phase transitions” and other temperature-dependent phenomena.

The Stanford team’s solution was to separate their atom chip from the samples they wanted to study. While the ultracold rubidium atoms in the SQCRAMscope remain inside a vacuum chamber, the chip used to magnetically trap them is located just outside it, with a gap of a few hundred micrometres in between for the samples to slide in. “It’s a crazy Rube Goldberg kind of device, but it works,” Lev says.

A pnictide puzzle

After testing their SQCRAMscope on samples of gold wire, the researchers turned to a far more complex material: an iron pnictide superconductor with the chemical formula Ba(Fe1−xCox)2As2. At room temperature, this material is a metal with a tetragonal crystal structure, but as it cools, it undergoes a transition to a nematic phase at a temperature that depends on the doping fraction x. At this point, the material’s crystalline symmetry is partially broken, and it exhibits a characteristic patchwork pattern of magnetic domains. However, the exact temperature of this nematic transition was the subject of some debate, because measurements that were sensitive to the material’s bulk properties gave one answer, while methods that focused on its surface properties suggested another.

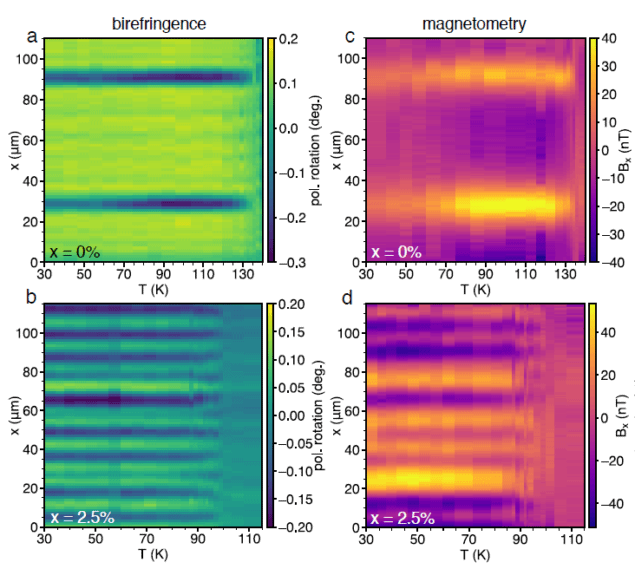

Enter the SQCRAMscope. “These spatial patterns [in the magnetic domains] develop on the scale of a few microns, and that’s exactly matched to the spatial resolution of the SQCRAMscope,” Lev says. With help from collaborators in Stanford’s Geballe Laboratory for Advanced Materials, he and his team prepared a sample of the iron pnictide, connected it to gold wires, and used the SQCRAMscope to image magnetic field fluctuations as current flowed through it. By combining these magnetometry measurements with separate measurements of the sample’s optical birefringence, the team could study the sample’s bulk and surface magnetic properties simultaneously, and resolve the debate engendered by previous conflicting measurements.

Strange metals become even stranger

They found that, contrary to hints of a discrepancy in previous studies, the material’s structural transition to a nematic phase and its electronic transition occur at the same temperature: about 135 K for an undoped sample, and 96.5 K for a sample with 2.5% doping (see image). “People are excited about multimodal probes, where you can do two distinct measurements at the same time without any sort of change to the sample or substrate,” Lev says. “We have a perfect example of that, and it’s because the atoms are transparent to all wavelengths except for those that are resonant with rubidium.”

Scope for improvements

Now that the SQCRAMscope has proved its worth as a means of studying strongly correlated materials, Lev says that he and his colleagues have “a long list of fun projects to do”. Possible follow-up experiments include using the SQCRAMscope to probe other properties of pnictides (including superconducting electron transport) and to study electron transport in 2D materials such as graphene. The team is also improving the instrument’s technical capabilities by reducing its use of cryogens, extending its range of operating temperatures and designing a new mount to make sample exchange easier.

Further details of the study appear in Nature Physics.
