The problem with marine plastic pollution is that there’s just not enough of it. We obviously don’t want there to be more; it’s just that the quantities of plastic we know to be floating in the world’s oceans fall far short of the amount that scientists say should be there.

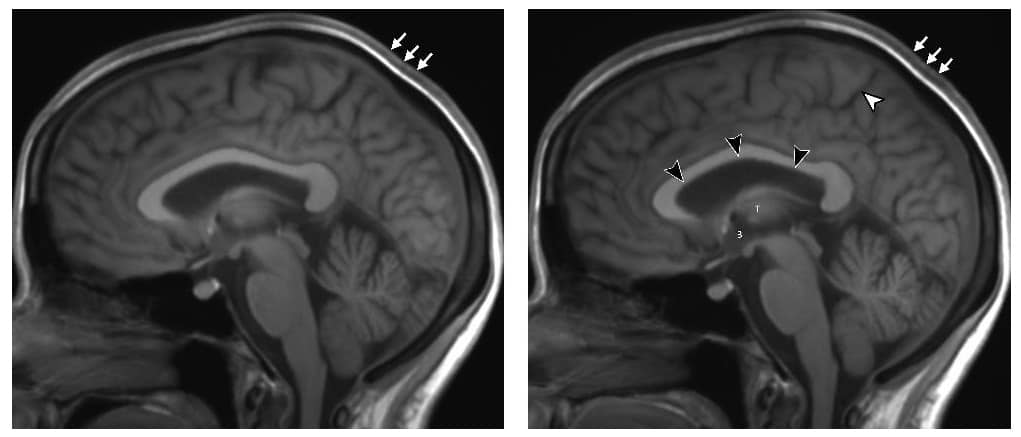

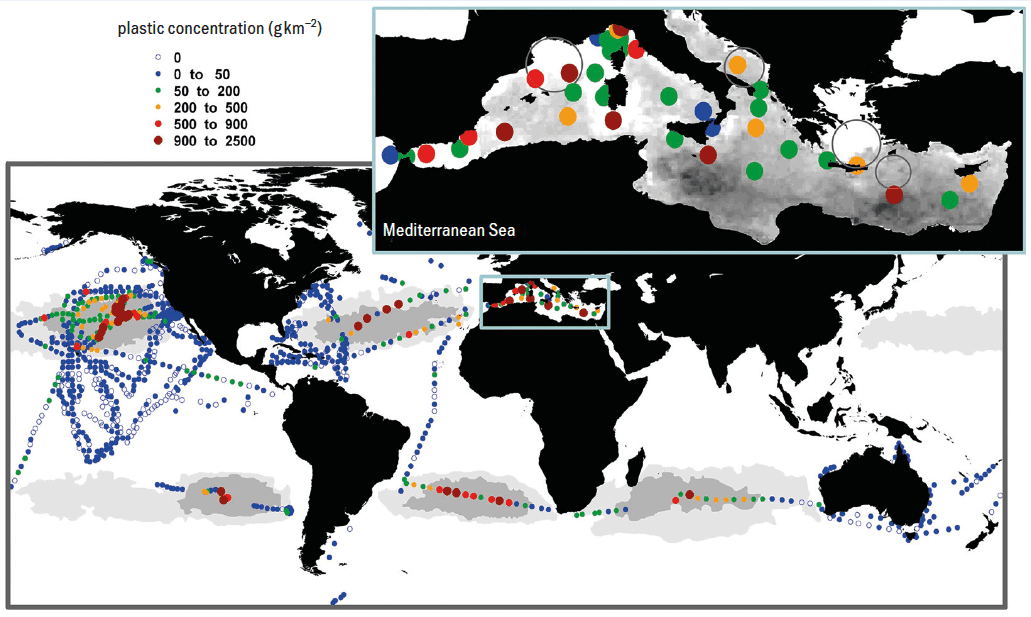

Take the world’s best-known concentration of ocean plastic, the Great Pacific Garbage Patch. This is an accumulation of floating debris encircled by the North Pacific Gyre (a system of ocean currents that circulates between North America and Asia). It’s estimated to contain a staggering 80,000 tonnes – or 1.8 trillion pieces – of plastic. Similar gyres hold smaller collections in the South Pacific, the Indian Ocean, and the North and South Atlantic too. All in all, the total mass of known plastic floating on or near the surface of our oceans exceeds 250,000 tonnes (figure 1). But that’s not enough.

Since we started releasing plastic into the sea in the 1950s, the amount emitted annually has risen to something like 10 million tonnes. Most of the material entering the oceans nowadays is less dense than water, which means that if it survives for longer than a few years, we should expect to find tens of millions of tonnes of plastic floating at the surface. That’s orders of magnitude more than we actually see – so where’s it all gone?
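The size of the mismatch can be put in rough numbers. The sketch below takes the annual input and the observed floating mass from the figures quoted above; the buoyant fraction and the survival time are illustrative assumptions rather than measured values, so the result should be read as an order-of-magnitude estimate only.

```python
import math

ANNUAL_INPUT_T = 10e6    # tonnes entering the sea per year (figure from the text)
OBSERVED_T = 250_000     # tonnes known to float at or near the surface (from the text)

def expected_floating_mass(annual_input_t, buoyant_fraction, survival_years):
    """Steady-state floating mass if buoyant plastic lasts `survival_years` at the surface."""
    return annual_input_t * buoyant_fraction * survival_years

# ASSUMED for illustration: 60% of the input floats and survives for five years.
expected = expected_floating_mass(ANNUAL_INPUT_T, buoyant_fraction=0.6, survival_years=5)
ratio = expected / OBSERVED_T

print(f"expected at surface: {expected / 1e6:.0f} million tonnes")
print(f"observed at surface: {OBSERVED_T / 1e6:.2f} million tonnes")
print(f"shortfall: a factor of {ratio:.0f} (about {math.log10(ratio):.1f} orders of magnitude)")
```

Changing the two assumed parameters within plausible limits moves the answer around, but the observed mass stays a small fraction of what the budget predicts.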

In the Great Pacific Garbage Patch many pieces are old, and items made recently are poorly represented. Floating objects typically seem to take several years to make their way from a coast or river where they enter the sea, to one of the subtropical gyres where they linger long-term. Most pieces never finish that journey (or at least, they haven’t yet), but where they end up and how they get there are still unknown.

“It’s not missing like dark matter is missing; it’s a different kind of missing,” says Erik van Sebille, an oceanographer and climate scientist at Utrecht University in the Netherlands. “Nobody knows how much is at the seafloor versus how much is on beaches versus how much is ingested by animals versus how much of it is already degraded by bacteria. And that is the puzzle.”

Wherever we do find plastic pollution in the environment, we also see its effect on wildlife, from turtles entangled in discarded fishing nets, to starving sea birds with stomachs full of bottle caps and other indigestible detritus. To quantify how widespread such effects are – an essential step if we want to mitigate them – we need a much better picture of how marine plastic is distributed, and how it moves around in the years and decades after its release. This is also necessary if we’re to have any success in cleaning up the oceans, as collection efforts that focus exclusively on the most prominent 1% of material might have little effect.

Modelling the journey

Many oceanographers think the best way to produce a detailed map of marine plastic is through modelling. The Tracking of Plastic in Our Seas (TOPIOS) project – funded by the European Research Council and led by van Sebille – is an example of such an effort. Currently, the TOPIOS model integrates tides, ocean currents and the wind to predict the paths taken by objects floating at the surface. When plastic sinks down into the ocean, it’s removed from the model and is no longer tracked. Over the course of its five-year programme, which started in April 2017, the TOPIOS researchers expect to broaden the model by building a comprehensive, high-resolution version that captures every relevant process in all three dimensions. When complete, the simulation will take into account not only the obvious oceanographic influences, but more subtle considerations too.
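The core of any such surface-tracking model is a loop that moves virtual particles through a velocity field. The following is a minimal sketch of that idea, not the TOPIOS code: the flow is an idealized gyre invented for illustration (a slow rotation plus a weak inward drift standing in for the combined effect of currents and wind), and the function names and parameter values are all assumptions.

```python
import math

def surface_velocity(x, y, omega=1e-7, convergence=2e-8):
    """Idealized gyre centred on the origin (ASSUMED flow, not real ocean data).

    Rotation at angular rate `omega` (rad/s) plus an inward drift at rate
    `convergence` (1/s). Positions are in metres, velocities in m/s.
    """
    u = -omega * y - convergence * x
    v = omega * x - convergence * y
    return u, v

def advect(x, y, days, dt=3600.0):
    """Move one floating particle forward in time with a midpoint (RK2) scheme."""
    steps = int(days * 86400 / dt)
    for _ in range(steps):
        u1, v1 = surface_velocity(x, y)
        # Evaluate the flow again half a step ahead, then take the full step with it.
        u2, v2 = surface_velocity(x + 0.5 * dt * u1, y + 0.5 * dt * v1)
        x, y = x + dt * u2, y + dt * v2
    return x, y

start = (1.0e6, 0.0)                 # released 1000 km from the gyre centre
end = advect(*start, days=3 * 365)   # followed for three years
print(f"distance from centre: {math.hypot(*start) / 1e3:.0f} km "
      f"-> {math.hypot(*end) / 1e3:.0f} km")
```

A real model replaces `surface_velocity` with gridded ocean and wind data and tracks millions of particles, but the stepping logic is the same in spirit.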

One such factor is the role played by marine microbes. The longer a piece of plastic remains in the ocean, the more living organisms it accumulates. This “biofouling” process gradually increases the overall density of the object-plus-hangers-on, until eventually the whole thing sinks into the depths. But different microbes populate different parts of the ocean, and not all affect floating plastic equally. The difference between an object reaching a subtropical gyre or being deposited on the seabed a thousand kilometres away could come down to which species set up home on its surface.
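The sinking threshold follows from a simple volume-weighted average of densities. The sketch below assumes a polyethylene-like plastic of 950 kg/m³ and seawater of 1025 kg/m³; the biofilm density of 1100 kg/m³ is a placeholder chosen for illustration, since the real value depends on which organisms settle.

```python
SEAWATER = 1025.0  # kg/m^3, typical surface seawater

def combined_density(plastic_density, film_density, film_fraction):
    """Volume-weighted mean density of a plastic object plus its attached biofilm."""
    return (1.0 - film_fraction) * plastic_density + film_fraction * film_density

def sinking_fraction(plastic_density, film_density, water_density=SEAWATER):
    """Biofilm volume fraction at which the fouled object matches the water density."""
    if plastic_density >= water_density:
        return 0.0    # the plastic sinks even without fouling
    if film_density <= water_density:
        return None   # a film no denser than water can never sink the object
    return (water_density - plastic_density) / (film_density - plastic_density)

# ASSUMED values: 950 kg/m^3 plastic, 1100 kg/m^3 biofilm.
f = sinking_fraction(plastic_density=950.0, film_density=1100.0)
print(f"object sinks once biofilm exceeds {f:.0%} of the total volume")
```

A denser community of organisms lowers the threshold, which is one way the local mix of species could decide how far an object travels before it sinks.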

Another important parameter that varies from place to place is how bright the sunshine is. Exposed to ultraviolet light for long enough, plastic photo-oxidizes, eventually becoming brittle and breaking into fragments. The resulting microplastic particles can be dispersed throughout the water column, where they enter an entirely different transport regime.

Even with such a detailed model, however, the TOPIOS team will still need some empirical data of its own to feed into the system. The ultimate goal is to power the model with a Bayesian inference algorithm, using one set of observations of plastic to train it and another set to validate it.

Searching from space

Unfortunately, those observations are not easy to come by. For one thing, the sheer size of the oceans makes taking representative measurements difficult and expensive. Another problem is that the distribution of plastic is heterogeneous even on a small scale: samples a kilometre apart can differ in plastic concentration by an order of magnitude, says van Sebille. “It’s a bit like an astronomer pointing their telescope into the sky 100 times and then having to say what the structure of the universe is like.”

For some, satellites provide the obvious solution to this problem, since it’s only from space that you can see the big picture. However, no satellites currently in orbit are dedicated to the task of observing marine plastic – nor are any on the drawing board. The technology and techniques necessary for the remote sensing of plastic are still at the proof-of-concept stage.

One person studying the problem is Paolo Corradi, a systems engineer at the European Space Agency (ESA), based at its European Space Research and Technology Centre in the Netherlands. Together with a large, international collaboration, Corradi envisages how space-based measurements would fit into an integrated marine debris observing system (IMDOS) that also comprises airborne techniques and in situ measurements (Front. Mar. Sci. 10.3389/fmars.2019.00447).

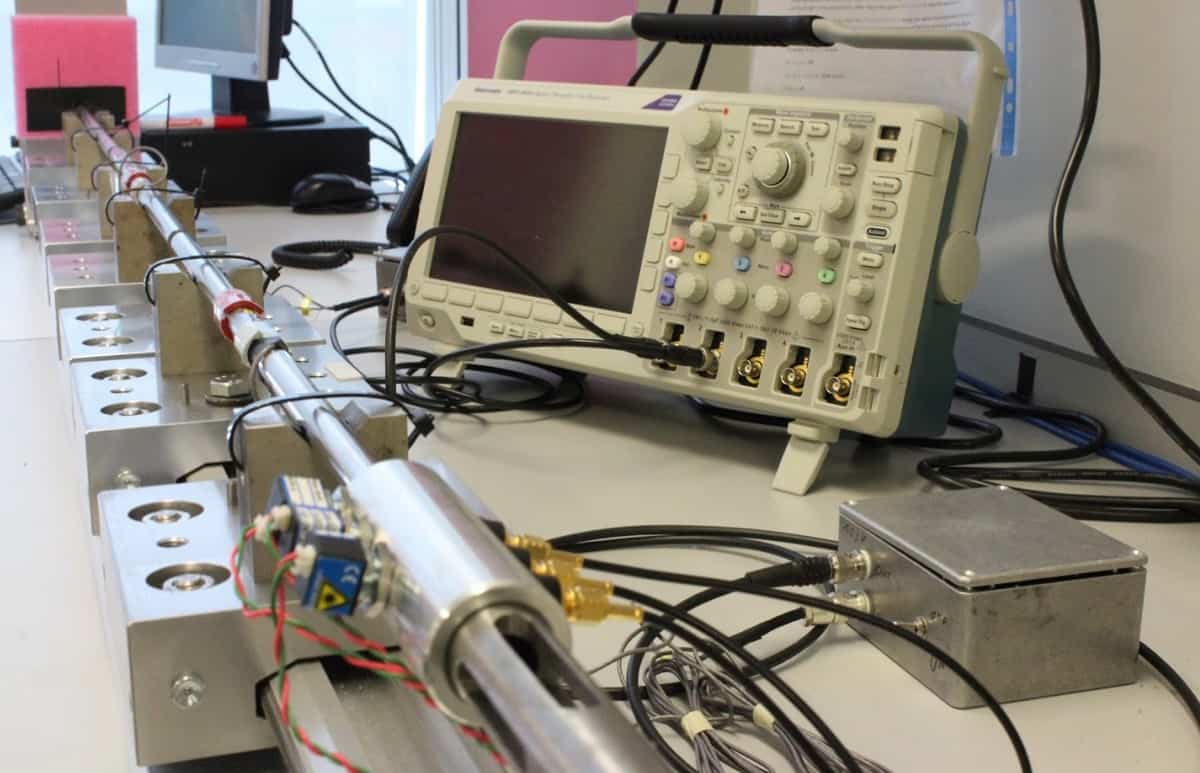

Among the remote-sensing methods under consideration, the least well studied for marine-plastic detection is probably radar. At first glance, this technique has a lot going for it, as radar can be used day and night, whether the skies are clear or cloudy. Satellite-based radar systems can also exploit an effect by which the transceiver’s motion along its orbital path mimics a much larger static antenna. The size of this “synthetic aperture” is equal to the distance the satellite travels while a given target remains within the radar beam, with the echoes collected along the way combined coherently. The size of the aperture determines the radar’s spatial resolution, allowing satellite-based systems to achieve resolutions on the order of a metre – fine enough to spot large individual pieces or dense accumulations of debris as long as they are sufficiently reflective. However, it’s not known whether synthetic-aperture radar (SAR) will work for plastic, as the signal from such a low-dielectric-constant material might well be swamped by backscatter from ocean waves. “This is a question that keeps buzzing in my head and doesn’t let me sleep some nights,” says Armando Marino of the University of Stirling, UK, where a forthcoming project will test the method using ground-based radar.

Where satellite radar systems might help the most is in locating plastic aggregations indirectly. A variation of the SAR technique uses interferometry to measure the way water moves at the ocean surface. This method compares the phase of radar signals measured at different points to retrieve information about wave height and velocity, as well as larger scale features like surface currents. As floating objects tend to concentrate at the boundaries between differently moving masses of water, such satellite-based radar could determine where plastic is likely to build up, providing key information for transport models.

And that’s not all that wave measurements might reveal. On SAR images of ocean gyres, Marino and colleagues have noticed areas of unusually smooth sea-surface texture, which they attributed to the presence of surfactants. Like oil poured on troubled waters, surfactants dampen waves at the surface, decreasing the strength of the radar signal. Knowing that such molecules are produced by microbial activity, and that marine plastic is colonized rapidly by microbes, the researchers propose that where such calm patches follow the pattern of ocean-circulation features, they could indicate the presence of high concentrations of microplastic pollution.

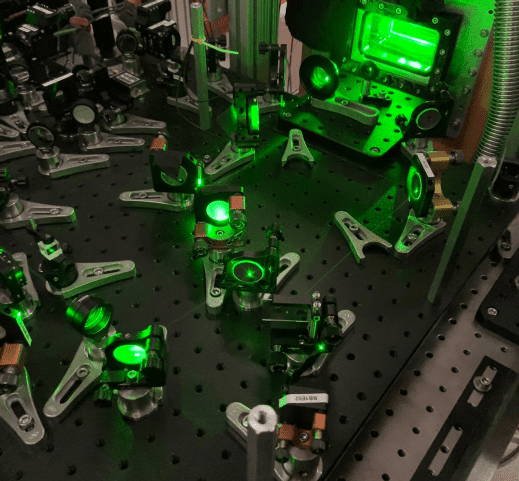

Another promising active-sensing technique is lidar. Laser range-finders – which determine distance by measuring the travel time of a laser pulse – are already commonplace on Earth-observation satellites, where they measure clouds and other atmospheric particles, wind speed, and the thickness of the ice caps. No sensors have been launched yet to explicitly study marine plastic, but some researchers have used a lidar system designed for atmospheric aerosols to look for plankton and other suspended particles (2013 Geophys. Res. Lett. 40 4355). Although the repurposed instrument offered only a coarse vertical resolution below the water’s surface, it was sensitive enough to detect particles floating in the top 20 m or so of the ocean. Since the concentration of plastic is thought to drop off exponentially more than 5 m below the surface, an appropriately optimized instrument could be an effective tool for measuring the quantity of microplastic fragments suspended in the water column.
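The travel-time principle behind lidar ranging is easy to write down: the pulse covers the distance twice, and it moves more slowly in water than in air. In the sketch below the refractive index of seawater is an approximate value and the 700 km orbit is an assumed altitude, not that of any particular mission.

```python
C_VACUUM = 299_792_458.0   # speed of light in vacuum, m/s
N_SEAWATER = 1.34          # approximate refractive index of seawater for visible light

def range_from_delay(round_trip_s, refractive_index=1.0):
    """One-way distance implied by a round-trip travel time in a given medium."""
    return C_VACUUM * round_trip_s / (2.0 * refractive_index)

def round_trip_delay(distance_m, refractive_index=1.0):
    """Round-trip travel time for a one-way distance in a given medium."""
    return 2.0 * distance_m * refractive_index / C_VACUUM

# ASSUMED orbit height of 700 km: time for a pulse to reach the sea surface and return.
to_surface = round_trip_delay(700e3)
print(f"surface echo arrives after {to_surface * 1e3:.2f} ms")

# An echo arriving 100 ns after the surface return came from this depth:
depth = range_from_delay(100e-9, N_SEAWATER)
print(f"a return 100 ns later comes from about {depth:.1f} m down")
```

The second calculation shows why vertical resolution depends on timing precision: each nanosecond of delay corresponds to only about a tenth of a metre of water.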

Corradi suggests that one day lidar techniques might even be able to discriminate between plastic and other particles by detecting inelastic processes such as fluorescence or Raman scattering. He cautions, however, that the signal from the latter would be so faint that current instruments would struggle to detect it from orbit.

Shining a light on plastic

In the quest to pinpoint ocean plastic, the techniques that have been most thoroughly investigated so far are passive optical remote-sensing methods that measure reflected sunlight. Like every material, plastic imprints a characteristic spectral profile on light that scatters from its surface. Cutting-edge recycling centres already use this effect, identifying different types of plastic based on how bright they appear at certain frequencies in the infrared part of the spectrum.

An orbital application of a similar technique was demonstrated recently by Lauren Biermann, an Earth-observation scientist at Plymouth Marine Laboratory (PML) in the UK. Together with colleagues at PML and the University of the Aegean in Mytilene, Greece, Biermann used multispectral images captured by ESA’s Sentinel-2 mission – a twin-satellite system that measures vegetation and land use at high resolution across 13 spectral bands from a mean altitude of 786 km (figure 2).

The sensors on these satellites don’t have the spectral resolution necessary to discriminate between different types of plastic, as happens in recycling plants – and even if they did, the intervening atmosphere would mask some of the material’s narrow spectral features. Instead, Biermann has developed a way to identify plastic based on the material’s reflectance in three broad bands that are more easily measurable from orbit: one centred at the far-red end of the visible spectrum; one just beyond the visible range in the near-infrared (NIR); and one in the shortwave infrared (SWIR). While water absorbs strongly across all of these bands, plastic has a sharp reflectance peak in the NIR, making floating plastic objects stand out brightly against the dark ocean surface in NIR images.
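One generic way to turn a three-band measurement into a single number is to ask how far the NIR reflectance rises above a straight line drawn between its two neighbours. The sketch below illustrates that baseline-subtraction idea; it is not presented as Biermann’s published index. The band centres are approximate Sentinel-2 values and the reflectances are invented purely to show the arithmetic.

```python
def nir_peak_height(r_far_red, r_nir, r_swir,
                    wl_far_red=740.0, wl_nir=833.0, wl_swir=1610.0):
    """NIR reflectance above a straight baseline joining the far-red and SWIR bands.

    Wavelengths are in nanometres (approximate band centres, ASSUMED).
    """
    weight = (wl_nir - wl_far_red) / (wl_swir - wl_far_red)
    baseline = r_far_red + (r_swir - r_far_red) * weight
    return r_nir - baseline

# INVENTED reflectances, for illustration only.
open_water = nir_peak_height(r_far_red=0.020, r_nir=0.015, r_swir=0.010)
floating_raft = nir_peak_height(r_far_red=0.030, r_nir=0.080, r_swir=0.020)

print(f"open water:    {open_water:+.4f}")
print(f"floating raft: {floating_raft:+.4f}")
```

An index of this kind flags anything with a NIR peak, so on its own it cannot separate plastic from other floating material.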

Unfortunately, water’s strong absorption in the infrared also means that plastic becomes effectively invisible once it dips even a few millimetres below the waves. This passive technique can therefore only detect debris bobbing at the surface and cannot build up an inventory of plastic pollution suspended in the water column. Another challenge is that seaweed, driftwood and even sea foam all have the same sharp reflectance peak in the NIR that plastic exhibits – and, like floating plastic, they all tend to gather along ocean fronts and on beaches. So to distinguish plastic from other marine debris you have to look to less obvious spectral markers in other parts of the electromagnetic spectrum.

With this in mind, Biermann and colleagues have used satellite images to compile a spectral catalogue of material types. Recent well-documented blooms of sargassum in the Caribbean provided the spectral touchstone for floating vegetation, for example. A flood in Durban, South Africa, meanwhile, washed masses of bottles and other debris into the Indian Ocean, revealing the spectral properties of impure aggregations of plastic. “Because I’m South African, I just reached out to people I knew and asked for photographs of the harbour,” says Biermann. “I wanted to see how certain I was that this was plastic and – oh my God! – it was like a landfill had just been washed clean into the sea.”

When they had built up a library of spectral signatures, the team used it to train a machine-learning algorithm – based on Bayesian inference, like the TOPIOS model – to recognize plastic and other floating materials automatically. When tested on another set of aggregations whose compositions were verified independently, the algorithm identified plastic with an accuracy of 86%.
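The train-then-validate workflow can be illustrated with one of the simplest Bayesian classifiers, Gaussian naive Bayes. The choice of this particular algorithm is an assumption made for the sketch, as are the two feature names, and every number in the toy data set is synthetic.

```python
import math
from collections import defaultdict

def fit(samples):
    """Learn a prior plus per-feature mean and variance for each class.

    `samples` is a list of (label, feature_tuple) pairs.
    """
    by_class = defaultdict(list)
    for label, features in samples:
        by_class[label].append(features)
    model = {}
    for label, rows in by_class.items():
        n = len(rows)
        columns = list(zip(*rows))
        means = [sum(col) / n for col in columns]
        # A tiny floor keeps the variance non-zero for very small training sets.
        variances = [sum((x - m) ** 2 for x in col) / n + 1e-9
                     for col, m in zip(columns, means)]
        model[label] = (n / len(samples), means, variances)
    return model

def predict(model, features):
    """Return the class with the highest log-posterior for one feature vector."""
    scores = {}
    for label, (prior, means, variances) in model.items():
        score = math.log(prior)
        for x, m, v in zip(features, means, variances):
            score += -0.5 * math.log(2 * math.pi * v) - (x - m) ** 2 / (2 * v)
        scores[label] = score
    return max(scores, key=scores.get)

# SYNTHETIC library: (nir_peak, vegetation_index) pairs, both hypothetical features.
training = [
    ("plastic", (0.050, 0.020)), ("plastic", (0.060, 0.030)), ("plastic", (0.055, 0.025)),
    ("seaweed", (0.050, 0.300)), ("seaweed", (0.060, 0.350)), ("seaweed", (0.045, 0.280)),
    ("water", (0.000, -0.050)), ("water", (0.005, -0.040)), ("water", (-0.002, -0.060)),
]
# Separate, independently labelled cases held back for validation.
held_out = [("plastic", (0.052, 0.027)), ("seaweed", (0.050, 0.310)), ("water", (0.001, -0.050))]

model = fit(training)
correct = sum(predict(model, features) == label for label, features in held_out)
print(f"validation accuracy: {correct}/{len(held_out)}")
```

Keeping the validation cases out of the training set is what makes the reported accuracy meaningful; scoring the classifier on the data it learned from would overstate its skill.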

As promising as these results are, they were achieved with instruments that were not designed with this purpose in mind. Biermann says that the sensors used on the Sentinel-2 satellites are not quite as sensitive as she would like, and they lack bands at wavelengths where additional measurements would be useful. Most important, perhaps, is the fact that the satellites’ cameras have a spatial resolution of 10 m at best, meaning the only plastic items they can possibly observe are those that have coalesced into floating rafts large enough to fill a significant fraction of a 100 m2 pixel – specifically, around 30% for plastic bottles or bags, and 50% for discarded fishing nets. (Based on ESA studies, Corradi reports that a 1% pixel coverage is probably the theoretical limit for sensors like those carried by Sentinel-2.) Whatever the exact figure, it’s clear that cameras currently in orbit are unlikely to be much use when it comes to more widely distributed collections of plastic such as those amassed by the subtropical gyres. Biermann hasn’t, for example, been able to use her method on the Great Pacific Garbage Patch because Sentinel-2 captures images of the land and coastal waters only, which underlines how existing satellites are not quite fit for purpose.
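Those coverage thresholds translate into physical sizes with a line of arithmetic. The sketch below uses the 10 m pixel and the percentages quoted above, and makes the simplifying assumption that the material is gathered into a single square patch rather than scattered across the pixel.

```python
import math

def equivalent_width_m(pixel_side_m, coverage):
    """Side of a single square patch that covers the given fraction of a square pixel."""
    return pixel_side_m * math.sqrt(coverage)

PIXEL_SIDE_M = 10.0  # best-case Sentinel-2 resolution, from the text

for label, coverage in [("bottles or bags (30%)", 0.30),
                        ("fishing nets (50%)", 0.50),
                        ("theoretical limit (1%)", 0.01)]:
    width = equivalent_width_m(PIXEL_SIDE_M, coverage)
    print(f"{label}: patch about {width:.1f} m across")
```

Even at the theoretical limit, the smallest detectable patch under this assumption is a raft a metre or so wide, far larger than a typical single item.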

Closer to Earth

But if low-Earth orbit is simply too high for such instruments to spot individual plastic objects, why not bring the instruments lower – say, to 400 m? This is the altitude from which the non-profit organization Ocean Cleanup surveyed the Great Pacific Garbage Patch. Extending from the hold of a C-130 cargo aircraft, a hyperspectral imager acquired images in visible and infrared light while a laser was used to build up lidar profiles.

The high spectral resolution of the infrared sensor, along with the light’s shorter path through the atmosphere, meant that the Ocean Cleanup team had more freedom to choose which bands to use than Biermann did with her satellite-based technique. Furthermore, with a survey height of 400 m, each image pixel was around 1 m2. Using two narrow spectral features in the SWIR, they found that floating plastic had to fill only about 5% of a pixel to be detectable, meaning the researchers could theoretically spot individual plastic items just a few tens of centimetres across.

Being so low down doesn’t make the ocean any more transparent to infrared radiation, however, so the SWIR signal could still only detect debris bobbing at the surface. This is where the lidar came in, but this time not to look for suspended microplastics. Instead, the researchers used it to measure how far large aggregations – such as those that coalesce around drifting fishing nets – extend below the surface. “Ghost nets are like magnets for other debris, so they end up being almost a solid floating mass of debris,” says expedition member Jen Aitken, of Teledyne Optech in the US. “We managed to find some aggregations of plastic where the lidar was able to penetrate two or three metres, enough to make a rough model of what it looked like in 3D.”

Detailed observations like these are unlikely to be made as routinely as satellite measurements, however. Whereas a satellite passes over the same spot every few days, whether or not you decide to download its data, each airborne survey of the remote ocean takes huge effort. Aitken describes Ocean Cleanup flights – in aircraft packed with extra fuel tanks – as being dominated by the time taken to go from California out into the Pacific and back. Indeed, an excursion that lasts 10 hours or more might spend only a couple of hours taking data.

Conducting the surveys with drones is an alternative, Aitken suggests, but as these only spend an hour or so in the air, they would still need to use a large and costly ship as a base of operations. That might be feasible given that Ocean Cleanup’s plans already call for ships to pick up recovered plastic pollution. The above-mentioned IMDOS concept also includes drones and ships in its mix of techniques. “Satellites will be just part of the monitoring solution,” says Corradi. “You need airborne, you need drones, you need in situ measurements and boat measurements.”

Until such a programme exists, what conclusions can researchers draw from the data currently available? Different models suggest different distributions. At the start of the TOPIOS project, van Sebille and colleagues proposed that we see so little floating plastic because it spends only a short time at the surface before fragmenting and sinking. In that case, the missing material is distributed throughout the depths and across the ocean floor. Researchers with Ocean Cleanup, on the other hand, say that the age of typical plastic fragments found in the subtropical gyres rules this out (2019 Sci. Rep. 9 12922). If they are right, plastic debris spends years cycling between beaches and coastal waters, only reaching the offshore environment long after its emission.

Whichever model is true, there are implications for mitigation and remediation efforts. If most plastic has already broken up and spread through the water column, we can forget about retrieving it. Instead, we should concentrate on stopping further emissions at their source, while also investigating the impact of plastic pollution on deep-water ecosystems. If plastic survives for years, however, the bulk of today’s microplastics comes from objects released decades ago. In that case, today’s remediation programmes might prevent harm far in the future.