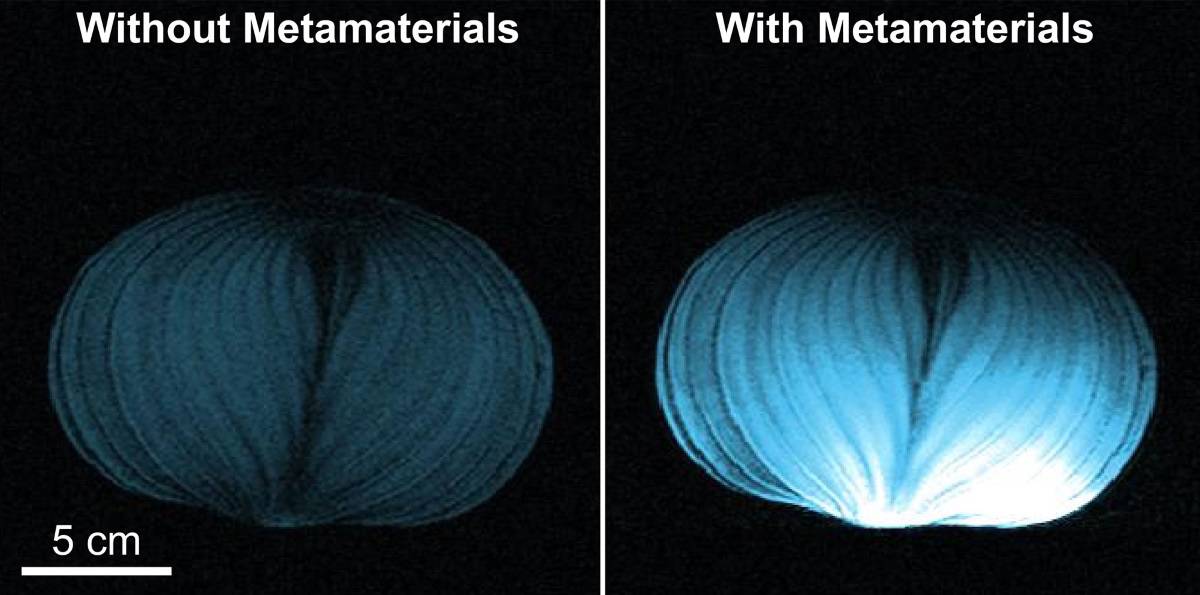

IceCube is a particle detector comprising 5160 digital optical modules suspended along 86 strings, each up to 2.5 km long and embedded in the ice at the Amundsen-Scott South Pole Station. The strings are spaced to create a total detector volume of a cubic kilometre. Neutrinos interact with matter only extremely weakly, so the array cannot observe them directly. Instead, it detects the tiny flashes of Cherenkov light emitted by the secondary particles that are created when neutrinos collide with hydrogen or oxygen nuclei in the ice.
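As a rough illustration of these numbers, the sketch below works out the average number of modules per string and the angle at which Cherenkov light is emitted by a fast charged particle in ice. The refractive index of 1.31 is an assumed typical value for ice at optical wavelengths, not a figure from this article, and the function names are purely illustrative.

```python
import math

N_MODULES = 5160   # optical modules, from the article
N_STRINGS = 86     # strings, from the article
N_ICE = 1.31       # ASSUMPTION: typical refractive index of ice


def modules_per_string(n_modules=N_MODULES, n_strings=N_STRINGS):
    """Average number of optical modules carried by each string."""
    return n_modules / n_strings


def cherenkov_angle_deg(beta, n=N_ICE):
    """Cherenkov emission angle from cos(theta) = 1 / (n * beta).

    beta is the particle speed as a fraction of the speed of light.
    Returns None when the particle is too slow to radiate (n * beta <= 1).
    """
    if n * beta <= 1.0:
        return None
    return math.degrees(math.acos(1.0 / (n * beta)))


if __name__ == "__main__":
    print("modules per string:", modules_per_string())
    print("Cherenkov angle for beta = 1 (degrees):", cherenkov_angle_deg(1.0))
```

Under this assumed refractive index, a particle travelling at close to the speed of light radiates at roughly 40 degrees to its direction of travel. That fixed geometry is what allows the arrival times of light at different modules to be used to reconstruct a track.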

In November 2013, the IceCube Collaboration published details of its observation of 28 extremely high-energy particle events, created by neutrinos with energies of at least 30 TeV. The findings represented the first evidence for high-energy cosmic neutrinos, which originate outside our solar system.

“This is the dawn of a new age of astronomy,” principal investigator Francis Halzen commented at the time. Looking back, this development may indeed have heralded the onset of multimessenger astronomy – studying the Universe using not just electromagnetic radiation, but also information from high-energy neutrinos, gravitational waves and cosmic rays.

Tracking the source

Two years later, IceCube confirmed the cosmic origin of high-energy neutrinos with an independent search in the Northern Hemisphere. This study examined muon neutrinos reaching IceCube through the Earth, using the planet to filter out the large background of atmospheric muons. Data analysis suggested that more than half of the 21 neutrinos detected above 100 TeV were of cosmic origin.

Interestingly, the neutrino flux measured from the Northern Hemisphere had the same intensity as that from the Southern Hemisphere. This suggests that the bulk of the neutrinos are extragalactic; otherwise, sources in the Milky Way would dominate the flux around the galactic plane.

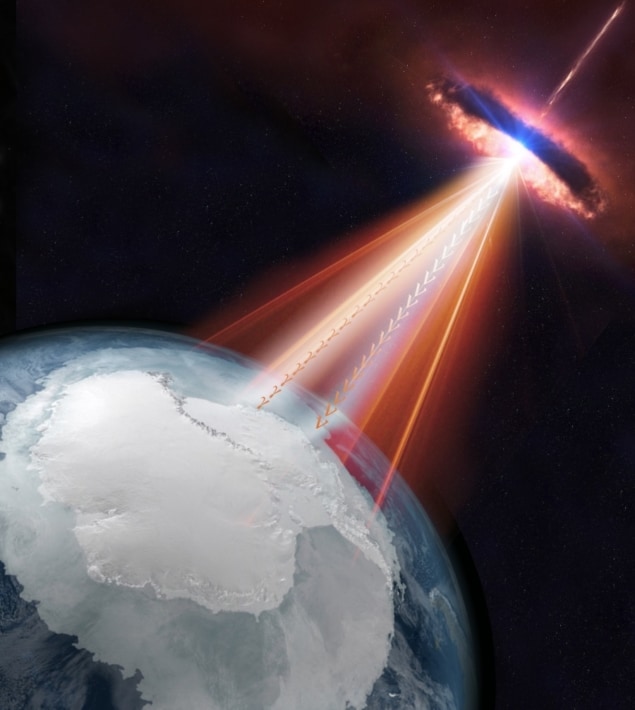

Then last year, IceCube published evidence that a known blazar – called TXS 0506+056 and located about 4 billion light years from Earth – was a source of high-energy neutrinos detected by the observatory. A blazar is an active galactic nucleus, with a massive spinning black hole at its core, that emits twin jets of light and elementary particles. By chance, one of these jets is aimed at Earth.

“The neutrinos detected in association with this blazar provided the first compelling evidence for a source of the high-energy extragalactic neutrinos that IceCube has been detecting,” explains IceCube spokesperson Darren Grant. “We anticipate, however, that blazars may not be the entire story. One of our recent papers looks at 10 years of IceCube data and we see there are some other sources that are becoming potentially interesting.”

Future upgrades

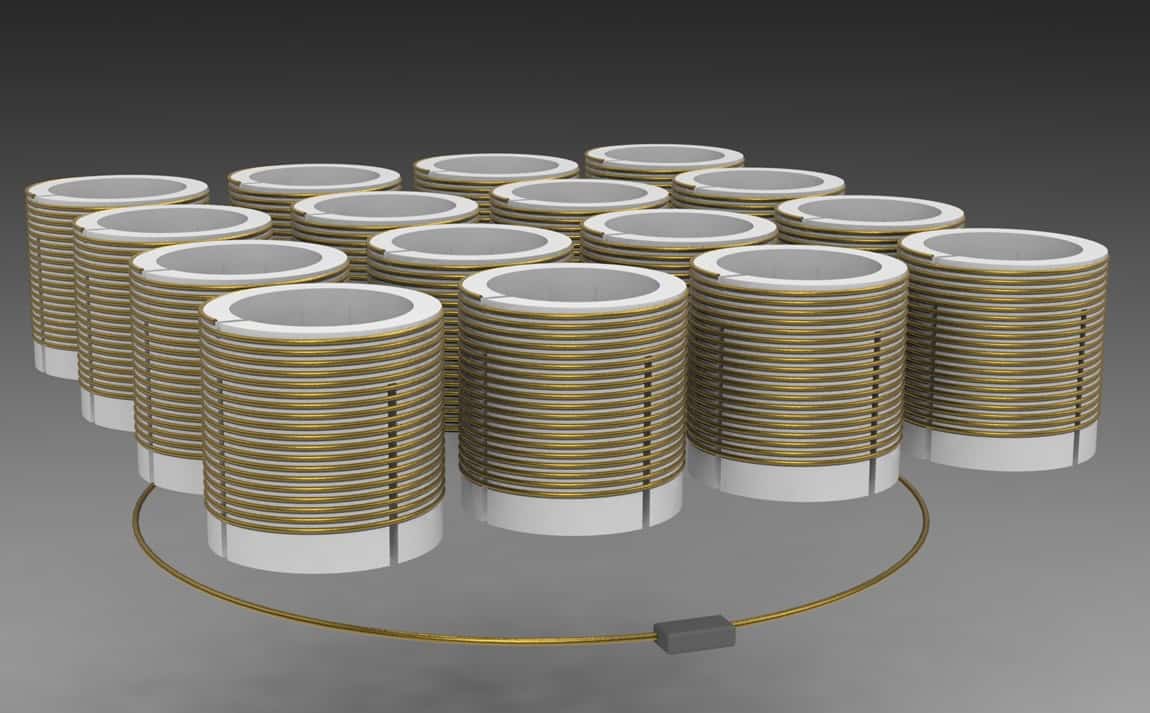

Next in the pipeline is the recently announced IceCube Upgrade, which will see another seven strings of optical modules installed among the existing strings. The upgrade will add more than 700 optical modules to the 5160 sensors already embedded in the ice, and should nearly double the detector’s sensitivity to cosmic neutrinos.

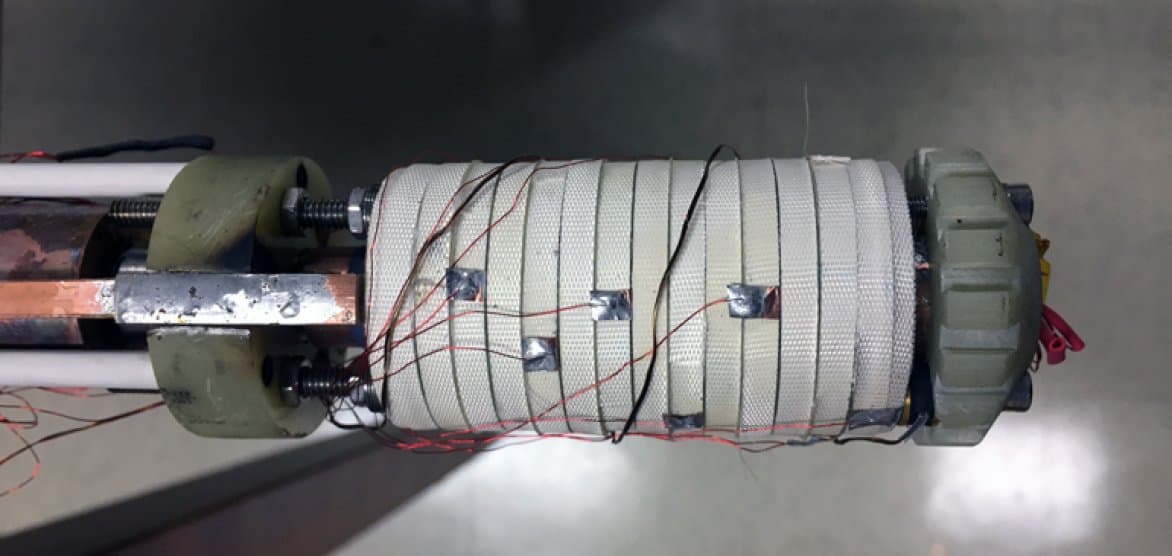

The new sensor modules will be two to three times more sensitive than those in the current detectors, thus collecting more of the Cherenkov light produced in each rare neutrino event. The enhanced modules will include multiple photomultiplier tubes (PMTs) – either two 8-inch or 24 3-inch PMTs – as well as incorporating advanced power and communication electronics.

IceCube has two key goals for the upgraded observatory. First, the close spacing of the new detectors will extend detection capabilities to lower-energy neutrino events. This will increase the precision of measurements of atmospheric neutrino oscillations, in which neutrinos transform from one type to another as they travel through space.
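To see why lower energies matter for oscillation measurements, consider the standard two-flavour vacuum approximation, in which the probability of a muon neutrino turning into a tau neutrino depends on the ratio of distance travelled to energy. The sketch below is a simplified illustration only. The mass splitting, the maximal mixing and the Earth-diameter baseline are assumed round values rather than figures from this article, and matter effects inside the Earth are ignored.

```python
import math

DM2_EV2 = 2.5e-3              # ASSUMPTION: atmospheric mass splitting, eV^2
SIN2_2THETA = 1.0             # ASSUMPTION: maximal mixing
EARTH_DIAMETER_KM = 12_742.0  # ASSUMPTION: baseline for a neutrino crossing the whole Earth


def appearance_probability(energy_gev,
                           baseline_km=EARTH_DIAMETER_KM,
                           dm2_ev2=DM2_EV2,
                           sin2_2theta=SIN2_2THETA):
    """Two-flavour vacuum probability for a muon neutrino to become a tau neutrino.

    P = sin^2(2 theta) * sin^2(1.267 * dm2 [eV^2] * L [km] / E [GeV])
    """
    phase = 1.267 * dm2_ev2 * baseline_km / energy_gev
    return sin2_2theta * math.sin(phase) ** 2


def first_maximum_energy_gev(baseline_km=EARTH_DIAMETER_KM, dm2_ev2=DM2_EV2):
    """Energy at which the oscillation phase equals pi/2 (first maximum)."""
    return 1.267 * dm2_ev2 * baseline_km / (math.pi / 2)


if __name__ == "__main__":
    e_max = first_maximum_energy_gev()
    print("first oscillation maximum (GeV):", e_max)
    for energy in (10.0, e_max, 100.0, 1000.0):
        print(energy, appearance_probability(energy))
```

With these assumed inputs the first oscillation maximum falls at a few tens of GeV, far below the TeV-scale energies of the cosmic neutrino events described earlier. At TeV energies the probability in this approximation is very small, so oscillation studies depend on the detector registering events at the low-energy end of its range.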

“In particular, this will let us conduct precision tests of oscillations to tau neutrinos that will explore if the neutrino mixing matrix is ‘unitary’ – a key check on the Standard Model of particle physics,” Grant explains.
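For readers who want the condition spelled out, unitarity can be written as a set of sum rules on the elements of the mixing matrix. The notation here follows the usual three-neutrino convention (matrix $U$, flavour indices $\alpha, \beta$, mass-state index $i$) and is not taken from the article or from Grant's remarks.

$$
\sum_{i=1}^{3} U_{\alpha i}\, U^{*}_{\beta i} = \delta_{\alpha\beta}, \qquad \alpha, \beta \in \{e, \mu, \tau\}
$$

Setting $\alpha = \beta = \tau$ gives the row normalisation most relevant to tau-neutrino measurements:

$$
|U_{\tau 1}|^{2} + |U_{\tau 2}|^{2} + |U_{\tau 3}|^{2} = 1
$$

A measured sum that differed significantly from 1 would suggest that the three known neutrino types do not give the complete picture.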

Another goal is re-calibration of the ice around the sensors for the entire IceCube detector. As well as enabling improved reconstructions of future neutrino events, this will also allow the team to reanalyse previous data with increased precision.

And IceCube’s development plans don’t stop there. Knowledge acquired while designing and deploying the new detectors will provide a launch point for a future extension, known as IceCube-Gen2, that will be ten times more sensitive. The team also intend to develop a shallow radio detector array that will significantly increase sensitivity to ultrahigh-energy neutrinos. This would give IceCube-Gen2 the ability to detect neutrinos with energies ranging from a few GeV to above an EeV.

“The current design would include about 120 additional strings of optical sensors deployed in the deep ice over a volume of about 6 cubic kilometres, while the radio array would encompass some 500 km² of instrumented volume,” Grant tells Physics World. “This represents a significant technological and logistical challenge; the aim is to have the full Gen2 in operation early in the 2030s.”