In this short video with Philips, filmed at ASTRO 2019, Laura Sluis and Michael Davis introduce an integrated approach to managing radiation therapy workflows. IntelliSpace Radiation Oncology offers a single vendor-neutral interface for multiple applications, making workflows more efficient and helping to reduce the time that patients need to wait for treatment. The workflow is protocol-driven, but can be customized to meet the needs of the clinic and the patient.

Open-access publishing: challenges and opportunities

This episode of the Physics World Weekly podcast celebrates International Open Access Week.

Matin Durrani speaks to Antonia Seymour, who is publishing director at IOP Publishing, about the challenges and rewards of publishing open-access journals. He also chats with Gábor Csányi, who is Professor of Molecular Modelling at the University of Cambridge and a member of the editorial board of the open-access journal Machine Learning: Science and Technology.

Also in the podcast is Margaret Harris, who reports back from the UK’s House of Commons where she met this year’s winners of the Institute of Physics Business Innovation Awards.

Low-cost kirigami sensor tracks shoulder movement

A low-cost sensor that can track shoulder movements has been unveiled by scientists in the US. The simple device is assembled in a flat configuration from a laser-cut sheet of plastic and two strain sensors. The principles of kirigami, the Japanese art of paper cutting and folding, allow the device to adopt the 3D shape of a user’s shoulder.

After breaking his collarbone in a bike crash, materials scientist and engineer Max Shtein was surprised when his physical therapist used a protractor to measure the range of motion in his shoulder. When he returned to his lab at the University of Michigan, he was determined to create a better solution.

Shoulder movement can be measured more accurately using motion-tracking camera systems, but Shtein felt that a wearable system would be better because it could monitor patients at home. “The shoulder, in particular, moves in a very complex way. It is one of the most well-articulated joints in the body,” Shtein explains. “Nobody has really been able to track it properly with anything wearable, and yet it is key to so many activities in sports and daily life.”

Unfolds like a spring

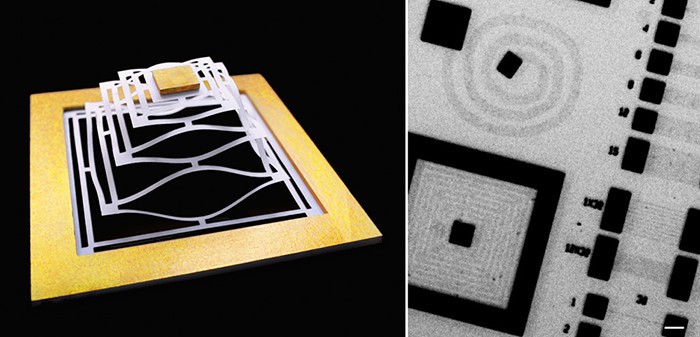

To accommodate this movement, his team turned to kirigami. This is a variation of origami that involves cutting and folding paper to create decorative shapes such as snowflakes. They used a laser to cut a pattern of rotationally symmetrical, concentric circles into a thin sheet of plastic, creating a device that unfolds like a spring into an interconnected network of alternating saddles. In use, the device opens over the shoulder, and flexes and strains as the shoulder moves.

Team member Erin Evke explains the benefits of kirigami: “If you take a sheet of paper and try to wrap it around a sphere, it’s impossible to do so without folding or wrinkling the paper. This would significantly stress the sensors before your measurement even began. Our cut pattern avoids this problem.”

The team measured the stress and strain across the kirigami device and also calculated its mechanical properties using finite-element analysis. Once they understood the response of the device, they added two strain gauges that allow the device to detect complex shoulder motions. A gauge at the top of the arm captures the raising and lowering of the limb, while a gauge at the back of the shoulder measures arm movement across the body.

Shoulder shrugs

When tested against a 19-camera motion tracking system, the kirigami shoulder sensor was found to accurately measure the raising, lowering, and circling of the arm. It was also able to detect movements such as running, shoulder shrugs and rotations of the wrist.

The team says that sensors could be mass produced for less than $10 each using existing technologies. Sensors could be incorporated into wearable devices and used by physical therapy patients to log exercises and progress outside of medical settings. Ultimately, Shtein and colleagues see kirigami sheets as a versatile sensing platform that could be used with different sensors to create a range of multifunctional health and movement monitoring devices.

Evke says that the sensors could be beneficial to athletes: “Since you can tune the cut design to match the curvatures of all different parts of your body, you can generate a lot of data that can be used to track your form – for instance while lifting – as well as the amount of strain applied on your joints. The user could be alerted of improper form in real-time and therefore prevent injuries.”

Electrical engineer and physicist Jamal Deen, of McMaster University in Canada, who was not involved in the research, recently produced a review of joint monitoring methods. He told Physics World that inertial measurement units (IMUs) based on micro-electromechanical systems have the most potential for developing wearable joint tracking systems, as they are compact, inexpensive and low-power. An IMU measures the motion of an object by measuring its linear and rotational accelerations. He adds, however, that to the best of his knowledge, “clinically accepted IMU-based joint monitoring systems are not available in the market”.

Deen believes that strain sensors are very suitable for joint monitoring systems, adding that integrating them with rotationally symmetric kirigami is a novel idea that needs further work. In particular, the current system is wired and the plastic sheets could hinder joint movement. “The developed system needs proper validation with respect to a clinically accepted system to prove its accuracy and efficacy,” he explains. “Finally, it is essential to include a wireless communication module and an independent power source to make the system completely wearable.”

Balancing the demands of renewable energy self-sufficiency

Energy “autarky”, a term that roughly means self-sufficiency, has obvious social and community advantages. They include reducing reliance on centralized suppliers and power imports from far-off destinations, as well as aiding local development. While the political ramifications of decentralized energy supply are much debated, there are also cost issues. It can be less efficient, and so more costly, to use smaller, local-generation schemes. In addition, local energy self-sufficiency, at whatever level, may not be viable for all areas, especially given local variations in renewable supply.

There is certainly a debate over the relative merits of national grids versus local autarky. For example, while the European Commission and the European Network of Transmission System Operators for Electricity “do not oppose local generation”, they both emphasize the benefits of grids, especially when it comes to the “cost-decreasing effect of integration and electricity trading among European countries”.

A new study led by the Potsdam Institute in Germany seeks to clarify the issues. It looks at the possibilities for local, regional and national autarky in renewable electricity generation/use in Europe. It assumes that area-based electricity independence/autonomy is only possible when the technical potential of renewable electricity exceeds local demand.

The work assesses the technical potential of roof-mounted and open-field photovoltaics as well as on- and off-shore wind turbines at all scales and locations — including at continental, national, regional and municipal levels — taking competing land use issues into account. It found that most regions across Europe were likely to be able to satisfy their electricity needs by using solar and wind power and that the renewable electricity potential exceeds demand at continental and national levels, with the total potential at a Europe-wide level of 15 000 TWh per year — over four times current demand. But it warns that developing these systems would increase pressure on land-use around large cities and towns while some municipalities might have to import power from outside.

Local shortages

The report found that the “technical-social potential” of renewable electricity beats demand on the European and national levels. For more local autarky, however, the situation is different. Here, demand exceeds generation in several regions — an effect that is stronger when the population density is higher. To reach electricity autarky below the national level, regions would need to use “very large fractions or all of their non-built-up land” for renewable electricity generation.
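The autarky criterion described above can be sketched in a few lines. This is a minimal illustration under simplifying assumptions that are ours, not the study's: each region is summarized by one annual demand figure and one technical-potential figure computed over all of its eligible non-built-up land, and output is assumed to scale linearly with the land used. The `Region` class and the example numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Region:
    """Hypothetical summary of a region's annual electricity balance."""
    name: str
    demand_twh: float     # annual electricity demand (TWh per year)
    potential_twh: float  # renewable technical potential using all eligible land (TWh per year)


def can_be_autarkic(region: Region) -> bool:
    """Self-sufficiency is only possible if the potential meets or exceeds demand."""
    return region.potential_twh >= region.demand_twh


def land_fraction_needed(region: Region) -> float:
    """Share of eligible non-built-up land needed to cover demand.

    Assumes output scales linearly with land area; a value above 1.0
    means that even using all eligible land would not be enough.
    """
    return region.demand_twh / region.potential_twh


# Hypothetical example regions (illustrative numbers only).
rural = Region("rural district", demand_twh=2.0, potential_twh=10.0)
city = Region("dense city", demand_twh=20.0, potential_twh=5.0)

for r in (rural, city):
    print(r.name, can_be_autarkic(r), round(land_fraction_needed(r), 2))
```

A fraction close to or above 1.0 corresponds to the report's finding that some regions would need "very large fractions or all of their non-built-up land".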

That might be hard given there may be competing uses for the land. Indeed, the study concludes that electricity autarky below the national level is often not possible in densely populated areas in Europe. In fact, it found more than 3000 European municipalities that cannot be self-sufficient due to insufficient land area.

The bottom line of the report is that large cities may lack enough renewable potential with the only way to self-sufficiency being to co-operate with surrounding regions or assign all their undeveloped land to generating renewable electricity — an outcome that may not be desirable. “Ultimately, it is a balancing act between self-sufficiency and more intensive local land use on the one hand and the acceptance of imports together with greater cooperation with other municipalities, regions and countries in Europe on the other,” says the report’s co-author Tim Tröndle from the Potsdam Institute.

Rural options

It is a useful study, even if it only covers power and not other energy needs. Yet it does not look at ways to balance competing demands for land, which could be a key issue for local-level autarky. Certainly, as the study admits, even without balancing issues, it may be hard to achieve local autarky in high-density urban areas. Some might say it is foolish to try. Indeed, that view may be reinforced by another interesting study, which suggests that PV solar, usually seen as well-suited to use on urban roof-tops, is in fact better deployed in rural areas.

That may seem counterintuitive, given that there could be conflicts between solar farms and land for growing crops. However, the US-based study suggests that the two can be compatible, via PV arrays on stands or semi-transparent PV materials that allow crops to grow underneath. Moreover, PV cells’ efficiency falls off significantly with rising temperature, so local rural microclimates can help with cooling. Hot urban roof-tops, and indeed hot deserts (another common choice for big arrays), are not ideal.

The study suggests that growing crops in the intermittent shade cast by the PV panels “does not necessarily diminish agricultural yield”. It concludes that global energy demand could be met by “agrivoltaics” if less than 1% of agricultural land, at the median power potential of 28 W/m², were suitable for agrivoltaic systems and converted to dual use. But it warns that the lack of energy storage and the “temporal variance” in the availability of solar energy will restrict this expansion.
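To get a feel for the 28 W/m² figure, it helps to convert a power density into annual energy. The sketch below assumes (our assumption, not stated in the study summary) that 28 W/m² is a time-averaged output, so annual energy is simply power density × area × hours in a year; the function name is illustrative.

```python
HOURS_PER_YEAR = 8760  # hours in a non-leap year
WH_PER_TWH = 1e12      # 1 TWh = 10^12 Wh


def annual_energy_twh(power_density_w_per_m2: float, area_km2: float) -> float:
    """Annual energy (TWh) from a time-averaged power density spread over an area."""
    area_m2 = area_km2 * 1e6  # 1 km^2 = 10^6 m^2
    return power_density_w_per_m2 * area_m2 * HOURS_PER_YEAR / WH_PER_TWH


per_km2 = annual_energy_twh(28.0, 1.0)
print(f"{per_km2:.3f} TWh per km^2 per year")  # prints 0.245 TWh per km^2 per year
```

On this assumption, each square kilometre of dual-use land would supply roughly a quarter of a terawatt-hour a year, before any storage or transmission losses.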

So we have not escaped the balancing problem, although trading power via the grid from solar farms (and wind farms) dotted widely around the country might be part of the solution, with cities taking their share.

The roof-top solar resource

While PV cooling issues are important, it obviously makes sense, in terms of avoiding environmental intrusion, to use domestic or commercial roof-tops for PV wherever possible rather than land sites. Indeed, various PV cooling options are being developed for roof-mounted PV, including hybrid PV thermal (PVT) systems that make use of the collected heat.

The EU’s cost-competitive roof-top solar PV resource has been estimated in a recent study at 680 TWh, or 25% of EU power. Interestingly, in that study, France is seen as having the largest potential solar power output, while Portugal leads in terms of potential solar power as a percentage of total generation (around 40%). That is based on a projected future levelised cost of energy (LCOE) in the range €6–21 per MWh, with the lowest costs obviously being in the sunnier south.

Another recent study has looked in more detail at projected PV costs across the EU, although this is for larger utility schemes, most of which are likely to be land based. It suggests that by 2030, utility-scale PV LCOE will range from €14 per MWh in Malaga to €24 per MWh in Helsinki, assuming a 7% weighted average cost of capital, and that this range will fall to €9–15 per MWh by 2050.
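LCOE figures like these come from discounting a plant's lifetime costs and lifetime output to present value and taking their ratio. A minimal sketch under our own simplifying assumptions (a single up-front capital cost, constant annual operating costs, a fixed annual degradation rate); it is not the study's model. The default 7% discount rate mirrors the weighted average cost of capital mentioned above, and the example plant figures are invented purely for illustration.

```python
def lcoe(capex, opex_per_year, energy_mwh_per_year, lifetime_years,
         discount_rate=0.07, degradation=0.0):
    """Levelised cost of electricity: discounted lifetime cost / discounted lifetime output.

    capex is paid up front (year 0); operating costs and output occur in
    years 1..lifetime_years, and output falls by `degradation` each year.
    Returns cost per MWh in the same currency as the inputs.
    """
    discounted_cost = capex
    discounted_energy = 0.0
    for year in range(1, lifetime_years + 1):
        factor = (1 + discount_rate) ** year  # present-value discount factor
        discounted_cost += opex_per_year / factor
        discounted_energy += energy_mwh_per_year * (1 - degradation) ** (year - 1) / factor
    return discounted_cost / discounted_energy


# Hypothetical 1 MW plant: EUR 400 000 capex, EUR 8 000/yr O&M,
# 1 500 MWh/yr output, 30-year life, 0.5%/yr output degradation.
print(round(lcoe(400_000, 8_000, 1_500, 30, degradation=0.005), 1))
```

Because future output is discounted as well as future costs, a higher cost of capital raises the LCOE even when the hardware price is unchanged.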

Projecting costs so far ahead is obviously fraught with uncertainty, but it does seem clear that PV solar is likely to get very cheap and boom across the EU. A new report by the International Energy Agency says that PV will lead the field, with PV solar making up 60% of the huge 1 200 GW of new renewable capacity expected globally by 2024. However, the best choice of site for PV deployment remains a matter of debate. Even leaving cooling issues aside, large utility schemes, most of which are on the ground, are usually much more competitive than smaller roof-top domestic schemes. Yet the eco-impacts of ground-mounted schemes are higher and they can’t easily be located in dense urban areas. Urban energy autarky clearly isn’t going to be easy.

Deep learning algorithm helps diagnose neurological emergencies

Head CT is used worldwide to assess neurological emergencies and detect acute brain haemorrhages. Interpreting these head CT scans requires readers to identify tiny, subtle abnormalities, with near-perfect sensitivity, within a 3D stack of greyscale images characterized by poor soft-tissue contrast, low signal-to-noise ratio and a high incidence of artefacts. As such, even highly trained experts may miss subtle life-threatening findings.

To increase the efficiency, and potentially also the accuracy, of such image analysis, scientists at UC San Francisco (UCSF) and UC Berkeley have developed a fully convolutional neural network, called PatchFCN, that can identify abnormalities in head CT scans with accuracy comparable to that of highly trained radiologists. Importantly, the algorithm also localizes the abnormalities within the brain, enabling physicians to examine them more closely and determine the required therapy (PNAS 10.1073/pnas.1908021116).

“We wanted something that was practical, and for this technology to be useful clinically, the accuracy level needs to be close to perfect,” explains co-corresponding author Esther Yuh. “The performance bar is high for this application, due to the potential consequences of a missed abnormality, and people won’t tolerate less than human performance or accuracy.”

The researchers trained the neural network with 4396 head CT scans performed at UCSF. They then used the algorithm to interpret an independent test set of 200 scans, and compared its performance with that of four US board-certified radiologists. The algorithm took just 1 s on average to evaluate each stack of images and perform pixel-level delineation of any abnormalities.

PatchFCN demonstrated the highest accuracy to date for this clinical application, with an area under the ROC curve (AUC) of 0.991±0.006 for identification of acute intracranial haemorrhage. It exceeded the performance of two of the radiologists, in some cases identifying small abnormalities missed by the experts. The algorithm could also classify the subtype of the detected abnormalities – as subarachnoid haemorrhage or subdural haematoma, for example – with comparable results to an expert reader.

The researchers point out that head CT interpretation is regarded as a core skill in radiology training programmes, and that the most skilled readers demonstrate sensitivity/specificity of between 0.95 and 1.00. PatchFCN achieved a sensitivity of 1.00 at specificity levels approaching 0.90, making it a suitable screening tool with an acceptable level of false positives.
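Sensitivity, specificity and the area under the ROC curve can all be computed from labelled exam results. The sketch below uses made-up labels and scores (not data from the study) and computes AUC as the probability that a randomly chosen positive exam scores higher than a randomly chosen negative one, counting ties as half. Lowering the decision threshold is how a screening tool trades specificity for a sensitivity of 1.00.

```python
def sensitivity_specificity(labels, predictions):
    """Return (sensitivity, specificity) for 0/1 labels and boolean predictions."""
    tp = sum(1 for y, p in zip(labels, predictions) if y and p)
    fn = sum(1 for y, p in zip(labels, predictions) if y and not p)
    tn = sum(1 for y, p in zip(labels, predictions) if not y and not p)
    fp = sum(1 for y, p in zip(labels, predictions) if not y and p)
    return tp / (tp + fn), tn / (tn + fp)


def roc_auc(labels, scores):
    """ROC AUC via pairwise comparison of positive and negative scores."""
    pos = [s for y, s in zip(labels, scores) if y]
    neg = [s for y, s in zip(labels, scores) if not y]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))


# Hypothetical exams: 1 = haemorrhage present, 0 = absent.
labels = [1, 1, 0, 0, 0]
scores = [0.9, 0.6, 0.7, 0.2, 0.1]

threshold = 0.5  # a low threshold favours sensitivity over specificity
predictions = [s >= threshold for s in scores]
sens, spec = sensitivity_specificity(labels, predictions)
print(sens, spec, roc_auc(labels, scores))
```

Here every positive is caught (sensitivity 1.0) at the cost of one false positive, which is the screening trade-off described in the text.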

“Achieving 95% accuracy on a single image, or even 99%, is not OK, because in a series of 30 images, you’ll make an incorrect call on one of every two or three scans,” says Yuh. “To make this clinically useful, you have to get all 30 images correct – what we call exam-level accuracy. If a computer is pointing out a lot of false positives, it will slow the radiologist down, and may lead to more errors.”
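The arithmetic behind Yuh's point is that per-image errors compound across a stack. A minimal sketch, assuming (our simplification) that each of the 30 images is read independently with the same accuracy; the exact rate at which whole exams go wrong depends on how errors are actually distributed, and real errors are likely to be correlated.

```python
def exam_level_accuracy(per_image_accuracy: float, n_images: int = 30) -> float:
    """Probability that every image in an exam is called correctly.

    Assumes errors on different images are independent, which is a
    simplification: real errors are likely to be correlated.
    """
    return per_image_accuracy ** n_images


for acc in (0.95, 0.99):
    p_all_correct = exam_level_accuracy(acc)
    print(f"per-image {acc:.2f}: exam correct {p_all_correct:.2f}, "
          f"exam with at least one error {1 - p_all_correct:.2f}")
```

Even at 99% per-image accuracy, roughly a quarter of 30-image exams would contain at least one wrong call under this model.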

According to co-corresponding author Jitendra Malik, the key to the algorithm’s high performance lies in the data fed into the model, with each small abnormality in the training images manually delineated at the pixel level. “We took the approach of marking out every abnormality – that’s why we had much, much better data,” he explains. “We made the best use possible of that data. That’s how we achieved success.”

The team is now applying the algorithm to CT scans from US trauma centres enrolled in a research study led by UCSF’s Geoffrey Manley.

The hard sell of quantum software

When John Preskill coined the phrase “quantum supremacy” in 2011, the idea of a quantum computer that could outperform its classical counterparts felt more like speculation than science. During the 25th Solvay Conference on Physics, Preskill, a physicist at the California Institute of Technology, US, admitted that no-one even knew the magnitude of the challenge. “Is controlling large-scale quantum systems merely really, really hard,” he asked, “or is it ridiculously hard?”

Eight years on, quantum supremacy remains one of those technological watersheds that could be either just around the corner or 20 years in the future, depending on who you talk to. However, if claims in a recent Google report hold up (which they may not), the truth would seem to favour the optimists – much to the delight of a growing number of entrepreneurs. Across the world, small companies are springing up to sell software for a type of hardware that could be a long, long way from maturity. Their aim: to exploit today’s quantum machines to their fullest potential, and get a foot in the door while the market is still young. But is there really a market for quantum software now, when the computers that might run it are still at such an early stage of development?

Showing promise

Quantum computers certainly have potential. In theory, they can solve problems that classical computers cannot handle at all, at least in any realistic time frame. Take factorization. Finding prime factors for a given integer can be very time consuming, and the bigger the integer gets, the longer it takes. Indeed, the sheer effort required is part of what keeps encrypted data secure, since decoding the encrypted information requires one to know a “key” based on the prime factors of a very large integer. In 2009, a dozen researchers and several hundred classical computers took two years to factorize a 768-bit (232-digit) number used as a key for data encryption. The next number on the list of keys consists of 1024 bits (309 digits), and it still has not been factorized, despite a decade of improvements in computing power. A quantum computer, in contrast, could factorize that number in a fraction of a second – at least in principle.
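Trial division, the simplest classical factoring method, shows why large keys are hard: in the worst case it must test divisors up to √n, a number of steps that grows exponentially with the bit length of n. This is only an illustration; record factorizations such as the 768-bit one use far more sophisticated algorithms (notably the general number field sieve), which are much faster but still super-polynomial. The second helper checks the bit-to-digit conversions quoted in the text.

```python
def trial_division(n: int) -> list[int]:
    """Prime factors of n by trial division (only practical for small n)."""
    factors = []
    d = 2
    while d * d <= n:          # only divisors up to sqrt(n) need testing
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:                  # whatever remains is itself prime
        factors.append(n)
    return factors


def max_decimal_digits(bits: int) -> int:
    """Decimal digits in the largest integer of the given bit length."""
    return len(str(2 ** bits - 1))


print(trial_division(3233))                               # [53, 61]
print(max_decimal_digits(768), max_decimal_digits(1024))  # 232 309
```

Each extra bit roughly multiplies the worst-case number of trial divisors by √2, which is why this approach is hopeless for 1024-bit keys.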

Other scientific problems also defy classical approaches. A chemist, for example, might know the reactants and products of a certain chemical reaction, but not the states in between, when molecules are joining or splitting up and their electrons are in the process of entangling with each other. Identifying these transition states might reveal useful information about how much energy is needed to trigger the reaction, or how much a catalyst might be able to lower that threshold – something that is particularly important for reactions with industrial applications. The trouble is that there can be a lot of electronic combinations. To fully model a reaction involving 10 electrons, each of which has (according to quantum mechanics) two possible spin states, a computer would need to keep track of 2¹⁰ = 1024 possible states. A mere 50 electrons would generate more than a quadrillion possible states. Get up to 300 electrons, and you have more possible states than there are atoms in the visible universe.
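The counting in this paragraph follows from each electron contributing two possible spin states, so n electrons have 2ⁿ combinations. A quick check of the three figures quoted, taking the commonly cited estimate of roughly 10^80 atoms in the observable universe as the comparison point (that estimate is our addition, not the article's):

```python
def spin_configurations(n_electrons: int) -> int:
    """Number of joint spin configurations for n two-state electrons."""
    return 2 ** n_electrons


ATOMS_IN_UNIVERSE_ESTIMATE = 10 ** 80  # commonly quoted order-of-magnitude estimate

print(spin_configurations(10))                                # 1024
print(spin_configurations(50) > 10 ** 15)                     # True: over a quadrillion
print(spin_configurations(300) > ATOMS_IN_UNIVERSE_ESTIMATE)  # True
```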

Classical computers struggle with tasks like these because the bits of information they process can only take definite values of zero or one, and therefore can only represent individual states. In the worst case, therefore, states have to be worked through one by one. By contrast, quantum bits, or qubits, do not take a definite value until they are measured; before then, they exist in a strange state between zero and one, and their values are influenced by whatever their neighbours are doing. In this way, even a small number of qubits can collectively represent a huge “superposition” of possible states for a system of particles, making even the most onerous calculations possible.
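One way to see why this matters is to simulate qubits classically. The minimal state-vector sketch below (a standard textbook technique, not any particular company's software) applies a Hadamard gate to each of n qubits starting from the all-zeros state, producing an equal superposition over all 2ⁿ basis states. The classical list needs 2ⁿ entries, while a quantum device holds the same state in n qubits. Amplitudes are kept real only because Hadamard gates acting on the all-zeros state never introduce complex phases; general states need complex numbers.

```python
import math

H_FACTOR = 1 / math.sqrt(2)


def apply_hadamard(state, qubit):
    """Apply a Hadamard gate to one qubit of a state vector (list of amplitudes)."""
    new = state[:]
    step = 1 << qubit  # index offset between basis states differing only in this qubit
    for i in range(len(state)):
        if i & step == 0:
            a, b = state[i], state[i | step]
            new[i] = H_FACTOR * (a + b)
            new[i | step] = H_FACTOR * (a - b)
    return new


def hadamard_all(n_qubits):
    """Start in the all-zeros state and put every qubit into superposition."""
    state = [0.0] * (2 ** n_qubits)  # 2**n amplitudes for n qubits
    state[0] = 1.0
    for q in range(n_qubits):
        state = apply_hadamard(state, q)
    return state


s = hadamard_all(3)
print(len(s), s[0])  # 8 amplitudes, each equal to 1/sqrt(8)
```

Doubling the memory for every added qubit is exactly why classical simulation runs out of steam at a few dozen qubits.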

Near-term improvements

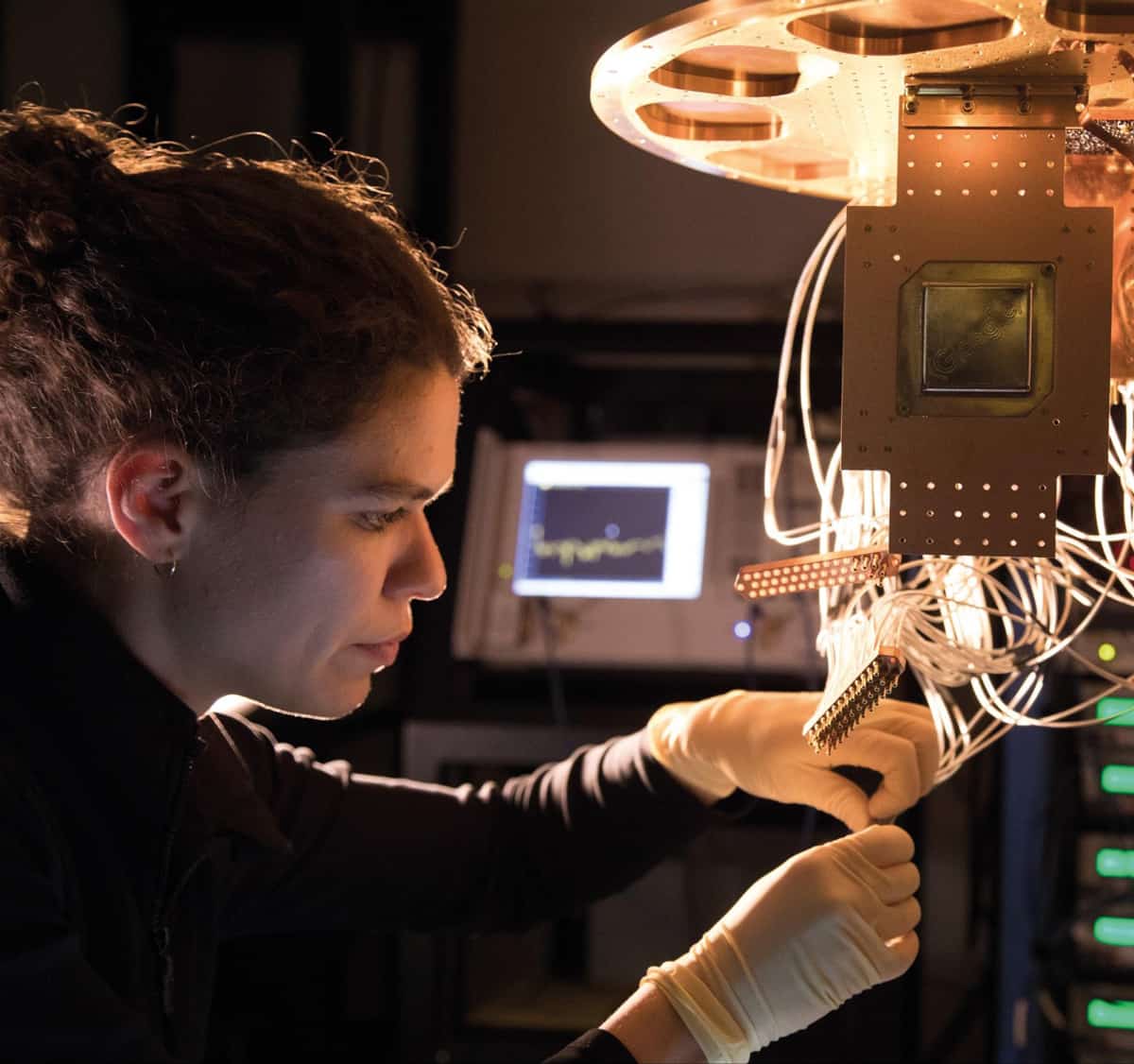

Today’s biggest universal quantum computer – or at least the biggest one anyone is talking about publicly – is Google’s Bristlecone, which consists of 72 superconducting qubits. In second place comes IBM’s 50-qubit superconducting model. Other firms, including Intel, Rigetti and IonQ, have built smaller yet still sizeable quantum computers based on superconducting, electron, trapped-ion and other types of qubit. “In terms of advanced machines capable of processing more than one or two qubits, there are several dozen systems currently in existence globally,” says Michael Biercuk, a quantum physicist at the University of Sydney, Australia.

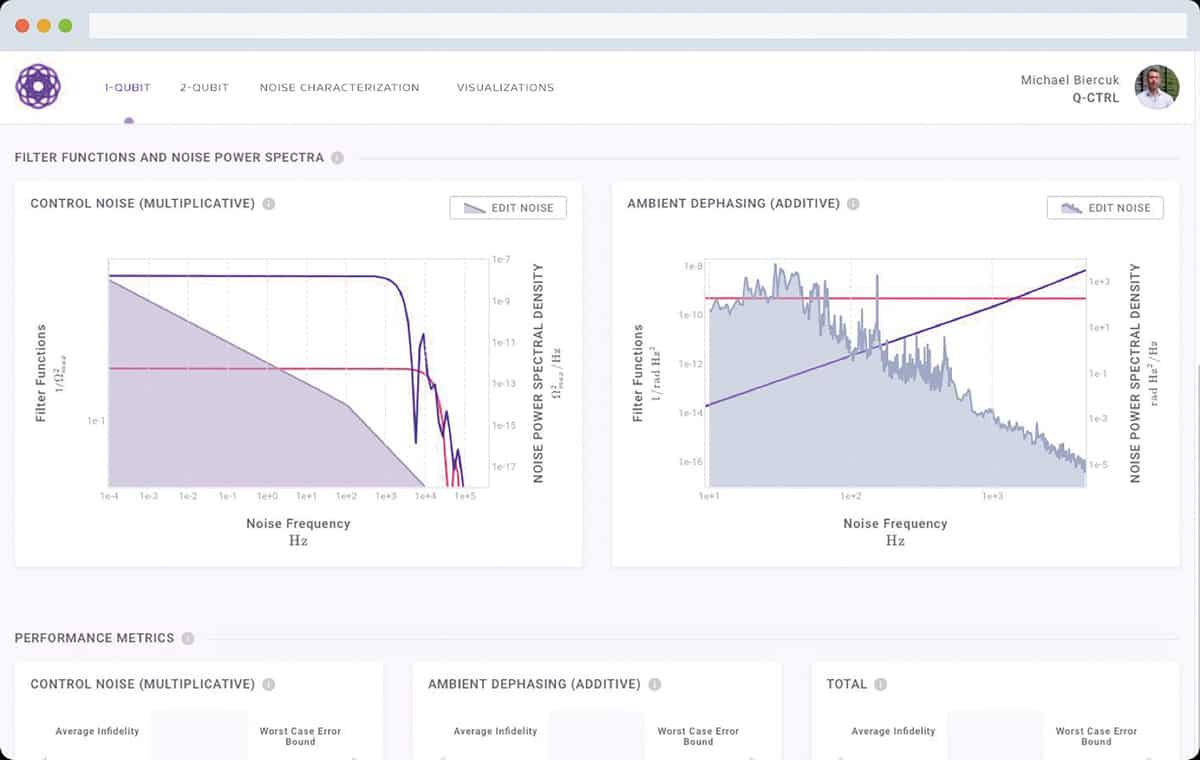

That might not sound like enough to constitute a market for quantum software. However, Biercuk argues that the overall market for quantum technologies – including not just computing but also sensing, metrology and imaging – could be worth billions of dollars in the next four years. In 2017, Biercuk gained “multi-million dollar” funding from venture capitalists to found a company, Q-CTRL, and take a slice of this pie. Now, two years later, the company has 25 employees, has reported an additional $15m in investment and is in the process of opening a second office in Los Angeles, US.

The reason for Q-CTRL’s strong start is partly that it is not waiting for the perfect quantum computer to come along. Instead, it is selling software designed to improve the quantum computers already in existence. The major potential for improvement lies in error correction. Whereas a transistor in a classical computer makes an error on average once in a billion years, a qubit in the average quantum computer makes an error once every thousandth of a second, usually because it interacts undesirably with another qubit (“cross-talk”) or with the external environment (the quantum-mechanical effect known as decoherence). Quantum error-correction schemes can make up for these errors by combining many fragile physical qubits into a single composite “logical” qubit, so that the effects of cross-talk and decoherence are diluted. Doing this, however, requires more qubits – thousands, even millions more – which are not in great supply to begin with.
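The idea of diluting errors across many physical qubits can be illustrated with its simplest classical analogue, a three-copy repetition code decoded by majority vote. This is a deliberately simplified sketch: real quantum codes (the surface code, for example) cannot simply copy qubit states and must also correct phase errors, which is one reason they need far more physical qubits. With an independent flip probability p per copy, the vote fails only when two or more copies flip, giving 3p²(1 − p) + p³, which is smaller than p whenever p < 1/2.

```python
import random


def logical_error_rate(p: float) -> float:
    """Failure probability of majority voting over three independent copies."""
    return 3 * p**2 * (1 - p) + p**3


def simulate_logical_error_rate(p: float, trials: int = 100_000, seed: int = 1) -> float:
    """Monte Carlo estimate of the same quantity."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        flips = sum(rng.random() < p for _ in range(3))
        if flips >= 2:  # majority of copies corrupted, so the vote is wrong
            failures += 1
    return failures / trials


for p in (0.1, 0.01, 0.001):
    print(p, logical_error_rate(p), simulate_logical_error_rate(p))
```

The logical error rate scales as p², so a physical error rate of one in a thousand becomes roughly three in a million, at the price of tripling the hardware.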

Q-CTRL’s software builds on Biercuk’s academic research by employing machine-learning techniques to identify the logic operations most prone to qubit error. Once these bothersome operations are identified, the software replaces them with alternatives that are more nuanced but mathematically equivalent. As a result, the overall error rate drops without recourse to more qubits. According to Biercuk, these clever substitutions mean that a client’s algorithm can be executed in a way that minimizes the effects of decoherence, and enables longer, more complex programs to be run on a given quantum computer – without making any improvement in the hardware.
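Q-CTRL's actual methods are not described here beyond the outline above, so the following is only an illustration of what "mathematically equivalent" means: two different sequences of operations whose overall effect, the product of their matrices, is identical. The standard identity HZH = X (Hadamard, phase flip, Hadamard equals a bit flip) is checked numerically; which sequence is preferable on real hardware would depend on the device's error characteristics. In general, quantum operations only need to agree up to an overall global phase, whereas this simple check tests exact equality.

```python
import math


def matmul(a, b):
    """Multiply two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def same_operation(u, v, tol=1e-12):
    """True if two 2x2 matrices agree entry by entry (exact equality, no global phase)."""
    return all(abs(u[i][j] - v[i][j]) < tol for i in range(2) for j in range(2))


s = 1 / math.sqrt(2)
H = [[s, s], [s, -s]]   # Hadamard
Z = [[1, 0], [0, -1]]   # phase flip
X = [[0, 1], [1, 0]]    # bit flip

hzh = matmul(matmul(H, Z), H)  # the sequence H, Z, H as a single matrix
print(same_operation(hzh, X))  # True
```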

Though Biercuk declines to reveal how many clients his company has, he says they range from quantum-computing newcomers to expert research and development teams. “Overall, our objective is to educate where appropriate, and deliver maximum value to solve their problems, irrespective of their level of experience in our field,” he explains.

First applications

Research suggests that error-reduction software could play a major role in establishing near-term applications for quantum computers. The same year Q-CTRL was founded, scientists at Microsoft Research in Washington, US, and ETH Zurich in Switzerland investigated the number of qubits required to simulate the behaviour of an enzyme called nitrogenase. Bacteria use this enzyme to make ammonia directly from atmospheric nitrogen. If humans could understand this process well enough to reproduce it, the carbon footprint associated with producing nitrogen fertilizer would plummet. The good news, according to the Microsoft-ETH team, is that an error-free quantum computer would require only 100 or so qubits to simulate the nitrogenase reaction. The bad news is that once the team took into account the error rates of today’s quantum machines, the estimated number of qubits rose into the billions.

Reducing qubit numbers to something more manageable is a key priority for Steve Brierley. In 2017 Brierley, a mathematician at the University of Cambridge, UK, founded a company called Riverlane to develop quantum software, including error correction. Today, with £3.25m in seed funding from venture capital firms Cambridge Innovation Capital and Amadeus Capital Partners under his belt, Brierley is optimistic. “We now think we can do [the nitrogenase simulation] with millions of qubits, and soon we hope to do it with hundreds of thousands,” he says. “And that’s because of improvements in software, not hardware.”

Unlike Q-CTRL, Riverlane is developing software aimed at potential users of quantum computers, rather than the manufacturers of those computers. Although early applications are likely to be research-oriented – Brierley mentions the simulation of new, high-performance materials as well as problems in industrial chemistry – he chose to pursue them outside the university environment because it is easier to assemble a diverse range of expertise outside the silos of academia. In a university, he says, “You don’t typically see a physicist, a computational chemist, a mathematician and a computer scientist in the same room.” Then he laughs. “It sounds like the beginning of a joke.”

There is another reason Brierley chose to develop software for quantum computing outside academia. In a company, he explains, you are forced to focus on the problem at hand, rather than being distracted by tangential lines of research that could make an interesting paper. As a result, he says, “if we do our job well, we’ll get there in five years rather than 10.”

Brierley’s estimate, however, raises a question: if even the optimists think applications are five years away, why form a company now? In his answer, Brierley points out that there are precedents for companies founded before the technology they hope to profit from is ready. The US animation firm Pixar, for instance, was created in 1986, well before it was possible to make feature-length computer-animated films. Riverlane’s current clients, Brierley says, are “early movers” who want to understand how quantum computing will impact their field.

A quantum hype cycle?

Quite when that impact will happen is a matter of debate, and some observers think its imminence has been overstated. In 2017, scientists at Google, one of the biggest and most vocal quantum-computing developers, claimed that their universal quantum processor, which was then based on 49 qubits, stood a chance of demonstrating quantum supremacy by the end of that year. It didn’t happen. The following year, they repeated the prediction for their newer, 72-qubit computer. Again, it didn’t happen. Now they are pinning their hopes on what remains of 2019. In June, Hartmut Neven, engineering director of Google’s Quantum Artificial Intelligence lab, declared, “The writing is on the wall.”

Shortly before this article was published, a report from Google suggested that the US computing firm has finally made good on its promise. However, the name “quantum supremacy” can be misleading. To achieve it, a quantum computer would have to outperform the world’s most powerful classical supercomputer, which is currently IBM’s Summit at Oak Ridge National Laboratory in Tennessee, US. Technically, however, this milestone could be reached by running an algorithm that was specifically designed to exploit the rift between quantum and classical computing, and that had no practical use – as appears to be the case with Google’s result. Yudong Cao, a computer scientist and co-founder of Zapata Computing in Massachusetts, US, notes that there are other complicating factors in the debate. What if a quantum computer beats the most powerful classical computer, but then another, more powerful classical computer comes along? What if the quantum computer is fractionally faster, but the classical machine is more economical to use?

Despite questions surrounding the different “flavours of quantum usefulness”, as Cao puts it, he believes that some version of it could be just a few years away. “Eventually, we will get to a stage where we can say there is sufficient scientific evidence to say that a certain [quantum computation] has practical relevance, and is beyond anything a classical computer could do,” he says.

Cao’s company was founded on such optimism. Zapata is targeting customers who believe they have problems that could be addressed by “near term” quantum computers – ones that operate with more than 100 high-quality qubits. In a typical case, says Cao, a customer will come to Zapata with the problem they are working on, and the people at Zapata will screen it to see which aspects have solutions obtainable with classical computing, and which with quantum. If part of the problem needs a quantum computer, the question then is whether that part is within the capacity of systems likely to be available in the next two to five years. Only a “small subset” of quantum-type problems fit into this category, Cao says.

For problems where the prospects are good, Zapata aims to create an operating system that will enable customers to run simulations. This operating system will automatically separate the simulation into its quantum and classical components, but it will only interact with the customer in a higher-level, classical language. Hence, the customer will not need to bother with the intricacies of qubits and quantum behaviour to simulate their problems.

According to Cao, Zapata has “quite a few” customers already, at various stages of development. “Some have ongoing projects, while others are in the process of being screened to see what aspects of their problem could be suitable,” he explains. Like other quantum-computing entrepreneurs, he declines to discuss specific problems he and his colleagues are working on.

Past precedent

Commercially driven secrecy is one reason why it is impossible to say when the quantum-computing revolution will truly arrive, and to what degree software will hasten it. The website quantumcomputingreport.com lists more than 100 private start-ups, focusing on applications ranging from finance and logistics to video rendering, drug research and cybersecurity. The numbers suggest that demand is high, and Cao thinks the industry is at a similar stage to the classical-computing industry in the 1940s. Then, too, there were many ideas, but the best approaches were still far from being established. Some early computers were based on valves, for instance, while others were based on electromechanical switches. “In the same way, the programmers had to be very mindful of the limitations of the computers,” Cao says. “They had to be careful how to allocate memory, they had to pre-process the problem as much as they could, and send only the most tedious parts to the machine.”

If the comparison between quantum and classical computing is valid, it is worth pointing out how long it took for classical computing to really establish itself. After the transistor was invented in 1947, another 25 years passed before the first microprocessors hit the shelves. But Cao thinks that researchers in quantum computing have a distinct advantage over their predecessors. “Computational complexity theory did not exist. Numerical linear algebra did not exist. Cloud computing did not exist,” he says. “We have a lot more ammunition we can throw at the challenge.”

Is Google’s quantum supremacy not so supreme after all?

Google’s Sycamore quantum processor hit the headlines in September when a leaked draft paper suggested that the device is the first to have achieved quantum supremacy by solving a problem more than a billion times faster than a conventional (classical) supercomputer. But now, researchers at IBM claim that the Google team has vastly overestimated how long the problem would take to solve on a supercomputer – saying that the actual speed-up offered by Sycamore is more like a factor of 1000.

The final version of the Google paper has since been published in Nature and describes how 53 programmable superconducting quantum bits were used to determine the output of a randomly chosen quantum circuit. While the calculation has no practical application, it does provide a way of comparing the performance of Sycamore with that of a classical computer.

The quantum calculation took 200 s to complete and the Google researchers estimated that the same calculation would take 10,000 years to execute on a state-of-the-art supercomputer.

Days, not millennia

But now, IBM’s Edwin Pednault, John Gunnels and Jay Gambetta argue that the same task could be performed in just 2.5 days using the Summit supercomputer at Oak Ridge National Laboratory in the US. Writing in the IBM Research Blog, they say that the Summit calculation would be more accurate than the Sycamore calculation and that, with further optimization, the execution time could be reduced.
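The headline ratios follow from simple arithmetic on the quoted run times. The Python sketch below uses only the figures given in this article (200 s for Sycamore, 10,000 years for Google's classical estimate, 2.5 days for IBM's Summit estimate); the 365.25-day year is our own assumption.

```python
# Speed-up arithmetic using only the run times quoted in the article.
# Assumption (ours, not the article's): a year of 365.25 days.
SECONDS_PER_DAY = 24 * 60 * 60
SECONDS_PER_YEAR = 365.25 * SECONDS_PER_DAY

sycamore_s = 200.0                              # Sycamore run time
google_classical_s = 10_000 * SECONDS_PER_YEAR  # Google's supercomputer estimate
ibm_summit_s = 2.5 * SECONDS_PER_DAY            # IBM's estimate for Summit


def speedup(classical_s: float, quantum_s: float) -> float:
    """Ratio of classical run time to quantum run time."""
    return classical_s / quantum_s


print(f"Google's implied speed-up: {speedup(google_classical_s, sycamore_s):.1e}")
print(f"IBM's implied speed-up:    {speedup(ibm_summit_s, sycamore_s):.0f}")
```

Run as written, it prints about 1.6e+09 for Google's estimate ("more than a billion times faster") and 1080 for IBM's, which is where the "factor of 1000" comes from.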

The IBM trio say that the Google researchers overestimated the classical calculation time because they failed to fully consider the “plentiful” hard-disk data storage resources available on supercomputer systems.

They write, “Google’s experiment is an excellent demonstration of the progress in superconducting-based quantum computing, showing state-of-the-art gate fidelities on a 53-qubit device, but it should not be viewed as proof that quantum computers are ‘supreme’ over classical computers”.

Overused term

The trio also cautions physicists about the casual use of the term “quantum supremacy”, which is usually understood to describe the ability (at least in principle) of a quantum computer to do a calculation that would take a classical computer an impractically long time to complete.

They write, “we urge the [quantum computing] community to treat claims that, for the first time, a quantum computer did something that a classical computer cannot with a large dose of scepticism due to the complicated nature of benchmarking an appropriate metric”.

The term quantum supremacy is attributed to Caltech’s John Preskill, who has recently lamented his choice of words. As well as the unfortunate association with the term white supremacy, Preskill is also concerned about the hype surrounding the word supremacy.

IBM researchers provide a technical description of how the Summit supercomputer calculation could be done in a preprint on arXiv.

- The nascent quantum software industry is profiled by Jon Cartwright in “The hard sell of quantum software”.

Magnetism, light and sound – a Takis retrospective

Pioneering artist Panagiotis Vassilakis (1925–2019), known professionally as Takis, spanned the divide between art and science, breaking conventions with his “kinetic” sculpture that used electromagnetism to explore the concept of energy. Born in Athens, Takis moved to Paris in 1954 and became a key figure of the avant-garde in Paris, London and New York. The renowned Signals London gallery was named after his influential series of antennae-like sculptures, and became an important hub for the transmission of ideas and the dissolution of barriers between the arts and sciences.

From July to October this year, 70 of Takis’ major works are on display at the Tate Modern gallery in London, a fitting tribute to the recently deceased artist who sought to communicate the poetry and beauty of the electromagnetic universe. The exhibition, simply titled Takis, was co-curated by writer and curator Guy Brett, who was closely involved in Signals London, together with the Tate’s international art curator Michael Wellen and assistant curator Helen O’Malley.

Takis became fascinated by electromagnetism in 1959, describing it as “an infinite, invisible thing, that doesn’t belong to earth alone. It is cosmic; but it can be channelled”. An early work of his – Oscillating Parallel Line / Ligne parallèle vibrative (1965) – involved suspending a needle in air using a powerful magnet, to highlight how magnetism can overcome the pull of gravity. “I would like to render [electromagnetism] visible so as to communicate its existence and make its importance known; I would like to make visible this invisible, colourless, non-sensual, naked world which cannot irritate our eye, taste or sex. Which is simply pure thought.” The artist was never too focused on the “visual qualities” of his work, saying instead that “what I was obsessed with was the concept of energy”.

Despite covering a lifetime of his work, the Tate exhibition is not chronological. Instead it is thematically arranged, with rooms dedicated to the themes of magnetism and metal, light and darkness, and sound and silence, all using elements of electromagnetism to dramatic effect. Takis dubbed his magnetism-inspired works “telemagnetic” and these mostly use magnetic fields to suspend metal objects in space, appearing to float as if defying gravity. He was interested in the gaps between the floating objects where “energy” clearly existed but there was nothing to see or touch. A highlight of the exhibition is Magnetic Fields (1969), where magnetic pendulums trigger movement from nearly 100 sculptures. On loan from the Guggenheim Museum, this is being displayed for the first time since the 1970s.

In the light and darkness section, displayed in a darkened gallery, several works are made with glowing blue mercury-arc rectifiers, such as Télélumière No. 4 (1963–1964). These glass bulb valves, containing a pool of mercury, were once commonly used for converting high-voltage or high-current alternating current (AC) into direct current (DC). The arc between the mercury pool and multiple metal anodes only allows current to pass in one direction. The rectifier is started using an ignition electrode, which an external electromagnet dips into the mercury pool and then withdraws, drawing a spark that ionizes the mercury vapour and initiates the arc.
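The one-way action of a multi-anode rectifier can be illustrated numerically. The Python sketch below is an idealized textbook model, not a description of the components in Takis's works: it assumes each anode is fed by one phase of a balanced multiphase AC supply, that the arc always conducts through whichever anode is most positive, that the output is taken between the mercury-pool cathode and the supply's neutral point, and that the arc's voltage drop is negligible. The function names are our own.

```python
import math


def rectified_output(t: float, n_anodes: int, v_peak: float = 1.0, freq_hz: float = 50.0) -> float:
    """Idealized output voltage of an n-anode rectifier at time t (seconds).

    Assumptions: each anode is fed by one phase of a balanced n-phase AC supply,
    the arc conducts through whichever anode is most positive, and the arc's
    voltage drop and commutation overlap are ignored. When no anode is positive
    (possible only with a single anode), no current flows and the output is zero.
    """
    omega = 2 * math.pi * freq_hz
    phase_voltages = [
        v_peak * math.sin(omega * t - 2 * math.pi * k / n_anodes)
        for k in range(n_anodes)
    ]
    return max(0.0, max(phase_voltages))


def mean_output(n_anodes: int, samples: int = 20_000, freq_hz: float = 50.0) -> float:
    """Average output over one supply period, as a fraction of the peak phase voltage."""
    period = 1.0 / freq_hz
    total = sum(
        rectified_output(i * period / samples, n_anodes, 1.0, freq_hz)
        for i in range(samples)
    )
    return total / samples


for n in (1, 2, 3, 6):
    print(f"{n} anode(s): mean DC level = {mean_output(n):.3f} x peak")
```

Because current can only leave through the most positive anode, more anodes give a smoother and higher DC level: for two or more anodes the average should approach the standard result (n/π)·sin(π/n) of the peak phase voltage, roughly 0.64 for two anodes and 0.95 for six.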

These components were usually hidden from view in power substations or railway controls, but Takis saw their beauty. “Seeing them turn on is a really incredible experience,” says O’Malley. “The gas turns a really extraordinary kind of aqua blue colour, but it’s a really slow build, until it reaches full brightness.” The Tate set these works to switch on and off in 15-minute increments, and it’s worth the wait.

These works have a distinctly post-war pre-transistor feel. Takis sourced many of his materials from military surplus shops and flea markets, salvaging radio antennas and control dials from US army Jeeps or aeroplanes. As with his art, his electronics skills were self-taught. “When you see the back of a lot of the artwork, the wiring and the electrics is total chaos. You can tell that it was constructed by an amateur,” adds O’Malley.

The final section of the exhibition concentrates on sound and the acoustic devices Takis created, which are part kinetic sculpture, part musical instrument. In the exhibited sequence of his Musicals, an electromagnet is switched on and off to make a needle bounce against a stretched and amplified wire, giving out the sound of a single prolonged note that starts and stops as the needle hits and drops away from the wire. Perhaps with his Greek heritage in mind, Takis was interested in the mystical ideas of Pythagoras and the concept of the music of the spheres – the idea that mathematical relationships express qualities of energy, manifested in numbers, angles, shapes and sounds. The somewhat jarring sounds he created will not be to everyone’s taste though. “Being surrounded by eight or nine of those works is actually a really intense experience that some people love, and others hate, finding it really uncomfortable,” says O’Malley.

The works displayed in this exhibition demonstrate how art can illuminate scientific concepts, and most physicists will understand the feeling of awe his sculptures generate. Takis himself clearly had the spirit of an experimentalist. “You hear anecdotes about explosions, and of combining materials and then running behind the couch because he was nervous something might happen,” says O’Malley.

He also worked with scientists and engineers during a fellowship at MIT in 1968, where, together with engineer Ain Sonin, he invented a floating wheel to harness wave energy. The “sea oscillation” device was tested in Boston harbour that year and could generate 1–6 W of power. Once perhaps symbols of the scientific cutting edge, these works now provide a beautiful showcase of technology from a past era and a chance to rethink our own relationship to the electromagnetic universe.

-

Takis is open 3 July – 27 October 2019. Co-curated by Guy Brett, Michael Wellen and Helen O’Malley. The exhibition is organized by Tate Modern in collaboration with the MACBA Museu d’Art Contemporani de Barcelona and the Museum of Cycladic Art, Athens.

Your pathway to a future in nuclear science

“What we offer is a vocational programme, the whole goal of which is to support the nuclear industry,” says Gavin Smith of The University of Manchester. NTEC, the Nuclear Technology Education Consortium, was formed in 2005 to tackle concerns that not enough students were graduating from UK universities to meet the needs of the nuclear sector, be it in operation, decommissioning or new build. It was set up following detailed consultations with the whole of the UK nuclear sector including businesses, regulators, the Ministry of Defence, the Nuclear Decommissioning Authority, government departments and the Cogent Sector Skills Council.

Seven institutions – the universities of Birmingham, Central Lancashire, Leeds, Liverpool, Manchester and Sheffield, as well as the Nuclear Department, Navy Command – provide 16 different course units that are directly taught. Nine of these are also available in a distance-learning format, with another six units being converted over the next couple of years. Students can graduate with an MSc, a postgraduate diploma or a postgraduate certificate, or take individual units for continuous professional development (CPD).

Bespoke training

The NTEC courses are distinctive in that they are aimed at both graduates straight out of university and industry professionals seeking to broaden their skills and knowledge. “A unique aspect of our courses is the delivery method,” says Smith, who explains that each course unit is taught in a one-week short format. “Instead of a student having a course delivered over a one-hour slot every Monday morning at nine o’clock for a full semester, they take eight course units for the full MSc, and study each one for a week at one of the member universities.”

Each course unit includes about 35 hours of direct contact, but totals around 150 hours when including pre- and post-coursework, exams and study time. This approach allows the training to be accessed by people working in industry, who might find it easier to take one week out of a work schedule, rather than attending a full semester of classes. “They can do a part-time course over three years, where they do four modules in year one, four modules in year two and then do their project in year three,” says Smith. “That’s the kind of model we were told by industry that they prefer, rather than having day release or losing an employee for a full year.” The course offers a broad scope of choice and flexibility, with supervisors providing recommendations depending on the interests of each student.
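The time commitment implied by these figures can be tallied directly. This Python sketch uses only the approximate per-unit hours quoted above and leaves out the research project, whose hours are not stated; the constant and function names are our own.

```python
# Approximate figures quoted for each NTEC course unit.
CONTACT_HOURS_PER_UNIT = 35   # direct contact during the teaching week
TOTAL_HOURS_PER_UNIT = 150    # including pre- and post-coursework, exams and study
UNITS_FOR_MSC = 8

# Part-time pattern described by Smith: four units in each of years one and two,
# then the project in year three (project hours are not given, so not counted).
PART_TIME_UNITS_BY_YEAR = {1: 4, 2: 4, 3: 0}


def taught_hours(units: int) -> tuple[int, int]:
    """Return (contact hours, total study hours) for a number of course units."""
    return units * CONTACT_HOURS_PER_UNIT, units * TOTAL_HOURS_PER_UNIT


contact, total = taught_hours(UNITS_FOR_MSC)
print(f"Full MSc taught units: ~{contact} contact hours, ~{total} hours in total")
for year, units in PART_TIME_UNITS_BY_YEAR.items():
    _, year_total = taught_hours(units)
    print(f"Part-time year {year}: {units} units, ~{year_total} hours of taught-unit study")
```

On these numbers the eight taught units amount to roughly 280 contact hours and about 1200 hours of study in all, spread as about 600 hours in each of the first two part-time years.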

Broad spectrum

NTEC encourages applications from graduates with a wide variety of science and engineering backgrounds, including those with degrees in aerospace, chemical engineering, chemistry, civil engineering, computer science, materials, mechanical engineering and, of course, physics. Applicants straight from university will be required to have at least a 2:2 degree in a relevant discipline.

For applicants with some years’ industrial experience, a lesser qualification may be acceptable. However, each application is considered individually – the main criterion being whether a registering university believes that a potential student has a good chance of completing the programme successfully.

As part of the degree, full-time students also undertake a research project during the summer. “We start thinking about projects around April, and we encourage most full-time students to do the project within industry,” says Smith. “This gives them a flavour of industry and provides a stepping stone between university life and going into industry once they’ve finished their studies.”

Part-time students do their project in their third year, mostly at their own company, which Smith says lets them “contribute to their employer’s goals and research requirements”.

Practical experience

One former full-time NTEC student is Saralyn Thomas, who now works in the nuclear industry. “It’s such a fantastic place to work, and it truly is an exciting time to join the industry with increased support from the UK government on various nuclear projects as the world comes to fully appreciate that net zero needs nuclear,” says Thomas. “Pursuing a career in the nuclear industry was the best decision I have ever made – the second best being the NTEC course, which opened those doors for me.”

For Thomas, the course gave her the opportunity to meet other like-minded individuals, some of whom were already working in the nuclear sector. “It also gave me a fantastic insight into what it would be like to work in different areas of the industry,” says Thomas, for whom a summer placement at the National Nuclear Laboratory through NTEC cemented her desire to join the nuclear industry. “If we are to meet our net zero goals with nuclear as a key part of that energy mix, it is critical that we get the right people into the industry so we can fill the skills shortage which we currently face in the sector – and NTEC is just the course you need to equip yourself with those skills.”

Another former full-time NTEC student is Sophie Jackson, who has now worked in the nuclear industry for more than six years. Her job involves ensuring nuclear material is safe and reducing risks to as low as reasonably practicable. Jackson has worked in many areas, from fuel enrichment to decommissioning, none of which she says would have been possible without attending the NTEC master’s degree.

“I studied from 2014 to 2015 and can honestly say it was one of the best decisions I have ever made,” says Jackson. “The course prepares you for industry like no other, it is designed by industry for industry professionals and students wanting to kick start a career in nuclear.”

Before finishing the course, she had already been accepted for a job as a nuclear safety engineer at BAE Systems Submarine Ltd, having met the team during a visit to its Barrow site organised by NTEC. “You have the chance to meet many nuclear professionals throughout the course which helped me know what area I wanted to work in and allowed me to develop relationships with professionals in the nuclear industry,” she adds.

Students on the NTEC courses also benefit from lectures given by external industrial experts as well as an annual bespoke careers fair. “We invite all the nuclear companies that support the programme, so that students are able to talk to them about projects and possible employment once they complete their studies,” says Smith.

The consortium has an external advisory board, to ensure that the course quality does not drop, and that its content is relevant. As Smith points out: “Ultimately our course was formed on the basis of a big stakeholder consultation, to see what industry wants today and what it needs in the future.”

For more information on the course and how to apply, visit the NTEC website: www.ntec.ac.uk. Note that all course units will eventually be converted to fully distance learning.

Applications are open from now until the start of the course in September 2019, and most students can apply for funding through a postgraduate master’s loan. Applications for single modules are open year-round – you should submit your request at least four weeks prior to the module taking place.

Entry requirements for the Nuclear Science and Technology MSc, postgraduate diploma and postgraduate certificate are the same. Applicants straight from university are required to have at least a 2:2 degree in a relevant discipline. Part-time UK students are accepted with the lower qualification of a higher national certificate (HNC) as long as they have at least seven years’ work experience in the nuclear industry.

Applicants whose first language is not English must have achieved one of the following levels of proficiency:

• IELTS – 7.0 with no less than 6.0 in any sub-test

• TOEFL – scores of at least 95 overall with no less than 24 in reading, 22 in listening, 25 in speaking and 24 in writing
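The two alternative language requirements amount to a simple rule check. The Python sketch below is purely illustrative: the class and function names are hypothetical, do not correspond to any NTEC or university system, and encode only the thresholds listed above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class IeltsScore:
    overall: float
    listening: float
    reading: float
    writing: float
    speaking: float


@dataclass
class ToeflScore:
    overall: int
    reading: int
    listening: int
    speaking: int
    writing: int


def meets_ielts(s: IeltsScore) -> bool:
    # 7.0 overall, with no sub-test below 6.0
    return s.overall >= 7.0 and min(s.listening, s.reading, s.writing, s.speaking) >= 6.0


def meets_toefl(s: ToeflScore) -> bool:
    # At least 95 overall plus the per-section minimums listed above
    return (
        s.overall >= 95
        and s.reading >= 24
        and s.listening >= 22
        and s.speaking >= 25
        and s.writing >= 24
    )


def meets_english_requirement(
    ielts: Optional[IeltsScore] = None, toefl: Optional[ToeflScore] = None
) -> bool:
    # "One of the following" levels is enough, so either test can satisfy the rule
    return (ielts is not None and meets_ielts(ielts)) or (
        toefl is not None and meets_toefl(toefl)
    )


print(meets_english_requirement(ielts=IeltsScore(7.0, 6.5, 6.0, 7.0, 7.5)))
```

Note that a high overall score alone is not sufficient: a TOEFL total of 110 still fails if the speaking section is below 25.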

Contact: info.ntec@manchester.ac.uk, +44 (0)161 275 4267/161 275 1246

Strategic wind farm siting could help seabirds

Back in 2016 the US installed its first offshore wind farm: Block Island Wind Farm, off the coast of Rhode Island. Many more projects are underway and the US government plans to produce 86 GW from offshore wind by 2050, enough to power around 60 million homes. But how will these new wind farms affect US seabird populations? A new study shows careful placement can help minimize the exposure of foraging birds that may be vulnerable to widespread development.

Offshore wind farms are already commonplace in Europe, particularly in the North Sea where there are over 80, producing more than 18 GW. Research from Europe has shown that offshore wind farms can affect seabirds, both directly – when birds collide with turbines – and indirectly, when birds lose habitat as a result of their tendency to avoid wind farm areas. When multiple offshore wind farms are installed, there are concerns that there may be cumulative effects on seabirds.

Wing Goodale from the University of Massachusetts at Amherst and the Biodiversity Research Institute, US, and colleagues wondered if the cumulative impact on seabirds could be reduced by careful design of the spatial arrangement of offshore wind farms. To investigate, they modelled the exposure of seven foraging seabird guilds – groups of birds that rely on the same set of resources – under three different wind farm siting scenarios along the US east coast.

Their findings show that cumulative effects are more likely for coastal birds than pelagic birds, which spend most of their time on the ocean and away from land.

“Coastal bottom gleaners, such as sea ducks, and coastal divers, such as loons, are vulnerable to displacement, whilst coastal surface gleaners such as gulls are vulnerable to collision,” says Goodale. Pelagic birds like puffins, razorbills, fulmars and shearwaters are less vulnerable because their habitat is less disturbed.

The results, which are published in Environmental Research Letters (ERL), show that some birds will be exposed wherever the wind farms are placed. Gulls will be exposed the most in near-shore and shallow water locations, whilst pelagic birds will be exposed the most in high wind areas, which tend to be further out to sea.

Proposed wind energy areas already avoid known bird hotspots such as the Nantucket Shoals and aim to disperse developments along most of the east coast, from South Carolina to Massachusetts. The new findings suggest that the detailed siting of the wind farms is important too.

“We recommend that when two or more wind farms are sited in one wind energy area they should, to the extent practicable, be separated as much as possible, to provide movement corridors for species vulnerable to displacement,” says Goodale.

Spreading out developments even further may help to minimize adverse impacts on seabirds. Goodale and colleagues suggest that future siting should focus on areas with no current leases, such as the Gulf of Maine.