The 2024 Nobel prizes in both physics and chemistry were awarded, for the first time, to scientists who have worked extensively with artificial intelligence (AI). Computer scientist Geoffrey Hinton and physicist John Hopfield shared the 2024 Nobel Prize for Physics. Meanwhile, half of the chemistry prize went to computer scientists Demis Hassabis and John Jumper from Google DeepMind, with the other half going to the biochemist David Baker.

The chemistry prize highlights how profoundly AI has transformed science. Hassabis and Jumper developed AlphaFold2 – a cutting-edge AI tool that can predict the structure of a protein based on its amino-acid sequence. It revolutionized this area of science and has since been used to predict the structure of almost all 200 million known proteins.

The physics prize was more controversial, given that AI is not traditionally seen as physics. Hinton, who has a background in psychology, works in AI and helped develop “backpropagation” – a key part of machine learning that enables neural networks to learn. For this work he shared the 2018 Turing award from the Association for Computing Machinery, which some consider the computing equivalent of a Nobel prize. The physics came mostly from the Hopfield network, developed by Hopfield, and the Boltzmann machine, which Hinton built on it. Both draw on ideas from statistical physics and are now fundamental to AI.

While the Nobels sparked debate in the community about whether AI should be considered physics or chemistry, I don’t see a problem with the boundaries and definitions of subjects having moved on. Indeed, the science of AI has clearly had a huge impact. Yet the Nobel Prize for Physiology or Medicine, awarded to Victor Ambros and Gary Ruvkun for their work on microRNA, sparked a different, albeit well-worn, controversy: no more than three people can share a science Nobel prize, in a world where scientific breakthroughs are increasingly collaborative.

No-one would doubt that Ambros and Ruvkun deserve their honour, but many complained that Rosalind Lee, who is married to Ambros, was overlooked for the award. She was the first author of the 1993 paper (Cell 75 843) that was cited for the prize. While I don’t see strong arguments for including Lee simply because she was the first author or because she is married to the last author (as she herself has said), the case highlights the problem of how to credit teams and whether the lab lead should always receive the praise.

Instead, what set off alarm bells for me was the demographics of this year’s science Nobel winners. It was hard not to notice that all seven were white men born or living in the UK, the US or Canada. To put this in context, white men in those three countries make up just 1.8% of the world’s population. A 2024 study by the economist Paul Novosad from Dartmouth College in the US and colleagues examined the income rank of the fathers of previous Nobel laureates. If laureates’ fathers were spread evenly across that ranking, only about 5% would come from the top 5%; instead, the study found that over half come from the top 5% in terms of wealth.

This is concerning because, taken together with other demographic data, it suggests that less than 1% of people in the world can succeed in science at this level. We should not accept that such a tiny demographic is born “better” at science than anyone else. The Nobel prizes highlight that we have a biased system in science and that little is being done to level the playing field.

Increasing the talent pool

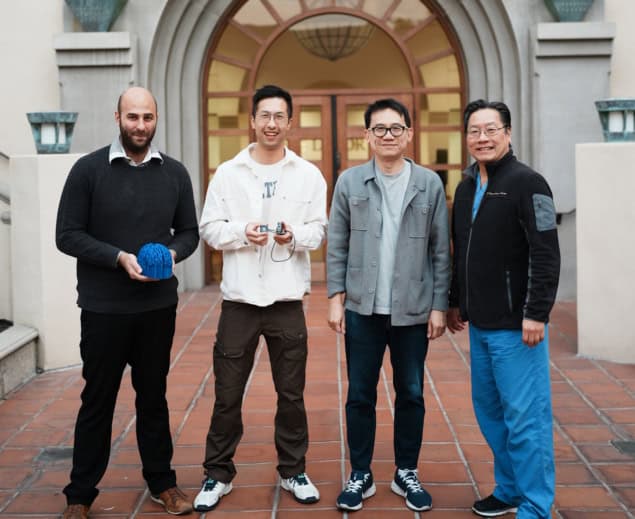

Non-white people in western countries have historically been oppressed and excluded or discouraged from science, a problem that remains unaddressed today. The Global North is home to a quarter of the world’s population but claims 80% of the world’s wealth and dominates the Global South both politically and economically. The Global North continues to acquire wealth from poorer countries through resource extraction, exploitation and the use of transnational corporations. Many scientists in the Global South simply cannot fulfil their potential: they lack the resources for equipment, are unable to attend conferences and cannot even afford journal subscriptions.

Moreover, women and Black scientists, worldwide and even within the Global North, are not proportionally represented among Nobel laureates. Data show that men are more likely than women to receive grants and are awarded almost double the funding on average. Institutions also tend to hire and promote men more than women. At CERN, for example, women make up 15% of staff in science-related areas. That is below the 20–25% of people in the field who are women (22% of CERN users are women), a figure that is itself only about half the share of women in the global population.

AI will continue to play a stronger and more entangled role in the sciences, and it is promising that the Nobel prizes have moved beyond traditional subject boundaries, in step with modern, interdisciplinary science. Yet the demographics of the winners paint a discouraging picture of our political, educational and scientific systems. Can we as a community help reshape a structure that currently favours those from affluent backgrounds, and work harder to reach out to young people, especially those from disadvantaged backgrounds?

Imagine the benefit not only to science – with a greater pool of talent – but also to society and our young students when they see that everyone can succeed in science, not just the privileged 1%.