Physics World is delighted to announce its Top 10 Breakthroughs of the Year for 2024, which includes research in nuclear and medical physics, quantum computing, lasers, antimatter and more. The Top 10 is the shortlist for the Physics World Breakthrough of the Year, which will be revealed on Thursday 19 December.

Our editorial team has looked back at all the scientific discoveries we have reported on since 1 January and has picked 10 that we think are the most important. In addition to being reported in Physics World in 2024, the breakthroughs must meet the following criteria:

- Significant advance in knowledge or understanding

- Importance of work for scientific progress and/or development of real-world applications

- Of general interest to Physics World readers

Here, then, are the Physics World Top 10 Breakthroughs for 2024, listed in no particular order. You can listen to Physics World editors make the case for each of our nominees in the Physics World Weekly podcast. And, come back next week to discover who has bagged the 2024 Breakthrough of the Year.

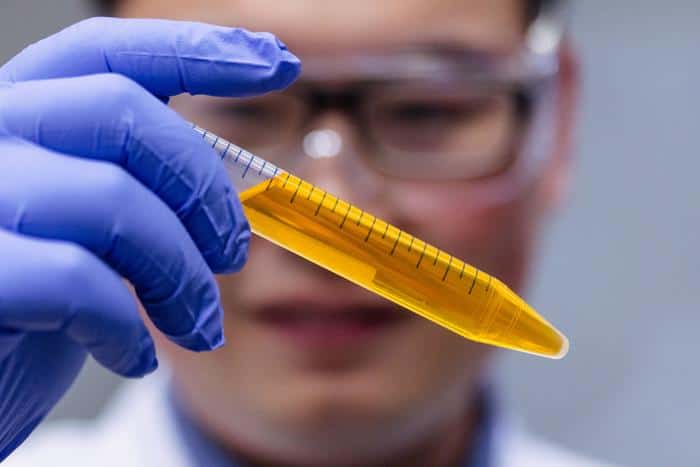

Light-absorbing dye turns skin of live mouse transparent

To a team of researchers at Stanford University in the US for developing a method to make the skin of live mice temporarily transparent. One of the challenges of imaging biological tissue using optical techniques is that tissue scatters light, which makes it opaque. The team, led by Zihao Ou (now at The University of Texas at Dallas), Mark Brongersma and Guosong Hong, found that the common yellow food dye tartrazine strongly absorbs near-ultraviolet and blue light and can help make biological tissue transparent. Applying the dye to the abdomen, scalp and hind limbs of live mice enabled the researchers to see internal organs, such as the liver, small intestine and bladder, through the skin without requiring any surgery. They could also visualize blood flow in the rodents’ brains and the fine structure of muscle sarcomere fibres in their hind limbs. The effect can be reversed by simply rinsing off the dye. This “optical clearing” technique has so far only been conducted on animals. But if extended to humans, it could help make some types of invasive biopsies a thing of the past.

Laser cooling positronium

To the AEgIS collaboration at CERN, and Kosuke Yoshioka and colleagues at the University of Tokyo, for independently demonstrating laser cooling of positronium. Positronium, an atom-like bound state of an electron and a positron, is created in the lab to allow physicists to study antimatter. Currently, it is created in “warm” clouds in which the atoms have a large distribution of velocities, making precision spectroscopy difficult. Cooling positronium to low temperatures could open up novel ways to study the properties of antimatter. It also enables researchers to produce one to two orders of magnitude more antihydrogen – an antiatom comprising a positron and an antiproton that’s of great interest to physicists. The research also paves the way to use positronium to test current aspects of the Standard Model of particle physics, such as quantum electrodynamics, which predicts specific spectral lines, and to probe the effects of gravity on antimatter.

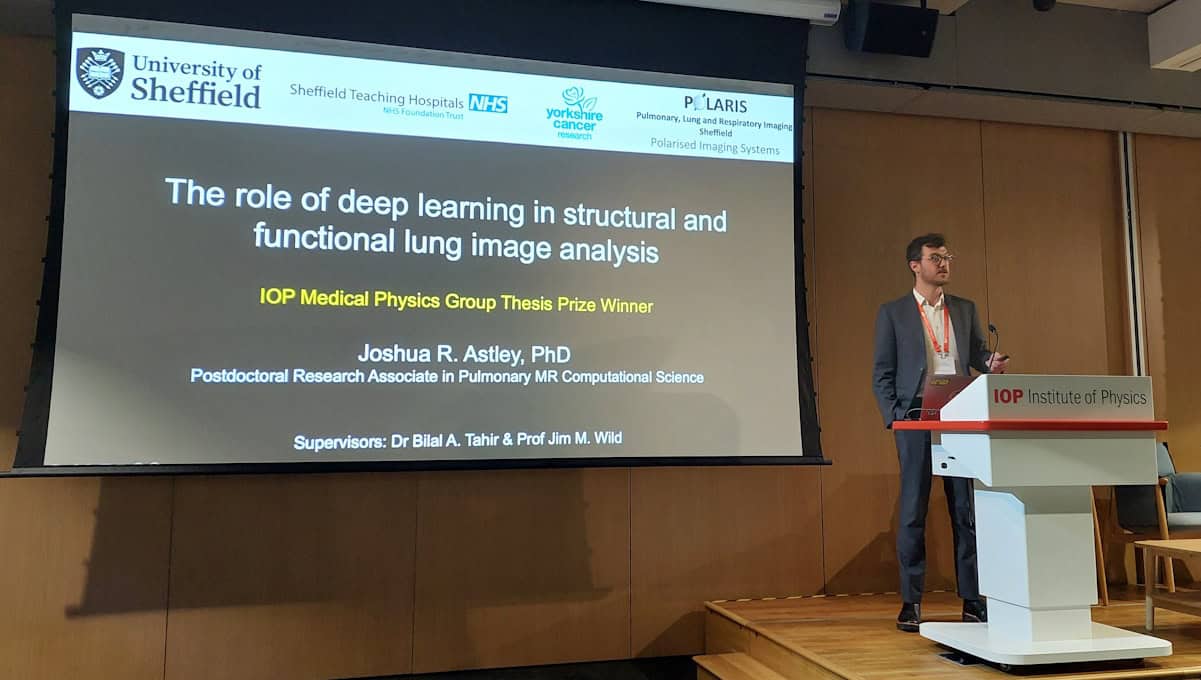

Modelling lung cells to personalize radiotherapy

To Roman Bauer at the University of Surrey, UK, Marco Durante from the GSI Helmholtz Centre for Heavy Ion Research, Germany, and Nicolò Cogno from GSI and Massachusetts General Hospital/Harvard Medical School, US, for creating a computational model that could improve radiotherapy outcomes for patients with lung cancer. Radiotherapy is an effective treatment for lung cancer but can harm healthy tissue. To minimize radiation damage and help personalize treatment, the team combined a model of lung tissue with a Monte Carlo simulator to simulate irradiation of alveoli (the tiny air sacs within the lungs) at microscopic and nanoscopic scales. Based on the radiation dose delivered to each cell and its distribution, the model predicts whether each cell will live or die, and determines the severity of radiation damage hours, days, months or even years after treatment. Importantly, the researchers found that their model delivered results that matched experimental observations from various labs and hospitals, suggesting that it could, in principle, be used within a clinical setting.

A semiconductor and a novel switch made from graphene

To Walter de Heer, Lei Ma and colleagues at Tianjin University and the Georgia Institute of Technology, and independently to Marcelo Lozada-Hidalgo of the University of Manchester and a multinational team of colleagues, for creating a functional semiconductor made from graphene, and for using graphene to make a switch that supports both memory and logic functions, respectively. The Manchester-led team’s achievement was to harness graphene’s ability to conduct both protons and electrons in a device that performs logic operations with a proton current while simultaneously encoding a bit of memory with an electron current. These functions are normally performed by separate circuit elements, which increases data transfer times and power consumption. Conversely, de Heer, Ma and colleagues engineered a form of graphene that does not conduct as easily. Their new “epigraphene” has a bandgap that, like silicon, could allow it to be made into a transistor, but with favourable properties that silicon lacks, such as high thermal conductivity.

Detecting the decay of individual nuclei

To David Moore, Jiaxiang Wang and colleagues at Yale University, US, for detecting individual nuclear decays by embedding radioactive lead-212 atoms in a micron-sized silica sphere and measuring the sphere’s recoil as helium nuclei (alpha particles) escape from it. Their technique relies on the conservation of momentum, and it can gauge forces as small as 10⁻²⁰ N and accelerations as tiny as 10⁻⁷ g, where g is the local acceleration due to the Earth’s gravitational pull. The researchers hope that a similar technique may one day be used to detect neutrinos, which are much less massive than helium nuclei but are likewise emitted as decay products in certain nuclear reactions.
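
As a rough illustration of the momentum-conservation argument, the Python sketch below estimates how fast a silica sphere would recoil when a single helium nucleus (alpha particle) leaves it. The 6 MeV alpha energy, 3 µm sphere diameter and 2200 kg/m³ silica density are illustrative assumptions rather than values taken from the Yale experiment, and the function names are hypothetical.

```python
import math

# Physical constants
ALPHA_MASS_KG = 6.6446573e-27      # mass of a helium-4 nucleus
JOULES_PER_MEV = 1.602176634e-13   # energy conversion factor


def alpha_momentum(kinetic_energy_mev):
    """Non-relativistic momentum p = sqrt(2 m E) of the escaping helium nucleus."""
    energy_j = kinetic_energy_mev * JOULES_PER_MEV
    return math.sqrt(2.0 * ALPHA_MASS_KG * energy_j)


def sphere_mass(diameter_m, density_kg_m3):
    """Mass of a solid sphere from its diameter and density."""
    radius = diameter_m / 2.0
    return density_kg_m3 * (4.0 / 3.0) * math.pi * radius ** 3


def recoil_speed(kinetic_energy_mev, diameter_m, density_kg_m3):
    """Sphere recoil speed from momentum conservation: M v = p_alpha."""
    return alpha_momentum(kinetic_energy_mev) / sphere_mass(diameter_m, density_kg_m3)


if __name__ == "__main__":
    # Assumed, illustrative inputs (not from the article):
    energy_mev = 6.0      # typical alpha-decay energy scale
    diameter_m = 3.0e-6   # "micron-sized" sphere
    density = 2200.0      # approximate density of fused silica, kg/m^3

    v = recoil_speed(energy_mev, diameter_m, density)
    print(f"Estimated recoil speed: {v:.2e} m/s")
```

Under these assumptions the sphere recoils at a few micrometres per second, which gives a sense of why such sensitive force and acceleration measurements are needed to register a single decay.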

Two distinct descriptions of nuclei unified for the first time

To Andrew Denniston at the Massachusetts Institute of Technology in the US, Tomáš Ježo at Germany’s University of Münster and an international team for being the first to unify two distinct descriptions of atomic nuclei. They have combined the particle physics perspective – where nuclei comprise quarks and gluons – with the traditional nuclear physics view that treats nuclei as collections of interacting nucleons (protons and neutrons). The team has provided fresh insights into short-range correlated nucleon pairs – which are fleeting interactions where two nucleons come exceptionally close and engage in strong interactions for mere femtoseconds. The model was tested and refined using experimental data from scattering experiments involving 19 different nuclei with very different masses (from helium-3 to lead-208). The work represents a major step forward in our understanding of nuclear structure and strong interactions.

New titanium:sapphire laser is tiny, low-cost and tuneable

To Jelena Vučković, Joshua Yang, Kasper Van Gasse, Daniil Lukin, and colleagues at Stanford University in the US for developing a compact, integrated titanium:sapphire laser that needs only a simple green laser diode as a pump source. They have reduced the cost and footprint of a titanium:sapphire laser by three orders of magnitude and the power consumption by two. Traditional titanium:sapphire lasers have to be pumped with high-powered lasers – and therefore cost in excess of $100,000. In contrast, the team was able to pump its device using a $37 green laser diode. The researchers also achieved two things that had not been possible before with a titanium:sapphire laser. They were able to adjust the wavelength of the laser light and they were able to create a titanium:sapphire laser amplifier. Their device represents a key step towards the democratization of a laser type that plays important roles in scientific research and industry.

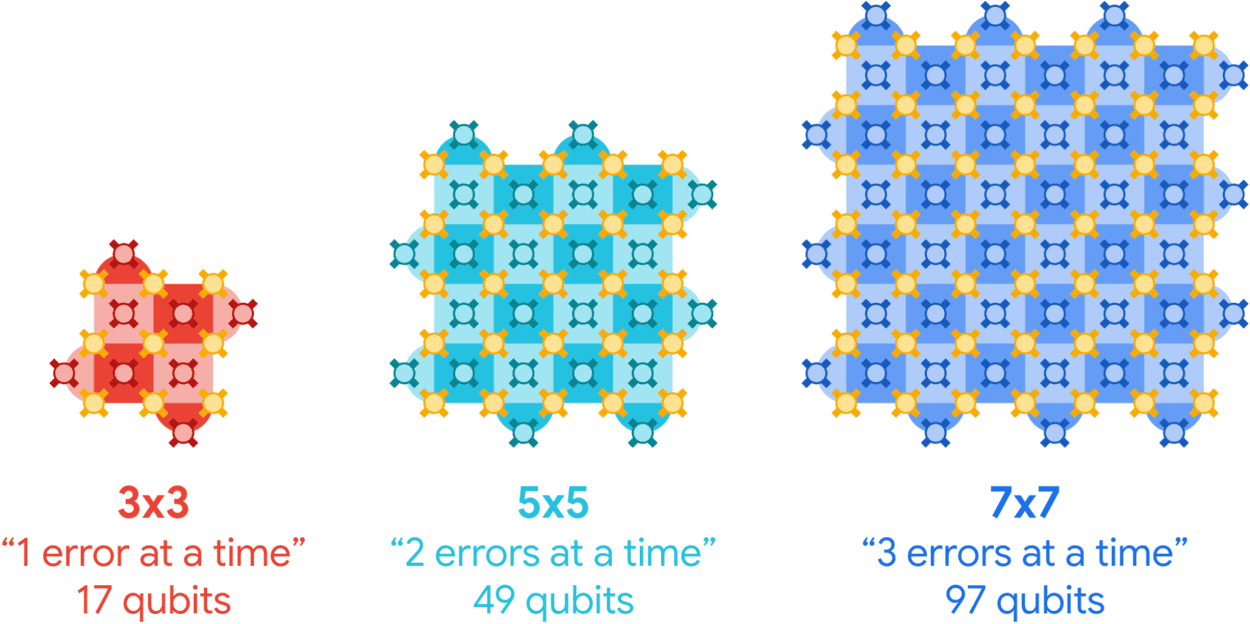

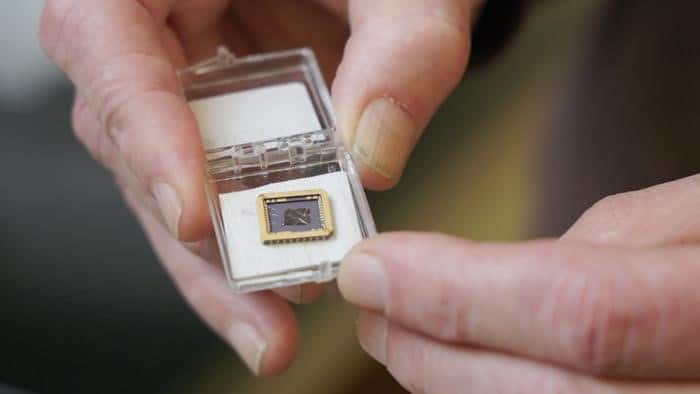

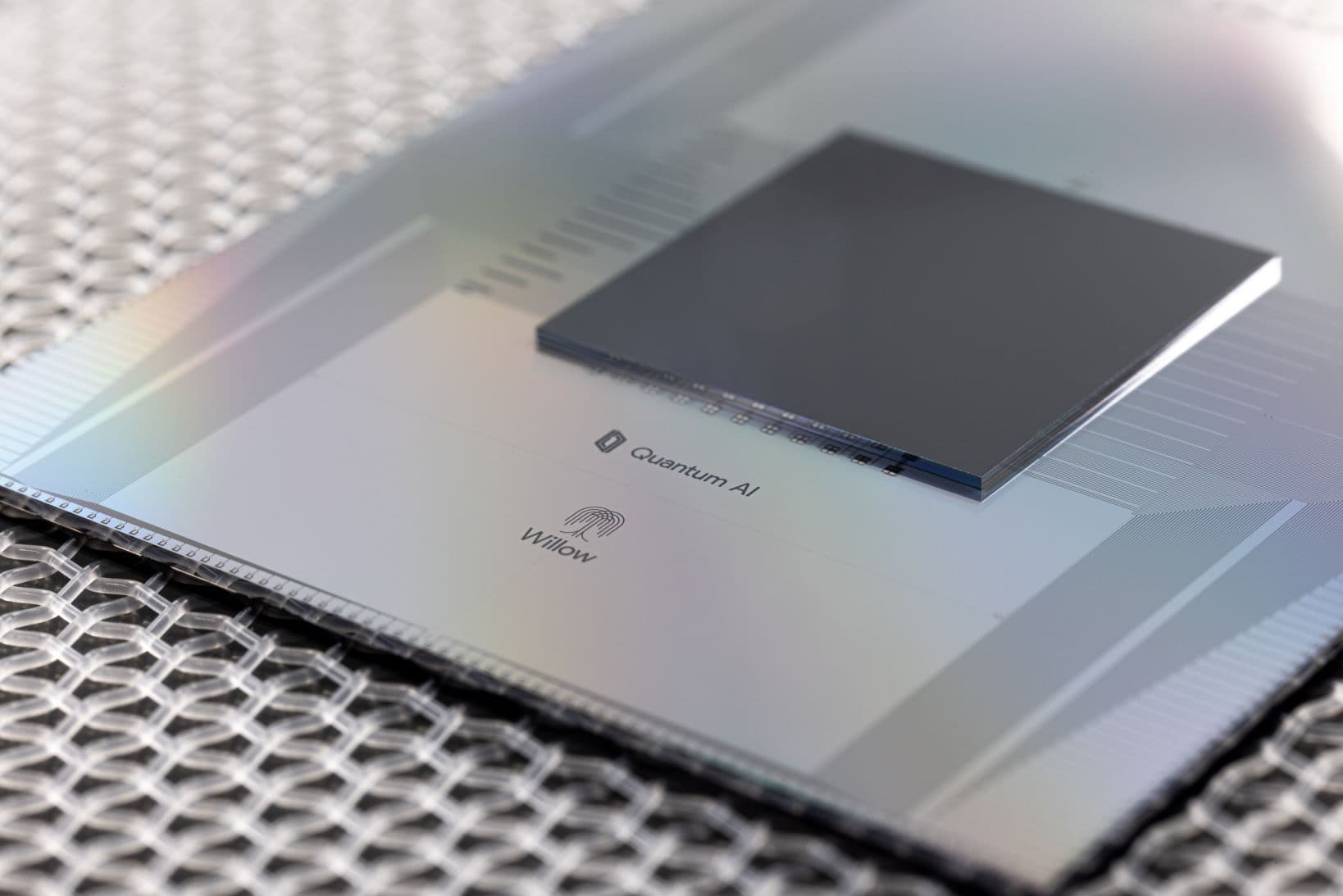

Quantum error correction with 48 logical qubits; and independently, below the surface code threshold

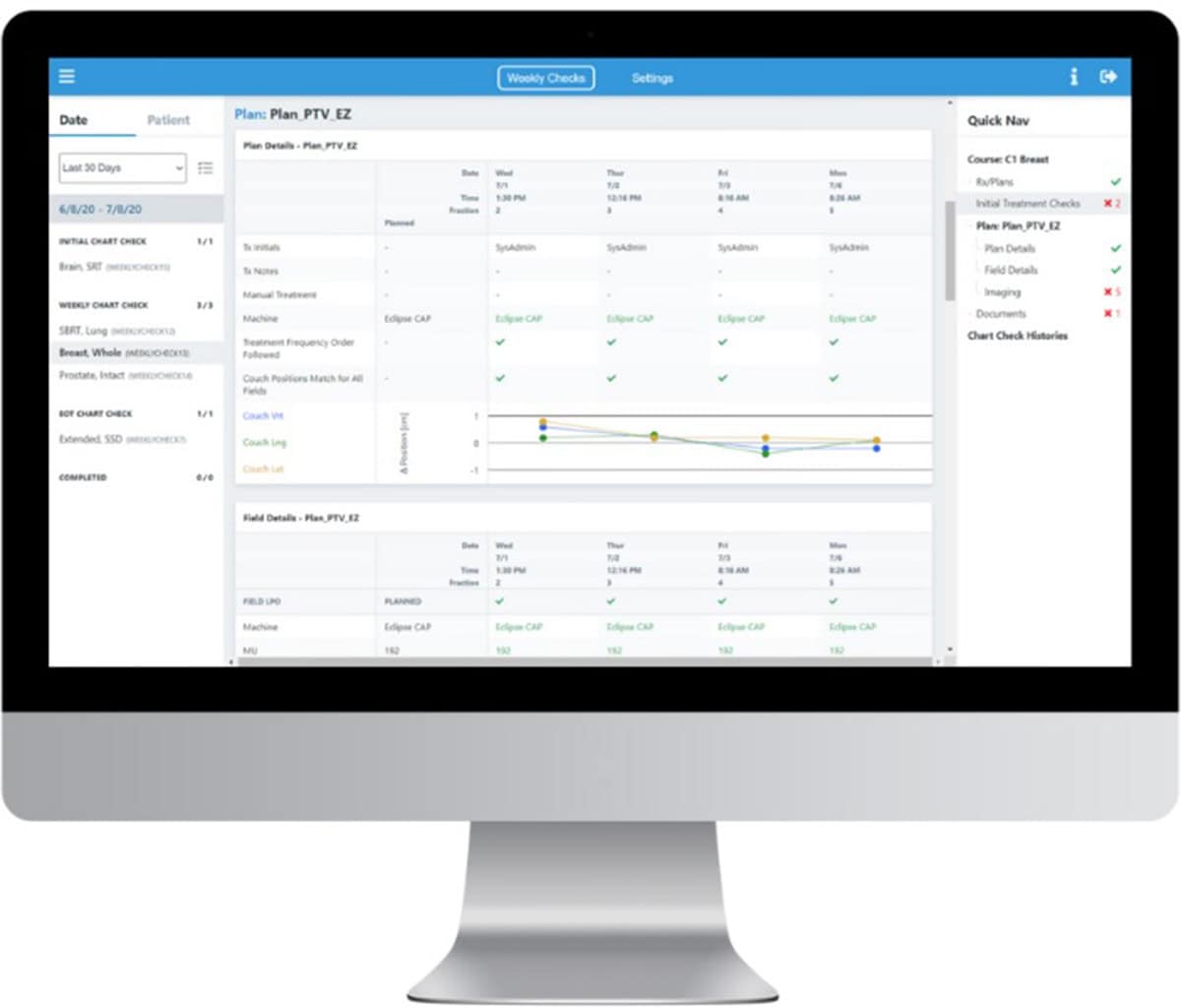

To Mikhail Lukin, Dolev Bluvstein and colleagues at Harvard University, the Massachusetts Institute of Technology and QuEra Computing, and independently to Hartmut Neven and colleagues at Google Quantum AI and their collaborators, for demonstrating quantum error correction on an atomic processor with 48 logical qubits, and for implementing quantum error correction below the surface code threshold in a superconducting chip, respectively. Errors caused by interactions with the environment – noise – are the Achilles heel of every quantum computer, and correcting them has been called a “defining challenge” for the technology. These two teams, working with very different quantum systems, took significant steps towards overcoming this challenge. In doing so, they made it far more likely that quantum computers will become practical problem-solving machines, not just noisy, intermediate-scale tools for scientific research.

Entangled photons conceal and enhance images

To two related teams for their clever use of entangled photons in imaging. Both groups include Chloé Vernière and Hugo Defienne of Sorbonne University in France, who, as a duo, used quantum entanglement to encode an image into a beam of light. The impressive thing is that the image is only visible to an observer using a single-photon sensitive camera – otherwise the image is hidden from view. The technique could be used to create optical systems with reduced sensitivity to scattering. This could be useful for imaging biological tissues and long-range optical communications. In separate work, Vernière and Defienne teamed up with Patrick Cameron at the UK’s University of Glasgow and others to use entangled photons to enhance adaptive optical imaging. The team showed that the technique can be used to produce higher-resolution images than conventional bright-field microscopy. Looking to the future, this adaptive optics technique could play a major role in the development of quantum microscopes.

First samples returned from the Moon’s far side

To the China National Space Administration for the first-ever retrieval of material from the Moon’s far side, confirming China as one of the world’s leading space nations. Landing on the lunar far side – which always faces away from Earth – is difficult due to its distance and terrain of giant craters with few flat surfaces. At the same time, scientists are interested in the unexplored far side and why it looks so different from the near side. The Chang’e-6 mission, which consisted of four parts (an ascender, lander, returner and orbiter), was launched on 3 May. The ascender and lander successfully touched down on 1 June in the Apollo basin, which lies on the north-eastern side of the South Pole-Aitken Basin. The lander used its robotic scoop and drill to obtain about 1.9 kg of materials within 48 h. The ascender then lifted off from the top of the lander and docked with the returner-orbiter before the returner headed back to Earth, landing in Inner Mongolia on 25 June. In November, scientists released the first results from the mission, finding that fragments of basalt – a type of volcanic rock – date back 2.8 billion years, indicating that the lunar far side was volcanically active at that time. Further scientific discoveries can be expected in the coming months and years as scientists analyze more fragments.

Physics World’s coverage of the Breakthrough of the Year is supported by Reports on Progress in Physics, which offers unparalleled visibility for your ground-breaking research.