Superconducting nanowires could be used as both targets and sensors for the direct detection of dark matter, physicists in Israel and the US have shown. Using a prototype nanowire detector, Yonit Hochberg at the Hebrew University of Jerusalem and colleagues demonstrated the possibility of detecting dark-matter particles with masses below about 1 GeV/c², while maintaining very low levels of noise. The team says it has already used its prototype to set “meaningful bounds” on interactions between electrons and dark matter.

While dark matter appears to make up about 85% of the matter in the universe, it has not been detected directly – despite the best efforts of physicists working on numerous detectors worldwide. So far, the search has been dominated by efforts to detect weakly interacting massive particles (WIMPs) – hypothetical dark-matter particles that could be streaming through Earth in very large numbers. WIMP detectors are designed to look for particles with masses greater than 1 GeV/c², and are not expected to be sensitive to lighter particles.

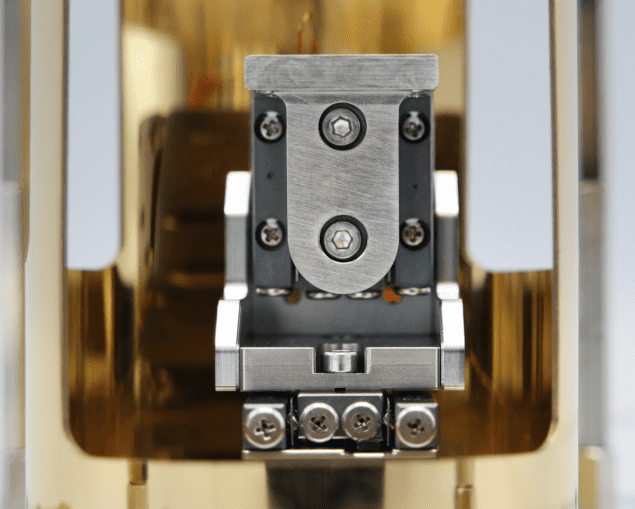

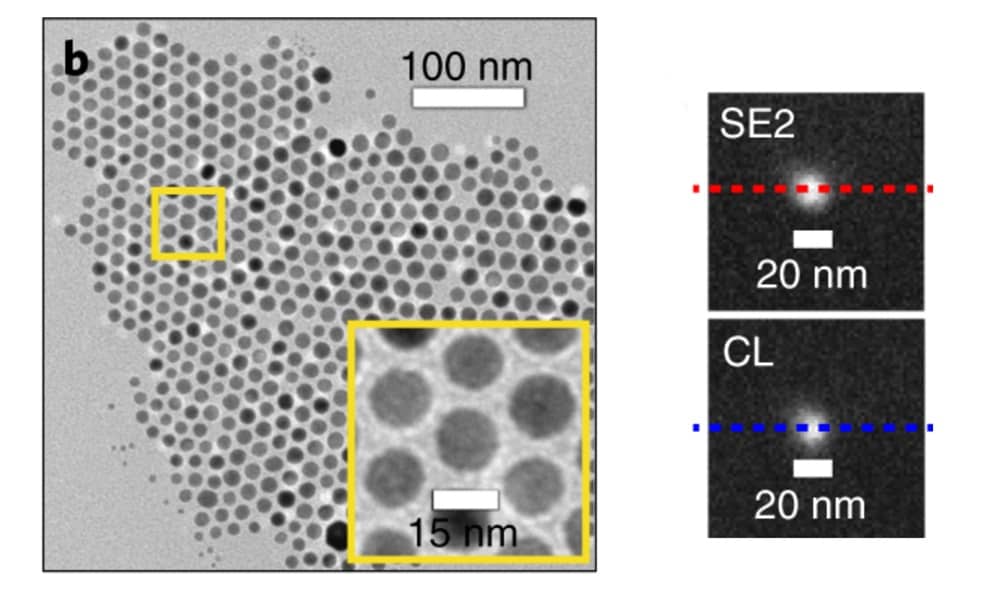

To extend the search to lower masses, physicists have used several different sensor technologies made from materials including graphene, polar crystals, and superfluid helium. Superconducting nanowires are already used to detect single photons, and Hochberg and colleagues at the Massachusetts Institute of Technology and National Institute of Standards and Technology believe that nanowires should join the hunt for dark matter. If a dark-matter particle collides with an electron in a cold, current-carrying superconducting nanowire, the nanowire could heat up and briefly cease to be a superconductor. The resulting spike in the nanowire’s resistance would reveal that a dark-matter interaction has taken place.

Low noise

The physicists tested their proposal by building a tungsten-silicide nanowire prototype, which had a detection energy threshold of 0.8 eV. During 2.8 h of operation, the detector registered no unwanted background counts, which demonstrates a very low level of intrinsic noise.
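To put the zero-count result in context, a standard Poisson argument turns "no events seen" into an upper limit on the background rate. The sketch below is illustrative only and is not taken from the team's analysis; the 90% confidence level and the function names are assumptions chosen for this example.

```python
import math


def poisson_upper_limit_zero_counts(confidence_level):
    """Upper limit on the expected number of events when none are observed.

    With zero observed events, P(0 | mu) = exp(-mu). Setting
    exp(-mu) = 1 - CL gives mu = -ln(1 - CL).
    """
    return -math.log(1.0 - confidence_level)


def rate_upper_limit_hz(exposure_hours, confidence_level=0.90):
    """Upper limit on the count rate (events per second) for a zero-count run."""
    exposure_seconds = exposure_hours * 3600.0
    return poisson_upper_limit_zero_counts(confidence_level) / exposure_seconds


if __name__ == "__main__":
    # 2.8 h is the run length quoted in the article; 90% CL is an assumption.
    limit = rate_upper_limit_hz(2.8, confidence_level=0.90)
    print(f"Background rate upper limit: {limit:.2e} counts per second")
```

Under these assumptions, a 2.8 h run with no counts bounds the background rate at roughly 2.3 × 10⁻⁴ counts per second. A longer exposure with no counts would tighten the limit in proportion.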

The team says the technique has several advantages over other detectors, including ultra-fast detection speeds and very low levels of noise. In addition, the wires could potentially pick up dark-matter particles with kinetic energies below 1 eV, which is extremely low for a dark-matter detector, and could also detect “dark photons” with energies less than 1 eV. Dark photons are hypothetical particles that could mediate interactions between dark-matter particles.
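A rough kinematic estimate shows why such low thresholds matter for light dark matter. The sketch below uses the non-relativistic kinetic energy, E = ½mv², and assumes a typical galactic-halo speed of about one-thousandth of the speed of light. That speed and the function name are assumptions for illustration, not figures from the team's work.

```python
# Assumed typical dark-matter speed in the galactic halo, as a fraction of c.
HALO_SPEED_OVER_C = 1e-3


def kinetic_energy_ev(mass_ev, speed_over_c=HALO_SPEED_OVER_C):
    """Non-relativistic kinetic energy in eV for a particle of rest energy
    mass_ev (that is, m*c^2 expressed in eV) moving at speed_over_c * c."""
    return 0.5 * mass_ev * speed_over_c ** 2


if __name__ == "__main__":
    # Rest energies of 1 keV, 1 MeV and 1 GeV, expressed in eV.
    for label, mass_ev in [("1 keV/c^2", 1e3), ("1 MeV/c^2", 1e6), ("1 GeV/c^2", 1e9)]:
        print(f"{label}: about {kinetic_energy_ev(mass_ev):.3g} eV")
```

With this assumed speed, a 1 GeV/c² particle carries a few hundred electronvolts of kinetic energy, whereas a 1 MeV/c² particle carries only about 0.5 eV, which is below the prototype's 0.8 eV threshold.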

The team says its early experiments have already placed meaningful bounds on the interaction between dark matter and electrons, including the strongest terrestrial bounds on the absorption of sub-electronvolt dark photons.

Hochberg and colleagues now hope to fabricate nanowire detectors on larger scales and with even lower detection thresholds. When coupled with other detection techniques, they believe their nanowires will allow them to probe for dark matter in previously unexplored regions of mass and energy.

The research is described in a preprint on arXiv.