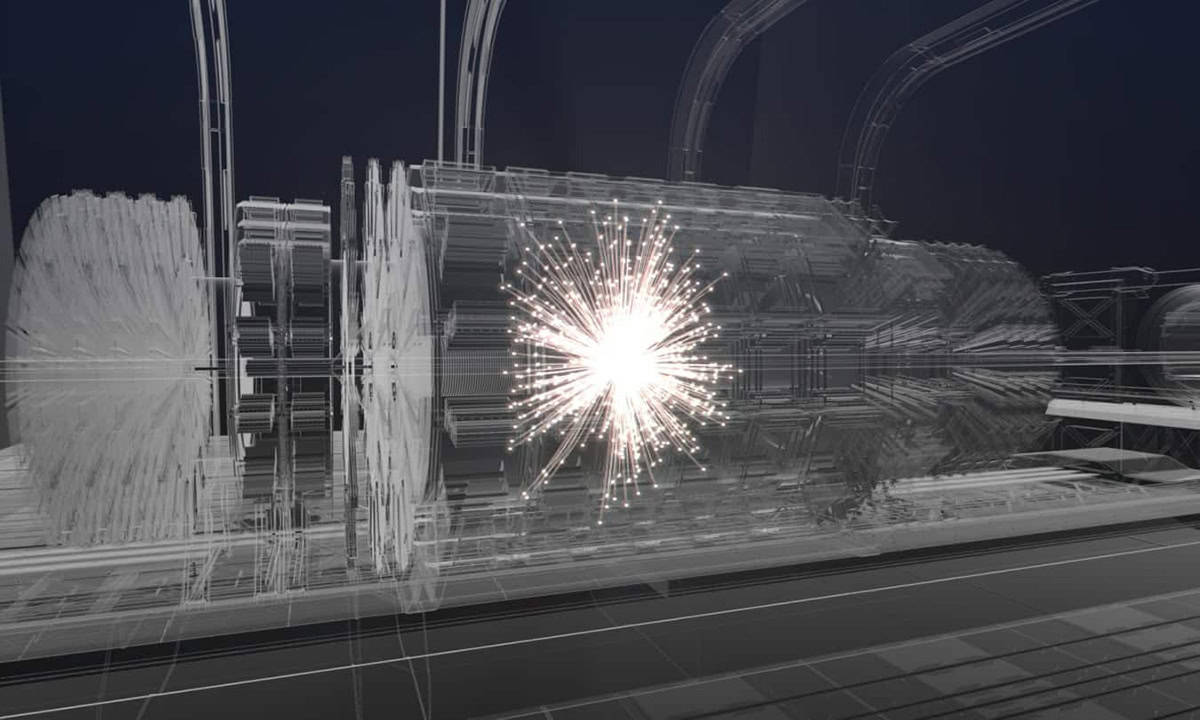

More than a decade after the discovery of the Higgs boson at the CERN particle-physics lab near Geneva in 2012, high-energy physics stands at a crossroads. While the Large Hadron Collider (LHC) is currently undergoing a major £1.1bn upgrade to become the High-Luminosity LHC (HL-LHC), the question facing particle physicists is what machine should be built next – and where – if we are to study the Higgs boson in unprecedented detail in the hope of revealing new physics.

Several designs exist, one of which is a huge 91 km circumference collider at CERN known as the Future Circular Collider (FCC). But new technologies are also offering tantalising alternatives to such large machines, notably a muon collider. As CERN celebrates its 70th anniversary this year, Michael Banks talks to Tulika Bose from the University of Wisconsin–Madison, Philip Burrows from the University of Oxford and Tara Shears from the University of Liverpool about the latest research on the Higgs boson, what the HL-LHC might discover and the range of proposals for the next big particle collider.

What have we learnt about the Higgs boson since it was discovered in 2012?

Tulika Bose (TB): The question we have been working towards in the past decade is whether it is a “Standard Model” Higgs boson or a sister, a cousin or a brother of that Higgs. We’ve been working really hard to pin it down by measuring its properties. All we can say at this point is that it looks like the Higgs that was predicted by the Standard Model. However, there are still so many questions we can’t yet answer. Does it decay into something more exotic? How does it interact with all of the other particles in the Standard Model? While we’ve understood some of these interactions, there are still many more particle interactions with the Higgs that we don’t quite understand. Then, of course, there is the big open question of how the Higgs interacts with itself. Does it, and if so, what is its interaction strength? These are some of the exciting questions that we are currently trying to answer at the LHC.

So the Standard Model of particle physics is alive and well?

TB: The fact that we haven’t seen anything exotic that wasn’t predicted tells us that we need to be looking at a different energy scale. That’s one possibility – we just need to go to much higher energies. The other possibility is that we’ve only been looking in the standard places. Maybe there are particles that we haven’t yet been able to detect because they couple incredibly weakly to the Higgs.

Has it been disappointing that the LHC hasn’t discovered particles beyond the Higgs?

Tara Shears (TS): Not at all. The Higgs alone is such a huge step forward in completing our picture and understanding of the Standard Model, provided, of course, that it is a Standard Model Higgs. And there’s so much more that we’ve learned aside from the Higgs, such as the behaviour of other particles, including the differences between charm quarks and their antimatter counterparts.

How will the HL-LHC take our understanding of the Higgs forward?

TS: One way to understand more about the Higgs is to amass enormous amounts of data to look for very rare processes, and this is where the HL-LHC is really going to come into its own. It is going to allow us to extend those investigations beyond the particles we’ve been able to study so far, making our first observations of how the Higgs interacts with lighter particles such as the muon, and of how the Higgs interacts with itself. We hope to see that with the HL-LHC.

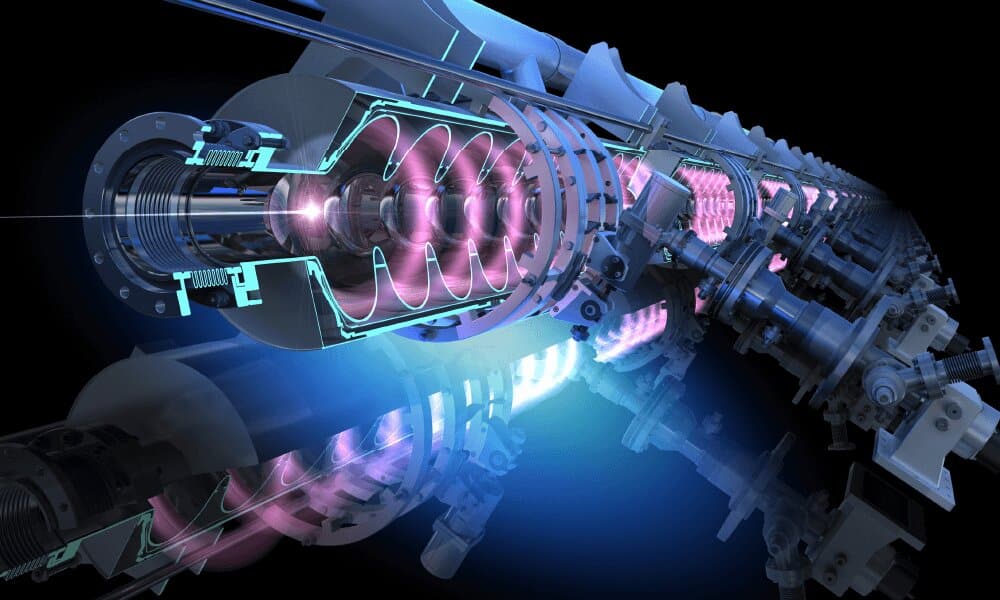

What is involved with the £1.1bn HL-LHC upgrade?

Philip Burrows (PB): The LHC accelerator is 27 km long and about 90% of it is not going to be affected. One of the most critical aspects of the upgrade is to replace the magnets in the final focus systems of the two large experiments, ATLAS and CMS. These magnets will take the incoming beams and then focus them down to very small sizes, of the order of 10 microns in cross section. This upgrade includes the installation of brand-new, state-of-the-art niobium-tin (Nb₃Sn) superconducting focusing magnets.

What is the current status of the project?

PB: The schedule involves shutting down the LHC for roughly three to four years to install the high-luminosity upgrade, which will then turn on towards the end of the decade. The current CERN schedule has the HL-LHC running until the end of 2041. So there are another 10-plus years of running this upgraded collider, and who knows what exciting discoveries are going to be made.

TS: One thing to think about concerning the cost is that the timescale of use is huge and so it is an investment for a considerable part of the future in terms of scientific exploitation. It’s also an investment in terms of potential spin-out technology.

In what way will the HL-LHC be better than the LHC?

PB: The performance of an accelerator is conventionally measured in terms of luminosity, which is defined as the number of particles that cross at these collision points per square centimetre per second. That number is roughly 10³⁴ cm⁻² s⁻¹ with the LHC. With the high-luminosity upgrade, however, we are talking about roughly an order-of-magnitude increase in the total data sample that will be collected over the next decade or so. In other words, we’ve only got 10% or so of the total data sample in the bag so far. After the upgrade, there’ll be ten times more data collected, and that is a completely new ball game in terms of the statistical accuracy of the measurements that can be made and the sensitivity and reach for new physics.
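
To put the luminosity figure in context: the rate of any process is its cross-section multiplied by the luminosity, and the total number of events is the cross-section multiplied by the luminosity integrated over time. The rough worked example below assumes a total Higgs-production cross-section of about 55 pb at LHC energies and the commonly quoted HL-LHC goal of about 3000 fb⁻¹ of integrated luminosity; both are approximate figures introduced here for illustration, not numbers given by the panel (1 pb = 10⁻³⁶ cm² = 10³ fb).

```latex
\begin{aligned}
\dot N_H &= \sigma_H\,\mathcal{L}
  \approx \left(55\times10^{-36}\,\mathrm{cm^{2}}\right)
          \left(10^{34}\,\mathrm{cm^{-2}\,s^{-1}}\right)
  \approx 0.5\ \text{Higgs bosons per second}\\
N_H &= \sigma_H \int \mathcal{L}\,\mathrm{d}t
  \approx \left(5.5\times10^{4}\,\mathrm{fb}\right)
          \left(3000\,\mathrm{fb^{-1}}\right)
  \approx 1.7\times10^{8}\ \text{Higgs bosons}
\end{aligned}
```

Rare channels, such as Higgs decays to muons or Higgs-pair production, are only a tiny fraction of that total, which is why the extra factor of ten in data matters so much.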

Looking beyond the HL-LHC, particle physicists seem to agree that the next particle collider should be a Higgs factory – but what would that involve?

TB: Even at the end of the HL-LHC, there will be certain things we won’t be able to do at the LHC, and that’s for several reasons. One is that the LHC is a proton–proton machine, and when you’re colliding protons, you end up with a rather messy environment in comparison with the clean collisions between electrons and positrons, which allow you to make certain measurements that will not be possible at the LHC.

So what sort of measurements could you do with a Higgs factory?

TS: One is to find out how much the Higgs couples to the electron. There’s no way we will ever find that out with the HL-LHC; it’s just too rare a process to measure, but with a Higgs factory, it becomes a possibility. And this is important not because it’s stamp collecting, but because understanding why the mass of the electron, which the Higgs boson is responsible for, has that particular value is of huge importance to our understanding of the size of atoms, which underpins chemistry and materials science.

PB: Although we often call this future machine a Higgs factory, it has far more uses beyond making Higgs bosons. If you were to run it at higher energies, for example, you could make pairs of top quarks and anti-top quarks. And we desperately want to understand the top quark, given it is the heaviest fundamental particle that we are aware of – it’s roughly 180 times heavier than a proton. You could also run the Higgs factory at lower energies and carry out more precision measurements of the Z and W bosons. So it’s really more than a Higgs factory. Some people say it’s the “Higgs and the electroweak boson factory” but that doesn’t quite roll off the tongue in the same way.

While it seems there’s a consensus on a Higgs factory, there doesn’t appear to be one regarding building a linear or circular machine?

PB: There are two main designs on the table today – circular and linear. The motivation for linear colliders comes from the problem of sending electrons and positrons round in a circle – they radiate photons. So as you go to higher energies in a circular collider, electrons and positrons radiate that energy away in the form of synchrotron radiation. It was felt back in the late 1990s that it was the end of the road for circular electron–positron colliders because of the limitations of synchrotron radiation. But the Higgs boson, discovered at 125 GeV, turned out to be lighter than some had predicted. This meant that an electron–positron collider would only need a centre-of-mass energy of about 250 GeV. Circular electron–positron colliders then came back in vogue.
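
The synchrotron-radiation argument can be made quantitative. For a particle of charge e travelling at close to the speed of light on a bending radius ρ, the energy radiated per turn grows as the fourth power of the Lorentz factor γ = E/mc², and for electrons this reduces to a convenient practical formula. In the sketch below, the 10 km bending radius is an illustrative round number assumed for the example, not a design value quoted by the panel.

```latex
\begin{aligned}
U_0 &= \frac{e^{2}\beta^{3}\gamma^{4}}{3\varepsilon_0\,\rho},
  \qquad \gamma = \frac{E}{mc^{2}}\\
U_0\,[\mathrm{GeV}] &\approx 8.85\times10^{-5}\,
  \frac{(E\,[\mathrm{GeV}])^{4}}{\rho\,[\mathrm{m}]}
  \qquad \text{(electrons)}\\
E = 125\ \mathrm{GeV},\ \rho = 10^{4}\ \mathrm{m}:\quad
U_0 &\approx 8.85\times10^{-5}\times\frac{2.44\times10^{8}}{10^{4}}
  \approx 2.2\ \mathrm{GeV\ per\ turn}
\end{aligned}
```

Doubling the beam energy at fixed radius multiplies this loss by 2⁴ = 16, to roughly 35 GeV per turn at 250 GeV per beam. That steep scaling is why a light Higgs, reachable with about 125 GeV per beam, brought circular electron–positron machines back into contention.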

TS: The drawback with a linear collider is that the beams are not recirculated in the same way as they are in a circular collider. Instead, you have “shots”, so it’s difficult to reach the same volume of data in a linear collider. Yet it turns out that both of these solutions are really competitive with each other and that’s why they are still both on the table.

PB: Yes, while a circular machine may have two, or even four, main detectors in the ring, at a linear machine the beam can be sent to only one detector at a given time. So having two detectors means you have to share the luminosity, so each would get notionally half of the data. But to take an automobile analogy, it’s kind of like arguing about the merits of a Rolls-Royce versus a Bentley. Both linear and circular are absolutely superb, amazing options and some have got bells and whistles over here and others have got bells and whistles over there, but you’re really arguing about the fine details.

CERN seems to have put its weight behind the Future Circular Collider (FCC) – a huge collider with a 91 km circumference that would cost £12bn. What’s the thinking behind that?

TS: The cost is about one-and-a-half times that of the Channel Tunnel, so it is really substantial infrastructure. But bear in mind it is for a facility that’s going to be used for the remainder of the century, for future physics, so you have to keep that longevity in mind when talking about the costs.

TB: I think the circular collider has become popular because it’s seen as a stepping stone towards a proton–proton machine operating at 100 TeV. That machine would use the same infrastructure and the same large tunnel, and would begin operation after the Higgs-factory stage, in the 2070s. It would allow us to really pin down the Higgs interaction with itself, and it would also be the ultimate discovery machine, allowing us to discover particles at the 30–40 TeV scale, for example.

What kind of technologies will be needed for this potential proton machine?

PB: The big issue is the magnets, because you have to build very strong bending magnets to keep the protons going round on their 91 km circumference trajectory. The magnets at the LHC are 8 T but some think the magnets you would need for the proton version of the FCC would be 16–20 T. And that is really pushing the boundaries of magnet technology. Today, nobody really knows how to build such magnets. There’s a huge R&D effort going on around the world and people are constantly making progress. But that is the big technological uncertainty. Yet if we follow the model of an electron–positron collider first, followed by a proton–proton machine, then we will have several decades in which to master the magnet technology.
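
The link between ring size, beam energy and magnet strength is the magnetic-rigidity relation for a singly charged particle. As a back-of-the-envelope check, the sketch below uses the LHC's design beam energy of 7 TeV and bending radius of roughly 2.8 km, and, for the proton version of the FCC, assumes 50 TeV per beam (for 100 TeV collisions) and an illustrative bending radius of about 10 km. That radius is an assumption for this example: it is smaller than 91 km/2π ≈ 14.5 km because a real ring also contains straight sections and focusing magnets that do not bend the beam.

```latex
\begin{aligned}
p\,[\mathrm{GeV}/c] &\approx 0.3\,B\,[\mathrm{T}]\;\rho\,[\mathrm{m}]\\
\text{LHC:}\quad B &\approx \frac{7000}{0.3\times 2800} \approx 8.3\ \mathrm{T}\\
\text{100 TeV ring:}\quad B &\approx \frac{50\,000}{0.3\times 10\,000} \approx 17\ \mathrm{T}
\end{aligned}
```

At fixed tunnel size the required field rises in proportion to beam energy, so a larger tunnel and stronger magnets are two sides of the same trade-off.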

With regard to novel technology, the influential US Particle Physics Project Prioritization Panel, known as “P5”, called for more research into a muon collider, calling it “our muon shot”. What would that involve?

TB: Yes, I sat on the P5 panel that published a report late last year recommending a course of action for US particle physics for the coming 20 years. One of those recommendations involves carrying out more research and development into a muon collider. As we already discussed, an electron–positron collider in a circular configuration suffers from a lot of synchrotron radiation. The question is whether we can instead use an elementary particle that is more massive than the electron. In that case a muon collider could offer the best of both worlds: the clean collisions of an electron machine, but also the higher energies of a proton machine. However, the challenge is that the muon is very unstable and decays quickly. This means you have to create, focus and collide muons before they decay. A lot of R&D is needed in the coming decades, but perhaps a decision on whether to go ahead could be taken by the 2050s.
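
Why the extra mass helps: at a fixed beam energy E and bending radius, the synchrotron energy loss per turn scales as γ⁴ = (E/mc²)⁴, so it falls as the fourth power of the particle's mass. Comparing muons with electrons, using the standard rest energies m_μc² ≈ 105.7 MeV and m_ec² ≈ 0.511 MeV:

```latex
\frac{U_0^{(e)}}{U_0^{(\mu)}} = \left(\frac{m_\mu}{m_e}\right)^{4}
  \approx \left(\frac{105.7}{0.511}\right)^{4}
  \approx (206.8)^{4}
  \approx 1.8\times10^{9}
```

Protons are heavier still, but because they are composite, only part of their energy goes into each hard collision; the muon combines a large mass with being elementary, which is the "best of both worlds" described above.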

And potentially, if built, it would need a tunnel of similar size to the existing LHC?

TB: Yes. The nice thing about the muon collider is that you don’t need a massive 90 km tunnel, so it could actually fit on the existing Fermilab campus. Perhaps we need to think about this project in a global way, because this has to be a big global collaborative effort. But whatever happens, there are exciting times ahead.

- Tulika Bose, Philip Burrows and Tara Shears were speaking on a Physics World Live panel discussion about the future of particle physics held on 26 September 2024. This Q&A is an edited version of the event, which you can watch online now.