A pair of machine-learning algorithms tracks patient motion in real time in an ultrasound-guided radiotherapy system demonstrated by researchers in China and the US. While one neural network extracts features from each frame in an imaging sequence, the other observes patterns over time and predicts where the target will be in subsequent frames. The technique could offer clinicians an accurate and computationally efficient means of monitoring tumour motion without the need for ionizing radiation or implanted markers.

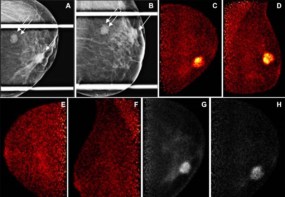

Real-time image guidance allows radiotherapy to be administered with the least possible damage to healthy tissue. It achieves this by tracking the tumour’s motion during therapy and switching the radiation beam off whenever the tumour moves outside the target volume. Image-guided radiotherapy (IGRT) systems typically employ X-ray CT to produce images for planning and patient positioning, but the image acquisition process is too slow to provide information continuously throughout the procedure. The same instrument can capture 2D fluoroscopic images with high enough temporal resolution, but these lack soft-tissue contrast and therefore rely on the presence of prominent fiducial markers implanted in the tumour.

An inexpensive alternative to X-ray-based IGRT, ultrasound imaging requires no fiducial markers to be implanted and delivers no ionizing radiation to the patient. No implementation tried so far, however, has managed to simultaneously achieve adequate tracking accuracy and computational speed.

Now, a collaboration led by Lei Xing of Stanford University School of Medicine and Dengwang Li of Shandong Normal University has shown that a machine-learning approach can achieve a level of tracking accuracy well above the threshold of clinical acceptability, while keeping up easily with the 30 frames per second acquired by the ultrasound imaging device (Med. Phys. 10.1002/mp.13510).

Key to the result was the use of two complementary network architectures, each of which focuses on a different aspect of the motion-tracking problem. “The first part is mainly for image-feature learning,” says first author Pu Huang of Shandong Normal University. “It transfers the high-dimensional image to a low-dimensional but high-level feature representation, which is resistant to noise.”

Fed this spatial information, the system’s second component handles the temporal dimension. “This part is mainly for historical memory learning,” explains Huang. “It processes the high-level feature representation in an efficient way and yields the predicted target location.”
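The division of labour Huang describes, with one stage reducing each frame to a compact feature and a second stage using recent history to predict the next position, can be illustrated with a small sketch. This is not the researchers' implementation: both stages here are deliberately simple stand-ins (an intensity-weighted centroid in place of the feature-learning network, and constant-velocity extrapolation in place of the memory-learning network), and every name in it is hypothetical.

```python
from collections import deque
from typing import Deque, List, Tuple

Frame = List[List[float]]      # 2D grid of pixel intensities
Feature = Tuple[float, float]  # (row, col) position in pixels


def extract_feature(frame: Frame) -> Feature:
    """Stage 1 stand-in: reduce a whole frame to its intensity-weighted centroid.

    ASSUMPTION: the real system uses a trained neural network here.
    """
    total = row_sum = col_sum = 0.0
    for r, row in enumerate(frame):
        for c, value in enumerate(row):
            total += value
            row_sum += r * value
            col_sum += c * value
    if total == 0:
        raise ValueError("frame contains no signal")
    return (row_sum / total, col_sum / total)


class TemporalPredictor:
    """Stage 2 stand-in: constant-velocity extrapolation over recent features.

    ASSUMPTION: the real system learns temporal patterns with a second network.
    """

    def __init__(self, history: int = 5) -> None:
        # Keep only the most recent `history` features.
        self.features: Deque[Feature] = deque(maxlen=history)

    def update(self, feature: Feature) -> Feature:
        """Store the newest feature and predict the position in the next frame."""
        self.features.append(feature)
        if len(self.features) < 2:
            return feature  # no motion history yet
        steps = len(self.features) - 1
        first, last = self.features[0], self.features[-1]
        velocity = ((last[0] - first[0]) / steps, (last[1] - first[1]) / steps)
        return (last[0] + velocity[0], last[1] + velocity[1])


def make_frame(size: int, row: int, col: int) -> Frame:
    """Build a synthetic frame with a single bright pixel (illustration only)."""
    frame = [[0.0] * size for _ in range(size)]
    frame[row][col] = 1.0
    return frame


# A bright spot drifting one pixel to the right per frame.
frames = [make_frame(8, 2, c) for c in (1, 2, 3)]
predictor = TemporalPredictor()
predictions = [predictor.update(extract_feature(f)) for f in frames]
```

The point of the split is that the second stage never sees raw pixels, only the low-dimensional output of the first, which is what keeps the temporal step cheap.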

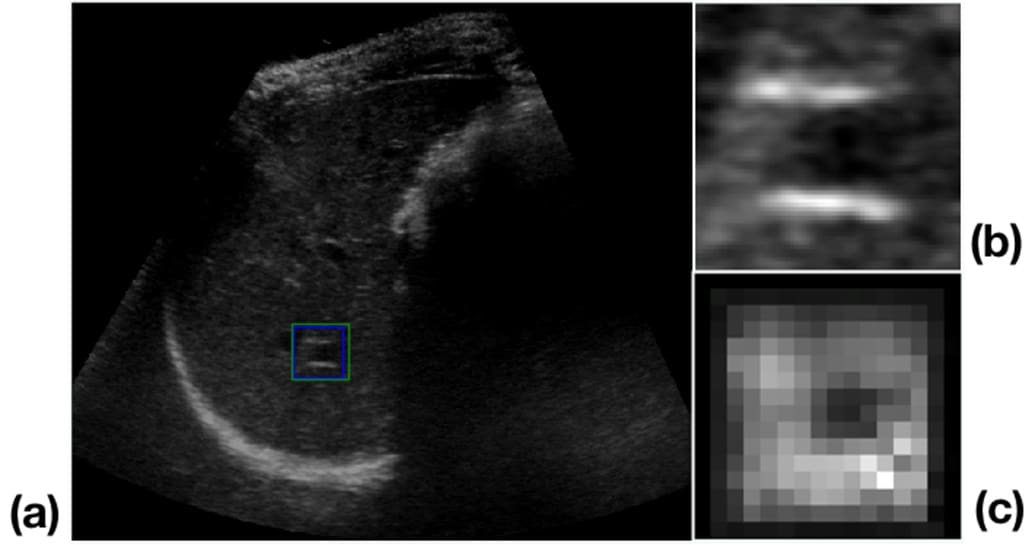

The researchers applied these algorithms to a dataset of 64 liver ultrasound sequences in which the features — which moved and deformed throughout each sequence — had been identified by experienced ultrasound physicians. Twenty-five of the sequences made up a training set while the remaining 39 were used to test the tracker’s performance. The feature locations as determined by the ultrasound physicians were taken to represent the “ground truth” against which the algorithms were judged.

Compared to the assumed perfect performance of the experts, the automatic tracker achieved an average error of less than 1 mm and never exceeded the clinical accuracy threshold of 2 mm. Running on an unexceptional computer with a dedicated graphics processing unit (not unusual nowadays), the algorithms achieved this at a rate of more than 66 frames per second, more than twice the 30 frames per second acquired by the ultrasound imager.
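For readers who want to see how such figures are typically arrived at, the following sketch computes a mean and maximum tracking error against annotated positions and compares the per-frame processing time with the imager's frame period. The 2 mm threshold and the 30 and 66 frames-per-second rates come from the article; the coordinates are made-up illustration data, and the use of straight-line (Euclidean) distance as the error measure is an assumption rather than something the study is known to specify.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) position in millimetres

CLINICAL_THRESHOLD_MM = 2.0  # accuracy threshold quoted in the article
IMAGER_FPS = 30.0            # ultrasound acquisition rate quoted in the article
TRACKER_FPS = 66.0           # lower bound on tracker throughput quoted in the article


def tracking_errors(predicted: Sequence[Point], truth: Sequence[Point]) -> List[float]:
    """Per-frame Euclidean distance between tracked and annotated positions."""
    if len(predicted) != len(truth):
        raise ValueError("sequences must have the same number of frames")
    return [math.dist(p, t) for p, t in zip(predicted, truth)]


def summarise(errors: Sequence[float]) -> Tuple[float, float]:
    """Return (mean error, maximum error) in millimetres."""
    return (sum(errors) / len(errors), max(errors))


# Hypothetical positions, NOT data from the study.
truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
predicted = [(0.3, 0.4), (1.0, 0.5), (2.6, 0.8)]

errors = tracking_errors(predicted, truth)
mean_error, max_error = summarise(errors)
within_threshold = max_error <= CLINICAL_THRESHOLD_MM

# Time available per frame versus time the tracker needs per frame.
frame_period_ms = 1000.0 / IMAGER_FPS    # about 33 ms between ultrasound frames
processing_ms = 1000.0 / TRACKER_FPS     # about 15 ms per frame at 66 fps
keeps_up = processing_ms < frame_period_ms
```

Checking the maximum as well as the mean matters here: a tracker could average under 1 mm and still stray past 2 mm on individual frames.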

As the technique relies on hardware that is commonplace, there are no great barriers to its clinical adoption. Huang and colleagues envisage it being used with either beam gating (where the beam is switched on and off in response to tumour motion) or a multileaf collimator, in which the beam is shaped and steered by movable leaves. First, though, the researchers intend to test the procedure with realistic streaming data, as they have only tested it offline so far.
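In its simplest form, beam gating driven by a tracker amounts to a per-frame decision: leave the beam on while the tracked target sits inside a permitted window, and switch it off otherwise. The sketch below shows that logic under stated assumptions. The circular window, its 3 mm radius, the function names and the sample positions are all hypothetical; a clinical system would also have to account for latency and safety interlocks, which are not modelled here.

```python
from typing import Iterable, List, Tuple

Point = Tuple[float, float]  # (x, y) position in millimetres


def beam_enabled(target: Point, window_centre: Point, window_radius_mm: float) -> bool:
    """Return True if the tracked target lies inside the circular gating window."""
    dx = target[0] - window_centre[0]
    dy = target[1] - window_centre[1]
    # Compare squared distances to avoid an unnecessary square root.
    return dx * dx + dy * dy <= window_radius_mm * window_radius_mm


def gate_sequence(
    targets: Iterable[Point], window_centre: Point, window_radius_mm: float
) -> List[bool]:
    """Apply the gating decision to every tracked position in a sequence."""
    return [beam_enabled(t, window_centre, window_radius_mm) for t in targets]


# Hypothetical tracked positions, one per ultrasound frame.
tracked = [(0.0, 0.0), (2.0, 2.0), (3.0, 3.0), (1.0, 0.0)]
beam_states = gate_sequence(tracked, window_centre=(0.0, 0.0), window_radius_mm=3.0)
```

With these sample values the beam would be switched off only for the third frame, where the target has drifted outside the window.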