Accurate estimates of CT dose, tailored for individual patients, can now be made within clinically acceptable computation times, report researchers at Duke University. Shobhit Sharma and colleagues used an automatic image-segmentation method to create custom anatomical models from patients’ CT data. Combined with a Monte Carlo simulation of photon transport, and implemented on multiple parallel processors, the team’s technique calculates the radiation burden imposed by the scan in less than half a minute – hundreds of times faster than non-parallelized Monte Carlo methods. Having access to patients’ personalized dose histories will help clinicians make cost–benefit decisions about any subsequent procedures that would deliver an additional dose (Phys. Med. Biol. 10.1088/1361-6560/ab467f).

When estimating the radiation dose that a CT scan delivers to a patient’s organs, clinicians have to balance accuracy against practicality. The most reliable estimates are derived from Monte Carlo simulations that model the individual patient’s anatomy and the geometry and other properties of the scanner – but such simulations can take hours to compute, putting them beyond the reach of time-constrained clinics.

Quicker results can be achieved by using dedicated graphics processing units (GPUs) to perform the simulation, but, says Sharma, “existing tools that use GPU computation to speed up the process lack either patient or scanner specificity, which limits their relevance towards providing dose estimates for a particular patient”.

Off-the-shelf computer models of human anatomy – which come in a range of sizes and body types – can be used in place of a patient-specific model, but no generic model quite matches a patient’s unique anatomy, so these can only ever yield an approximation of the delivered dose.

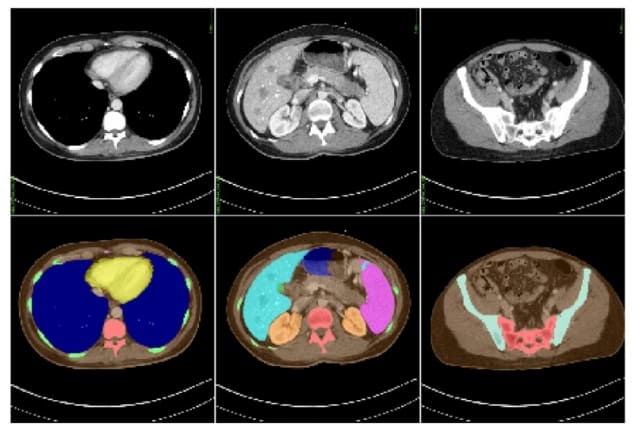

The solution that Sharma and his colleagues explore in their proof-of-principle experiment – based on actual CT datasets from 50 adult subjects – is to follow up the scan with an automatic procedure that maps out the patient’s internal organs. This segmentation process is performed in two steps: first, an artificial neural network produces an initial model from the patient’s CT dataset; then, gaps in the model are filled in by comparing it to a library of pre-existing computational phantoms.

Equipped with a detailed 3D map of their subject, the researchers then run a Monte Carlo radiation-transport simulation to work out where in the patient the X-ray photons’ energy was deposited during the CT scan. As well as accounting for the anatomy of the patient, this simulation can also handle any peculiarities of the CT setup. “Our active collaborations with major CT manufacturers enable us to accurately model proprietary components, which makes the dose estimates from our tool highly scanner-specific,” says Sharma.
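To illustrate how a segmented anatomical model and a map of deposited energy combine into organ doses, here is a minimal sketch in Python. It is not the researchers' code: the function name `organ_doses`, the array layout, the convention that label 0 means "unlabeled", and all the numbers are assumptions made for illustration. The only physics it relies on is the definition of absorbed dose as energy deposited per unit mass (1 Gy = 1 J/kg).

```python
import numpy as np

def organ_doses(energy_j, density_kg_m3, labels, voxel_volume_m3):
    """Mean absorbed dose in gray (J/kg) for each organ label.

    energy_j      : energy deposited in each voxel, in joules
    density_kg_m3 : mass density of each voxel
    labels        : integer organ label per voxel (0 = unlabeled, an assumed convention)
    """
    doses = {}
    for organ_id in np.unique(labels):
        if organ_id == 0:
            continue  # skip voxels that belong to no organ
        mask = labels == organ_id
        mass_kg = density_kg_m3[mask].sum() * voxel_volume_m3
        doses[int(organ_id)] = float(energy_j[mask].sum() / mass_kg)
    return doses

# Toy 2 x 2 x 1 volume with made-up values (not data from the study)
labels = np.array([[[1], [1]], [[2], [0]]])
energy = np.array([[[1e-6], [3e-6]], [[2e-6], [5e-6]]])  # joules
density = np.full(labels.shape, 1000.0)                  # water-like, kg/m^3
voxel_volume = 1e-6                                      # 1 cm^3 in m^3

doses = organ_doses(energy, density, labels, voxel_volume)
for organ_id, dose_gy in doses.items():
    print(f"organ {organ_id}: {dose_gy * 1e3:.2f} mGy")
```

In this toy case the energy that lands in the unlabeled voxel is simply ignored, which is one reason why gaps in the segmentation matter for the final dose estimate.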

Using a code that is optimized for parallel computation by multiple GPUs, the team found that the simulation took an average of 24 s to run for each patient, meaning the procedure can be implemented without disrupting existing clinical workflows.

Having demonstrated the speed of their method, Sharma and colleagues tested its accuracy by comparing it to dose measurements in physical phantoms – the gold standard for validating simulations. The team placed thermoluminescent dosimeters (TLDs) at various positions within two anthropomorphic phantoms, one approximating a five-year-old child, the other an adult male. As they would with a human patient, the researchers acquired CT images of the phantoms, then segmented them (manually this time) to build bespoke computational models. Overall, the calculated doses agreed with those measured by the TLDs to within 10%, which the researchers consider a good match.

One limitation of the technique in its current form is in dealing with secondary electrons produced when X-rays are absorbed in the body. The Monte Carlo code that the researchers use, MC-GPU, assumes that photons deliver their energy at the point of interaction. In reality, secondary electrons carry the energy a short distance before depositing it by exciting and ionizing atoms in the medium. While not a problem at the scale demonstrated so far, a full treatment of secondary electrons is crucial for calculating dose on microscopic scales.

“Since our code doesn’t include any models for sampling electrons generated from photon interactions, our method in its current form is not the best candidate for calculating dose where the range of electrons is greater than the voxel size used for the anatomical model,” explains Sharma. “Having said that, incorporating additional models for electron generation and transport in MC-GPU is feasible and can be accomplished if the need arises.”
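The local-deposition assumption described above can be illustrated with a deliberately simplified Monte Carlo sketch in Python. This is a toy model, not MC-GPU: it is one-dimensional, it treats every interaction as full absorption of the photon (no scattering), and the function name `simulate_slab` and all parameter values are hypothetical. Each photon's depth of first interaction is drawn from an exponential distribution, and its entire energy is tallied in the voxel containing that point, with no secondary electrons carrying energy into neighbouring voxels.

```python
import numpy as np

def simulate_slab(n_photons, mu_per_cm, voxel_cm, n_voxels, energy_kev, seed=0):
    """Tally energy (keV) per voxel in a 1D uniform slab.

    Assumptions (illustrative only): photons enter at depth 0 travelling
    along one axis, every interaction absorbs the whole photon, and the
    energy is deposited exactly where the interaction occurs.
    """
    rng = np.random.default_rng(seed)
    # Free path to first interaction: exponential with mean 1/mu
    depths = rng.exponential(1.0 / mu_per_cm, size=n_photons)
    idx = np.floor(depths / voxel_cm).astype(int)
    inside = idx < n_voxels  # photons beyond the slab escape without interacting
    counts = np.bincount(idx[inside], minlength=n_voxels)
    return counts * float(energy_kev)

n_photons, mu, voxel_cm, n_voxels, e_kev = 100_000, 0.2, 0.5, 20, 60.0
tally = simulate_slab(n_photons, mu, voxel_cm, n_voxels, e_kev)

absorbed_fraction = tally.sum() / (n_photons * e_kev)
expected_fraction = 1.0 - np.exp(-mu * voxel_cm * n_voxels)  # Beer-Lambert law
print(f"absorbed: {absorbed_fraction:.3f}, expected: {expected_fraction:.3f}")
```

A code that tracked secondary electrons would instead spread each photon's energy over the electron's path. That only changes the tally when the electron range is comparable to or larger than the voxel size, which is the regime Sharma flags as outside the method's current scope.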