Physicists working on the IceCube Neutrino Observatory in Antarctica say they have made the first observation of the Glashow resonance – a process first predicted more than 60 years ago. If confirmed, the observation would lend further support to the Standard Model of particle physics and help astrophysicists understand how astrophysical neutrinos are produced.

In 1959 the theoretical physicist and future Nobel laureate Sheldon Glashow worked out that an electron and an antineutrino could interact via the weak interaction to produce a W boson. Subsequent calculations indicated that this coupling – known as the Glashow resonance – should occur at antineutrino energies of around 6.3 PeV (6.3 × 10¹⁵ eV). This is well beyond the energies achievable in current or planned particle accelerators, but natural astrophysical phenomena are expected to produce such neutrinos, which could then create a W boson by colliding with an electron here on Earth.
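The resonance energy can be estimated from kinematics alone. For an antineutrino striking an electron at rest, the squared centre-of-mass energy is approximately twice the electron mass times the antineutrino energy, and the resonance sits where this equals the square of the W boson mass. The short sketch below works through that arithmetic; the rounded particle masses and the function name are assumptions added for illustration and do not come from the IceCube analysis.

```python
# Rough estimate of the Glashow resonance energy.
# Assumed, rounded particle masses (not taken from the article):
M_W_GEV = 80.38      # W boson mass in GeV
M_E_GEV = 0.000511   # electron mass in GeV


def glashow_resonance_energy_gev(m_w=M_W_GEV, m_e=M_E_GEV):
    """Antineutrino energy at which s = 2 * m_e * E equals m_w**2.

    Assumes the electron is at rest and neglects the m_e**2 term in s.
    """
    return m_w ** 2 / (2.0 * m_e)


energy_pev = glashow_resonance_energy_gev() / 1e6  # 1 PeV = 1e6 GeV
print(f"Resonance energy: {energy_pev:.1f} PeV")
```

The enormous energy follows from the tiny electron mass in the denominator: a light, stationary target needs a very energetic projectile to reach the W boson mass in the centre-of-mass frame.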

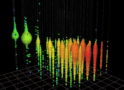

IceCube is well placed to detect such an event because it comprises 86 strings of detectors suspended in holes bored into the Antarctic ice cap. When a neutrino occasionally interacts with the ice, tiny flashes of light are seen by the detectors. However, most interactions are equally probable for neutrinos and antineutrinos, and many neutrino detectors cannot tell which triggered the interaction.

Conservation laws

The need to conserve electric charge and lepton number demands that the Glashow resonance be different. “At 6.3 PeV, this peak is only possible if you have an antineutrino interacting with an electron or, in an alternative symmetric world, a neutrino interacting with an antielectron,” explains Lu Lu of the University of Wisconsin-Madison, a senior member of the IceCube team. As a result, measuring the proportion of Glashow resonance events relative to the total number of neutrinos detected from an astronomical event could constrain the ratio of neutrinos to antineutrinos. This in turn could suggest how the neutrinos were produced.

Although astrophysical events are expected to produce neutrinos with very high energies, the number of neutrinos drops off following a power law in energy. Catching neutrinos requires a large detector in any case, so catching very high energy neutrinos requires an immense one. IceCube therefore uses a cubic kilometre of ice at the South Pole as its detection medium.
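To get a feel for how quickly a power law thins out the flux, the sketch below compares the number of neutrinos above two energy thresholds for a spectrum of the form dN/dE ∝ E^(-γ). The spectral index of 2.5 and the 0.1 PeV reference energy are illustrative assumptions only; neither figure comes from the article or from the IceCube measurement.

```python
def fraction_above(energy_pev, reference_pev, spectral_index):
    """Fraction of neutrinos above reference_pev that also exceed energy_pev.

    Assumes a pure power law dN/dE proportional to E**(-spectral_index)
    with spectral_index > 1, so the integral flux above E scales as
    E**(1 - spectral_index).
    """
    if spectral_index <= 1.0:
        raise ValueError("spectral_index must be greater than 1")
    return (energy_pev / reference_pev) ** (1.0 - spectral_index)


# Illustrative (assumed) values: index 2.5, thresholds of 0.1 PeV and 6.3 PeV.
fraction = fraction_above(6.3, 0.1, 2.5)
print(f"Fraction above 6.3 PeV: {fraction:.4f}")
```

Under these assumed numbers, only about one neutrino in 500 above 0.1 PeV would also exceed 6.3 PeV, which is why a detector hoping to catch such events needs so large a volume.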

To search for Glashow resonance collisions in IceCube, the team analysed data taken by the detector between May 2012 and May 2017 using a machine learning algorithm. One event that occurred on 8 December 2016 stood out. The researchers estimate the detectable energy from the event to be 6.05 PeV, which – when losses through undetectable channels are factored in – is consistent with an antineutrino energy of about 6.3 PeV.

Highest energy deposition

The signature from the “early pulses”, caused by particles that outrun the light waves in the ice, helped the researchers rule out other possible explanations, such as a cosmic ray muon, and conclude that the event was indeed caused by an astrophysical neutrino. “This is definitely the highest energy deposition event IceCube has ever recorded from a neutrino,” says Lu. Based on the extremely low background levels expected at this energy, the researchers concluded it was at least 99% likely to be a Glashow event.

The researchers are now planning an even bigger detector, called IceCube-Gen2. As well as detecting neutrinos predicted to have even higher energies, the researchers hope this would allow them to detect a statistically significant number of Glashow events, confirming the findings and allowing the phenomenon to be used in astronomy.

Lu is particularly excited by the potential to understand how particles are accelerated by astrophysical processes. “For cosmic rays, it’s too difficult because they deflect everywhere,” she says. “High-energy photons interact with the cosmic microwave background; if you don’t have gravitational waves, the only other messenger is the neutrino, and the neutrino-to-antineutrino ratio brings a completely new axis to this game.”

Neutrino physicist David Wark, the UK principal investigator of the Super-Kamiokande detector, is impressed. “People have been trying for 50 years to detect these high-energy astrophysical neutrinos, and so it is astounding that IceCube has finally done it. Just a few years ago they saw the first gold-plated astrophysical neutrinos and now one at a time they’re knocking off all the things we expect to see at these very high energies.” Uncertainties arising partly from the impossibility of calibrating collision energy without extrapolation make him want to see at least one more event to be sure, but he says that the odds of one single detection appearing to be exactly where theory predicts the Glashow resonance are “not large”.

The research is described in Nature.