An artificial material with the same elastic, adhesive, self-cleaning, sensing and tensile properties as natural spider silk has been created by researchers in South Korea. The synthetic web, which is made from a semi-solid stretchy gel, works using electrostatics and might have applications in artificial muscles, grippers and self-cleaning wall-climbing devices.

Weight for weight, spider silk is often said to be about five times stronger than steel, and its stretchable threads boast an adhesive coating that enables spiders to capture and trap prey in their webs. This adhesive coating has a downside, however: it also attracts contaminants from the environment, which degrade the webs’ capturing efficiency.

To overcome this problem, spiders have evolved several strategies for reducing and eliminating dirt and debris from their webs. One such strategy is to build a minimalist web structure and then wait for prey to fly or crawl into it. As its prey struggles with the sticky threads, the spider senses the web vibrating, springs into action and wraps its as-yet weakly bound prey with additional threads. This traps the prey once and for all, without the need for a high-surface-area web to which contaminants could more easily adhere. Another strategy is for spiders to pull on their webs and then rapidly release them, so that contaminants bounce off in a catapult-like fashion as the webs vibrate.

Mimicking spider actuation and sensing

Scientists have long been fascinated by spider biology, and many have attempted to mimic either the spiders’ actuation and sensing or the structural, self-cleaning properties of their silk. Reproducing the behaviour of spider webs in the laboratory is no simple task, however, and previous attempts to do so have mainly focused on recreating the way in which a spider spins its natural web.

A team led by Jeong-Yun Sun and Ho-Young Kim of Seoul National University has now taken a different approach. Their method involves applying static electricity to fibre-like strands of an ionically conducting and stretchable organogel, which is a semi-solid material made of gelling molecules in an organic solvent (in this case, covalently cross-linked polyacrylamide chains in ethylene glycol with dissolved lithium chloride). The strands of this organogel are then encapsulated with silicone rubber and coated with a hydrophobic perfluorinated compound to reduce their surface energy (and thus surface tension).

The researchers, who report their work in Science Robotics, wove these composite fibres into structures that resemble natural spider webs. They found they could make the web strands electro-adhere to target objects made from metals, ceramics and polymers, thanks to the static electric field that arises between adjacent web strands when a high voltage is applied.
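For a feel of why a high voltage is needed, the electrostatic attraction behind electro-adhesion can be roughly estimated with the textbook parallel-plate idealisation: the pressure pulling two charged surfaces together is P = ε₀εᵣV²/(2d²), where V is the applied voltage, d the gap and εᵣ the relative permittivity of the material in between. This is a simplified sketch, not the model used by the Seoul team, and it ignores fringing fields and the real fibre geometry. The function names and every numerical input (1 kV, a 100 µm silicone-like layer with εᵣ ≈ 3, a 1 cm² contact patch) are hypothetical choices made only to show the scaling.

```python
# Minimal sketch: idealised parallel-plate estimate of electro-adhesive force.
# Function names and all numerical inputs are hypothetical, not from the study.

EPSILON_0 = 8.854e-12  # vacuum permittivity, F/m


def electrostatic_pressure(voltage: float, gap: float, rel_permittivity: float) -> float:
    """Attractive pressure (Pa) between two parallel charged surfaces.

    Uses P = eps0 * eps_r * E**2 / 2 with a uniform field E = V / d,
    ignoring fringing fields and the actual fibre geometry.
    """
    if gap <= 0:
        raise ValueError("gap must be positive")
    field = voltage / gap  # uniform field strength, V/m
    return 0.5 * EPSILON_0 * rel_permittivity * field**2


def adhesion_force(voltage: float, gap: float, rel_permittivity: float, area: float) -> float:
    """Estimated normal force (N): pressure multiplied by contact area (m^2)."""
    return electrostatic_pressure(voltage, gap, rel_permittivity) * area


if __name__ == "__main__":
    # Hypothetical inputs: 1 kV across a 100-micrometre layer (eps_r ~ 3),
    # acting over a 1 cm^2 contact patch.
    force = adhesion_force(voltage=1_000.0, gap=100e-6, rel_permittivity=3.0, area=1e-4)
    print(f"Estimated force: {force:.2e} N")
```

With these assumed inputs the estimate is roughly 0.13 N. Because the force scales with V², doubling the voltage quadruples the estimated attraction, which is why electro-adhesive devices typically run at high voltages.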

Vibrations help eliminate contaminants

Sun, Kim and colleagues found that these target objects generate an electrical response in the ionically conducting fibres as soon as a fibre touches them, which initiates electrostatic adhesion. They also discovered that they could make the fibres vibrate by applying an electric field between strand pairs. These vibrations, combined with the fibres’ hydrophobic coating, help to eliminate contaminants that would otherwise reduce adhesion forces. Indeed, after self-cleaning, the artificial webs recovered nearly 99% of their original adhesive force.

In a related article, Jonathan Rossiter, a roboticist at the University of Bristol, UK, and the head of the Soft Robotics group at the Bristol Robotics Laboratory, notes that flexible and stretchable ionically conducting materials could be used as substitutes for conventional conductors in future electroactive artificial muscles. Rossiter, who was not involved in the Seoul team’s work, suggests that such structures could come in handy when developing soft robotic wearable assistive devices for older people or people with disabilities.

The study’s lead author, Younghoon Lee, agrees, adding that the team’s approach could also apply to “existing robotics components based on electrostatics, such as electrostatic grippers, dielectric elastomer actuators and capacitive tactile sensors”. In addition, the materials’ ability to self-clean could be exploited in wall-climbing robots that use electro-adhesion, which are currently limited to clean, dust-free surfaces.

As a suggestion for future work, Rossiter notes that it would be “extremely interesting to develop the spider analogy further, potentially seeing how spiders could work with the ionic spider webs in their natural environments, providing new insights into biology.” For their part, the Seoul team aim to improve the robustness of their web fibres and may also seek to adapt them so that they can detect non-charged objects, too.

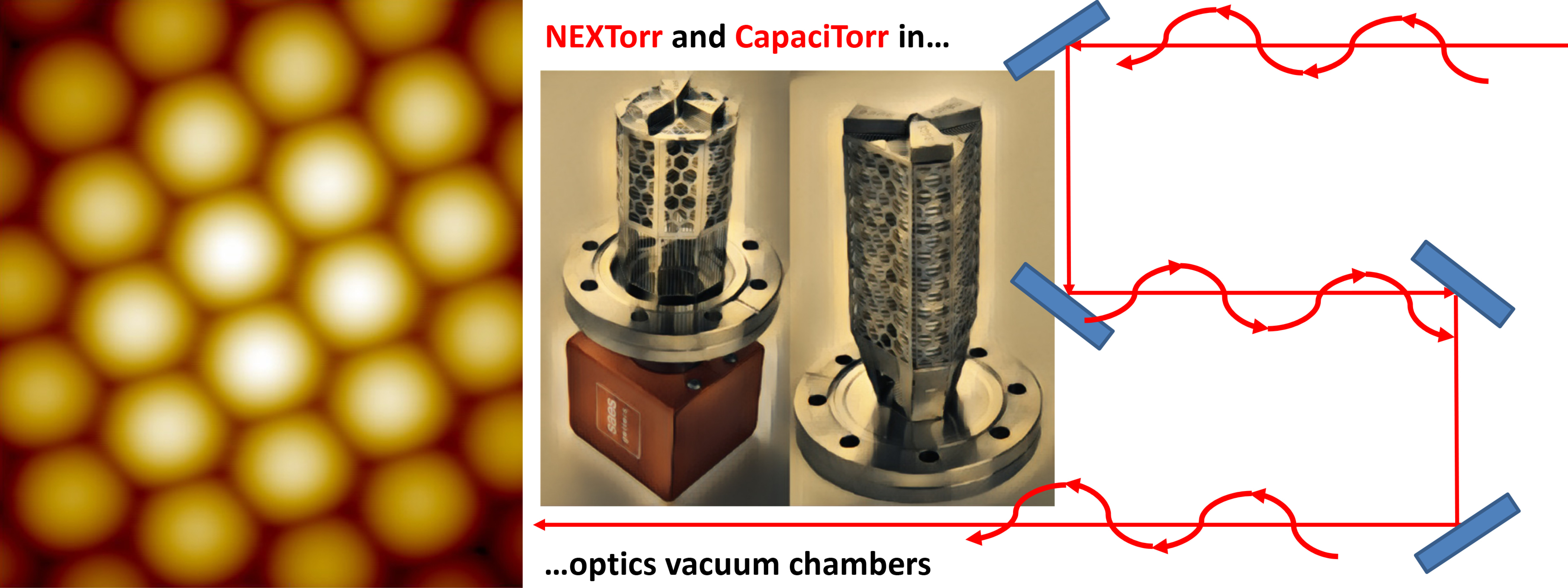

Atomic force microscopes (AFMs) are versatile tools for characterizing surfaces down to the subnanometre scale. Researchers wanting to, say, map out the optical antennas they’ve inscribed on a chip, or measure the quantum dots they’ve created, can image objects at resolutions down to the picometre level by scanning an AFM over the surface. Vacuum engineers and scientists have long known that even if a sample is clean and handled with ultra-high vacuum (UHV) standards, a layer of unwanted carbon will form on the material’s surface after it’s placed in a high-vacuum (HV) or UHV chamber. This also happens for materials in the optics vacuum chambers in particle accelerators and synchrotron X-ray beamlines.
