RSNA 2020, the annual meeting of the Radiological Society of North America, showcases the latest research advances and product developments in all areas of radiology. Here’s a selection of studies presented at this year’s all-virtual event, all of which demonstrate the increasingly prevalent role played by artificial intelligence (AI) techniques in diagnostic imaging applications.

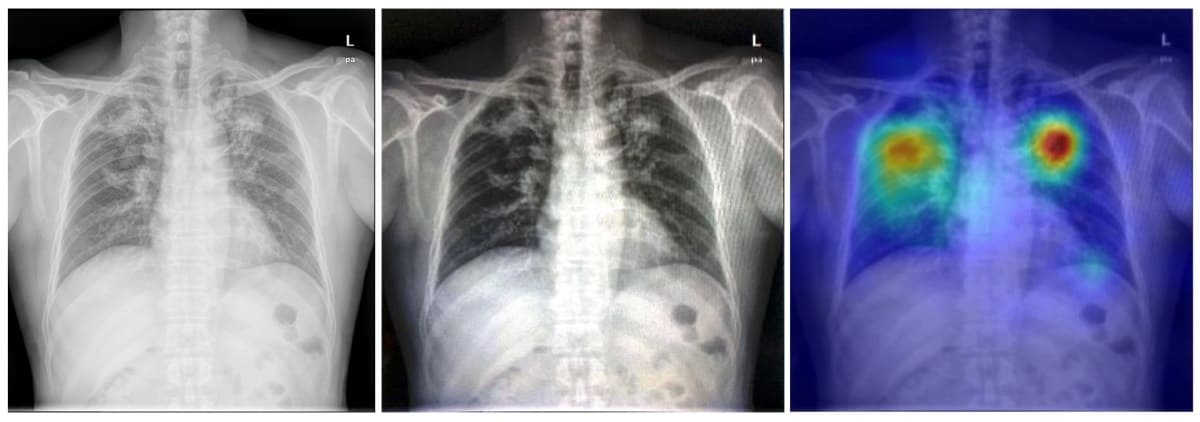

Deep-learning model helps detect TB

Early diagnosis of tuberculosis (TB) is crucial to enable effective treatments, but this can prove challenging for resource-poor countries with a shortage of radiologists. To address this obstacle, Po-Chih Kuo, from Massachusetts Institute of Technology, and colleagues have developed a deep-learning-based TB detection model. The model, called TBShoNet, analyses photographs of chest X-rays taken by a phone camera.

The researchers used three public datasets for model pre-training, transferring and evaluation. They pretrained the neural network on a database containing 250,044 chest X-rays with 14 pulmonary labels, which did not include TB. The model was then recalibrated for chest X-ray photographs by using simulation methods to augment the dataset. Finally, the team built TBShoNet by connecting the pretrained model to an additional 2-layer neural network trained on augmented chest X-ray images (50 TB; 80 normal).

To test the model’s performance, the researchers used 662 chest X-ray photographs (336 TB; 326 normal) taken by five different phones. TBShoNet demonstrated an AUC of 0.89 for TB detection. At the optimal cut-off, its sensitivity and specificity for TB classification were 81% and 84%, respectively.
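For readers unfamiliar with these metrics, the short Python sketch below shows how an AUC, a cut-off and the resulting sensitivity and specificity can be computed from a classifier's scores. It is an illustration only, not the team's evaluation code: the labels and scores are invented, the function names are hypothetical, and the "optimal" cut-off is assumed here to be the one that maximises Youden's J (sensitivity + specificity - 1), a common choice that the study summary does not confirm.

```python
# Illustrative only: toy labels and scores, not data from the TBShoNet study.

def sensitivity_specificity(labels, scores, cutoff):
    """Classify a case as positive when its score is >= cutoff."""
    tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= cutoff)
    fn = sum(1 for y, s in zip(labels, scores) if y == 1 and s < cutoff)
    tn = sum(1 for y, s in zip(labels, scores) if y == 0 and s < cutoff)
    fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= cutoff)
    return tp / (tp + fn), tn / (tn + fp)


def auc(labels, scores):
    """Chance that a random positive case outscores a random negative case
    (ties count as half), which equals the area under the ROC curve."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def best_cutoff(labels, scores):
    """Assumed criterion: maximise Youden's J = sensitivity + specificity - 1."""
    def youden(cutoff):
        sens, spec = sensitivity_specificity(labels, scores, cutoff)
        return sens + spec - 1
    return max(sorted(set(scores)), key=youden)


# 1 = TB, 0 = normal (made-up example values)
labels = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.4, 0.2, 0.1]

cutoff = best_cutoff(labels, scores)
sens, spec = sensitivity_specificity(labels, scores, cutoff)
print(f"AUC = {auc(labels, scores):.3f}")
print(f"cut-off = {cutoff}, sensitivity = {sens:.2f}, specificity = {spec:.2f}")
```

Moving the cut-off trades sensitivity against specificity, which is why a single pair of values is always reported alongside the threshold-independent AUC.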

The team conclude that TBShoNet provides a method to develop an algorithm that can be deployed on phones to assist healthcare providers in areas where radiologists and high-resolution digital images are unavailable. “We need to extend the opportunities around medical artificial intelligence to resource limited settings,” says Kuo.

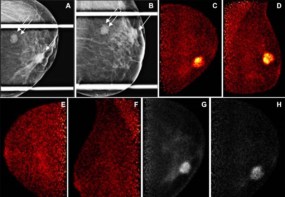

Algorithm predicts breast cancer risk

Researchers at Massachusetts General Hospital (MGH) have developed a deep-learning algorithm that predicts a patient’s risk of developing breast cancer using mammographic image biomarkers alone. The new model can predict risk with greater accuracy than traditional risk-assessment tools.

Existing risk-assessment models analyse patient data (such as family history, prior breast biopsies, and hormonal and reproductive history) plus a single feature from the screening mammogram: breast density. But every mammogram contains unique imaging biomarkers that are highly predictive of future cancer risk. The new algorithm is able to use all of these subtle imaging biomarkers to predict a woman’s future risk for breast cancer.

“Traditional risk-assessment models do not leverage the level of detail that is contained within a mammogram,” says Leslie Lamb, breast radiologist at MGH. “Even the best existing traditional risk models may separate sub-groups of patients but are not as precise on the individual level.”

The team developed the algorithm using breast cancer screening data from a population including women with a history of breast cancer, implants or prior biopsies. The dataset included 245,753 consecutive 2D digital bilateral screening mammograms performed in 80,818 patients. From these, 210,819 exams were used for training, 25,644 for testing and 9290 for validation.
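As a quick sanity check on these figures, the three subsets add up to the full dataset, with roughly 86% of exams used for training, 10% for testing and 4% for validation, and about three exams per patient on average. The snippet below simply reproduces that arithmetic; the variable and function names are illustrative.

```python
# Exam counts as reported for the MGH dataset.
splits = {"training": 210_819, "testing": 25_644, "validation": 9_290}
total_exams = 245_753
patients = 80_818


def split_fractions(counts, total):
    """Return each subset's share of the full dataset."""
    return {name: count / total for name, count in counts.items()}


# The three subsets should account for every exam.
assert sum(splits.values()) == total_exams

for name, fraction in split_fractions(splits, total_exams).items():
    print(f"{name}: {fraction:.1%}")
print(f"exams per patient: {total_exams / patients:.2f}")
```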

The researchers compared the accuracy of their deep-learning image-only model to that of a commercial risk-assessment model (based on clinical history and breast density) in predicting future breast cancer within five years of the mammogram. The deep-learning model achieved a predictive rate of 0.71, significantly outperforming the traditional risk model’s rate of 0.61.

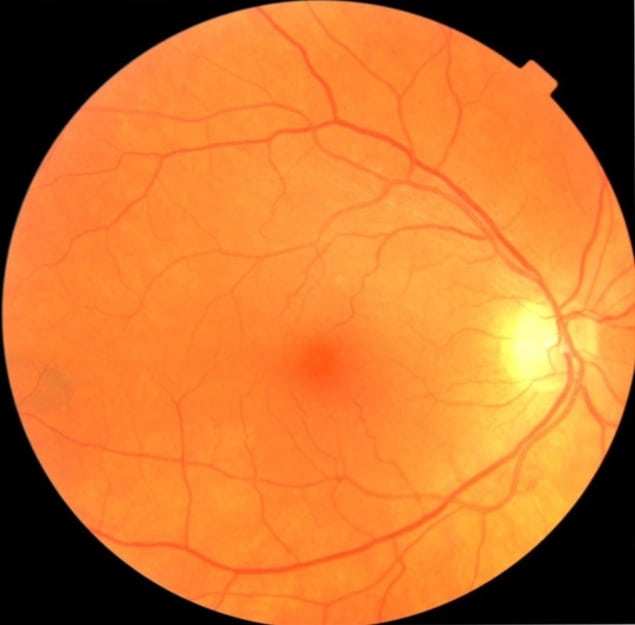

Eye exam could provide early diagnosis of Parkinson’s disease

A simple non-invasive eye exam combined with machine-learning networks could provide early diagnosis of Parkinson’s disease, according to research from a team at the University of Florida.

Parkinson’s disease, a progressive disorder of the central nervous system, is difficult to diagnose at an early stage. Patients usually only develop symptoms – such as tremors, muscle stiffness and impaired balance – after the disease has progressed and significant injury to dopamine brain neurons has occurred.

The degradation of these nerve cells leads to thinning of the retina walls and retinal microvasculature. With this in mind, the researchers are using machine learning to analyse images of the fundus (the back surface of the eye opposite the lens) to detect early indicators of Parkinson’s disease. They note that these fundus images can be taken using basic equipment commonly available in eye clinics, or even captured by a smartphone with a special lens.

Using datasets of fundus images recorded from patients with Parkinson’s disease and age- and gender-matched controls, the researchers trained support vector machine (SVM) classifying networks to detect signs of disease on the images. They employed a machine-learning network called U-Net to select blood vessels from the fundus image, and used the resulting vessel maps as inputs to the SVM classifier. The team showed that these machine-learning networks could classify Parkinson’s disease based on retina vasculature, with the key features being smaller blood vessels.
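The two-stage structure of this pipeline (segment the vessels, then classify the vessel map) can be sketched in plain Python. This is a toy illustration under loud assumptions, not the Florida team's code: a simple intensity threshold stands in for the U-Net, the 2×2 "images" and their labels are invented, and the classifier is a basic linear SVM trained by batch subgradient descent on the regularised hinge loss. All function names and parameter values are hypothetical.

```python
# Toy sketch: thresholding replaces U-Net, and all data are made up.

def segment_vessels(image, threshold=0.5):
    """Stand-in for U-Net: mark pixels darker than `threshold` as vessel (1)."""
    return [[1 if px < threshold else 0 for px in row] for row in image]


def flatten(vessel_map):
    """Turn a 2D vessel map into a feature vector for the classifier."""
    return [float(px) for row in vessel_map for px in row]


def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Batch subgradient descent on the L2-regularised hinge loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the regulariser
        grad_b = 0.0
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:  # only margin violators contribute to the hinge term
                for j in range(d):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    """Return +1 or -1 depending on the side of the separating hyperplane."""
    score = sum(wj * xj for wj, xj in zip(w, x)) + b
    return 1 if score >= 0 else -1


# Invented 2x2 greyscale "fundus images"; +1 = Parkinson's, -1 = control.
images = [
    [[0.1, 0.2], [0.3, 0.9]],  # control
    [[0.2, 0.1], [0.8, 0.3]],  # control
    [[0.1, 0.9], [0.8, 0.7]],  # Parkinson's
    [[0.9, 0.8], [0.7, 0.2]],  # Parkinson's
]
y = [-1, -1, 1, 1]

X = [flatten(segment_vessels(img)) for img in images]
w, b = train_linear_svm(X, y)
preds = [predict(w, b, x) for x in X]
print(preds)
```

In a real system the segmentation step would be a trained U-Net and the classifier would be fitted and evaluated on separate patient groups; the sketch only shows how the output of one stage becomes the input of the next.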

“The single most important finding of this study was that a brain disease was diagnosed with a basic picture of the eye. You can have it done in less than a minute, and the cost of the equipment is much less than a CT or MRI machine,” says study lead author Maximillian Diaz. “If we can make this a yearly screening, then the hope is that we can catch more cases sooner, which can help us better understand the disease and find a cure or a way to slow the progression.”