Quantum computers – devices that use the quantum mechanical superposition principle to process information – are being developed, built and studied in organizations ranging from universities and national laboratories to start-ups and large corporations such as Google, IBM, Intel and Microsoft. These devices are of great interest because they could solve certain computationally “hard” problems, such as searching large unordered lists or factorizing large numbers, much faster than any classical computer. This is because the quantum mechanical superposition principle is akin to exponential computational parallelism – in other words, it makes it possible to explore multiple computational paths at once.

Because nature is fundamentally quantum mechanical, quantum computers also have the potential to solve problems concerning the structure and dynamics of solids, molecules, atoms, atomic nuclei or subatomic particles. Researchers have made great progress in solving such problems on classical computers, but the required computational effort typically increases exponentially as the number of particles rises. Thus, it is no surprise that scientists in these fields view quantum computers with a lot of interest.

Many different technologies are being explored as the basis for building quantum processors. These include superconductors, ion traps, optical devices, diamonds with nitrogen-vacancy centres and ultracold neutral atoms – to name just a few. The challenge in all cases is to keep quantum states coherent for long enough to execute algorithms (which requires strictly isolating the quantum processor from external perturbations or noise) while maintaining the ability to manipulate these states in a controlled way (which inevitably requires introducing couplings between the fragile quantum system and the noisy environment).

Recently, universal quantum processors with more than 50 quantum bits, or qubits, have been demonstrated – an exciting milestone because, even at this relatively low level of complexity, quantum processors are becoming too large for their operations to be simulated on all but the most powerful classical supercomputers. The usefulness of these 50-qubit machines for solving “hard” scientific problems is currently limited by the number of quantum-logic operations that can be performed before decoherence sets in (a few tens), and much R&D effort is focused on increasing coherence times. Nevertheless, some problems can already be solved on such devices. The question is, how?
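A rough sense of why about 50 qubits marks this threshold comes from counting memory. The sketch below assumes a brute-force simulation that stores every complex amplitude of the state vector at double precision (16 bytes each); the function name is illustrative, and cleverer simulation methods can need less.

```python
def statevector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory needed to store all 2**n complex amplitudes of an n-qubit state.

    Assumes one double-precision complex number (16 bytes) per amplitude.
    """
    return (2 ** n_qubits) * bytes_per_amplitude


for n in (30, 40, 50):
    gib = statevector_bytes(n) / 2 ** 30  # convert bytes to GiB
    print(f"{n} qubits: {gib:,.0f} GiB")
```

Under these assumptions a 30-qubit state fits in 16 GiB, whereas a 50-qubit state needs 16 PiB, and every additional qubit doubles the requirement.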

First, find a computer

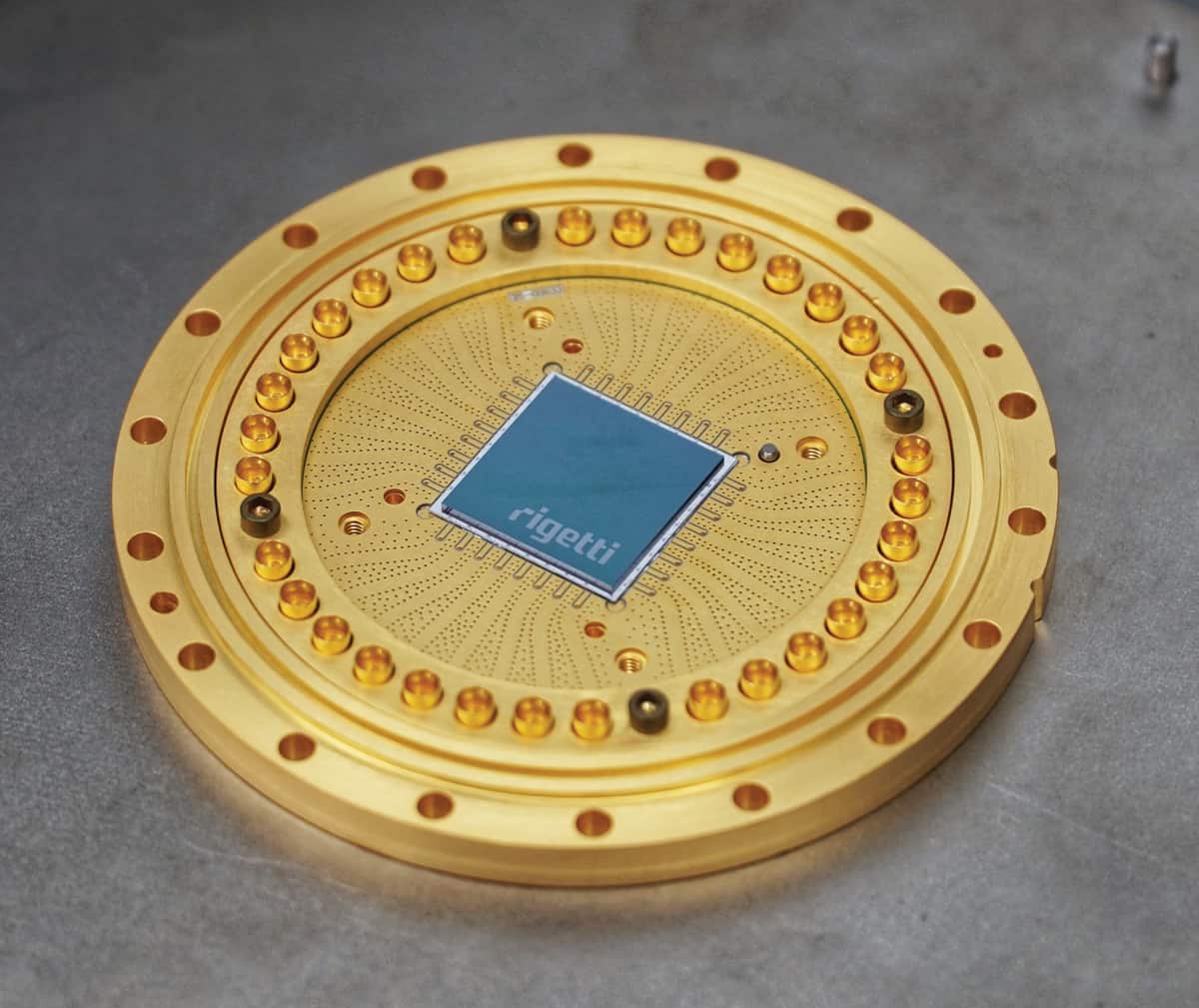

Within the research sector, scientists have taken the first steps towards using quantum devices to solve problems in chemistry, materials science, nuclear physics and particle physics. In most cases, these problems have been studied by collaborations between scientists and the developers, owners and/or operators of the devices. However, a combination of publicly available software (such as PyQuil, QISKit and XACC) to program quantum computing processors, coupled with improved access to the devices themselves, is beginning to open the field to a much broader array of interested parties. The companies IBM and Rigetti, for instance, allow users access to their quantum computers via the IBM Q Experience and the Rigetti Forest API, respectively. These are cloud-based services: users can test and develop their programs on simulators, and run them on the quantum devices, without ever having to leave their offices.

As an example, we recently used the IBM and Rigetti cloud services to compute the binding energy of the deuteron – the bound state of a proton and a neutron that forms the centre of a heavy hydrogen atom. The quantum devices we used consisted of about 20 superconducting qubits, or transmons. The fidelity of their quantum operations on single qubits exceeds 99%, and their two-qubit fidelity is around 95%. Each qubit is typically connected to 3–5 neighbours. It is expected that these specifications (number of qubits, fidelities and connectivity) will improve with time, but the near future of universal quantum computing is likely to be based on similar parameters – what John Preskill of the California Institute of Technology calls “noisy intermediate-scale quantum” (NISQ) technology.

The deuteron is the simplest atomic nucleus, and its properties are well known, making it a good test case for quantum computing. Also, because qubits are two-state quantum-mechanical systems (conveniently thought of as a “spin up” and a “spin down” state), there is a natural mapping between qubits and fermions – that is, particles with half-integer spin that obey the Pauli exclusion principle – such as the proton and neutron that make up a deuteron. Conceptually, each qubit represents an orbital (or a discretized position) that a fermion can occupy, and spin up and spin down correspond to zero or one fermion occupying that orbital, respectively. Based on this Jordan-Wigner mapping, a quantum chip can simulate as many orbitals as it has qubits, and hence up to that many fermions.
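The bookkeeping behind this mapping can be sketched in a few lines. In the illustration below (the function names are ours, and a bit value of 1 is taken to mean "orbital occupied"), a creation operator fills orbital j and picks up a sign that counts the occupied orbitals before it, which is what gives the qubits fermionic statistics.

```python
def create(j, occ):
    """Apply the creation operator for orbital j to a basis state.

    `occ` is a tuple of 0/1 occupations, one entry per qubit.
    Returns (sign, new_occ), or None if orbital j is already occupied
    (Pauli exclusion).
    """
    if occ[j] == 1:
        return None
    # Jordan-Wigner string: a factor of -1 for each occupied orbital before j
    sign = (-1) ** sum(occ[:j])
    return sign, occ[:j] + (1,) + occ[j + 1:]


def create_two(i, j, occ):
    """Create a fermion in orbital j first, then one in orbital i."""
    first = create(j, occ)
    if first is None:
        return None
    sign_j, intermediate = first
    second = create(i, intermediate)
    if second is None:
        return None
    sign_i, final = second
    return sign_i * sign_j, final


vacuum = (0, 0, 0)
print(create_two(0, 1, vacuum))  # fill orbital 1, then orbital 0
print(create_two(1, 0, vacuum))  # opposite order: same state, opposite sign
print(create_two(0, 0, vacuum))  # two fermions in one orbital is forbidden
```

Swapping the order in which two fermions are created flips the overall sign, and double occupation returns nothing, as the exclusion principle requires.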

Another helpful feature of the quantum computation of the deuteron binding energy is that the calculation itself can be simplified. The translational invariance of the problem reduces the bound-state calculation of the proton and the neutron to a single-particle problem that depends only on the relative distance between the particles. Furthermore, the deuteron’s Hamiltonian becomes simpler in the limit of long wavelengths, as details of the complicated strong interaction between protons and neutrons are not resolved at low energies. These simplifications allowed us to perform our quantum computation using only two and three qubits.

Then, do your calculation

We prepared a family of entangled quantum states on the quantum processor, and calculated the deuteron’s energy on the quantum chip. The state preparation consists of a unitary operation, decomposed into a sequence of single- and two-qubit quantum logical operations, acting on an initial state. With an eye towards the relatively low two-qubit fidelities, we employed a minimum number of two-qubit CNOT (controlled-not) operations for this task. To compute the deuteron’s energy, we measured expectation values of Pauli operators in the Hamiltonian, projecting the qubit states onto classical bits. This is a stochastic process, and we collected statistics from up to 10,000 measurements for each prepared quantum state. This is about the maximum number of measurements that users can make through cloud access, but it was sufficient for us because we were limited by noise and not by statistics. More complicated physical systems employing a larger number of qubits, or demanding a higher precision, could, however, require more measurements.
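The statistical side of such a measurement is easy to mimic classically. The sketch below is a toy model rather than our analysis code: it assumes an ideal, noise-free qubit that returns the bit 1 with a fixed, made-up probability, and it estimates the expectation value of the Pauli Z operator from a finite number of shots.

```python
import math
import random


def sample_z_expectation(p_one, shots, rng):
    """Estimate <Z> from `shots` simulated projective measurements.

    Each shot yields bit 1 with probability `p_one` (a hypothetical value);
    Z has eigenvalue +1 for bit 0 and -1 for bit 1.
    Returns the sample mean and its standard error.
    """
    ones = sum(1 for _ in range(shots) if rng.random() < p_one)
    mean = 1.0 - 2.0 * ones / shots
    std_error = math.sqrt(max(0.0, 1.0 - mean * mean) / shots)
    return mean, std_error


rng = random.Random(2018)  # fixed seed so the run is reproducible
p_one = 0.3                # made-up probability of measuring 1
print(f"exact <Z> = {1.0 - 2.0 * p_one:.3f}")
for shots in (100, 10_000):
    mean, err = sample_z_expectation(p_one, shots, rng)
    print(f"{shots:>6} shots: <Z> = {mean:.3f} +/- {err:.3f}")
```

The standard error shrinks as one over the square root of the number of shots, so 10,000 shots pin down a single expectation value to about 0.01 at best. On real hardware, device noise adds a systematic shift that more shots cannot remove.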

To compute the binding energy of the deuteron, we had to find the minimum energy of all the quantum states we prepared. This minimization was done with a classical computer, using the results from the quantum chip as input. We used two versions of the deuteron’s Hamiltonian, one for two and one for three qubits. The two-qubit calculation involved only a single CNOT operation and, as a consequence, did not suffer from significant noise.
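The loop between quantum chip and classical minimizer can be imitated on a laptop. The sketch below rests on several assumptions that are not spelled out in this article: the Pauli coefficients of the two-qubit Hamiltonian (in MeV) should be checked against the published calculation, the circuit is one possible single-CNOT state preparation rather than necessarily the one we ran, the "chip" is an exact noise-free simulation, and a plain grid scan stands in for the classical optimizer.

```python
import math

# Assumed coefficients (MeV) of H = C_I + C_Z0*Z0 + C_Z1*Z1 + C_XY*(X0X1 + Y0Y1)
C_I, C_Z0, C_Z1, C_XY = 5.906709, 0.218291, -6.125, -2.143304


def apply_x(state, q):
    """Flip qubit q. `state` maps bit tuples (q0, q1) to real amplitudes."""
    out = {}
    for bits, amp in state.items():
        flipped = list(bits)
        flipped[q] ^= 1
        out[tuple(flipped)] = amp
    return out


def apply_ry(state, q, theta):
    """Rotate qubit q: |0> -> c|0> + s|1> and |1> -> -s|0> + c|1>."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    out = {}
    for bits, amp in state.items():
        coeffs = (c, s) if bits[q] == 0 else (-s, c)
        for value, coeff in enumerate(coeffs):
            new_bits = list(bits)
            new_bits[q] = value
            key = tuple(new_bits)
            out[key] = out.get(key, 0.0) + coeff * amp
    return out


def apply_cnot(state, control, target):
    """Flip the target qubit whenever the control qubit is 1."""
    out = {}
    for bits, amp in state.items():
        new_bits = list(bits)
        if new_bits[control] == 1:
            new_bits[target] ^= 1
        out[tuple(new_bits)] = amp
    return out


def prepare_state(theta):
    """One-parameter entangled state built with a single CNOT."""
    state = {(0, 0): 1.0}
    state = apply_x(state, 0)
    state = apply_ry(state, 1, theta)
    return apply_cnot(state, control=1, target=0)


def energy(theta):
    """Energy assembled from the Pauli expectation values (real amplitudes)."""
    state = prepare_state(theta)
    z0 = z1 = xx = yy = 0.0
    for (b0, b1), amp in state.items():
        partner = state.get((1 - b0, 1 - b1), 0.0)  # both bits flipped
        z0 += amp * amp * (1 - 2 * b0)
        z1 += amp * amp * (1 - 2 * b1)
        xx += amp * partner
        yy += -((-1) ** (b0 + b1)) * amp * partner
    return C_I + C_Z0 * z0 + C_Z1 * z1 + C_XY * (xx + yy)


# Classical outer loop: scan the angle and keep the lowest energy
thetas = [2 * math.pi * k / 2000 - math.pi for k in range(2001)]
best_theta = min(thetas, key=energy)
print(f"theta = {best_theta:.3f} rad, E = {energy(best_theta):.3f} MeV")
```

With the assumed coefficients the scan bottoms out at roughly -1.75 MeV. On real hardware each call to `energy` would be replaced by thousands of measurements per Pauli term, which is why keeping the number of variational parameters small matters.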

However, the three-qubit calculation was considerably affected by noise, because the quantum circuit involved three CNOT operations. To understand the systematic effects of the noise, we inserted extra pairs of CNOT operations – equivalent to identity operators in the absence of noise – into the quantum circuits. This further increased the noise level and allowed us to measure and subtract the noise in the energy calculations. As a result, our efforts yielded the first quantum computation of an atomic nucleus, performed via the cloud.
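One common way to turn this idea into a number is to treat the count of CNOTs per original CNOT (1, 3, 5 and so on) as a noise scale and to extrapolate the measured energies back to zero. The sketch below assumes the energy shifts linearly with that scale and uses synthetic data generated from a known straight line; none of the numbers are measured values.

```python
def cnot(bits, control=0, target=1):
    """Classical action of a CNOT on a pair of bits."""
    flipped = list(bits)
    if flipped[control] == 1:
        flipped[target] ^= 1
    return tuple(flipped)


# A pair of CNOTs acts as the identity when there is no noise
basis = [(a, b) for a in (0, 1) for b in (0, 1)]
pair_is_identity = all(cnot(cnot(bits)) == bits for bits in basis)


def extrapolate_to_zero_noise(noise_scales, energies):
    """Least-squares straight line through (scale, energy) points.

    Returns (intercept, slope); the intercept is the zero-noise estimate.
    """
    n = len(noise_scales)
    mean_x = sum(noise_scales) / n
    mean_y = sum(energies) / n
    sxx = sum((x - mean_x) ** 2 for x in noise_scales)
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(noise_scales, energies))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Synthetic example: a "true" value of -2.0 plus 0.3 per unit of noise scale
noise_scales = [1, 3, 5]  # each CNOT replaced by 1, 3 or 5 CNOTs
energies = [-2.0 + 0.3 * r for r in noise_scales]
estimate, slope = extrapolate_to_zero_noise(noise_scales, energies)
print(f"CNOT pair is identity: {pair_is_identity}")
print(f"zero-noise estimate: {estimate:.3f} (slope {slope:.3f} per scale unit)")
```

The linear model is the simplest possible choice. If the noise is strong enough that the energy no longer changes linearly with the number of CNOTs, the extrapolated value inherits that error.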

What next?

For our calculation, we used quantum processors alongside classical computers. However, quantum computers hold great promise for standalone applications as well. The dynamics of interacting fermions, for instance, is generated by a unitary time-evolution operator and can therefore be naturally implemented by unitary gate operations on a quantum chip.
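The simplest illustration of this point is a single qubit. The sketch below assumes a toy Hamiltonian H = ωX (in units where ħ = 1), chosen only because its time-evolution operator has a closed form, exp(-iHt) = cos(ωt) I - i sin(ωt) X, which is exactly a rotation gate about the x axis. It is not the Hamiltonian of any system discussed here.

```python
import math


def evolve(state, omega, t):
    """Apply U(t) = cos(omega*t) I - i sin(omega*t) X to a one-qubit state.

    `state` is a pair of complex amplitudes (a0, a1).
    """
    c, s = math.cos(omega * t), math.sin(omega * t)
    a0, a1 = state
    return (c * a0 - 1j * s * a1, -1j * s * a0 + c * a1)


def probability_of_one(state):
    """Probability of measuring the qubit in state 1."""
    return abs(state[1]) ** 2


omega = 1.0             # toy coupling strength (assumed)
initial = (1.0, 0.0)    # start in state 0
for step in range(5):
    t = step * math.pi / 8
    final = evolve(initial, omega, t)
    norm = abs(final[0]) ** 2 + abs(final[1]) ** 2
    print(f"t = {t:.3f}: P(1) = {probability_of_one(final):.3f}, norm = {norm:.3f}")
```

Because the operation is unitary, the norm stays at one for every time step, and evolving for t1 and then t2 is the same as evolving once for t1 + t2. For many interacting fermions the evolution operator has to be broken into a sequence of such elementary gates, and the length of that sequence is what NISQ hardware limits.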

In a separate experiment, we used the IBM quantum cloud to simulate the Schwinger model – a prototypical quantum-field theory that describes the dynamics of electrons and positrons coupled via the electromagnetic field. Our work follows that carried out by Esteban Martinez and collaborators at the University of Innsbruck, who explored the dynamics of the Schwinger model in 2016 using a highly optimized trapped-ion system as a quantum device, which permitted them to apply hundreds(!) of quantum operations. To make our simulation possible via cloud access to a NISQ device, we exploited the model’s symmetries to reduce the complexity of our quantum circuit. We then applied the circuit to an initial ground state, generating the unitary time evolution, and measured the electron-positron content as a function of time using only two qubits.

The publicly available Python APIs from IBM and Rigetti made our cloud quantum-computing experience quite easy. They allowed us to test our programs on simulators (where imperfections such as noise can be avoided) and to run the calculations on actual quantum hardware without needing to know many details about the hardware itself. However, while the software decomposed our state-preparation unitary operation into a sequence of elementary quantum-logic operations, the decomposition was not optimized for the hardware. This forced us to tinker with the quantum circuits to minimize the number of two-qubit operations. Looking into the future, and considering more complex systems, it would be great if this type of decomposition optimization could be automated.

For most of its history, quantum computing has only been experimentally available to a select few researchers with the know-how to build and operate such devices. Cloud quantum computing is set to change that. We have found it a liberating experience – a great equalizer that has the potential to bring quantum computing to many, just as the devices themselves are beginning to prove their worth.