For as long as computers have existed, physicists have used them as tools to understand, predict and model the natural world. Computing experts, for their part, have used advances in physics to develop machines that are faster, smarter and more ubiquitous than ever. This collection celebrates the latest phase in this symbiotic relationship, as the rise of artificial intelligence and quantum computing opens up new possibilities in basic and applied research.

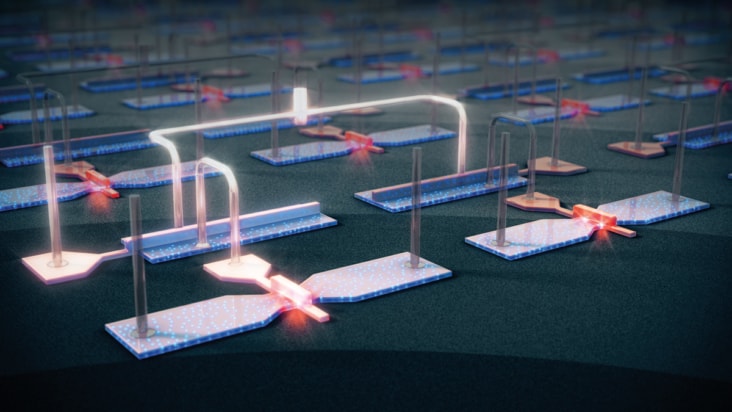

As quantum computing matures, will decades of engineering give silicon qubits an edge? Fernando Gonzalez-Zalba, Tsung-Yeh Yang and Alessandro Rossi think so

Physicist and Raspberry Pi inventor Eben Upton explains how simple computers are becoming integral to the Internet of Things

Physics World journalists discuss the week’s highlights

James McKenzie explains how Tim Berners-Lee's invention of the World Wide Web at CERN has revolutionized how we trade

Tim Berners-Lee predicts the future of online publishing in an article he wrote for Physics World in 1992

Jess Wade illustrates the history of the World Wide Web, from the technology that enabled it to the staple it is today

Emerging technologies shaping our connected world

Fifth episode in mini-series revisits the birth of the Web and the challenges it now faces

Computing is transforming scientific research, but are researchers and software code adapting at the same rate? Benjamin Skuse finds out

MaterialsGalaxy platform will reduce unhelpful “silos” within the field, say scientists

Read article: New project takes aim at theory-experiment gap in materials data

CGI pioneer Pat Hanrahan is our podcast guest

Read article: Oscar-winning computer scientist on the physics of computer animation

Discovery could improve the performance of hovering robots and even artificial pollinators

Read article: Simple feedback mechanism keeps flapping flyers stable when hovering

New calculation of viral spread suggests that rapid elimination of SARS-CoV-2-like viruses is scientifically feasible, though social challenges remain

Read article: Staying the course with lockdowns could end future pandemics in months

Simulations of improved technique generate fields as strong as those found near neutron stars

Read article: Laser-driven implosion could produce megatesla magnetic fields

Otto engine design uses Bose gas as a working fluid and exchanges particles as well as heat with reservoirs

Read article: Quantum thermochemical engine could achieve high power with near-maximum efficiency

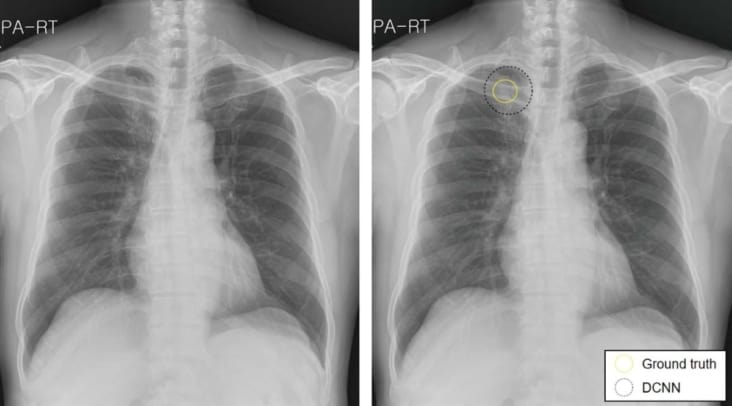

Introducing artificial intelligence into the clinical workflow helps radiologists detect lung cancer lesions on chest X-rays and dismiss false-positives

Algorithms help materials scientists recognize patterns in structure-function relationships

A deep learning algorithm detects brain haemorrhages on head CT scans with comparable performance to highly trained radiologists

An artificial intelligence model can identify patients with intermittent atrial fibrillation from scans performed during normal heart rhythm

Proof-of-concept demonstration done using two superconducting qubits

An image-based artificial intelligence framework predicts a personalized radiation dose that minimizes the risk of treatment failure

A machine learning algorithm can read electroencephalograms as well as clinicians

Mechanism could pave the way for more robust quantum computation, but questions remain over scalability

Read article: Read-out of Majorana qubits reveals their hidden nature

Technique lays the groundwork for neutral-atom quantum computers with more than 100,000 qubits, say physicists

Read article: Metasurfaces create super-sized neutral atom arrays for quantum computing

Australian spin-out Silicon Quantum Computing makes the case with a modality-leading 11-qubit processor

Reducing atom loss and re-using already-measured atoms enables more complex quantum computations

Read article: Qubit ‘recycling’ gives neutral-atom quantum computing a boost

Spin-orbit interaction adjustment produces "best of both worlds" scenario

Read article: Can fast qubits also be robust?

A qubit encoded in a fluorescent protein could act as a sensor to measure quantum properties inside living systems

Read article: Protein qubit can be used as a quantum biosensor
